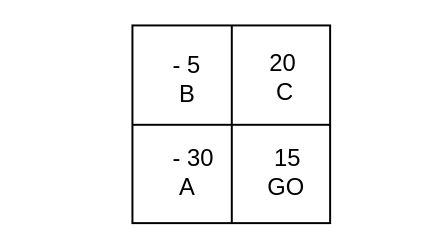

A highly simplified game of “Monopoly” is played on a board with four squares as shown in Figure. You start at GO. You roll a die and move clockwise around the board a number of squares equal to the number that turns up on the die. You collect or pay an amount indicated on the square on which you land. You then roll the die again

and move around the board in the same manner from your last position. Using the result of Exercise, estimate the amount you should expect to win in the long run playing this version of Monopoly.

Show that if [math]\mat P[/math] is the transition matrix of a regular Markov chain, and [math]\mat W[/math] is the matrix each of whose rows is the fixed probability vector corresponding to [math]\mat {P}[/math], then [math]\mat {P}\mat {W} = \mat {W}[/math], and [math]\mat{W}^k = \mat {W}[/math] for all positive integers [math]k[/math].

Assume that an ergodic Markov chain has states [math]s_1,s_2, \ldots,s_k[/math]. Let [math]S^{(n)}_j[/math] denote the number of times that the chain is in state [math]s_j[/math] in the first [math]n[/math] steps. Let [math]\mat{w}[/math] denote the fixed probability row vector for this chain. Show that, regardless of the starting state, the expected value of [math]S^{(n)}_j[/math],

divided by [math]n[/math], tends to [math]w_j[/math] as [math]n \rightarrow \infty[/math]. Hint: If the chain starts in state [math]s_i[/math], then the expected value of [math]S^{(n)}_j[/math] is given by the expression

In the course of a walk with Snell along Minnehaha Avenue in Minneapolis in the fall of 1983, Peter Doyle[Notes 1] suggested the following explanation for the constancy of Kemeny's constant (see Exercise). Choose a target state according to the fixed vector [math]\mat{w}[/math]. Start from state [math]i[/math] and wait until the time [math]T[/math] that the target state occurs for the first time. Let [math]K_i[/math] be the expected value

of [math]T[/math]. Observe that

and hence

By the maximum principle, [math]K_i[/math] is a constant. Should Peter have been given the prize?

Notes