guide:87cf36f969: Difference between revisions

No edit summary |

mNo edit summary |

||

| (6 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

<div class="d-none"><math> | |||

\newcommand{\NA}{{\rm NA}} | |||

\newcommand{\mat}[1]{{\bf#1}} | |||

\newcommand{\exref}[1]{\ref{##1}} | |||

\newcommand{\secstoprocess}{\all} | |||

\newcommand{\NA}{{\rm NA}} | |||

\newcommand{\mathds}{\mathbb}</math></div> | |||

The subject of Markov chains is best studied by considering special types of | |||

Markov chains. The first type that we shall study is called an ''absorbing | |||

Markov chain.'' | |||

{{defncard|label=|id=|A state <math>s_i</math> of a Markov chain is called ''absorbing'' if it is impossible | |||

to leave | |||

it (i.e., | |||

<math>p_{ii} = 1</math>). A Markov chain is ''absorbing'' if it has at least one | |||

absorbing | |||

state, and if from every state it is possible to go to an absorbing state (not | |||

necessarily | |||

in one step).}} | |||

{{defncard|label=|id=|In an absorbing Markov chain, a state which is not absorbing is | |||

called ''transient.''}} | |||

===Drunkard's Walk=== | |||

<span id="exam 11.2.1"/> | |||

'''Example''' | |||

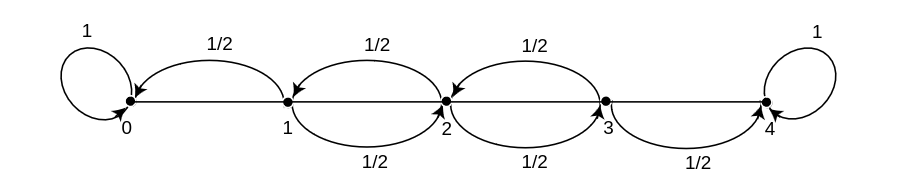

A man walks along a four-block stretch of Park Avenue (see [[#fig | |||

11.3|Figure]]). If he is | |||

at corner 1, 2, or 3, then he walks to the left or right with equal | |||

probability. | |||

He continues until he reaches | |||

corner 4, which is a bar, or corner 0, which is his home. If he | |||

reaches either home or the bar, he stays there. | |||

We form a Markov chain with states 0, 1, 2, 3, and 4. States 0 and 4 are | |||

absorbing states. The transition matrix is then | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="width:40px;text-align:center;display:inline-block;margin-left:5px;"><math>0</math></span><span style="width:35px;;text-align:center;display:inline-block;"><math>1</math></span> <span style="width:35px;;text-align:center;display:inline-block;"><math>2</math></span> <span style="width:35px;text-align:center;display:inline-block;"><math>3</math></span> <span style="width:35px;;text-align:center;display:inline-block;"><math>4</math></span> | |||

|- | |||

| <math>\mat{P} =\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

0 \\ | |||

1\\ | |||

2\\ | |||

3\\ | |||

4 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1 & 0 & 0 & 0 & 0 \\ | |||

1/2 & 0 & 1/2 & 0 & 0 \\ | |||

0 & 1/2 & 0 & 1/2 & 0 \\ | |||

0 & 0 & 1/2 & 0 & 1/2 \\ | |||

0 & 0 & 0 & 0 & 1 \\ | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

The states 1, 2, and 3 are transient states, and from any of these | |||

it is possible to reach the absorbing states 0 and 4. Hence the chain is an | |||

absorbing chain. When a process reaches an absorbing state, we shall say that | |||

it is ''absorbed''. | |||

The most obvious question that can be asked about such a chain is: What is the | |||

probability that the process will eventually reach an absorbing state? | |||

Other interesting questions include: (a) What is the probability that the | |||

process will | |||

end up in a given absorbing state? (b) On the average, how long will it take | |||

for the | |||

process to be absorbed? (c) On the average, how many times will the process be | |||

in each | |||

transient state? The answers to all these questions depend, in general, on the | |||

state from which the process starts as well as the transition probabilities. | |||

<div id="fig 11.3" class="d-flex justify-content-center"> | |||

[[File:guide_e6d15_PSfig11-3.png | 400px | thumb | Drunkard's walk. ]] | |||

</div> | |||

===Canonical Form=== | |||

Consider an arbitrary absorbing Markov chain. Renumber the states so that the | |||

transient states come first. If there are <math>r</math> absorbing states and <math>t</math> | |||

transient states, the transition matrix will have the following ''canonical | |||

form'' | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:10px;margin-left:5px;"><math>\hbox{TR.}</math></span><span style="text-align:center;display:inline-block;margin-right:10px;"><math>\hbox{ABS.}</math></span> | |||

|- | |||

| <math>\mat{P} =</math> | |||

| style= "padding:0px" |<math>\begin{array}{cc} | |||

\hbox{TR.}\strut \\ | |||

\hbox{ABS.}\strut | |||

\end{array}</math> || <math>\left(\begin{array}{c|c} | |||

\mat{Q} &\mat{R} \\ | |||

\hline | |||

\mat{0} &\mat{I} | |||

\end{array}\right)</math> | |||

|} | |||

</div> | |||

Here <math>\mat{I}</math> is an <math>r</math>-by-<math>r</math> indentity matrix, <math>\mat{0}</math> is an <math>r</math>-by-<math>t</math> | |||

zero matrix, <math>\mat{R}</math> is a nonzero <math>t</math>-by-<math>r</math> matrix, and <math>\mat{Q}</math> is an | |||

<math>t</math>-by-<math>t</math> matrix. The first <math>t</math> states are transient and the last <math>r</math> states | |||

are absorbing. | |||

In [[guide:52e01d4de7|Introduction]], we saw that the entry <math>p_{ij}^{(n)}</math> of the matrix | |||

<math>\mat{P}^n</math> is the probability of being in the state <math>s_j</math> after <math>n</math> steps, | |||

when | |||

the chain is started in state <math>s_i</math>. A standard matrix algebra argument shows | |||

that | |||

<math>\mat{P}^n</math> is of the form | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:10px;margin-left:5px;"><math>\hbox{TR.}</math></span><span style="text-align:center;display:inline-block;margin-right:10px;"><math>\hbox{ABS.}</math></span> | |||

|- | |||

| <math>\mat{P} =</math> | |||

| style= "padding:0px" |<math>\begin{array}{cc} | |||

\hbox{TR.}\strut \\ | |||

\hbox{ABS.}\strut | |||

\end{array}</math> || <math>\left(\begin{array}{c|c} | |||

\mat{Q}^n &\ast \\ | |||

\hline | |||

\mat{0} &\mat{I} | |||

\end{array}\right)</math> | |||

|} | |||

</div> | |||

where the asterisk <math>*</math> stands for the <math>t</math>-by-<math>r</math> matrix in the upper right-hand | |||

corner of <math>\mat{P}^n.</math> (This submatrix can be written in terms of <math>\mat{Q}</math> | |||

and <math>\mat{R}</math>, but the expression is complicated and is not needed at this time.) | |||

The form of <math>\mat{P}^n</math> shows that the entries of | |||

<math>\mat{Q}^n</math> give the probabilities for being in each of the transient states after <math>n</math> | |||

steps for each possible transient starting state. For our first theorem we prove that the probability of being in the transient states after <math>n</math> steps approaches zero. Thus every entry | |||

of <math>\mat{ Q}^n</math> must approach zero as <math>n</math> approaches infinity (i.e, <math>\mat{Q}^n | |||

\to \mat{0}</math>). | |||

===Probability of Absorption=== | |||

{{proofcard|Theorem|thm_11.2.1|In an absorbing Markov chain, the probability that the process will be absorbed | |||

is 1 (i.e., <math>\mat{Q}^n \to \mat{0}</math> as <math>n \to \infty</math>).|From each nonabsorbing state <math>s_j</math> it is possible to reach an absorbing state. | |||

Let <math>m_j</math> be the minimum number of steps required to reach an absorbing state, | |||

starting from <math>s_j</math>. Let <math>p_j</math> be the probability that, starting from <math>s_j</math>, | |||

the process will not reach an absorbing state in <math>m_j</math> steps. | |||

Then <math>p_j < 1</math>. Let <math>m</math> be the largest of the <math>m_j</math> and let <math>p</math> be the largest | |||

of <math>p_j</math>. The probability of not being absorbed in <math>m</math> steps is less than or | |||

equal to <math>p</math>, | |||

in <math>2m</math> steps less than or equal to <math>p^2</math>, etc. Since <math>p < 1</math> these | |||

probabilities tend to 0. | |||

Since the probability of not being absorbed in <math>n</math> steps is monotone | |||

decreasing, these | |||

probabilities also tend to 0, hence <math>\lim_{n \rightarrow \infty } \mat{Q}^n = | |||

0.</math>}} | |||

===The Fundamental Matrix=== | |||

{{proofcard|Theorem|thm_11.2.2|For an absorbing Markov chain the matrix <math>\mat{I} - \mat{Q}</math> has an inverse | |||

<math>\mat{N}</math> and | |||

<math>\mat{N} =\mat{I} + \mat{Q} + \mat{Q}^{2} + \cdots\ </math>. The <math>ij</math>-entry | |||

<math>n_{ij}</math> of the | |||

matrix <math>\mat{N}</math> is the expected number of times the chain is in state <math>s_j</math>, | |||

given that | |||

it starts in state <math>s_i</math>. The initial state is counted if <math>i = j</math>.|Let <math>(\mat{I} - \mat{Q})\mat{x}~=~0;</math> that is <math>\mat{x}~=~\mat{Q}\mat{x}.</math> Then, | |||

iterating | |||

this we see that | |||

<math>\mat{x}~=~\mat{Q}^{n}\mat x.</math> Since <math>\mat{Q}^{n} \rightarrow \mat{0}</math>, we | |||

have | |||

<math>\mat{Q}^n\mat{x} \rightarrow \mat{0}</math>, so | |||

<math>\mat{x}~=~\mat{0}</math>. Thus <math>(\mat{I} - \mat{Q})^{-1}~=~\mat{N}</math> exists. Note | |||

next that | |||

<math display="block"> | |||

(\mat{I} - \mat{Q}) (\mat{I} + \mat{Q} + \mat{Q}^2 + \cdots + \mat{Q}^n) = | |||

\mat{I} - | |||

\mat{Q}^{n + 1}\ . | |||

</math> | |||

Thus multiplying both sides by <math>\mat{N}</math> gives | |||

<math display="block"> | |||

\mat{I} + \mat{Q} + \mat{Q}^2 + \cdots + \mat{Q}^n = \mat{N} (\mat{I} - | |||

\mat{Q}^{n + 1})\ . | |||

</math> | |||

Letting <math>n</math> tend to infinity we have | |||

<math display="block"> | |||

\mat{N} = \mat{I} + \mat{Q} + \mat{Q}^2 + \cdots\ . | |||

</math> | |||

Let <math>s_i</math> and <math>s_j</math> be two transient states, and assume throughout the | |||

remainder of the proof | |||

that <math>i</math> and <math>j</math> are fixed. Let <math>X^{(k)}</math> be a random variable which equals 1 | |||

if the chain is in | |||

state <math>s_j</math> after <math>k</math> steps, and equals 0 otherwise. For each <math>k</math>, this random | |||

variable | |||

depends upon both <math>i</math> and <math>j</math>; we choose not to explicitly show this dependence | |||

in the | |||

interest of clarity. We have | |||

<math display="block"> | |||

P(X^{(k)} = 1) = q_{ij}^{(k)}\ , | |||

</math> | |||

and | |||

<math display="block"> | |||

P(X^{(k)} = 0) = 1 - q_{ij}^{(k)}\ , | |||

</math> | |||

where <math>q_{ij}^{(k)}</math> is the <math>ij</math>th entry of <math>\mat{Q}^k</math>. These equations hold | |||

for <math>k = 0</math> since <math>\mat{Q}^0 = \mat{I}</math>. Therefore, since <math>X^{(k)}</math> is a 0-1 | |||

random variable, <math>E(X^{(k)}) = q_{ij}^{(k)}</math>. | |||

The expected number of times the chain is in state <math>s_j</math> in the first <math>n</math> | |||

steps, | |||

given that it starts in state <math>s_i</math>, is clearly | |||

<math display="block"> | |||

E\Bigl(X^{(0)} + X^{(1)} + \cdots + X^{(n)} \Bigr) = q_{ij}^{(0)} + | |||

q_{ij}^{(1)} + | |||

\cdots + q_{ij}^{(n)}\ . | |||

</math> | |||

Letting <math>n</math> tend to infinity we have | |||

<math display="block"> | |||

E\Bigl(X^{(0)} + X^{(1)} + \cdots \Bigr) = q_{ij}^{(0)} + | |||

q_{ij}^{(1)} + \cdots = n_{ij} \ . | |||

</math>}} | |||

{{defncard|label=|id=|For an absorbing Markov chain <math>\mat{P}</math>, the matrix <math>\mat{N} = (\mat{I} - | |||

\mat{Q})^{-1}</math> is | |||

called the | |||

''fundamental matrix'' for <math>\mat{P}</math>. The entry <math>n_{ij}</math> of <math>\mat{N}</math> gives the | |||

expected number | |||

of times that the process is in the transient state <math>s_j</math> if it is started in | |||

the transient | |||

state <math>s_i</math>.}} | |||

<span id="exam 11.2.2"/> | |||

'''Example''' | |||

([[#exam 11.2.1 |Example]] continued) | |||

In the Drunkard's Walk example, the transition matrix in canonical form is | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-left:20px;padding-right:30px;"><math>1</math></span><span style=";text-align:center;display:inline-block;padding-right:30px;"><math>2</math></span> <span style="text-align:center;display:inline-block;padding-right:20px;"><math> 3</math></span> <span style="text-align:center;display:inline-block;padding-right:17px;"><math>0</math></span> <span style="text-align:center;display:inline-block;"><math>4</math></span> | |||

|- | |||

| ||<span><math>1</math></span> || <span style="text-align:center;display:inline-block;margin-left:20px;padding-right:25px;"><math>0</math></span><span style=";text-align:center;display:inline-block;padding-right:20px;"><math>1/2</math></span> <span style="text-align:center;display:inline-block;padding-right:18px;"><math> 0</math></span><span style="text-align:center;display:inline-block;padding-right:8px;"><math>1/2</math></span> <span style="text-align:center;display:inline-block;"><math>0</math></span> | |||

|- | |||

| <math>\mat{P} =\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

2\\ | |||

3\\ | |||

0\\ | |||

4 | |||

\end{array}</math> || <math>\left(\begin{array}{ccc|cc} | |||

1/2 & 0 &1/2 & 0 & 0 \\ | |||

0 &1/2 & 0 & 0 & 1/2\\ | |||

\hline | |||

0 & 0 & 0 & 1 & 0 \\ | |||

0 & 0 & 0 & 0 & 1 | |||

\end{array}\right)</math> | |||

|} | |||

</div> | |||

From this we see that the matrix <math>\mat{Q}</math> is | |||

<math display="block"> | |||

\mat{Q} = \pmatrix{ | |||

0 & 1/2 & 0 \cr | |||

1/2 & 0 & 1/2 \cr | |||

0 & 1/2 & 0 \cr}\ , | |||

</math> | |||

and | |||

<math display="block"> | |||

\mat{I} - \mat{Q} = \pmatrix{ | |||

1 & -1/2 & 0 \cr | |||

-1/2 & 1 & -1/2 \cr | |||

0 & -1/2 & 1 \cr}\ . | |||

</math> | |||

Computing <math>(\mat{I} - \mat{Q})^{-1}</math>, we find | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:22px;margin-left:15px;"><math>1</math></span><span style="text-align:center;display:inline-block;margin-right:15px;"><math>2</math></span> <span style="text-align:center;display:inline-block;"><math>3</math></span> | |||

|- | |||

| <math>\hbox{$\mat{N} = (\mat{I} - \mat{Q})^{-1} = {}$} </math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{1} \\ | |||

\mbox{2}\\ | |||

\mbox{3}\\ | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1 \,& 0\, & 0 \\ | |||

.5 \,& .5\, & 0 \\ | |||

0 \,& 1\, & 0\\ | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

From the middle row of <math>\mat{N}</math>, we see that if we start in state 2, then the expected number of times in states 1, 2, and 3 before being absorbed are 1, 2, and 1. | |||

===Time to Absorption=== | |||

We now consider the question: Given that the chain starts in state <math>s_i</math>, what | |||

is the expected number of steps before the chain is absorbed? The answer is | |||

given | |||

in the next theorem. | |||

{{proofcard|Theorem|thm_11.2.2.5|Let <math>t_i</math> be the expected number of steps | |||

before | |||

the chain is absorbed, given that the chain starts in state <math>s_i</math>, and let | |||

<math>\mat{t}</math> | |||

be the column vector whose <math>i</math>th entry is <math>t_i</math>. Then | |||

<math display="block"> | |||

\mat{t} = \mat{N}\mat{c}\ , | |||

</math> | |||

where <math>\mat{c}</math> is a column vector all of whose entries are 1.|If we add all the entries in the <math>i</math>th row of <math>\mat{N}</math>, | |||

we will have the expected number of times in any of the transient states for a | |||

given | |||

starting state <math>s_i</math>, that is, the expected time required before being | |||

absorbed. Thus, | |||

<math>t_i</math> is the sum of the entries in the <math>i</math>th row of <math>\mat{N}</math>. If we write | |||

this statement | |||

in matrix form, we obtain the theorem.}} | |||

===Absorption Probabilities=== | |||

{{proofcard|Theorem|thm_11.2.3|Let <math>b_{ij}</math> be the probability that an absorbing chain will be absorbed in the | |||

absorbing state <math>s_j</math> if it starts in the transient state <math>s_i</math>. Let <math>\mat{B}</math> | |||

be the matrix with entries <math>b_{ij}</math>. Then <math>\mat{B}</math> is an <math>t</math>-by-<math>r</math> matrix, | |||

and | |||

<math display="block"> | |||

\mat{B} = \mat{N} \mat{R}\ , | |||

</math> | |||

where <math>\mat{N}</math> is the fundamental matrix and <math>\mat{R}</math> is as in the canonical | |||

form.|We have | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

\mat{B}_{ij} &=& \sum_n\sum_k q_{ik}^{(n)} r_{kj} \\ | |||

&=& \sum_k \sum_n q_{ik}^{(n)} r_{kj} \\ | |||

&=& \sum_k n_{ik}r_{kj} \\ | |||

&=& (\mat{N}\mat{R})_{ij}\ . | |||

\end{eqnarray*} | |||

</math> | |||

This completes the proof.}} | |||

Another proof of this is given in [[exercise:Ef04d1ba60 |Exercise]]. | |||

<span id="exam 11.2.3"/> | |||

'''Example''' | |||

([[#exam 11.2.2|Example]] continued) | |||

In the Drunkard's Walk example, we found that | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:22px;margin-left:20px;"><math>1</math></span><span style="text-align:center;display:inline-block;margin-right:22px;"><math>2</math></span> <span style="text-align:center;display:inline-block;"><math>3</math></span> | |||

|- | |||

| <math>\hbox{$\mat{N} = {}$}</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{1} \\ | |||

\mbox{2}\\ | |||

\mbox{3}\\ | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

3/2 & 1 & 1/2 \\ | |||

1 & 2 & 1 \\ | |||

1/2 & 1 & 3/2 \\ | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

Hence, | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

\mat{t} = \mat{N}\mat{c} &=& \pmatrix{ | |||

3/2 & 1 & 1/2 \cr | |||

1 & 2 & 1 \cr | |||

1/2 & 1 & 3/2 \cr | |||

} \pmatrix{ | |||

1 \cr | |||

1 \cr | |||

1 \cr | |||

} | |||

\\ | |||

&=& \pmatrix{ | |||

3 \cr | |||

4 \cr | |||

3 \cr | |||

}\ . | |||

\end{eqnarray*} | |||

</math> | |||

Thus, starting in states 1, 2, and 3, the expected times to absorption are | |||

3, 4, and 3, respectively. | |||

From the canonical form, | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:33px;margin-left:20px;"><math>0</math></span><span><math>4</math></span> | |||

|- | |||

| <math>\hbox{$\mat{N} = {}$}</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

1 \\ | |||

2 \\ | |||

3 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1/2 & 0 \\ | |||

0 & 0 \\ | |||

0 & 1/2 | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

Hence, | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

\mat{B} = \mat{N} \mat{R} &=& \pmatrix{ | |||

3/2 & 1 & 1/2 \cr | |||

1 & 2 & 1 \cr | |||

1/2 & 1 & 3/2 \cr} \cdot \pmatrix{ | |||

1/2 & 0 \cr | |||

0 & 0 \cr | |||

0 & 1/2 \cr} \cr | |||

\end{eqnarray*} | |||

</math> | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:33px;margin-left:20px;"><math>0</math></span><span><math>4</math></span> | |||

|- | |||

| <math>=\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

1 \\ | |||

2 \\ | |||

3 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1/2 & 0 \\ | |||

0 & 0 \\ | |||

0 & 1/2 | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

Here the first row tells us that, starting from state <math>1</math>, there is | |||

probability 3/4 | |||

of absorption in state <math>0</math> and 1/4 of absorption in state <math>4</math>. | |||

===Computation=== | |||

The fact that we have been able to obtain these three descriptive quantities in | |||

matrix form makes it very easy to write a computer program that determines | |||

these quantities for a given absorbing chain matrix. | |||

The program ''' AbsorbingChain''' calculates the basic | |||

descriptive quantities of an | |||

absorbing Markov chain. | |||

We have run the program ''' AbsorbingChain''' for the example of the | |||

drunkard's walk ([[#exam 11.2.1 |Example]]) with | |||

5 blocks. | |||

The results are as follows: | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:25px;margin-left:22px;"><math>1</math></span><span style="text-align:center;display:inline-block;margin-right:30px;"><math>2</math></span><span style="text-align:center;display:inline-block;margin-right:25px;"><math>3</math></span><span style="text-align:center;display:inline-block;"><math>4</math></span> | |||

|- | |||

| <math>\mat{Q} =\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

1 \\ | |||

2 \\ | |||

3 \\ | |||

4 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.00 & .50 & .00 & .00\\ | |||

.50 & .00 & .50 & .00\\ | |||

.00 & .50 & .00 & .50\\ | |||

.00 & .00 & .50 & .00\\ | |||

\end{pmatrix};</math> | |||

|} | |||

</div> | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:25px;margin-left:22px;"><math>0</math></span><span style="text-align:center;display:inline-block;"><math>5</math></span> | |||

|- | |||

| <math>\mat{R} =\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

1 \\ | |||

2 \\ | |||

3 \\ | |||

4 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.50 & .00 \\ | |||

.00 & .00 \\ | |||

.00 & .00 \\ | |||

.00 & .50 | |||

\end{pmatrix};</math> | |||

|} | |||

</div> | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:35px;margin-left:22px;"><math>1</math></span><span style="text-align:center;display:inline-block;margin-right:35px;"><math>2</math></span><span style="text-align:center;display:inline-block;margin-right:35px;"><math>3</math></span><span style="text-align:center;display:inline-block;"><math>4</math></span> | |||

|- | |||

| <math>\mat{N} =\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

1 \\ | |||

2 \\ | |||

3 \\ | |||

4 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1.60 & 1.20 & .80 & .40 \\ | |||

1.20 & 2.40 & 1.60 & .80 \\ | |||

.80 & 1.60 & 2.40 & 1.20\\ | |||

.40 & .80 & 1.20 & 1.60 | |||

\end{pmatrix};</math> | |||

|} | |||

</div> | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| <math>\mat{t} =\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

1 \\ | |||

2 \\ | |||

3 \\ | |||

4 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

4.00 \\ | |||

6.00 \\ | |||

6.00 \\ | |||

4.00 | |||

\end{pmatrix};</math> | |||

|} | |||

</div> | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:25px;margin-left:22px;"><math>0</math></span><span style="text-align:center;display:inline-block;"><math>5</math></span> | |||

|- | |||

| <math>\mat{B} =\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

1 \\ | |||

2 \\ | |||

3 \\ | |||

4 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.80 & .20 \\ | |||

.60 & .40 \\ | |||

.40 & .60 \\ | |||

.20 & .80 \\ | |||

\end{pmatrix};</math> | |||

|} | |||

</div> | |||

Note that the probability of reaching the bar before reaching home, starting | |||

at <math>x</math>, is <math>x/5</math> (i.e., proportional to the distance of home from the starting | |||

point). (See [[exercise:087f70d94a |Exercise]].) | |||

==General references== | |||

{{cite web |url=https://math.dartmouth.edu/~prob/prob/prob.pdf |title=Grinstead and Snell’s Introduction to Probability |last=Doyle |first=Peter G.|date=2006 |access-date=June 6, 2024}} | |||

Latest revision as of 14:44, 17 June 2024

The subject of Markov chains is best studied by considering special types of Markov chains. The first type that we shall study is called an absorbing Markov chain.

A state [math]s_i[/math] of a Markov chain is called absorbing if it is impossible to leave it (i.e., [math]p_{ii} = 1[/math]). A Markov chain is absorbing if it has at least one absorbing state, and if from every state it is possible to go to an absorbing state (not necessarily in one step).

In an absorbing Markov chain, a state which is not absorbing is called transient.

Drunkard's Walk

Example A man walks along a four-block stretch of Park Avenue (see [[#fig 11.3|Figure]]). If he is at corner 1, 2, or 3, then he walks to the left or right with equal probability. He continues until he reaches corner 4, which is a bar, or corner 0, which is his home. If he reaches either home or the bar, he stays there.

We form a Markov chain with states 0, 1, 2, 3, and 4. States 0 and 4 are

absorbing states. The transition matrix is then

| [math]0[/math][math]1[/math] [math]2[/math] [math]3[/math] [math]4[/math] | ||

| [math]\mat{P} =\,\,[/math] | [math]\begin{array}{c c c c} 0 \\ 1\\ 2\\ 3\\ 4 \end{array}[/math] | [math]\begin{pmatrix} 1 & 0 & 0 & 0 & 0 \\ 1/2 & 0 & 1/2 & 0 & 0 \\ 0 & 1/2 & 0 & 1/2 & 0 \\ 0 & 0 & 1/2 & 0 & 1/2 \\ 0 & 0 & 0 & 0 & 1 \\ \end{pmatrix}[/math] |

The states 1, 2, and 3 are transient states, and from any of these

it is possible to reach the absorbing states 0 and 4. Hence the chain is an

absorbing chain. When a process reaches an absorbing state, we shall say that

it is absorbed.

The most obvious question that can be asked about such a chain is: What is the probability that the process will eventually reach an absorbing state? Other interesting questions include: (a) What is the probability that the process will end up in a given absorbing state? (b) On the average, how long will it take for the process to be absorbed? (c) On the average, how many times will the process be in each transient state? The answers to all these questions depend, in general, on the state from which the process starts as well as the transition probabilities.

Canonical Form

Consider an arbitrary absorbing Markov chain. Renumber the states so that the transient states come first. If there are [math]r[/math] absorbing states and [math]t[/math] transient states, the transition matrix will have the following canonical form

| [math]\hbox{TR.}[/math][math]\hbox{ABS.}[/math] | ||

| [math]\mat{P} =[/math] | [math]\begin{array}{cc} \hbox{TR.}\strut \\ \hbox{ABS.}\strut \end{array}[/math] | [math]\left(\begin{array}{c|c} \mat{Q} &\mat{R} \\ \hline \mat{0} &\mat{I} \end{array}\right)[/math] |

Here [math]\mat{I}[/math] is an [math]r[/math]-by-[math]r[/math] indentity matrix, [math]\mat{0}[/math] is an [math]r[/math]-by-[math]t[/math]

zero matrix, [math]\mat{R}[/math] is a nonzero [math]t[/math]-by-[math]r[/math] matrix, and [math]\mat{Q}[/math] is an

[math]t[/math]-by-[math]t[/math] matrix. The first [math]t[/math] states are transient and the last [math]r[/math] states

are absorbing.

In Introduction, we saw that the entry [math]p_{ij}^{(n)}[/math] of the matrix [math]\mat{P}^n[/math] is the probability of being in the state [math]s_j[/math] after [math]n[/math] steps, when the chain is started in state [math]s_i[/math]. A standard matrix algebra argument shows that [math]\mat{P}^n[/math] is of the form

| [math]\hbox{TR.}[/math][math]\hbox{ABS.}[/math] | ||

| [math]\mat{P} =[/math] | [math]\begin{array}{cc} \hbox{TR.}\strut \\ \hbox{ABS.}\strut \end{array}[/math] | [math]\left(\begin{array}{c|c} \mat{Q}^n &\ast \\ \hline \mat{0} &\mat{I} \end{array}\right)[/math] |

where the asterisk [math]*[/math] stands for the [math]t[/math]-by-[math]r[/math] matrix in the upper right-hand

corner of [math]\mat{P}^n.[/math] (This submatrix can be written in terms of [math]\mat{Q}[/math]

and [math]\mat{R}[/math], but the expression is complicated and is not needed at this time.)

The form of [math]\mat{P}^n[/math] shows that the entries of

[math]\mat{Q}^n[/math] give the probabilities for being in each of the transient states after [math]n[/math]

steps for each possible transient starting state. For our first theorem we prove that the probability of being in the transient states after [math]n[/math] steps approaches zero. Thus every entry

of [math]\mat{ Q}^n[/math] must approach zero as [math]n[/math] approaches infinity (i.e, [math]\mat{Q}^n

\to \mat{0}[/math]).

Probability of Absorption

In an absorbing Markov chain, the probability that the process will be absorbed is 1 (i.e., [math]\mat{Q}^n \to \mat{0}[/math] as [math]n \to \infty[/math]).

Show ProofFrom each nonabsorbing state [math]s_j[/math] it is possible to reach an absorbing state. Let [math]m_j[/math] be the minimum number of steps required to reach an absorbing state, starting from [math]s_j[/math]. Let [math]p_j[/math] be the probability that, starting from [math]s_j[/math], the process will not reach an absorbing state in [math]m_j[/math] steps. Then [math]p_j \lt 1[/math]. Let [math]m[/math] be the largest of the [math]m_j[/math] and let [math]p[/math] be the largest of [math]p_j[/math]. The probability of not being absorbed in [math]m[/math] steps is less than or equal to [math]p[/math], in [math]2m[/math] steps less than or equal to [math]p^2[/math], etc. Since [math]p \lt 1[/math] these probabilities tend to 0. Since the probability of not being absorbed in [math]n[/math] steps is monotone decreasing, these probabilities also tend to 0, hence [math]\lim_{n \rightarrow \infty } \mat{Q}^n = 0.[/math]

The Fundamental Matrix

For an absorbing Markov chain the matrix [math]\mat{I} - \mat{Q}[/math] has an inverse [math]\mat{N}[/math] and [math]\mat{N} =\mat{I} + \mat{Q} + \mat{Q}^{2} + \cdots\ [/math]. The [math]ij[/math]-entry [math]n_{ij}[/math] of the matrix [math]\mat{N}[/math] is the expected number of times the chain is in state [math]s_j[/math], given that it starts in state [math]s_i[/math]. The initial state is counted if [math]i = j[/math].

Show ProofLet [math](\mat{I} - \mat{Q})\mat{x}~=~0;[/math] that is [math]\mat{x}~=~\mat{Q}\mat{x}.[/math] Then, iterating this we see that [math]\mat{x}~=~\mat{Q}^{n}\mat x.[/math] Since [math]\mat{Q}^{n} \rightarrow \mat{0}[/math], we have [math]\mat{Q}^n\mat{x} \rightarrow \mat{0}[/math], so [math]\mat{x}~=~\mat{0}[/math]. Thus [math](\mat{I} - \mat{Q})^{-1}~=~\mat{N}[/math] exists. Note next that

Let [math]s_i[/math] and [math]s_j[/math] be two transient states, and assume throughout the remainder of the proof that [math]i[/math] and [math]j[/math] are fixed. Let [math]X^{(k)}[/math] be a random variable which equals 1 if the chain is in state [math]s_j[/math] after [math]k[/math] steps, and equals 0 otherwise. For each [math]k[/math], this random variable depends upon both [math]i[/math] and [math]j[/math]; we choose not to explicitly show this dependence in the interest of clarity. We have

The expected number of times the chain is in state [math]s_j[/math] in the first [math]n[/math]

steps,

given that it starts in state [math]s_i[/math], is clearly

For an absorbing Markov chain [math]\mat{P}[/math], the matrix [math]\mat{N} = (\mat{I} - \mat{Q})^{-1}[/math] is called the fundamental matrix for [math]\mat{P}[/math]. The entry [math]n_{ij}[/math] of [math]\mat{N}[/math] gives the expected number of times that the process is in the transient state [math]s_j[/math] if it is started in the transient state [math]s_i[/math].

Example (Example continued) In the Drunkard's Walk example, the transition matrix in canonical form is

| [math]1[/math][math]2[/math] [math] 3[/math] [math]0[/math] [math]4[/math] | ||

| [math]1[/math] | [math]0[/math][math]1/2[/math] [math] 0[/math][math]1/2[/math] [math]0[/math] | |

| [math]\mat{P} =\,\,[/math] | [math]\begin{array}{c c c c} 2\\ 3\\ 0\\ 4 \end{array}[/math] | [math]\left(\begin{array}{ccc|cc} 1/2 & 0 &1/2 & 0 & 0 \\ 0 &1/2 & 0 & 0 & 1/2\\ \hline 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 1 \end{array}\right)[/math] |

From this we see that the matrix [math]\mat{Q}[/math] is

and

Computing [math](\mat{I} - \mat{Q})^{-1}[/math], we find

| [math]1[/math][math]2[/math] [math]3[/math] | ||

| [math]\hbox{$\mat{N} = (\mat{I} - \mat{Q})^{-1} = {}$} [/math] | [math]\begin{array}{c c c c} \mbox{1} \\ \mbox{2}\\ \mbox{3}\\ \end{array}[/math] | [math]\begin{pmatrix} 1 \,& 0\, & 0 \\ .5 \,& .5\, & 0 \\ 0 \,& 1\, & 0\\ \end{pmatrix}[/math] |

From the middle row of [math]\mat{N}[/math], we see that if we start in state 2, then the expected number of times in states 1, 2, and 3 before being absorbed are 1, 2, and 1.

Time to Absorption

We now consider the question: Given that the chain starts in state [math]s_i[/math], what is the expected number of steps before the chain is absorbed? The answer is given in the next theorem.

Let [math]t_i[/math] be the expected number of steps before the chain is absorbed, given that the chain starts in state [math]s_i[/math], and let [math]\mat{t}[/math] be the column vector whose [math]i[/math]th entry is [math]t_i[/math]. Then

If we add all the entries in the [math]i[/math]th row of [math]\mat{N}[/math], we will have the expected number of times in any of the transient states for a given starting state [math]s_i[/math], that is, the expected time required before being absorbed. Thus, [math]t_i[/math] is the sum of the entries in the [math]i[/math]th row of [math]\mat{N}[/math]. If we write this statement in matrix form, we obtain the theorem.

Absorption Probabilities

Let [math]b_{ij}[/math] be the probability that an absorbing chain will be absorbed in the absorbing state [math]s_j[/math] if it starts in the transient state [math]s_i[/math]. Let [math]\mat{B}[/math] be the matrix with entries [math]b_{ij}[/math]. Then [math]\mat{B}[/math] is an [math]t[/math]-by-[math]r[/math] matrix, and

We have

Another proof of this is given in Exercise.

Example (Example continued)

In the Drunkard's Walk example, we found that

| [math]1[/math][math]2[/math] [math]3[/math] | ||

| [math]\hbox{$\mat{N} = {}$}[/math] | [math]\begin{array}{c c c c} \mbox{1} \\ \mbox{2}\\ \mbox{3}\\ \end{array}[/math] | [math]\begin{pmatrix} 3/2 & 1 & 1/2 \\ 1 & 2 & 1 \\ 1/2 & 1 & 3/2 \\ \end{pmatrix}[/math] |

Hence,

Thus, starting in states 1, 2, and 3, the expected times to absorption are 3, 4, and 3, respectively.

From the canonical form,

| [math]0[/math][math]4[/math] | ||

| [math]\hbox{$\mat{N} = {}$}[/math] | [math]\begin{array}{c c c c} 1 \\ 2 \\ 3 \end{array}[/math] | [math]\begin{pmatrix} 1/2 & 0 \\ 0 & 0 \\ 0 & 1/2 \end{pmatrix}[/math] |

Hence,

| [math]0[/math][math]4[/math] | ||

| [math]=\,\,[/math] | [math]\begin{array}{c c c c} 1 \\ 2 \\ 3 \end{array}[/math] | [math]\begin{pmatrix} 1/2 & 0 \\ 0 & 0 \\ 0 & 1/2 \end{pmatrix}[/math] |

Here the first row tells us that, starting from state [math]1[/math], there is probability 3/4 of absorption in state [math]0[/math] and 1/4 of absorption in state [math]4[/math].

Computation

The fact that we have been able to obtain these three descriptive quantities in matrix form makes it very easy to write a computer program that determines these quantities for a given absorbing chain matrix. The program AbsorbingChain calculates the basic descriptive quantities of an absorbing Markov chain. We have run the program AbsorbingChain for the example of the drunkard's walk (Example) with 5 blocks. The results are as follows:

| [math]1[/math][math]2[/math][math]3[/math][math]4[/math] | ||

| [math]\mat{Q} =\,\,[/math] | [math]\begin{array}{c c c c} 1 \\ 2 \\ 3 \\ 4 \end{array}[/math] | [math]\begin{pmatrix} .00 & .50 & .00 & .00\\ .50 & .00 & .50 & .00\\ .00 & .50 & .00 & .50\\ .00 & .00 & .50 & .00\\ \end{pmatrix};[/math] |

| [math]0[/math][math]5[/math] | ||

| [math]\mat{R} =\,\,[/math] | [math]\begin{array}{c c c c} 1 \\ 2 \\ 3 \\ 4 \end{array}[/math] | [math]\begin{pmatrix} .50 & .00 \\ .00 & .00 \\ .00 & .00 \\ .00 & .50 \end{pmatrix};[/math] |

| [math]1[/math][math]2[/math][math]3[/math][math]4[/math] | ||

| [math]\mat{N} =\,\,[/math] | [math]\begin{array}{c c c c} 1 \\ 2 \\ 3 \\ 4 \end{array}[/math] | [math]\begin{pmatrix} 1.60 & 1.20 & .80 & .40 \\ 1.20 & 2.40 & 1.60 & .80 \\ .80 & 1.60 & 2.40 & 1.20\\ .40 & .80 & 1.20 & 1.60 \end{pmatrix};[/math] |

| [math]\mat{t} =\,\,[/math] | [math]\begin{array}{c c c c} 1 \\ 2 \\ 3 \\ 4 \end{array}[/math] | [math]\begin{pmatrix} 4.00 \\ 6.00 \\ 6.00 \\ 4.00 \end{pmatrix};[/math] |

| [math]0[/math][math]5[/math] | ||

| [math]\mat{B} =\,\,[/math] | [math]\begin{array}{c c c c} 1 \\ 2 \\ 3 \\ 4 \end{array}[/math] | [math]\begin{pmatrix} .80 & .20 \\ .60 & .40 \\ .40 & .60 \\ .20 & .80 \\ \end{pmatrix};[/math] |

Note that the probability of reaching the bar before reaching home, starting

at [math]x[/math], is [math]x/5[/math] (i.e., proportional to the distance of home from the starting

point). (See Exercise.)

General references

Doyle, Peter G. (2006). "Grinstead and Snell's Introduction to Probability" (PDF). Retrieved June 6, 2024.