guide:Eb6d7b34e1: Difference between revisions


<div class="d-none"><math>
\newcommand{\indep}[0]{\ensuremath{\perp\!\!\!\perp}}
\newcommand{\dpartial}[2]{\frac{\partial #1}{\partial #2}}
\newcommand{\abs}[1]{\left| #1 \right|}
\newcommand\autoop{\left(}
\newcommand\autocp{\right)}
\newcommand\autoob{\left[}
\newcommand\autocb{\right]}
\newcommand{\vecbr}[1]{\langle #1 \rangle}
\newcommand{\ui}{\hat{\imath}}
\newcommand{\uj}{\hat{\jmath}}
\newcommand{\uk}{\hat{k}}
\newcommand{\V}{\vec{V}}
\newcommand{\half}[1]{\frac{#1}{2}}
\newcommand{\recip}[1]{\frac{1}{#1}}
\newcommand{\invsqrt}[1]{\recip{\sqrt{#1}}}
\newcommand{\halfpi}{\half{\pi}}
\newcommand{\windbar}[2]{\Big|_{#1}^{#2}}
\newcommand{\rightinfwindbar}[0]{\Big|_{0}^\infty}
\newcommand{\leftinfwindbar}[0]{\Big|_{-\infty}^0}
\newcommand{\state}[1]{\large\protect\textcircled{\textbf{\small#1}}}
\newcommand{\shrule}{\\ \centerline{\rule{13cm}{0.4pt}}}
\newcommand{\tbra}[1]{$\bra{#1}$}
\newcommand{\tket}[1]{$\ket{#1}$}
\newcommand{\tbraket}[2]{$\braket{#1}{#2}$}
\newcommand{\infint}[0]{\int_{-\infty}^{\infty}}
\newcommand{\rightinfint}[0]{\int_0^\infty}
\newcommand{\leftinfint}[0]{\int_{-\infty}^0}
\newcommand{\wavefuncint}[1]{\infint|#1|^2}
\newcommand{\ham}[0]{\hat{H}}
\newcommand{\mathds}{\mathbb}</math></div>

===The Basic Foundation===

In this section, we consider the case where there are unobserved confounders. In such a case, we cannot rely on regression, as these unobserved confounders prevent a learner from identifying <math>p^*(y|a, x)</math> correctly. One may be tempted to simply fit a predictive model on <math>(a, y)</math> pairs to get <math>p(y|a; \theta)</math> that approximates <math>p^*(y|a)</math>, and call it a day. We have however learned earlier that this does not approximate the causal effect, that is, <math>p^*(y|\mathrm{do}(a))</math>, due to the spurious path, <math>a \leftarrow x \to y</math>, which is open because we did not observe <math>x</math>.

When there are unobserved confounders, we can actively collect data that allows us to perform causal inference. This is a departure from the usual practice in machine learning, where we often assume that data is provided to us and the goal is to use a learning algorithm to build a predictive model. This is often not enough in causal inference, and we are now presented with the first such case, where the assumption of ‘no unobserved confounder’ has been violated.

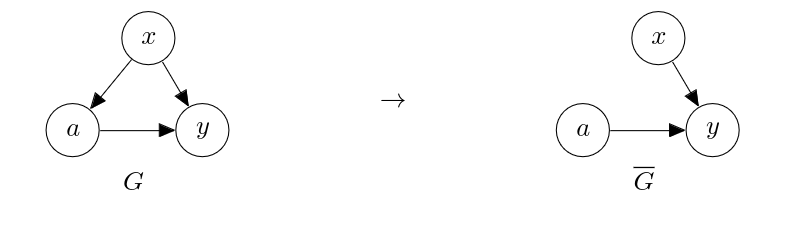

In order to estimate the causal effect of the action <math>a</math> on the outcome <math>y</math>, we severed the edge from the confounder <math>x</math> to the action <math>a</math>, as in

<div id="" class="d-flex justify-content-center">
[[File:5fe26.png | 400px | thumb]]
</div>

This suggests that if we collect data according to the latter graph <math>\overline{G}</math>, we may be able to estimate the causal effect of <math>a</math> on <math>y</math> despite the unobserved confounder <math>x</math>. To do so, we need to decide on the prior distribution over the action, <math>\overline{p}(a)</math>, such that <math>a</math> is independent of the covariate <math>x</math>. It is a common practice to choose a uniform distribution over the action as <math>\overline{p}(a)</math>. For instance, if <math>a</math> is binary,

<math display="block">
\begin{align}
\overline{p}(a=0) = \overline{p}(a=1) = 0.5.
\end{align}
</math>

The prior distribution over the covariate <math>x</math>, <math>p(x)</math>, is not something we choose; it is determined by the environment. That is, instead of specifying <math>p(x)</math> or sampling explicitly from it (which was by assumption impossible), we go out and recruit samples from this prior distribution. In the vaccination example above, this would correspond to recruiting subjects from a general population without any particular filtering or selection.<ref group="Notes" >
Of course, we can filter these subjects to satisfy a certain set of criteria (inclusion criteria) in order to estimate a conditional average treatment effect.
</ref>

For each recruited subject <math>x</math>, we assign the treatment <math>a</math>, drawn from <math>\overline{p}(a)</math>, which is independent of <math>x</math>. This process is called ‘randomization’, because it assigns an individual drawn from the population, <math>x \sim p(x)</math>, randomly to either a treatment or placebo group according to <math>\overline{p}(a)</math>, where ‘randomly’ refers to ‘without using any information about the individual’. This process is also ‘controlled’, because we control the assignment of each individual to a group. For the randomly assigned pair <math>(a,x)</math>, we observe the outcome <math>y</math> by letting the environment (nature) simulate and sample from <math>p^*(y|a,x)</math>. This process is a ‘trial’, where we try the action <math>a</math> on the subject <math>x</math> by administering <math>a</math> to <math>x</math>. Putting all these together, we call this process of collecting data from <math>\overline{G}</math> a ''randomized controlled trial'' (RCT).

It is important to emphasize that <math>x</math> does not need to be recorded or even fully known, and we end up with

<math display="block">
\begin{align}
D = \left\{ (a_1, y_1), \ldots, (a_N, y_N) \right\}.
\end{align}
</math>
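The data collection procedure described above can be sketched as a small simulation. This is only an illustration under stated assumptions: the table <code>P_Y_GIVEN_A_X</code>, the value <code>P_X1</code>, the binary encoding of <math>x</math>, <math>a</math> and <math>y</math>, and the function name <code>run_rct</code> are all hypothetical and do not come from the text. In a real trial, nature supplies <math>p(x)</math> and <math>p^*(y|a,x)</math> and we never see them.

```python
import random

# Hypothetical ground truth p*(y=1 | a, x), hidden from the experimenter.
# Assumed encoding: x=1 is a high-risk subject, a=1 is the vaccine arm,
# y=1 means the subject was infected.
P_Y_GIVEN_A_X = {(0, 0): 0.2, (0, 1): 0.6, (1, 0): 0.1, (1, 1): 0.3}
P_X1 = 0.5  # assumed share of high-risk subjects in the population


def run_rct(n, p_treat=0.5, seed=0):
    """Collect D = [(a_1, y_1), ..., (a_n, y_n)] according to the severed graph."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = int(rng.random() < P_X1)      # recruit: nature draws x ~ p(x)
        a = int(rng.random() < p_treat)   # randomize: a is drawn without looking at x
        y = int(rng.random() < P_Y_GIVEN_A_X[(a, x)])  # nature draws y ~ p*(y | a, x)
        data.append((a, y))               # x is discarded and never recorded
    return data


D = run_rct(10_000)
print(len(D), sum(a for a, _ in D) / len(D))
```

Note that <code>x</code> exists only inside the loop body, mirroring the fact that the trial records <math>(a_n, y_n)</math> pairs and nothing else.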

Using this dataset, we can now approximate the expected outcome under the intervention as

<math display="block">
\begin{align}
\mathbb{E}_G\left[ y | \mathrm{do}(a=\hat{a})\right]
&=
\mathbb{E}_{\overline{G}} \left[ y | a = \hat{a}\right]
\\
&=
\sum_{y} y
\frac{\sum_{x} p(x) \cancel{\overline{p}(\hat{a})} p(y | \hat{a}, x)}{\cancel{\overline{p}(\hat{a})}}
\\
&=
\sum_{x} \sum_{y} y \, p(x) p(y | \hat{a}, x)
\\
&\approx
\frac{\sum_{n=1}^N \mathds{1}(a_n = \hat{a}) y_n}{\sum_{n'=1}^N \mathds{1}(a_{n'} = \hat{a})},
\end{align}
</math>

because <math>x_n \sim p(x)</math>, <math>a_n \sim \overline{p}(a)</math> and <math>y_n \sim p(y|a_n, x_n)</math>. As is evident from the final line, we do not need to know the confounder <math>x</math>, which means that an RCT avoids the issue of unobserved, or even unknown, confounders. Furthermore, the estimate does not involve <math>\overline{p}(a)</math>, implying that we can use an arbitrary mechanism to randomly assign each subject to a treatment option, as long as we do not condition it on the confounder <math>x</math> and every option is assigned with non-zero probability. If we have strong confidence in the effectiveness of a newly developed vaccine, for instance, we could choose <math>\overline{p}(a)</math> to be skewed toward the treatment (<math>a=1</math>).
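The final line of the derivation is simply the sample mean of <math>y_n</math> within the arm <math>a_n = \hat{a}</math>. The following sketch checks this estimator against a simulated trial, once with a uniform <math>\overline{p}(a)</math> and once with an assignment skewed toward the treatment. The ground-truth table, <code>P_X1</code> and all function names are assumptions made for this illustration, not part of the text.

```python
import random

# Hypothetical ground truth p*(y=1 | a, x); unknown in a real trial.
P_Y_GIVEN_A_X = {(0, 0): 0.2, (0, 1): 0.6, (1, 0): 0.1, (1, 1): 0.3}
P_X1 = 0.5  # assumed p(x=1)


def run_rct(n, p_treat, seed=0):
    """Simulate a trial in which a is drawn independently of x."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = int(rng.random() < P_X1)
        a = int(rng.random() < p_treat)
        y = int(rng.random() < P_Y_GIVEN_A_X[(a, x)])
        data.append((a, y))  # only (a, y) is kept
    return data


def estimate_do(data, a_hat):
    """Sample mean of y over the records with a_n == a_hat."""
    ys = [y for a, y in data if a == a_hat]
    return sum(ys) / len(ys)


def true_do(a_hat):
    """Exact sum_x p(x) E[y | a_hat, x] under the assumed ground truth."""
    return ((1 - P_X1) * P_Y_GIVEN_A_X[(a_hat, 0)]
            + P_X1 * P_Y_GIVEN_A_X[(a_hat, 1)])


for p_treat in (0.5, 0.8):  # uniform assignment, then skewed toward a=1
    D = run_rct(40_000, p_treat)
    for a_hat in (0, 1):
        print(p_treat, a_hat, round(estimate_do(D, a_hat), 3), true_do(a_hat))
```

Under these assumptions both assignment distributions target the same quantities, about 0.4 for <math>\hat{a}=0</math> and 0.2 for <math>\hat{a}=1</math>. A skewed assignment leaves fewer subjects in one arm, so the estimate for that arm is noisier, but it is not biased.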

===Important Considerations===

Perhaps the most important consideration when implementing a randomized controlled trial is to ensure that the action <math>a</math> is independent of the covariate <math>x</math>. As soon as dependence forms between <math>a</math> and <math>x</math>, the estimate of the causal effect <math>\mathbb{E}_G\left[ y | \mathrm{do}(a=\hat{a})\right]</math> becomes biased. It is however very easy for such dependence to arise without a careful design of an RCT, often due to subconscious biases of the people who implement the randomized assignment procedure.

For instance, in the case of the vaccination trial above, a doctor may subconsciously assign fewer older people to the vaccination arm<ref group="Notes" >
An ‘arm’ here refers to a ‘group’.
</ref>
simply because she is worried that vaccination may have more severe side effects on an older population. Such a subconscious decision creates a dependency between the action <math>a</math> and the covariate <math>x</math> (the age of a subject in this case), which biases the eventual estimate of the causal effect. In order to avoid such a subconscious bias in assignment, it is a common practice to automate the assignment process so that the trial administrator is not aware of the action group to which each subject was assigned.
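The effect of such a leak can be illustrated with a small simulation. In this sketch, the ground-truth table, the assignment probabilities 0.8 and 0.2, and the function names are all hypothetical. <math>x=1</math> stands for an older, higher-risk subject who is assigned to the vaccine arm less often.

```python
import random

# Hypothetical ground truth p*(y=1 | a, x); x=1 is an older, higher-risk subject.
P_Y_GIVEN_A_X = {(0, 0): 0.2, (0, 1): 0.6, (1, 0): 0.1, (1, 1): 0.3}
P_X1 = 0.5  # assumed p(x=1)


def run_trial(n, assign_prob, seed=0):
    """assign_prob(x) returns P(a=1) for a subject with covariate x."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = int(rng.random() < P_X1)
        a = int(rng.random() < assign_prob(x))
        y = int(rng.random() < P_Y_GIVEN_A_X[(a, x)])
        data.append((a, y))
    return data


def arm_mean(data, arm):
    """Sample mean of y within one arm."""
    ys = [y for a, y in data if a == arm]
    return sum(ys) / len(ys)


def effect(data):
    """Difference of arm means, E[y | a=1] - E[y | a=0]."""
    return arm_mean(data, 1) - arm_mean(data, 0)


# Proper randomization: the assignment ignores x.
randomized = run_trial(40_000, lambda x: 0.5)
# Leaky assignment: older subjects (x=1) are vaccinated less often.
leaky = run_trial(40_000, lambda x: 0.2 if x == 1 else 0.8)
print(effect(randomized), effect(leaky))
```

With these assumed numbers the true effect is <math>-0.2</math>. The leaky trial instead targets roughly <math>0.14 - 0.52 = -0.38</math>, because the vaccine arm is filled mostly with low-risk subjects, and the vaccine therefore looks more effective than it is.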

Second, we must ensure that the causal effect of the action <math>a</math> on the outcome <math>y</math> stays constant throughout the trial. More precisely, <math>p^*(y|a,x)</math> must not change throughout the trial. This may sound obvious, as it is difficult to imagine how, for instance, the effect of vaccination could change rapidly over a single trial. There are however many ways in which this fails to hold. A major way by which <math>p^*(y|a,x)</math> drifts during a trial is when participants change their behavior. Continuing the example of vaccination above, let us assume that each participant knows whether they were vaccinated and also that the pandemic is ongoing. Once participants know that they were given placebo instead of the actual vaccine, they may become more careful about their hygiene, as they are worried about potentially contracting the rampant infectious disease. Similarly, if they know they were given the actual vaccine, they may become less careful and expose themselves to more situations in which they could contract the disease. That is, the causal effect of vaccination changes due to the alteration of participants' behaviours. It is thus a common and important practice to blind participants from knowing to which treatment group they were assigned. For instance, in the case of vaccination above, we would administer saline solution via injection to control (untreated) participants so that they cannot tell whether they are being injected with the actual vaccine or placebo.

Putting these two considerations together, we end up with a ''double blind'' trial design. In a double blind trial design, neither the participant nor the trial administrator is made aware of the participant's action/treatment assignment. This helps ensure that the underlying causal effect is stationary throughout the study and that no bias creeps in due to an undesirable dependency of the action/treatment assignment on the covariate (information about the participant).
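The behavioural drift described above can be made concrete with a toy calculation. This is a deliberately crude sketch. The ground-truth table and the multiplicative risk factors in <code>RISK_FACTOR_IF_UNBLINDED</code> are invented for illustration, and real behavioural changes need not take this form.

```python
# Hypothetical ground truth p*(y=1 | a, x) when participants are blinded.
P_Y_GIVEN_A_X = {(0, 0): 0.2, (0, 1): 0.6, (1, 0): 0.1, (1, 1): 0.3}
P_X1 = 0.5  # assumed p(x=1)

# Assumed behavioural response once participants learn their arm:
# placebo recipients become more careful (risk halved),
# vaccine recipients become less careful (risk multiplied by 1.5).
RISK_FACTOR_IF_UNBLINDED = {0: 0.5, 1: 1.5}


def expected_outcome(a_hat, blinded):
    """sum_x p(x) p(y=1 | a_hat, x), with the risk rescaled if unblinded."""
    factor = 1.0 if blinded else RISK_FACTOR_IF_UNBLINDED[a_hat]
    p_x = {0: 1 - P_X1, 1: P_X1}
    return sum(p_x[x] * factor * P_Y_GIVEN_A_X[(a_hat, x)] for x in (0, 1))


def effect(blinded):
    """Infection rate under the vaccine minus infection rate under placebo."""
    return expected_outcome(1, blinded) - expected_outcome(0, blinded)


print(effect(blinded=True), effect(blinded=False))
```

Under these assumptions the blinded trial measures an effect of about <math>-0.2</math>, while the unblinded trial measures about <math>+0.1</math>: the behavioural change alone is enough to make a protective vaccine look harmful.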

The final consideration is not about designing an RCT but about interpreting the conclusion from an RCT. As we saw above, the causal effect from the RCT based on <math>\overline{G}</math> is mathematically expressed as

<math display="block">
\begin{align}
\mathbb{E}_G\left[ y | \mathrm{do}(a=\hat{a})\right]
=
\sum_{x} \sum_{y} y \, p(x) p(y | \hat{a}, x).
\end{align}
</math>

The right hand side of this equation includes <math>p(x)</math>, meaning that the causal effect is an average over the prior distribution of the covariate and therefore depends on it.

We do not have direct access to <math>p(x)</math>, but we have samples from this distribution, in the form of participants arriving and being included in the trial. That is, we have implicit access to <math>p(x)</math>. This implies that the estimated causal effect is only valid when it is used for a population that closely follows <math>p(x)</math>. If there is any shift in the population distribution itself, or if any filtering was applied to participants in the trial stage that does not apply after the trial, the estimated causal effect is no longer valid. For instance, clinical trials, such as the vaccination trial above, are often run by research-oriented and financially stable clinics, which are often located in affluent neighbourhoods. The effect of the treatment estimated from such a trial is thus more accurate for the population in such affluent neighbourhoods. This is why inclusion, that is, recruiting participants who represent the population of interest, is important in randomized controlled trials.
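The dependence on <math>p(x)</math> can be checked with a short exact calculation. In this sketch the ground-truth table and the two covariate distributions (a trial population with 20% high-risk subjects and a deployment population with 70%) are assumptions chosen purely for illustration.

```python
# Hypothetical ground truth p*(y=1 | a, x); x=1 is a high-risk subject.
P_Y_GIVEN_A_X = {(0, 0): 0.2, (0, 1): 0.6, (1, 0): 0.1, (1, 1): 0.3}


def expected_outcome(a_hat, p_x1):
    """sum_x p(x) p(y=1 | a_hat, x) for a population with p(x=1) = p_x1."""
    return ((1 - p_x1) * P_Y_GIVEN_A_X[(a_hat, 0)]
            + p_x1 * P_Y_GIVEN_A_X[(a_hat, 1)])


def average_effect(p_x1):
    """E[y | do(a=1)] - E[y | do(a=0)] for the given population."""
    return expected_outcome(1, p_x1) - expected_outcome(0, p_x1)


trial_effect = average_effect(0.2)       # assumed trial population
deployment_effect = average_effect(0.7)  # assumed population after the trial
print(trial_effect, deployment_effect)
```

With these numbers, the trial population yields an effect of about <math>-0.14</math>, whereas the deployment population would experience about <math>-0.24</math>. The mechanism <math>p^*(y|a,x)</math> is identical in both cases; only <math>p(x)</math> differs.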

Overall, a successful RCT requires the following conditions to be met:

<ul><li> Randomization: the action distribution must be independent of the covariate.
</li>
<li> Stationarity of the causal effect: the causal effect must be stable throughout the trial.
</li>
<li> Stationarity of the population: the covariate distribution must not change during and after the trial.
</li>
</ul>

As long as these three conditions are met, an RCT provides us with an opportunity to cope with unobserved confounders.

==General references==

{{cite arXiv|last1=Cho|first1=Kyunghyun|year=2024|title=A Brief Introduction to Causal Inference in Machine Learning|eprint=2405.08793|class=cs.LG}}

==Notes==

{{Reflist|group=Notes}}

Latest revision as of 12:31, 19 May 2024
