An autoregressive language model defines the probability of a sequence as a repeated application of the next-token conditional probability:

<math display="block">
\begin{align}
p(w_1, w_2, \ldots, w_T) = \prod_{t=1}^T p(w_t | w_{<t}).
\end{align}
</math>

A conditional autoregressive language model is exactly the same except that it is conditioned on another variable <math>x</math>:

<math display="block">
\begin{align}
p(w_1, w_2, \ldots, w_T | x) = \prod_{t=1}^T p(w_t | w_{<t}, x).
\end{align}
</math>

There are many different ways to build a neural network that implements the next-token conditional distribution. We do not discuss any of those approaches, as they are outside the scope of this course.

An interesting property of a language model is that it can be used for two purposes:

<ul><li> Scoring a sequence: we can use <math>p(w_1, w_2, \ldots, w_T | x)</math> to score an answer sequence <math>w</math> given a query <math>x</math>.
</li>
<li> Approximately finding the best sequence: we can use approximate decoding to find <math>\arg\max_w p(w | x)</math>.
</li>
</ul>
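The two uses listed above can be sketched with a toy model. This is only an illustration under stated assumptions: the lookup table <code>TABLE</code>, the vocabulary, the query <code>"q"</code> and the choice of greedy search as the approximate decoder are all made up here, and a real language model would replace <code>next_token_probs</code> with a neural network.

```python
import math

EOS = "<eos>"

# Hypothetical next-token distributions p(w_t | w_{<t}, x). For brevity this
# toy model looks only at the query x and the previous token.
TABLE = {
    ("q", None): {"yes": 0.6, "no": 0.4},
    ("q", "yes"): {"indeed": 0.7, EOS: 0.3},
    ("q", "no"): {EOS: 1.0},
    ("q", "indeed"): {EOS: 1.0},
}


def next_token_probs(prefix, x):
    """Return the (toy) distribution over the next token given prefix and x."""
    prev = prefix[-1] if prefix else None
    return TABLE[(x, prev)]


def log_score(w, x):
    """Scoring: log p(w | x) = sum_t log p(w_t | w_{<t}, x)."""
    total = 0.0
    for t, token in enumerate(w):
        total += math.log(next_token_probs(w[:t], x)[token])
    return total


def greedy_decode(x, max_len=10):
    """Approximate argmax_w p(w | x): take the most likely token at each step."""
    w = []
    for _ in range(max_len):
        probs = next_token_probs(w, x)
        token = max(probs, key=probs.get)
        w.append(token)
        if token == EOS:
            break
    return w
```

Greedy search is only one form of approximate decoding; it is not guaranteed to find the true maximizer in general.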

This allows us to perform causal inference and outcome maximization simultaneously.

Consider the problem of query-based text generation, where the goal is to produce an open-ended answer <math>w</math> to a query <math>x</math>. Because it is often impossible to give an absolute score to the answer <math>w</math> given a query <math>x</math>, it is customary to ask a human annotator for a relative ranking between two (or more) answers <math>w_+</math> and <math>w_-</math> given a query <math>x</math>. Without loss of generality, let <math>w_+</math> be the answer preferred over <math>w_-</math>.

We assume that there exists a strict total order among all possible answers. That is, writing <math>r(w|x)</math> for the (unobserved) rating of an answer <math>w</math> given a query <math>x</math>, the order is

<ul><li> Irreflexive: <math>r(w|x) < r(w|x)</math> cannot hold.
</li>
<li> Asymmetric: If <math>r(w|x) < r(w'|x)</math>, then <math>r(w|x) > r(w'|x)</math> cannot hold.
</li>
<li> Transitive: If <math>r(w|x) < r(w'|x)</math> and <math>r(w'|x) < r(w''|x)</math>, then <math>r(w|x) < r(w''|x)</math>.
</li>
<li> Connected: If <math>w \neq w'</math>, then either <math>r(w|x) < r(w'|x)</math> or <math>r(w|x) > r(w'|x)</math> holds.
</li>
</ul>

In other words, we can place all possible answers on a one-dimensional line according to their (unobserved) ratings.
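As a small illustration of this assumption, suppose the ratings were observed as real numbers (they are not in practice; the dictionary below is hypothetical). Comparing real numbers with <code><</code> is automatically irreflexive, asymmetric and transitive, so the only property left to check is connectedness, that is, the absence of ties.

```python
def is_strict_total_order(ratings):
    """True if no two distinct answers share a rating (connectedness)."""
    values = list(ratings.values())
    return len(set(values)) == len(values)


def rank_answers(ratings):
    """Arrange answers on a line, from lowest to highest rating."""
    if not is_strict_total_order(ratings):
        raise ValueError("tied ratings violate the strict total order")
    return sorted(ratings, key=ratings.get)


# Hypothetical ratings r(w | x) for three answers to one query.
ratings = {"answer a": 0.2, "answer b": 0.9, "answer c": 0.5}
ordering = rank_answers(ratings)
```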

'''A non-causal approach.'''

It is then relatively straightforward to train this language model, assuming that we have a large number of triplets

<math display="block">
D=\left\{(x^1, w^1_+, w^1_-), \ldots, (x^N, w^N_+, w^N_-)\right\}.
</math>

For each triplet, we ensure that the language model puts a higher probability on <math>w_+</math> than on <math>w_-</math> given <math>x</math> by minimizing the following loss function:

<math display="block">
\begin{align}
L_{\mathrm{pairwise}}(p) =
\frac{1}{N}
\sum_{n=1}^N
\max(0, m-\log p(w^n_+|x^n) + \log p(w^n_- | x^n)),
\end{align}
</math>

where <math>m \in [0, \infty)</math> is a margin hyperparameter. For each triplet, the term inside the summation is zero when the log-probability that the language model assigns to <math>w_+</math> exceeds that assigned to <math>w_-</math> by at least the margin <math>m</math>.
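A minimal sketch of this loss is given below. It assumes that the sequence log-probabilities have already been computed by some language model and are passed in as plain lists; the function name and the numbers are illustrative only.

```python
def pairwise_margin_loss(logp_pos, logp_neg, margin=1.0):
    """Average of max(0, m - log p(w+ | x) + log p(w- | x)) over N triplets.

    logp_pos[n] and logp_neg[n] are the log-probabilities of the preferred
    and the dispreferred answer of the n-th triplet.
    """
    if len(logp_pos) != len(logp_neg) or not logp_pos:
        raise ValueError("need two non-empty lists of equal length")
    terms = [max(0.0, margin - lp + ln) for lp, ln in zip(logp_pos, logp_neg)]
    return sum(terms) / len(terms)


# Made-up log-probabilities for N = 2 triplets.
# Triplet 1: gap of 2.0 >= margin, so it contributes 0.
# Triplet 2: gap of 0.5 < margin, so it contributes 0.5.
loss = pairwise_margin_loss([-1.0, -2.0], [-3.0, -2.5], margin=1.0)
```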

This loss alone is, however, not enough to train a language model from which we can produce a high-quality answer, because the pairwise preference triplets cover reasonable answers only. A language model trained in this way is not encouraged to put low probabilities on gibberish. We avoid this issue by ensuring that the language model puts reasonably high probabilities on all reasonable answers by minimizing the following extra loss function:

<math display="block">
\begin{align}
L_{\mathrm{likelihood}}(p) =
-
\frac{1}{2N}
\sum_{n=1}^N
\left(
\log p(w^n_+ | x^n)
+
\log p(w^n_- | x^n)
\right),
\end{align}
</math>

which corresponds to the so-called negative log-likelihood loss.
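The following sketch computes this loss from precomputed log-probabilities (the inputs and numbers are made up). It also illustrates why the extra term matters: lowering every log-probability by the same amount leaves all pairwise differences unchanged, so a pairwise loss cannot notice it, whereas the likelihood loss increases.

```python
def likelihood_loss(logp_pos, logp_neg):
    """-(1 / 2N) * sum_n [log p(w+^n | x^n) + log p(w-^n | x^n)]."""
    if len(logp_pos) != len(logp_neg) or not logp_pos:
        raise ValueError("need two non-empty lists of equal length")
    total = sum(lp + ln for lp, ln in zip(logp_pos, logp_neg))
    return -total / (2 * len(logp_pos))


# Made-up log-probabilities for N = 2 triplets.
logp_pos = [-1.0, -2.0]
logp_neg = [-3.0, -2.5]
base = likelihood_loss(logp_pos, logp_neg)

# Shift all log-probabilities down by 10, as if probability mass had moved
# away from every reasonable answer. The differences logp_pos - logp_neg stay
# the same, but the likelihood loss rises by exactly 10.
shifted = likelihood_loss(
    [v - 10.0 for v in logp_pos],
    [v - 10.0 for v in logp_neg],
)
```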

'''A causal consideration.'''

This approach works well under the assumption that it is only the content that is embedded in the answer <math>w</math>. This is unfortunately not the case. Any answer is a combination of the content and the style, and the latter should not be the basis on which the answer is rated. For instance, one aspect of style is verbosity. Often, a longer answer is rated more highly because of a human rater's subconscious belief that a better answerer would write a longer answer, although there is no reason why a better answer could not also be more concise.

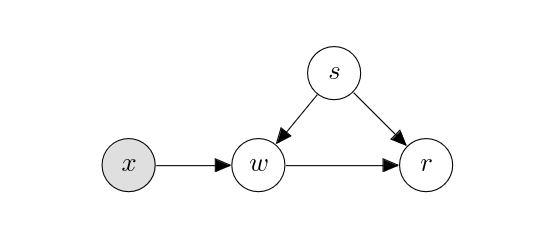

This process can be described as the graph below, where <math>r</math> is the rating and <math>s</math> is the style:

<div class="d-flex justify-content-center">
[[File:tikz6a86d.png | 400px | thumb | ]]
</div>

The direct effect of <math>w</math> on the rating <math>r</math> is based on the content, but there is also a spurious correlation between <math>w</math> and <math>r</math> via the style <math>s</math>. For instance, <math>s</math> could encode the verbosity, which affects both how <math>w</math> is written and how a human rater perceives the quality and gives the rating <math>r</math>. In the naive approach above, the language model, as a scorer, will fail to distinguish between these two and capture both, which is clearly undesirable; a longer answer is not necessarily a better answer. In other words, a language model <math>p_0</math> trained in the purely supervised way above will score <math>w</math> high for both causal and spurious (via <math>s</math>) reasons. An answer <math>w</math> sampled from <math>p_0</math> can then be considered dependent not only on the question <math>x</math> itself but also on an unobserved style variable <math>s</math>.

<b>Direct preference optimization<ref name="rafailov2024direct">{{cite journal||last1=Rafailov|first1=Rafael|last2=Sharma|first2=Archit|last3=Mitchell|first3=Eric|last4=Manning|first4=Christopher D|last5=Ermon|first5=Stefano|last6=Finn|first6=Chelsea|journal=Advances in Neural Information Processing Systems|year=2024|title=Direct preference optimization: Your language model is secretly a reward model|volume=36}}</ref> or unlikelihood learning<ref name="welleck2019neural">{{cite journal||last1=Welleck|first1=Sean|last2=Kulikov|first2=Ilia|last3=Roller|first3=Stephen|last4=Dinan|first4=Emily|last5=Cho|first5=Kyunghyun|last6=Weston|first6=Jason|journal=arXiv preprint arXiv:1908.04319|year=2019|title=Neural text generation with unlikelihood training}}</ref></b>

We can resolve this issue by combining two ideas we have studied earlier: randomized controlled trials (RCT; [[guide:Eb6d7b34e1#sec:randomized-controlled-trials |Randomized Controlled Trials]]) and inverse probability weighting (IPW; [[guide:5fcedc2288#sec:ipw |Inverse Probability Weighting]]). First, we sample two answers, <math>w</math> and <math>w'</math>, from the model <math>p_0</math> that has already been trained with the supervised learning procedure above:

<math display="block">
\begin{align}
w, w' \sim p_0(w|x).
\end{align}
</math>

These two answers (approximately) maximize the estimated outcome (rating) by capturing both the content and style.

One interesting side-effect of imperfect learning and inference (generation) is that both of these answers would largely share the style. If we use <math>s'</math> to denote that style, we can think of each answer as sampled from <math>w | x, s'</math>.

With a new language model <math>p_1</math> (potentially initialized from <math>p_0</math>), we can compute the rating after removing the dependence on the style <math>s</math> by IPW:

<math display="block">
\begin{align}
\hat{r}(w|x) = \frac{p_1(w|x)}{p_0(w|x)}.
\end{align}
</math>
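A minimal sketch of this ratio is shown below. Working with log-probabilities is a design choice made here (not stated in the text) because sequence probabilities are typically far too small to divide directly; the function names and numbers are illustrative.

```python
import math


def log_ipw_rating(logp1, logp0):
    """log r_hat(w | x) = log p_1(w | x) - log p_0(w | x)."""
    return logp1 - logp0


def ipw_rating(logp1, logp0):
    """r_hat(w | x) = p_1(w | x) / p_0(w | x), computed via the log-ratio."""
    return math.exp(log_ipw_rating(logp1, logp0))


# If p_1 is initialized from p_0, every answer starts with a rating of 1.
initial = ipw_rating(math.log(0.01), math.log(0.01))

# After training, p_1 may put twice as much mass on an answer as p_0 did.
doubled = ipw_rating(math.log(0.02), math.log(0.01))
```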

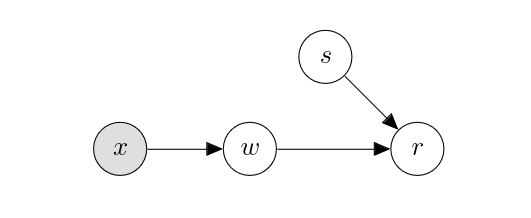

This is reminiscent of the <math>\mathrm{do}</math> operator, resulting in the following modified graph:

<div class="d-flex justify-content-center">
[[File:tikza8b6b.png | 400px | thumb | ]]
</div>

Of course, this score <math>\hat{r}</math> is not yet meaningful, since <math>p_1</math> has not been trained yet. We have to train <math>p_1</math> by asking an expert to provide their preference between <math>w</math> and <math>w'</math>. Without loss of generality, let <math>w</math> be the preferred answer over <math>w'</math>. That is, <math>w_+=w</math> and <math>w_-=w'</math>. We train <math>p_1</math> by minimizing

<math display="block">
\begin{align}
L'_{\mathrm{pairwise}}(p_1) =
\frac{1}{N}
\sum_{n=1}^N
\max\left(0, m-\log \frac{p_1(w^n_+|x^n)}{p_0(w^n_+|x^n)} +
\log \frac{p_1(w^n_- | x^n)}{p_0(w^n_- | x^n)}
\right),
\end{align}
</math>

where we assume that we have <math>N</math> pairs and <math>m</math> is a margin as before. It is possible to replace the margin loss with another loss function, such as a log loss or linear loss.
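The sketch below evaluates this loss from precomputed log-probabilities under both models, together with one possible log-loss variant. The logistic form <code>log(1 + exp(-gap))</code> is an assumption about what "log loss" means here, and the tuple layout, function names and numbers are illustrative only.

```python
import math


def log_ratio_gap(pair):
    """log[p1(w+)/p0(w+)] - log[p1(w-)/p0(w-)] for one preference pair.

    pair = (logp1_pos, logp0_pos, logp1_neg, logp0_neg).
    """
    logp1_pos, logp0_pos, logp1_neg, logp0_neg = pair
    return (logp1_pos - logp0_pos) - (logp1_neg - logp0_neg)


def ratio_margin_loss(pairs, margin=1.0):
    """Margin loss on the log-ratio gap, averaged over N pairs."""
    return sum(max(0.0, margin - log_ratio_gap(p)) for p in pairs) / len(pairs)


def ratio_log_loss(pairs):
    """Assumed logistic alternative: mean of log(1 + exp(-gap))."""
    def softplus(z):
        # Numerically stable log(1 + exp(z)).
        return max(z, 0.0) + math.log1p(math.exp(-abs(z)))
    return sum(softplus(-log_ratio_gap(p)) for p in pairs) / len(pairs)


# p_1 identical to p_0: the gap is 0, so the margin loss equals the margin.
untrained = [(-2.0, -2.0, -3.0, -3.0)]
# p_1 raised w+ and lowered w- relative to p_0: the gap is 1.5.
trained = [(-1.0, -2.0, -3.0, -2.5)]
```

With these made-up numbers, <code>ratio_margin_loss(untrained)</code> equals the margin and <code>ratio_margin_loss(trained)</code> is zero, since the gap of 1.5 exceeds the margin of 1.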

This procedure encourages <math>p_1</math> to capture only the direct (causal) effect of the answer on the rating, dissecting out the indirect (spurious) effect via the style <math>s</math>. Once training is done, we use <math>p_1</math> to produce a better answer, which depends less on the spurious correlation between the answer and the rating via the style.

Because this procedure accounts for the existence of, and the dependence on, the style only implicitly, it can be beneficial to repeat it for multiple rounds in order to further remove the effect of the spurious correlation and improve the quality of a generated answer<ref name="ouyang2022training">{{cite journal||last1=Ouyang|first1=Long|last2=Wu|first2=Jeffrey|last3=Jiang|first3=Xu|last4=Almeida|first4=Diogo|last5=Wainwright|first5=Carroll|last6=Mishkin|first6=Pamela|last7=Zhang|first7=Chong|last8=Agarwal|first8=Sandhini|last9=Slama|first9=Katarina|last10=Ray|first10=Alex|last11=others|journal=Advances in Neural Information Processing Systems|year=2022|title=Training language models to follow instructions with human feedback|volume=35}}</ref>.

==General references==

{{cite arXiv|last1=Cho|first1=Kyunghyun|year=2024|title=A Brief Introduction to Causal Inference in Machine Learning|eprint=2405.08793|class=cs.LG}}

==References==

{{reflist}}

Latest revision as of 23:09, 19 May 2024
