guide:D9c33cd067: Difference between revisions

No edit summary |

mNo edit summary |

||

| (2 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

<div class="d-none"><math> | <div class="d-none"><math> | ||

\newcommand{\smallfrac}[2]{\frac{#1}{#2}} | |||

\newcommand{\medfrac}[2]{\frac{#1}{#2}} | |||

\newcommand{\textfrac}[2]{\frac{#1}{#2}} | |||

\newcommand{\smallfrac}[2] | |||

\newcommand{\medfrac}[2] | |||

\newcommand{\textfrac}[2] | |||

\newcommand{\tr}{\operatorname{tr}} | \newcommand{\tr}{\operatorname{tr}} | ||

\newcommand{\e}{\operatorname{e}} | \newcommand{\e}{\operatorname{e}} | ||

\newcommand{\B}{\operatorname{B}} | \newcommand{\B}{\operatorname{B}} | ||

\newcommand{\Bbar}{\ | \newcommand{\Bbar}{\overline{\operatorname{B}}} | ||

\newcommand{\pr}{\operatorname{pr}} | \newcommand{\pr}{\operatorname{pr}} | ||

\newcommand{\dd}{\operatorname{d}\hspace{-1pt}} | \newcommand{\dd}{\operatorname{d}\hspace{-1pt}} | ||

| Line 33: | Line 12: | ||

\newcommand{\V}{\operatorname{V}} | \newcommand{\V}{\operatorname{V}} | ||

\newcommand{\Cov}{\operatorname{Cov}} | \newcommand{\Cov}{\operatorname{Cov}} | ||

\newcommand{\Bigsum}[2] | \newcommand{\Bigsum}[2]{\mathop{\textstyle\sum}_{#1}^{#2}} | ||

\newcommand{\ran}{\operatorname{ran}} | \newcommand{\ran}{\operatorname{ran}} | ||

\newcommand{\card}{\#} | \newcommand{\card}{\#} | ||

\ | \renewcommand{\P}{\operatorname{P}} | ||

\renewcommand{\L}{\operatorname{L}} | |||

\newcommand{\mathds}{\mathbb} | |||

</math> | |||

</div> | |||

In this chapter we denote by <math>\lambda^d</math> the <math>d</math>-dimensional Lebesgue measure on <math>\mathbb{R}^d</math>. The euclidean norm we denote by <math>\|\cdot\|</math> and balls with respect to this norm are denoted by <math>\B_r(x)</math> and <math>\Bbar_r(x)</math> respectively. | In this chapter we denote by <math>\lambda^d</math> the <math>d</math>-dimensional Lebesgue measure on <math>\mathbb{R}^d</math>. The euclidean norm we denote by <math>\|\cdot\|</math> and balls with respect to this norm are denoted by <math>\B_r(x)</math> and <math>\Bbar_r(x)</math> respectively. | ||

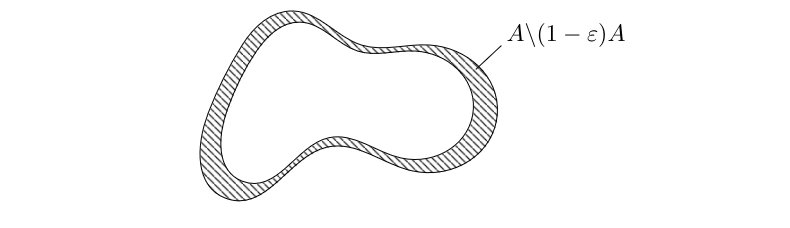

We start with the following observation. Let <math>A\subseteq\mathbb{R}^d</math> be a measurable star-shaped set and assume that zero is a center. Then <math>(1-\epsilon)A\subseteq A</math> and we can interpret <math>A\backslash(1-\epsilon)A</math> as that part of <math>A</math> that is close to its surface. | We start with the following observation. Let <math>A\subseteq\mathbb{R}^d</math> be a measurable star-shaped set and assume that zero is a center. Then <math>(1-\epsilon)A\subseteq A</math> and we can interpret <math>A\backslash(1-\epsilon)A</math> as that part of <math>A</math> that is close to its surface. | ||

<div class="d-flex justify-content-center"> | |||

[[File:tikzcacb22a4.png | 400px | thumb |Volume close to the surface of a ‘nice’ subset of <math>\mathbb{R}^2</math>.]] | |||

[[File:tikzcacb22a4.png | 400px | thumb | | </div> | ||

If we compute the measure of the latter set, then we get | If we compute the measure of the latter set, then we get | ||

| Line 68: | Line 40: | ||

</math> | </math> | ||

which will be close to one if <math>\epsilon</math> is small and <math>d</math> is large. This means that in this case the measure of <math>A</math> is located close to the surface of <math>A</math> whereas its center contributes only little to its volume. Our first aim in this section is to quantify the latter observation in the case that <math>A</math> is the unit ball. | which will be close to one if <math>\epsilon</math> is small and <math>d</math> is large. This means that in this case the measure of <math>A</math> is located close to the surface of <math>A</math> whereas its center contributes only little to its volume. Our first aim in this section is to quantify the latter observation in the case that <math>A</math> is the unit ball. | ||

{{proofcard|Lemma|LEM-9-1|For <math>d\geqslant 1</math> and <math>0 < \epsilon\leqslant1</math> we have <math>(1-\epsilon)^d\leqslant \exp(-\epsilon d)</math>. | {{proofcard|Lemma|LEM-9-1|For <math>d\geqslant 1</math> and <math>0 < \epsilon\leqslant1</math> we have <math>(1-\epsilon)^d\leqslant \exp(-\epsilon d)</math>. | ||

|Define <math>f\colon[0,1]\rightarrow\mathbb{R}</math> via <math>f(x)=\exp(-x)+x-1</math>. Then <math>f(0)=0</math> and <math>f'(x)=1-\exp(-x)\geqslant0</math> holds for all <math>x\in[0,1]</math>. Therefore <math>f(x)\geqslant0</math> and thus <math>\exp(-x)\geqslant1-x\geqslant0</math> holds for all <math>x\in[0,1]</math>. Evaluating at <math>x=\epsilon</math> and taking the <math>d</math>--th power finishes the proof.}} | |Define <math>f\colon[0,1]\rightarrow\mathbb{R}</math> via <math>f(x)=\exp(-x)+x-1</math>. Then <math>f(0)=0</math> and <math>f'(x)=1-\exp(-x)\geqslant0</math> holds for all <math>x\in[0,1]</math>. Therefore <math>f(x)\geqslant0</math> and thus <math>\exp(-x)\geqslant1-x\geqslant0</math> holds for all <math>x\in[0,1]</math>. Evaluating at <math>x=\epsilon</math> and taking the <math>d</math>--th power finishes the proof.}} | ||

| Line 79: | Line 51: | ||

</math> | </math> | ||

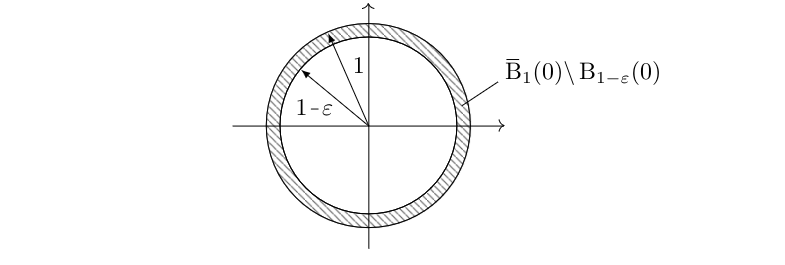

i.e., at least <math>1-\exp(-\epsilon d)</math> fraction of the volume of the <math>d</math>-dimensional unit ball is located <math>\epsilon</math>-close to its surface. | i.e., at least <math>1-\exp(-\epsilon d)</math> fraction of the volume of the <math>d</math>-dimensional unit ball is located <math>\epsilon</math>-close to its surface. | ||

<div class{{=}}"d-flex justify-content-center"> | |||

[[File:tikz4262b634.png | 400px | thumb | Volume close to the surface of the unit ball. ]] | |||

</div> | |||

[[File:tikz4262b634.png | 400px | thumb | | |||

|We compute | |We compute | ||

| Line 125: | Line 92: | ||

</math> | </math> | ||

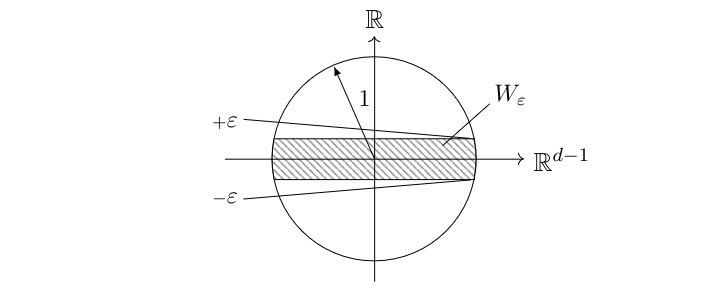

where <math>W_{\epsilon}=\bigl\{(x_1,\dots,x_d)\in \Bbar_1(0)\:;\:|x_1|\leqslant\epsilon\bigr\}</math>. | where <math>W_{\epsilon}=\bigl\{(x_1,\dots,x_d)\in \Bbar_1(0)\:;\:|x_1|\leqslant\epsilon\bigr\}</math>. | ||

<div class{{=}}"d-flex justify-content-center"> | |||

[[File:tikz62fda0be.png | 400px | thumb | Most of the volume is located close to the equator of the unit ball. ]] | |||

</div>|We first observe that the statement is trivial for <math>\epsilon\geqslant1</math>, so we may assume <math>\epsilon < 1</math> for the rest of the proof. We now compute the volume of that part | |||

[[File:tikz62fda0be.png | 400px | thumb | | |||

|We first observe that the statement is trivial for <math>\epsilon\geqslant1</math>, so we may assume <math>\epsilon < 1</math> for the rest of the proof. We now compute the volume of that part | |||

<math display="block"> | <math display="block"> | ||

| Line 150: | Line 110: | ||

</math> | </math> | ||

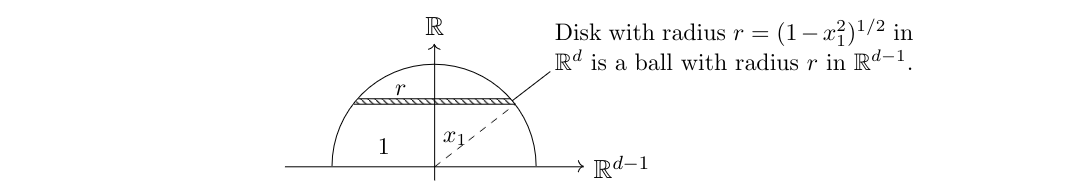

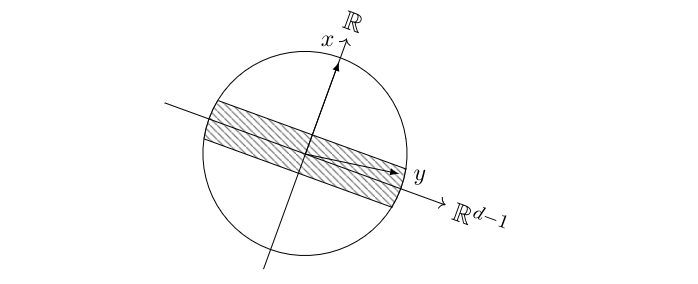

where the ball is taken in <math>\mathbb{R}^{d-1}</math>, compare the picture below. | where the ball is taken in <math>\mathbb{R}^{d-1}</math>, compare the picture below. | ||

<div class{{=}}"d-flex justify-content-center"> | |||

[[File:tikz53fe5393.png | 400px | thumb | Slice of the unit ball <math>\Bbar_1(0)</math>. ]] | |||

[[File:tikz53fe5393.png | 400px | thumb | | </div> | ||

Now we employ Cavalieri's Principle and get | Now we employ Cavalieri's Principle and get | ||

<span id="Caval-1"/> | <span id{{=}}"Caval-1"/> | ||

<math display="block"> | <math display="block"> | ||

\begin{equation}\label{Caval-1} | \begin{equation}\label{Caval-1} | ||

| Line 171: | Line 128: | ||

\end{equation} | \end{equation} | ||

</math> | </math> | ||

Next we estimate the integral at the end of \eqref{Caval-1} in two ways. Firstly, we put <math>\delta=0</math> and observe that <math>0\leqslant x_1\leqslant1/\sqrt{d-1}</math> implies <math>x_1^2\leqslant1/(d-1)</math>. This in turn leads to <math>1-1/(d-1)\leqslant 1-x_1^2</math> which implies <math>(1-1/(d-1))^{(d-1)/2}\leqslant (1-x_1^2)^{(d-1)/2}</math>. It follows | Next we estimate the integral at the end of \eqref{Caval-1} in two ways. Firstly, we put <math>\delta=0</math> and observe that <math>0\leqslant x_1\leqslant1/\sqrt{d-1}</math> implies <math>x_1^2\leqslant1/(d-1)</math>. This in turn leads to <math>1-1/(d-1)\leqslant 1-x_1^2</math> which implies <math>(1-1/(d-1))^{(d-1)/2}\leqslant (1-x_1^2)^{(d-1)/2}</math>. It follows | ||

<span id="Caval-2"/> | <span id{{=}}"Caval-2"/> | ||

<math display="block"> | <math display="block"> | ||

\begin{equation}\label{Caval-2} | \begin{equation}\label{Caval-2} | ||

| Line 189: | Line 145: | ||

where we used Bernoulli's inequality in the last estimate as <math>(d-1)/2\geqslant1</math> holds due to our assumption <math>d\geqslant3</math>. Secondly, we estimate the integral for <math>\delta=\epsilon > 0</math> from above. We employ <math>1-x_1^2\leqslant \exp(-x_1^2)</math> from [[#LEM-9-2 |Lemma]] and <math>x_1/\epsilon\geqslant1</math> to get | where we used Bernoulli's inequality in the last estimate as <math>(d-1)/2\geqslant1</math> holds due to our assumption <math>d\geqslant3</math>. Secondly, we estimate the integral for <math>\delta=\epsilon > 0</math> from above. We employ <math>1-x_1^2\leqslant \exp(-x_1^2)</math> from [[#LEM-9-2 |Lemma]] and <math>x_1/\epsilon\geqslant1</math> to get | ||

<span id="Caval-3"/> | <span id{{=}}"Caval-3"/> | ||

<math display="block"> | <math display="block"> | ||

\begin{equation}\label{Caval-3} | \begin{equation}\label{Caval-3} | ||

| Line 200: | Line 156: | ||

\end{equation} | \end{equation} | ||

</math> | </math> | ||

Combining \eqref{Caval-1}--\eqref{Caval-3} we obtain | Combining \eqref{Caval-1}--\eqref{Caval-3} we obtain | ||

| Line 223: | Line 178: | ||

|The function <math>f\colon(0,\infty)\rightarrow\mathbb{R}</math>, <math>f(a)=\frac{2}{a}\exp(-\frac{a^2}{2})</math> is strictly decreasing as both of its factors are strictly decreasing and strictly positive. We have <math>f(1)=2/\sqrt{e} > 1</math> and <math>f(2)=1/\e^2 < 1</math>. Consequently there is <math>a_0 > 0</math> such that <math>f(a_0)=1</math> and necessarily <math>1 < a_0 < 1</math>. For <math>a > a_0</math> we get <math>f(a) < 1</math> and for <math>a < a_0</math> we get <math>f(a) > 1</math>, so <math>a_0</math> is unique.}} | |The function <math>f\colon(0,\infty)\rightarrow\mathbb{R}</math>, <math>f(a)=\frac{2}{a}\exp(-\frac{a^2}{2})</math> is strictly decreasing as both of its factors are strictly decreasing and strictly positive. We have <math>f(1)=2/\sqrt{e} > 1</math> and <math>f(2)=1/\e^2 < 1</math>. Consequently there is <math>a_0 > 0</math> such that <math>f(a_0)=1</math> and necessarily <math>1 < a_0 < 1</math>. For <math>a > a_0</math> we get <math>f(a) < 1</math> and for <math>a < a_0</math> we get <math>f(a) > 1</math>, so <math>a_0</math> is unique.}} | ||

Substituting <math>a=\epsilon\sqrt{d-1}</math> shows that the factor in [[#WC-THM |Theorem]] is strictly positive if and only if <math>\epsilon > a_0/\sqrt{d-1}</math> holds with some <math>a_0\in(1,2)</math>, compare [[ | Substituting <math>a=\epsilon\sqrt{d-1}</math> shows that the factor in [[#WC-THM |Theorem]] is strictly positive if and only if <math>\epsilon > a_0/\sqrt{d-1}</math> holds with some <math>a_0\in(1,2)</math>, compare [[exercise:1cae9d071a |Problem]]. That is, as bigger as we choose the dimension, the smaller we can make <math>\epsilon > 0</math>. | ||

We however ''cannot'' let <math>\epsilon</math> tend to zero for a fixed dimension <math>d</math>. The interpretation of [[#WC-THM |Theorem]], i.e., that <math>1-\frac{2}{\epsilon\sqrt{d-1}}\exp({-\frac{\epsilon^2(d-1)}{2}})</math> fraction of the volume of the <math>d</math>-dimensional unit ball is located <math>\epsilon</math>-close to the equator, is only valid if <math>\epsilon</math> is not too small. Although this might right now be fairly obvious, we point out that in contrast to the ‘typical epsilontics’, <math>\epsilon > 0</math> cannot be thought of being arbitrarily small, but what we can choose for it depends on the space's dimension to be large enough. | We however ''cannot'' let <math>\epsilon</math> tend to zero for a fixed dimension <math>d</math>. The interpretation of [[#WC-THM |Theorem]], i.e., that <math>1-\frac{2}{\epsilon\sqrt{d-1}}\exp({-\frac{\epsilon^2(d-1)}{2}})</math> fraction of the volume of the <math>d</math>-dimensional unit ball is located <math>\epsilon</math>-close to the equator, is only valid if <math>\epsilon</math> is not too small. Although this might right now be fairly obvious, we point out that in contrast to the ‘typical epsilontics’, <math>\epsilon > 0</math> cannot be thought of being arbitrarily small, but what we can choose for it depends on the space's dimension to be large enough. | ||

For later use we note the following reformulation of [[#WC-THM |Theorem]]. | For later use we note the following reformulation of [[#WC-THM |Theorem]]. | ||

{{proofcard|Corollary|WC-COR|Let <math>d\geqslant3</math> and <math>\epsilon > 0</math>. Then we have | {{proofcard|Corollary|WC-COR|Let <math>d\geqslant3</math> and <math>\epsilon > 0</math>. Then we have | ||

| Line 233: | Line 188: | ||

\frac{\lambda^d(\{(x_1,\dots,x_d)\in\Bbar_1(0)\:;\:|x_1| > \epsilon\})}{\lambda^d(\Bbar_1(0))} \leqslant\smallfrac{2}{\epsilon\sqrt{d-1}}\exp\bigl({-\smallfrac{\epsilon^2(d-1)}{2}}\bigr) | \frac{\lambda^d(\{(x_1,\dots,x_d)\in\Bbar_1(0)\:;\:|x_1| > \epsilon\})}{\lambda^d(\Bbar_1(0))} \leqslant\smallfrac{2}{\epsilon\sqrt{d-1}}\exp\bigl({-\smallfrac{\epsilon^2(d-1)}{2}}\bigr) | ||

</math> | </math> | ||

for every <math>\epsilon > 0</math>. | for every <math>\epsilon > 0</math>.|In the notation of [[#WC-THM |Theorem]] we have | ||

|In the notation of [[#WC-THM |Theorem]] we have | |||

<math display="block"> | <math display="block"> | ||

| Line 281: | Line 235: | ||

holds. | holds. | ||

|We first consider the case of two points <math>x, y\in\Bbar_1(0)</math>. As we are interested only in their scalar product it should make no difference if we draw both points at random or if we treat the first point's direction as fixed (but the length still chosen at random) and draw only the second one at random. Since <math>\lambda^d(\{0\})=0</math>, the first points will be non-zero with probability one and we can thus arrange a new coordinate system, in which the unit ball is still the same, but in which our first points is directed towards the (new) north pole of the ball. In these coordinates we can then apply the waist concentration theorem to see that the other point is likely to be located close to the new equator. This means in turn that the scalar product of both points will be close to zero. | |We first consider the case of two points <math>x, y\in\Bbar_1(0)</math>. As we are interested only in their scalar product it should make no difference if we draw both points at random or if we treat the first point's direction as fixed (but the length still chosen at random) and draw only the second one at random. Since <math>\lambda^d(\{0\})=0</math>, the first points will be non-zero with probability one and we can thus arrange a new coordinate system, in which the unit ball is still the same, but in which our first points is directed towards the (new) north pole of the ball. In these coordinates we can then apply the waist concentration theorem to see that the other point is likely to be located close to the new equator. This means in turn that the scalar product of both points will be close to zero. | ||

<div class{{=}}"d-flex justify-content-center"> | |||

[[File:tikz6e5b4b9b.png | 400px | thumb | We apply he Waist Concentration Theorem\\with respect to the new north pole. ]] | |||

[[File:tikz6e5b4b9b.png | 400px | thumb | | </div> | ||

To make the above idea formal, we put <math>\epsilon=\sqrt{6\ln n}/\sqrt{d-1}</math> and consider two random variables <math>X,Y\colon\Omega\rightarrow\mathbb{R}^d</math> with <math>X,Y\sim\mathcal{U}(\Bbar_1(0))</math>. Then we can find for each <math>\omega_1\in\Omega</math> with <math>X(\omega_1)\not=0</math> an orthogonal linear map <math>T(\omega)\colon\mathbb{R}^d\rightarrow\mathbb{R}^d</math> with <math>T(\omega_1)X(\omega_1)=(\|X(\omega_1)\|,0,\dots,0)</math>. Indeed, we can put <math>f^{\scriptscriptstyle(1)}=X(\omega)/\|X(\omega)\|</math> and extend to an orthonormal basis <math>\mathcal{F}=\{ f^{\scriptscriptstyle(1)}, f^{\scriptscriptstyle(2)},\dots,f^{\scriptscriptstyle(d)}\}</math> by first applying the basis extension theorem and then the Gram-Schmidt procedure. The map <math>T(\omega_1)</math> is then defined as the linear extension of <math>T(\omega_1)f^{\scriptscriptstyle(j)}:=e^{\scriptscriptstyle(j)}</math> where the <math>e^{\scriptscriptstyle(j)}</math> are the standard unit vectors. We obtain <math>T(\omega_1)X(\omega_1)=T(\omega_1)(\|X(\omega_1)\|f^{\scriptscriptstyle(1)})=\|X(\omega_1)\|e^{\scriptscriptstyle(1)}</math> and thus | To make the above idea formal, we put <math>\epsilon=\sqrt{6\ln n}/\sqrt{d-1}</math> and consider two random variables <math>X,Y\colon\Omega\rightarrow\mathbb{R}^d</math> with <math>X,Y\sim\mathcal{U}(\Bbar_1(0))</math>. Then we can find for each <math>\omega_1\in\Omega</math> with <math>X(\omega_1)\not=0</math> an orthogonal linear map <math>T(\omega)\colon\mathbb{R}^d\rightarrow\mathbb{R}^d</math> with <math>T(\omega_1)X(\omega_1)=(\|X(\omega_1)\|,0,\dots,0)</math>. Indeed, we can put <math>f^{\scriptscriptstyle(1)}=X(\omega)/\|X(\omega)\|</math> and extend to an orthonormal basis <math>\mathcal{F}=\{ f^{\scriptscriptstyle(1)}, f^{\scriptscriptstyle(2)},\dots,f^{\scriptscriptstyle(d)}\}</math> by first applying the basis extension theorem and then the Gram-Schmidt procedure. The map <math>T(\omega_1)</math> is then defined as the linear extension of <math>T(\omega_1)f^{\scriptscriptstyle(j)}:=e^{\scriptscriptstyle(j)}</math> where the <math>e^{\scriptscriptstyle(j)}</math> are the standard unit vectors. We obtain <math>T(\omega_1)X(\omega_1)=T(\omega_1)(\|X(\omega_1)\|f^{\scriptscriptstyle(1)})=\|X(\omega_1)\|e^{\scriptscriptstyle(1)}</math> and thus | ||

| Line 327: | Line 278: | ||

</math> | </math> | ||

as desired.}} | as desired.}} | ||

If we apply the estimate of [[#UO-THM |Theorem]] for only two points <math>x</math>, <math>y\in\Bbar_1(0)</math> we get only a probability of greater or equal to <math>1/2</math> for <math>\langle{}x,y\rangle{}</math> being smaller than <math>\sqrt{6\ln 2}/\sqrt{d-1}</math>. Going through the proof of of the theorem yields however that | If we apply the estimate of [[#UO-THM |Theorem]] for only two points <math>x</math>, <math>y\in\Bbar_1(0)</math> we get only a probability of greater or equal to <math>1/2</math> for <math>\langle{}x,y\rangle{}</math> being smaller than <math>\sqrt{6\ln 2}/\sqrt{d-1}</math>. Going through the proof of of the theorem yields however that | ||

| Line 334: | Line 286: | ||

from where we can see that for fixed <math>\epsilon > 0</math> and <math>d</math> large enough the probability for the scalar product to be <math>\epsilon</math>--small will be close to one which means that two points picked at random from the high dimensional unit ball will be almost orthogonal with high probability. | from where we can see that for fixed <math>\epsilon > 0</math> and <math>d</math> large enough the probability for the scalar product to be <math>\epsilon</math>--small will be close to one which means that two points picked at random from the high dimensional unit ball will be almost orthogonal with high probability. | ||

We remark here, that when interpreting the scalar product as a measure for how close to orthogonal two vectors are, one usually does normalize the vectors first. In the above result we did not do so, since, by [[#UA-THM |Theorem]] we know that the points considered in [[#UO-THM |Theorem]] will have norm close to one with high probability, anyway. We leave a formal proof as [[exercise:8dbb0c4f40 |Problem]]. | |||

We remark here, that when interpreting the scalar product as a measure for how close to orthogonal two vectors are, one usually does normalize the vectors first. In the above result we did not do so, since, by [[#UA-THM |Theorem]] we know that the points considered in [[#UO-THM |Theorem]] will have norm close to one with high probability, anyway. We leave a formal proof as [[ | |||

==General references== | ==General references== | ||

{{cite arXiv|last1=Wegner|first1=Sven-Ake|year=2024|title=Lecture Notes on High-Dimensional Data|eprint=2101.05841|class=math.FA}} | {{cite arXiv|last1=Wegner|first1=Sven-Ake|year=2024|title=Lecture Notes on High-Dimensional Data|eprint=2101.05841|class=math.FA}} | ||

Latest revision as of 00:05, 2 June 2024

In this chapter we denote by [math]\lambda^d[/math] the [math]d[/math]-dimensional Lebesgue measure on [math]\mathbb{R}^d[/math]. The euclidean norm we denote by [math]\|\cdot\|[/math] and balls with respect to this norm are denoted by [math]\B_r(x)[/math] and [math]\Bbar_r(x)[/math] respectively.

We start with the following observation. Let [math]A\subseteq\mathbb{R}^d[/math] be a measurable star-shaped set and assume that zero is a center. Then [math](1-\epsilon)A\subseteq A[/math] and we can interpret [math]A\backslash(1-\epsilon)A[/math] as that part of [math]A[/math] that is close to its surface.

If we compute the measure of the latter set, then we get

and thus

which will be close to one if [math]\epsilon[/math] is small and [math]d[/math] is large. This means that in this case the measure of [math]A[/math] is located close to the surface of [math]A[/math] whereas its center contributes only little to its volume. Our first aim in this section is to quantify the latter observation in the case that [math]A[/math] is the unit ball.

For [math]d\geqslant 1[/math] and [math]0 \lt \epsilon\leqslant1[/math] we have [math](1-\epsilon)^d\leqslant \exp(-\epsilon d)[/math].

Define [math]f\colon[0,1]\rightarrow\mathbb{R}[/math] via [math]f(x)=\exp(-x)+x-1[/math]. Then [math]f(0)=0[/math] and [math]f'(x)=1-\exp(-x)\geqslant0[/math] holds for all [math]x\in[0,1][/math]. Therefore [math]f(x)\geqslant0[/math] and thus [math]\exp(-x)\geqslant1-x\geqslant0[/math] holds for all [math]x\in[0,1][/math]. Evaluating at [math]x=\epsilon[/math] and taking the [math]d[/math]--th power finishes the proof.

Using the lemma we can now prove that most of the unit ball's volume is located close to its surface. This is very reasonable, since if we think of the ball consisting of many thin shells all of the same depth but with different radii, then the outer ones will have (in high dimensions significantly!) larger volume then the inner ones.

(Surface Concentration Theorem) Let [math]d\geqslant1[/math] and [math]0 \lt \epsilon\leqslant1[/math]. Then we have

We compute

For the second concentration theorem we need the following lemma.

For [math]d\geqslant3[/math] we have [math]\lambda^d(\Bbar_1(0))=\frac{2\pi}{d}\cdot\lambda^{d-2}(\Bbar_1(0))[/math] where the unit balls are taken in [math]\mathbb{R}^d[/math] and [math]\mathbb{R}^{d-2}[/math], respectively.

Since [math]\B_1(0)=\{(x_1,\dots,x_d)\in\mathbb{R}^d\:;\:x_1^2+\cdots+x_d^2\leqslant1\}[/math] we may compute

After we have seen that most of the volume of the unit ball is concentrated close to its surface we show in the next result that if we designate one point of the surface as the north pole, then most of the unit ball's volume will be concentrated at its waist. As with the surface concentration, this is not surprising, since if we go from the center in the direction of the north pole, then the ball becomes thinner in all of the [math]d-1[/math] remaining dimensions. The precise result is as follows.

(Waist Concentration Theorem) Let [math]d\geqslant3[/math] and [math]\epsilon \gt 0[/math]. Then we have

We first observe that the statement is trivial for [math]\epsilon\geqslant1[/math], so we may assume [math]\epsilon \lt 1[/math] for the rest of the proof. We now compute the volume of that part

Now we employ Cavalieri's Principle and get

Next we estimate the integral at the end of \eqref{Caval-1} in two ways. Firstly, we put [math]\delta=0[/math] and observe that [math]0\leqslant x_1\leqslant1/\sqrt{d-1}[/math] implies [math]x_1^2\leqslant1/(d-1)[/math]. This in turn leads to [math]1-1/(d-1)\leqslant 1-x_1^2[/math] which implies [math](1-1/(d-1))^{(d-1)/2}\leqslant (1-x_1^2)^{(d-1)/2}[/math]. It follows

Combining \eqref{Caval-1}--\eqref{Caval-3} we obtain

To finish the proof we observe that [math]H^0[/math] is the upper half of the unit ball and [math]H^0\backslash{}H^{\epsilon}[/math] is the upper half of [math]W_{\epsilon}[/math]. Therefore we derive from the above

The estimate in Theorem is only of value if the factor on the right hand side is strictly positive. The following lemma explains when this happens.

There exists a unique [math]a_0 \gt 0[/math] such that [math]\frac{2}{a}\exp(-\frac{a^2}{2}) \lt 1[/math] holds for every [math]a \gt a_0[/math]. We have [math]a_0\in(1,2)[/math].

The function [math]f\colon(0,\infty)\rightarrow\mathbb{R}[/math], [math]f(a)=\frac{2}{a}\exp(-\frac{a^2}{2})[/math] is strictly decreasing as both of its factors are strictly decreasing and strictly positive. We have [math]f(1)=2/\sqrt{e} \gt 1[/math] and [math]f(2)=1/\e^2 \lt 1[/math]. Consequently there is [math]a_0 \gt 0[/math] such that [math]f(a_0)=1[/math] and necessarily [math]1 \lt a_0 \lt 1[/math]. For [math]a \gt a_0[/math] we get [math]f(a) \lt 1[/math] and for [math]a \lt a_0[/math] we get [math]f(a) \gt 1[/math], so [math]a_0[/math] is unique.

Substituting [math]a=\epsilon\sqrt{d-1}[/math] shows that the factor in Theorem is strictly positive if and only if [math]\epsilon \gt a_0/\sqrt{d-1}[/math] holds with some [math]a_0\in(1,2)[/math], compare Problem. That is, as bigger as we choose the dimension, the smaller we can make [math]\epsilon \gt 0[/math].

We however cannot let [math]\epsilon[/math] tend to zero for a fixed dimension [math]d[/math]. The interpretation of Theorem, i.e., that [math]1-\frac{2}{\epsilon\sqrt{d-1}}\exp({-\frac{\epsilon^2(d-1)}{2}})[/math] fraction of the volume of the [math]d[/math]-dimensional unit ball is located [math]\epsilon[/math]-close to the equator, is only valid if [math]\epsilon[/math] is not too small. Although this might right now be fairly obvious, we point out that in contrast to the ‘typical epsilontics’, [math]\epsilon \gt 0[/math] cannot be thought of being arbitrarily small, but what we can choose for it depends on the space's dimension to be large enough.

For later use we note the following reformulation of Theorem.

Let [math]d\geqslant3[/math] and [math]\epsilon \gt 0[/math]. Then we have

In the notation of Theorem we have

Now we will use the volume estimates from above in order to establish where points that we draw at random from the [math]d[/math]-dimensional unit ball will be located and what mutual angles between the points we can expect. To make our proofs formal, we will employ in the rest of the chapter a probability space [math](\Omega,\Sigma,\P)[/math] and random variables [math]X\colon\Omega\rightarrow\mathbb{R}^d[/math] with distribution [math]X\sim\mathcal{U}(\Bbar_1(0))[/math], compare Definition.

Let [math]d\geqslant3[/math] and assume that we draw [math]n\geqslant2[/math] points [math]x^{\scriptscriptstyle(1)},\dots,x^{\scriptscriptstyle(n)}[/math] at random from the [math]d[/math]-dimensional unit ball. Then

In view of the Surface Concentration Theorem, most of the unit ball's volume is located close to its surface and thus a point chosen uniformly at random is likely to be close to the surface. For a formal proof, we put [math]\epsilon=\frac{2\ln n}{d}[/math] and consider a random vector [math]X\sim\mathcal{U}(\Bbar_1(0))[/math]. For fixed [math]1\leqslant j\leqslant n[/math] we employ Theorem to get

Although we get a trivial estimate if we sub [math]n=1[/math] into the Theorem, its proof showed that

holds, so if [math]d[/math] is large enough and [math]\epsilon \gt 0[/math] is not too small, a single point chosen at random will be [math]\epsilon[/math]--close to the surface of [math]\Bbar_1(0)[/math] with a high probability. We continue by studying angles between points.

Let [math]d\geqslant3[/math] and assume that we draw [math]n\geqslant2[/math] points [math]x^{\scriptscriptstyle(1)},\dots,x^{\scriptscriptstyle(n)}[/math] uniformly at random from the [math]d[/math]-dimensional unit ball. Then

We first consider the case of two points [math]x, y\in\Bbar_1(0)[/math]. As we are interested only in their scalar product it should make no difference if we draw both points at random or if we treat the first point's direction as fixed (but the length still chosen at random) and draw only the second one at random. Since [math]\lambda^d(\{0\})=0[/math], the first points will be non-zero with probability one and we can thus arrange a new coordinate system, in which the unit ball is still the same, but in which our first points is directed towards the (new) north pole of the ball. In these coordinates we can then apply the waist concentration theorem to see that the other point is likely to be located close to the new equator. This means in turn that the scalar product of both points will be close to zero.

To make the above idea formal, we put [math]\epsilon=\sqrt{6\ln n}/\sqrt{d-1}[/math] and consider two random variables [math]X,Y\colon\Omega\rightarrow\mathbb{R}^d[/math] with [math]X,Y\sim\mathcal{U}(\Bbar_1(0))[/math]. Then we can find for each [math]\omega_1\in\Omega[/math] with [math]X(\omega_1)\not=0[/math] an orthogonal linear map [math]T(\omega)\colon\mathbb{R}^d\rightarrow\mathbb{R}^d[/math] with [math]T(\omega_1)X(\omega_1)=(\|X(\omega_1)\|,0,\dots,0)[/math]. Indeed, we can put [math]f^{\scriptscriptstyle(1)}=X(\omega)/\|X(\omega)\|[/math] and extend to an orthonormal basis [math]\mathcal{F}=\{ f^{\scriptscriptstyle(1)}, f^{\scriptscriptstyle(2)},\dots,f^{\scriptscriptstyle(d)}\}[/math] by first applying the basis extension theorem and then the Gram-Schmidt procedure. The map [math]T(\omega_1)[/math] is then defined as the linear extension of [math]T(\omega_1)f^{\scriptscriptstyle(j)}:=e^{\scriptscriptstyle(j)}[/math] where the [math]e^{\scriptscriptstyle(j)}[/math] are the standard unit vectors. We obtain [math]T(\omega_1)X(\omega_1)=T(\omega_1)(\|X(\omega_1)\|f^{\scriptscriptstyle(1)})=\|X(\omega_1)\|e^{\scriptscriptstyle(1)}[/math] and thus

If we apply the estimate of Theorem for only two points [math]x[/math], [math]y\in\Bbar_1(0)[/math] we get only a probability of greater or equal to [math]1/2[/math] for [math]\langle{}x,y\rangle{}[/math] being smaller than [math]\sqrt{6\ln 2}/\sqrt{d-1}[/math]. Going through the proof of of the theorem yields however that

from where we can see that for fixed [math]\epsilon \gt 0[/math] and [math]d[/math] large enough the probability for the scalar product to be [math]\epsilon[/math]--small will be close to one which means that two points picked at random from the high dimensional unit ball will be almost orthogonal with high probability.

We remark here, that when interpreting the scalar product as a measure for how close to orthogonal two vectors are, one usually does normalize the vectors first. In the above result we did not do so, since, by Theorem we know that the points considered in Theorem will have norm close to one with high probability, anyway. We leave a formal proof as Problem.

General references

Wegner, Sven-Ake (2024). "Lecture Notes on High-Dimensional Data". arXiv:2101.05841 [math.FA].