guide:21bfd24860: Difference between revisions

No edit summary |

mNo edit summary |

||

| (2 intermediate revisions by the same user not shown) | |||

| Line 6: | Line 6: | ||

\newcommand{\NA}{{\rm NA}} | \newcommand{\NA}{{\rm NA}} | ||

\newcommand{\mathds}{\mathbb}</math></div> | \newcommand{\mathds}{\mathbb}</math></div> | ||

Much of this section is based upon an article by Brad Mann,<ref group="Notes" >B. Mann, “How Many | Much of this section is based upon an article by Brad Mann,<ref group="Notes" >B. Mann, “How Many | ||

Times Should You Shuffle a Deck of Cards?”, ''UMAP Journal'', vol. 15, no. 4 (1994), pp. 303--331.</ref> | Times Should You Shuffle a Deck of Cards?”, ''UMAP Journal'', vol. 15, no. 4 (1994), pp. 303--331.</ref> | ||

| Line 163: | Line 163: | ||

that <math>(T_1, T_2)</math> corresponds to <math>T_3</math>. If <math>T_1</math> is applied to the identity ordering, | that <math>(T_1, T_2)</math> corresponds to <math>T_3</math>. If <math>T_1</math> is applied to the identity ordering, | ||

and <math>T_2</math> is applied to the resulting ordering, then the final ordering is the same as | and <math>T_2</math> is applied to the resulting ordering, then the final ordering is the same as | ||

the ordering that is obtained by applying <math>T_3</math> to the identity ordering. | the ordering that is obtained by applying <math>T_3</math> to the identity ordering.|The easiest way to describe the required correspondence is through the idea of | ||

an unshuffle. An <math>a</math>-unshuffle begins with a deck of <math>n</math> cards. One by one, cards | an unshuffle. An <math>a</math>-unshuffle begins with a deck of <math>n</math> cards. One by one, cards | ||

are taken from the top of the deck and placed, with equal probability, on the bottom of any one | are taken from the top of the deck and placed, with equal probability, on the bottom of any one | ||

| Line 203: | Line 203: | ||

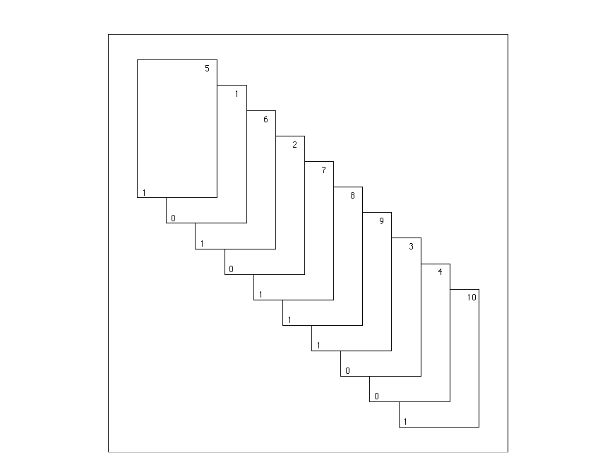

In | In [[#fig 3.14|Figure]], we show the labels for a 2-unshuffle of a deck with 10 cards. There | ||

are 4 cards with the label 0 and 6 cards with the label 1, so if the 2-unshuffle is performed, | are 4 cards with the label 0 and 6 cards with the label 1, so if the 2-unshuffle is performed, | ||

the first stack will have 4 cards and the second stack will have 6 cards. When this unshuffle is | the first stack will have 4 cards and the second stack will have 6 cards. When this unshuffle is | ||

| Line 209: | Line 209: | ||

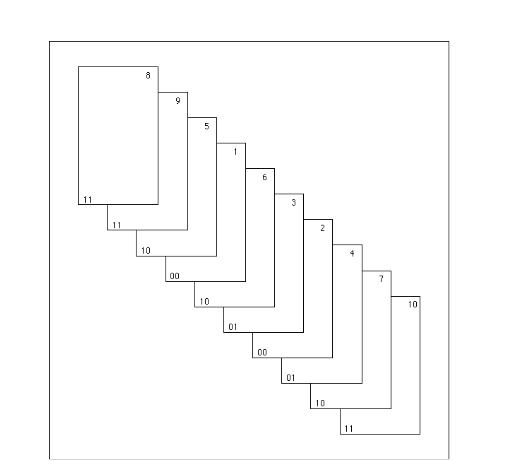

In | In [[#fig 3.15|Figure]], we show the labels for a 4-unshuffle of the same deck (because there | ||

are four labels being used). This figure can also be regarded as an example of a pair of | are four labels being used). This figure can also be regarded as an example of a pair of | ||

2-unshuffles, as described in the proof above. The first 2-unshuffle will use the second coordinate | 2-unshuffles, as described in the proof above. The first 2-unshuffle will use the second coordinate | ||

| Line 220: | Line 220: | ||

After this 2-unshuffle has been performed, the | After this 2-unshuffle has been performed, the | ||

deck is in the order shown in | deck is in the order shown in [[#fig 3.14|Figure]], as the reader should check. If we wish to | ||

perform a 4-unshuffle on the deck, using the labels shown, we sort the cards lexicographically, | perform a 4-unshuffle on the deck, using the labels shown, we sort the cards lexicographically, | ||

obtaining the four stacks | obtaining the four stacks | ||

| Line 230: | Line 230: | ||

The point of the above theorem is that both sorting procedures always lead to the same initial | The point of the above theorem is that both sorting procedures always lead to the same initial | ||

ordering. | ordering. | ||

<div id=" | <div id="fig 3.14" class="d-flex justify-content-center"> | ||

[[File:guide_e6d15_PSfig3-14. | [[File:guide_e6d15_PSfig3-14.png | 400px | thumb |Before a 2-unshuffle.]] | ||

</div> | </div> | ||

<div id=" | <div id="fig 3.15" class="d-flex justify-content-center"> | ||

[[File:guide_e6d15_PSfig3-15. | [[File:guide_e6d15_PSfig3-15.png | 400px | thumb |Before a 4-unshuffle. ]] | ||

</div> | </div> | ||

{{proofcard|Theorem|thm_3.3.2|If <math>D</math> is any ordering that is the result of applying | {{proofcard|Theorem|thm_3.3.2|If <math>D</math> is any ordering that is the result of applying | ||

an <math>a</math>-shuffle and then a <math>b</math>-shuffle to the identity ordering, then the probability | an <math>a</math>-shuffle and then a <math>b</math>-shuffle to the identity ordering, then the probability | ||

assigned to <math>D</math> by this pair of operations is the same as the probability assigned to <math>D</math> by the | assigned to <math>D</math> by this pair of operations is the same as the probability assigned to <math>D</math> by the | ||

process of applying an <math>ab</math>-shuffle to the identity ordering. | process of applying an <math>ab</math>-shuffle to the identity ordering.|Call the sample space of <math>a</math>-shuffles <math>S_a</math>. If we label the stacks by the | ||

integers from <math>0</math> to <math>a-1</math>, then each cut-interleaving pair, i.e., shuffle, corresponds to | integers from <math>0</math> to <math>a-1</math>, then each cut-interleaving pair, i.e., shuffle, corresponds to | ||

exactly one | exactly one | ||

| Line 296: | Line 296: | ||

In Chapter | In Chapter [[guide:E4fd10ce73|Expected Value and Variance]], we will define the average value of a random variable. Using this idea, and | ||

the above equation, one can calculate the average value of the random variable <math>T</math> (see | the above equation, one can calculate the average value of the random variable <math>T</math> (see [[exercise:36b2856968|Exercise]]). For example, if | ||

<math>n = 52</math>, then the average value of <math>T</math> is about 11.7. This means that, on the average, about 12 | <math>n = 52</math>, then the average value of <math>T</math> is about 11.7. This means that, on the average, about 12 | ||

riffle shuffles are needed for the process to be considered random. | riffle shuffles are needed for the process to be considered random. | ||

| Line 313: | Line 312: | ||

<math display="block"> | <math display="block"> | ||

{{n + a - r}\choose{n}}\ . | {{n + a - r}\choose{n}}\ . | ||

</math> | </math>|To see why this is true, we need to count the number of ways in which the cut | ||

in an <math>a</math>-shuffle can be performed which will lead to a given ordering with <math>r</math> rising | in an <math>a</math>-shuffle can be performed which will lead to a given ordering with <math>r</math> rising | ||

sequences. We can disregard the interleavings, since once a cut has been made, at | sequences. We can disregard the interleavings, since once a cut has been made, at | ||

| Line 370: | Line 369: | ||

<math display="block"> | <math display="block"> | ||

A(n, 1) = 1\ . | A(n, 1) = 1\ . | ||

</math> | </math>|The second equation can be used to calculate the values of the Eulerian | ||

numbers, and follows immediately from the | numbers, and follows immediately from the Equation \ref{eq 3.6}. The last equation is a | ||

consequence of the fact that the only ordering of <math>\{1, 2, \ldots, n\}</math> with one rising | consequence of the fact that the only ordering of <math>\{1, 2, \ldots, n\}</math> with one rising | ||

sequence is the identity ordering. Thus, it remains to prove | sequence is the identity ordering. Thus, it remains to prove Equation \ref{eq 3.6}. | ||

We will count the set of <math>a</math>-shuffles of a deck with <math>n</math> cards in two ways. First, we | We will count the set of <math>a</math>-shuffles of a deck with <math>n</math> cards in two ways. First, we | ||

know that there are <math>a^n</math> such shuffles (this was noted in the proof of | know that there are <math>a^n</math> such shuffles (this was noted in the proof of | ||

| Line 385: | Line 384: | ||

cut-interleaving pairs that lead to the ordering. Therefore, the right-hand side of | cut-interleaving pairs that lead to the ordering. Therefore, the right-hand side of | ||

Equation \ref{eq 3.6} counts the set of <math>a</math>-shuffles of an <math>n</math>-card deck. | |||

This completes the\linebreak[4] proof.}} | This completes the\linebreak[4] proof.}} | ||

| Line 433: | Line 432: | ||

. | . | ||

</math> | </math> | ||

Now we apply this idea to the case of shuffling. We let <math>X</math> be the process of | Now we apply this idea to the case of shuffling. We let <math>X</math> be the process of | ||

| Line 454: | Line 452: | ||

|+ Distance to the random process. | |+ Distance to the random process. | ||

|- | |- | ||

| | |Number of Riffle Shuffles||Variation Distance | ||

|- | |- | ||

|1||1 | |||

|- | |- | ||

|2||1 | |||

|- | |- | ||

|3||1 | |||

|- | |- | ||

|4||0.9999995334 | |||

|- | |- | ||

|5||0.9237329294 | |||

|- | |- | ||

|6||0.6135495966 | |||

|- | |- | ||

|7||0.3340609995 | |||

|- | |- | ||

|8||0.1671586419 | |||

|- | |- | ||

||9||0.0854201934 | |||

|- | |- | ||

||10||0.0429455489 | |||

|- | |- | ||

||11||0.0215023760 | |||

|- | |- | ||

|12||0.0107548935 | |||

|- | |- | ||

|13||0.0053779101 | |||

|- | |- | ||

|14||0.0026890130 | |||

|} | |} | ||

<div id=" | |||

[[File:guide_e6d15_PSfig3-13-5. | <div id="fig 3.13" class="d-flex justify-content-center"> | ||

[[File:guide_e6d15_PSfig3-13-5.png | 400px | thumb |Distance to the random process. ]] | |||

</div> | </div> | ||

| Line 491: | Line 490: | ||

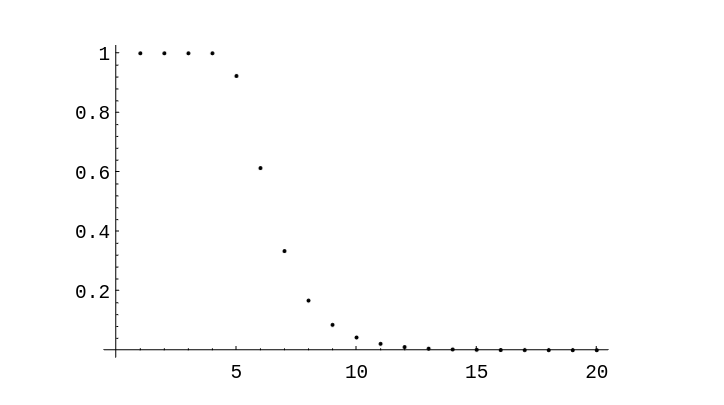

To help in understanding these data, they are shown in graphical form in | To help in understanding these data, they are shown in graphical form in | ||

[[#fig 3.13|Figure]]. The program ''' VariationList''' produces the | |||

data shown in both [[#table 3.12 |Table]] and | data shown in both [[#table 3.12 |Table]] and [[#fig 3.13|Figure]]. One sees that until 5 shuffles | ||

have occurred, the output of | have occurred, the output of | ||

<math>X</math> is very far from random. After 5 shuffles, the distance from the random process is | <math>X</math> is very far from random. After 5 shuffles, the distance from the random process is | ||

| Line 514: | Line 513: | ||

these two probabilities is as large as possible. It can be shown that this absolute | these two probabilities is as large as possible. It can be shown that this absolute | ||

value is the variation distance between the process <math>X</math> and the uniform process. (The | value is the variation distance between the process <math>X</math> and the uniform process. (The | ||

reader is asked to prove this fact in | reader is asked to prove this fact in [[exercise:12796432f4 |Exercise]].) | ||

| Line 538: | Line 537: | ||

Now suppose that we buy a new deck of cards, break the seal on the package, and | Now suppose that we buy a new deck of cards, break the seal on the package, and | ||

riffle shuffle the deck 7 times. If one tries this, one finds that the Yin suits win | riffle shuffle the deck 7 times. If one tries this, one finds that the Yin suits win | ||

about 75 | about 75% of the time. This is 25% more than we would get if the deck were in truly | ||

random order. This deviation is reasonably close to the theoretical maximum of | random order. This deviation is reasonably close to the theoretical maximum of | ||

<math>33.4</math> | <math>33.4</math>% obtained above. | ||

| Line 553: | Line 552: | ||

order. Under 7 riffle shuffles, the relative advantage of the Yin suits over the Yang | order. Under 7 riffle shuffles, the relative advantage of the Yin suits over the Yang | ||

suits is preserved to a certain extent. | suits is preserved to a certain extent. | ||

==General references== | ==General references== | ||

{{cite web |url=https://math.dartmouth.edu/~prob/prob/prob.pdf |title=Grinstead and Snell’s Introduction to Probability |last=Doyle |first=Peter G.|date=2006 |access-date=June 6, 2024}} | {{cite web |url=https://math.dartmouth.edu/~prob/prob/prob.pdf |title=Grinstead and Snell’s Introduction to Probability |last=Doyle |first=Peter G.|date=2006 |access-date=June 6, 2024}} | ||

==Notes== | ==Notes== | ||

{{Reflist|group=Notes}} | {{Reflist|group=Notes}} | ||

Latest revision as of 03:45, 12 June 2024

Much of this section is based upon an article by Brad Mann,[Notes 1] which is an exposition of an article by David Bayer and Persi Diaconis.[Notes 2]

Riffle Shuffles

Given a deck of [math]n[/math] cards, how many times must we shuffle it to make it “random”? Of course, the answer depends upon the method of shuffling which is used and what we mean by “random.” We shall begin the study of this question by considering a standard model for the riffle shuffle.

We begin with a deck of [math]n[/math] cards, which we will assume are labelled in

increasing order with the integers from 1 to [math]n[/math]. A riffle shuffle consists of a cut

of the deck into two stacks and an interleaving of the two

stacks. For example, if [math]n = 6[/math], the initial ordering is

[math](1, 2, 3, 4, 5, 6)[/math], and a cut might occur between cards 2 and 3. This gives rise to

two stacks, namely [math](1, 2)[/math] and [math](3, 4, 5, 6)[/math]. These are interleaved to form a new

ordering of the deck. For example, these two stacks might form the ordering [math](1, 3,

4, 2, 5, 6)[/math]. In order to discuss such shuffles, we need to assign a probability

distribution to the set of all possible shuffles. There are several reasonable ways in

which this can be done. We will give several different assignment strategies, and

show that they are equivalent. (This does not mean that this assignment is the only

reasonable one.) First, we assign the binomial probability [math]b(n, 1/2, k)[/math] to the

event that the cut occurs after the [math]k[/math]th card. Next, we assume that all possible

interleavings, given a cut, are equally likely. Thus, to complete the assignment of

probabilities, we need to determine the number of possible interleavings of two stacks

of cards, with [math]k[/math] and [math]n-k[/math] cards, respectively.

We begin by writing the second stack in a line, with spaces in between each pair

of consecutive cards, and with spaces at the beginning and end (so there are [math]n-k+1[/math]

spaces). We choose, with replacement, [math]k[/math] of these spaces, and place the cards from

the first stack in the chosen spaces. This can be done in

ways. Thus, the probability of a given interleaving should be

Next, we note that if the new ordering is not the identity ordering, it is the

result of a unique cut-interleaving pair. If the new ordering is the identity, it is

the result of any one of [math]n+1[/math] cut-interleaving pairs.

We define a rising sequence in an ordering to be a maximal

subsequence of consecutive integers in increasing order. For example, in the ordering

there are 4 rising sequences; they are [math](1)[/math], [math](2, 3, 4)[/math], [math](5, 6)[/math], and [math](7)[/math]. It is easy to see that an ordering is the result of a riffle shuffle applied to the identity ordering if and only if it has no more than two rising sequences. (If the ordering has two rising sequences, then these rising sequences correspond to the two stacks induced by the cut, and if the ordering has one rising sequence, then it is the identity ordering.) Thus, the sample space of orderings obtained by applying a riffle shuffle to the identity ordering is naturally described as the set of all orderings with at most two rising sequences.

It is now easy to assign a probability distribution to this sample space. Each

ordering with two rising sequences is assigned the value

and the identity ordering is assigned the value

There is another way to view a riffle shuffle. We can imagine starting with a

deck cut into two stacks as before, with the same probabilities assignment as before

i.e., the binomial distribution. Once we have the two stacks, we take cards, one by

one, off of the bottom of the two stacks, and place them onto one stack. If there are

[math]k_1[/math] and [math]k_2[/math] cards, respectively, in the two stacks at some point in this process,

then we make the assumption that the probabilities that the next card to be taken comes

from a given stack is proportional to the current stack size. This implies that the

probability that we take the next card from the first stack equals

and the corresponding probability for the second stack is

We shall now show that this process assigns the uniform probability to each of the possible interleavings of the two stacks.

Suppose, for example, that an interleaving came about as the result of choosing

cards from the two stacks in some order. The probability that this result occurred is

the product of the probabilities at each point in the process, since the choice of

card at each point is assumed to be independent of the previous choices. Each factor

of this product is of the form

where [math]i = 1[/math] or [math]2[/math], and the denominator of each factor equals the number of cards left to be chosen. Thus, the denominator of the probability is just [math]n![/math]. At the moment when a card is chosen from a stack that has [math]i[/math] cards in it, the numerator of the corresponding factor in the probability is [math]i[/math], and the number of cards in this stack decreases by 1. Thus, the numerator is seen to be [math]k!(n-k)![/math], since all cards in both stacks are eventually chosen. Therefore, this process assigns the probability

to each possible interleaving.

We now turn to the question of what happens when we riffle shuffle [math]s[/math] times. It

should be clear that if we start with the identity ordering, we obtain an ordering

with at most [math]2^s[/math] rising sequences, since a riffle shuffle creates at most two rising

sequences from every rising sequence in the starting ordering. In fact, it is not

hard to see that each such ordering is the result of [math]s[/math] riffle shuffles. The

question becomes, then, in how many ways can an ordering with [math]r[/math] rising sequences

come about by applying [math]s[/math] riffle shuffles to the identity ordering? In order to

answer this question, we turn to the idea of an [math]a[/math]-shuffle.

[math]a[/math]-Shuffles

There are several ways to visualize an [math]a[/math]-shuffle. One way is to imagine a creature with [math]a[/math] hands who is given a deck of cards to riffle shuffle. The creature naturally cuts the deck into [math]a[/math] stacks, and then riffles them together. (Imagine that!) Thus, the ordinary riffle shuffle is a 2-shuffle. As in the case of the ordinary 2-shuffle, we allow some of the stacks to have 0 cards. Another way to visualize an [math]a[/math]-shuffle is to think about its inverse, called an [math]a[/math]-unshuffle. This idea is described in the proof of the next theorem.

We will now show that an [math]a[/math]-shuffle followed by a [math]b[/math]-shuffle is equivalent to an

[math]ab[/math]-shuffle. This means, in particular, that [math]s[/math] riffle shuffles in succession are

equivalent to one

[math]2^s[/math]-shuffle. This equivalence is made precise by the following theorem.

Let [math]a[/math] and [math]b[/math] be two positive integers. Let [math]S_{a,b}[/math] be the set of all ordered pairs in which the first entry is an [math]a[/math]-shuffle and the second entry is a [math]b[/math]-shuffle. Let [math]S_{ab}[/math] be the set of all [math]ab[/math]-shuffles. Then there is a 1-1 correspondence between [math]S_{a,b}[/math] and [math]S_{ab}[/math] with the following property. Suppose that [math](T_1, T_2)[/math] corresponds to [math]T_3[/math]. If [math]T_1[/math] is applied to the identity ordering, and [math]T_2[/math] is applied to the resulting ordering, then the final ordering is the same as the ordering that is obtained by applying [math]T_3[/math] to the identity ordering.

Show ProofThe easiest way to describe the required correspondence is through the idea of an unshuffle. An [math]a[/math]-unshuffle begins with a deck of [math]n[/math] cards. One by one, cards are taken from the top of the deck and placed, with equal probability, on the bottom of any one of [math]a[/math] stacks, where the stacks are labelled from 0 to [math]a-1[/math]. After all of the cards have been distributed, we combine the stacks to form one stack by placing stack [math]i[/math] on top of stack [math]i+1[/math], for [math]0 \le i \le a-1[/math]. It is easy to see that if one starts with a deck, there is exactly one way to cut the deck to obtain the [math]a[/math] stacks generated by the [math]a[/math]-unshuffle, and with these [math]a[/math] stacks, there is exactly one way to interleave them to obtain the deck in the order that it was in before the unshuffle was performed. Thus, this [math]a[/math]-unshuffle corresponds to a unique [math]a[/math]-shuffle, and this [math]a[/math]-shuffle is the inverse of the original [math]a[/math]-unshuffle.

If we apply an [math]ab[/math]-unshuffle [math]U_3[/math] to a deck, we obtain a set of [math]ab[/math] stacks,

which are then combined, in order, to form one stack. We label these stacks with

ordered pairs of integers, where the first coordinate is between 0 and [math]a-1[/math], and the

second coordinate is between 0 and [math]b-1[/math]. Then we label each card with the label of

its stack. The number of possible labels is [math]ab[/math], as required. Using this labelling,

we can describe how to find a [math]b[/math]-unshuffle and an

[math]a[/math]-unshuffle, such that if these two unshuffles are applied in this order to the

deck, we obtain the same set of [math]ab[/math] stacks as were obtained by the [math]ab[/math]-unshuffle.

To obtain the [math]b[/math]-unshuffle [math]U_2[/math], we sort the deck into [math]b[/math] stacks, with the

[math]i[/math]th stack containing all of the cards with second coordinate [math]i[/math], for [math]0 \le i \le

b-1[/math]. Then these stacks are combined to form one stack. The [math]a[/math]-unshuffle [math]U_1[/math]

proceeds in the same manner, except that the first coordinates of the labels are

used. The resulting [math]a[/math] stacks are then combined to form one stack.

The above description shows that the cards ending up on top are all those

labelled [math](0, 0)[/math]. These are followed by those labelled [math](0, 1),\ (0, 2),[/math] [math]\

\ldots,\ (0, b - 1),\ (1, 0),\ (1,1),\ldots,\ (a-1, b-1)[/math]. Furthermore, the relative order of any

pair of cards with the same labels is never altered. But this is exactly the same as an

[math]ab[/math]-unshuffle, if, at the beginning of such an unshuffle, we label each of the cards with one of

the labels [math](0, 0),\ (0, 1),\ \ldots,\ (0, b-1),\ (1, 0),\ (1,1),\ \ldots,\ (a-1, b-1)[/math]. This

completes the proof.

In Figure, we show the labels for a 2-unshuffle of a deck with 10 cards. There

are 4 cards with the label 0 and 6 cards with the label 1, so if the 2-unshuffle is performed,

the first stack will have 4 cards and the second stack will have 6 cards. When this unshuffle is

performed, the deck ends up in the identity ordering.

In Figure, we show the labels for a 4-unshuffle of the same deck (because there

are four labels being used). This figure can also be regarded as an example of a pair of

2-unshuffles, as described in the proof above. The first 2-unshuffle will use the second coordinate

of the labels to determine the stacks. In this case, the two stacks contain the cards whose values

are

After this 2-unshuffle has been performed, the deck is in the order shown in Figure, as the reader should check. If we wish to perform a 4-unshuffle on the deck, using the labels shown, we sort the cards lexicographically, obtaining the four stacks

When these stacks are combined, we once again obtain the identity ordering of the deck. The point of the above theorem is that both sorting procedures always lead to the same initial ordering.

If [math]D[/math] is any ordering that is the result of applying an [math]a[/math]-shuffle and then a [math]b[/math]-shuffle to the identity ordering, then the probability assigned to [math]D[/math] by this pair of operations is the same as the probability assigned to [math]D[/math] by the process of applying an [math]ab[/math]-shuffle to the identity ordering.

Show ProofCall the sample space of [math]a[/math]-shuffles [math]S_a[/math]. If we label the stacks by the integers from [math]0[/math] to [math]a-1[/math], then each cut-interleaving pair, i.e., shuffle, corresponds to exactly one [math]n[/math]-digit base [math]a[/math] integer, where the [math]i[/math]th digit in the integer is the stack of which the [math]i[/math]th card is a member. Thus, the number of cut-interleaving pairs is equal to the number of [math]n[/math]-digit base [math]a[/math] integers, which is [math]a^n[/math]. Of course, not all of these pairs leads to different orderings. The number of pairs leading to a given ordering will be discussed later. For our purposes it is enough to point out that it is the cut-interleaving pairs that determine the probability assignment.

The previous theorem shows that there is a 1-1 correspondence between [math]S_{a,b}[/math]

and [math]S_{ab}[/math]. Furthermore, corresponding elements give the same ordering when applied to

the identity ordering. Given any ordering [math]D[/math], let [math]m_1[/math] be the number of elements of

[math]S_{a,b}[/math] which, when applied to the identity ordering, result in [math]D[/math]. Let [math]m_2[/math] be

the number of elements of [math]S_{ab}[/math] which, when applied to the identity ordering,

result in [math]D[/math]. The previous theorem implies that [math]m_1 = m_2[/math]. Thus, both sets assign

the probability

Connection with the Birthday Problem

There is another point that can be made concerning the labels given to the cards by the successive unshuffles. Suppose that we 2-unshuffle an [math]n[/math]-card deck until the labels on the cards are all different. It is easy to see that this process produces each permutation with the same probability, i.e., this is a random process. To see this, note that if the labels become distinct on the [math]s[/math]th 2-unshuffle, then one can think of this sequence of 2-unshuffles as one [math]2^s[/math]-unshuffle, in which all of the stacks determined by the unshuffle have at most one card in them (remember, the stacks correspond to the labels). If each stack has at most one card in it, then given any two cards in the deck, it is equally likely that the first card has a lower or a higher label than the second card. Thus, each possible ordering is equally likely to result from this [math]2^s[/math]-unshuffle.

Let [math]T[/math] be the random variable that counts the number of 2-unshuffles until all labels are

distinct. One can think of [math]T[/math] as giving a measure of how long it takes in the unshuffling process

until randomness is reached. Since shuffling and unshuffling are inverse processes, [math]T[/math] also

measures the number of shuffles necessary to achieve randomness. Suppose that we have an [math]n[/math]-card

deck, and we ask for [math]P(T \le s)[/math]. This equals [math]1 - P(T \gt s)[/math]. But [math]T \gt s[/math] if and only if it is

the case that not all of the labels after [math]s[/math] 2-unshuffles are distinct. This is just the birthday

problem; we are asking for the probability that at least two people have the same birthday, given

that we have [math]n[/math] people and there are [math]2^s[/math] possible birthdays. Using our formula from

Example, we find that

In Chapter Expected Value and Variance, we will define the average value of a random variable. Using this idea, and

the above equation, one can calculate the average value of the random variable [math]T[/math] (see Exercise). For example, if

[math]n = 52[/math], then the average value of [math]T[/math] is about 11.7. This means that, on the average, about 12

riffle shuffles are needed for the process to be considered random.

Cut-Interleaving Pairs and Orderings

As was noted in the proof of Theorem, not all of the cut-interleaving pairs lead to different orderings. However, there is an easy formula which gives the number of such pairs that lead to a given ordering.

If an ordering of length [math]n[/math] has [math]r[/math] rising sequences, then the number of cut-interleaving pairs under an [math]a[/math]-shuffle of the identity ordering which lead to the ordering is

To see why this is true, we need to count the number of ways in which the cut in an [math]a[/math]-shuffle can be performed which will lead to a given ordering with [math]r[/math] rising sequences. We can disregard the interleavings, since once a cut has been made, at most one interleaving will lead to a given ordering. Since the given ordering has [math]r[/math] rising sequences, [math]r-1[/math] of the division points in the cut are determined. The remaining [math]a - 1 - (r - 1) = a - r[/math] division points can be placed anywhere. The number of places to put these remaining division points is [math]n+1[/math] (which is the number of spaces between the consecutive pairs of cards, including the positions at the beginning and the end of the deck). These places are chosen with repetition allowed, so the number of ways to make these choices is

In particular, this means that if [math]D[/math] is an ordering that is the result of

applying an

[math]a[/math]-shuffle to the identity ordering, and if [math]D[/math] has [math]r[/math] rising sequences, then the

probability assigned to [math]D[/math] by this process is

The above theorem shows that the essential information about the

probability assigned to an ordering under an [math]a[/math]-shuffle is just the number of rising

sequences in the ordering. Thus, if we determine the number of orderings which

contain exactly [math]r[/math] rising sequences, for each [math]r[/math] between 1 and [math]n[/math], then we will

have determined the distribution function of the random variable which consists of

applying a random [math]a[/math]-shuffle to the identity ordering.

The number of orderings of [math]\{1, 2, \ldots, n\}[/math] with [math]r[/math] rising sequences is

denoted by [math]A(n, r)[/math], and is called an Eulerian number. There are

many ways to calculate the values of these numbers; the following theorem gives one recursive

method which follows immediately from what we already know about [math]a[/math]-shuffles.

Let [math]a[/math] and [math]n[/math] be positive integers. Then

The second equation can be used to calculate the values of the Eulerian numbers, and follows immediately from the Equation \ref{eq 3.6}. The last equation is a consequence of the fact that the only ordering of [math]\{1, 2, \ldots, n\}[/math] with one rising sequence is the identity ordering. Thus, it remains to prove Equation \ref{eq 3.6}. We will count the set of [math]a[/math]-shuffles of a deck with [math]n[/math] cards in two ways. First, we know that there are [math]a^n[/math] such shuffles (this was noted in the proof of Theorem). But there are [math]A(n, r)[/math] orderings of [math]\{1, 2, \ldots, n\}[/math] with [math]r[/math] rising sequences, and Theorem states that for each such ordering, there are exactly

cut-interleaving pairs that lead to the ordering. Therefore, the right-hand side of Equation \ref{eq 3.6} counts the set of [math]a[/math]-shuffles of an [math]n[/math]-card deck. This completes the\linebreak[4] proof.

Random Orderings and Random Processes

We now turn to the second question that was asked at the beginning of this section: What do we mean by a “random” ordering? It is somewhat misleading to think about a given ordering as being random or not random. If we want to choose a random ordering from the set of all orderings of [math]\{1, 2, \ldots, n\}[/math], we mean that we want every ordering to be chosen with the same probability, i.e., any ordering is as “random” as any other.

The word “random” should really be used to describe a process. We will say that

a process that produces an object from a (finite) set of objects is a random

process if each object in the set is produced with the

same probability by the process. In the present situation, the objects are the orderings, and the

process which produces these objects is the shuffling process. It is easy to see that no [math]a[/math]-shuffle

is really a random process, since if [math]T_1[/math] and [math]T_2[/math] are two orderings with a different

number of rising sequences, then they are produced by an [math]a[/math]-shuffle, applied to the

identity ordering, with different probabilities.

Variation Distance

Instead of requiring that a sequence of shuffles yield a process which is random, we will define a measure that describes how far away a given process is from a random process. Let [math]X[/math] be any process which produces an ordering of [math]\{1, 2, \ldots, n\}[/math]. Define [math]f_X(\pi)[/math] be the probability that [math]X[/math] produces the ordering [math]\pi[/math]. (Thus, [math]X[/math] can be thought of as a random variable with distribution function [math]f[/math].) Let [math]\Omega_n[/math] be the set of all orderings of [math]\{1, 2, \ldots, n\}[/math]. Finally, let [math]u(\pi) = 1/|\Omega_n|[/math] for all [math]\pi \in \Omega_n[/math]. The function [math]u[/math] is the distribution function of a process which produces orderings and which is random. For each ordering [math]\pi \in \Omega_n[/math], the quantity

is the difference between the actual and desired probabilities that [math]X[/math] produces [math]\pi[/math]. If we sum this over all orderings [math]\pi[/math] and call this sum [math]S[/math], we see that [math]S = 0[/math] if and only if [math]X[/math] is random, and otherwise [math]S[/math] is positive. It is easy to show that the maximum value of [math]S[/math] is 2, so we will multiply the sum by [math]1/2[/math] so that the value falls in the interval [math][0, 1][/math]. Thus, we obtain the following sum as the formula for the variation distance between the two processes:

Now we apply this idea to the case of shuffling. We let [math]X[/math] be the process of [math]s[/math] successive riffle shuffles applied to the identity ordering. We know that it is also possible to think of [math]X[/math] as one [math]2^s[/math]-shuffle. We also know that [math]f_X[/math] is constant on the set of all orderings with [math]r[/math] rising sequences, where [math]r[/math] is any positive integer. Finally, we know the value of [math]f_X[/math] on an ordering with [math]r[/math] rising sequences, and we know how many such orderings there are. Thus, in this specific case, we have

Since this sum has only [math]n[/math] summands, it is easy to compute this for moderate sized values of [math]n[/math]. For [math]n = 52[/math], we obtain the list of values given in Table.

| Number of Riffle Shuffles | Variation Distance |

| 1 | 1 |

| 2 | 1 |

| 3 | 1 |

| 4 | 0.9999995334 |

| 5 | 0.9237329294 |

| 6 | 0.6135495966 |

| 7 | 0.3340609995 |

| 8 | 0.1671586419 |

| 9 | 0.0854201934 |

| 10 | 0.0429455489 |

| 11 | 0.0215023760 |

| 12 | 0.0107548935 |

| 13 | 0.0053779101 |

| 14 | 0.0026890130 |

To help in understanding these data, they are shown in graphical form in Figure. The program VariationList produces the data shown in both Table and Figure. One sees that until 5 shuffles have occurred, the output of [math]X[/math] is very far from random. After 5 shuffles, the distance from the random process is essentially halved each time a shuffle occurs.

Given the distribution functions [math]f_X(\pi)[/math] and [math]u(\pi)[/math] as above, there is

another way to view the variation distance [math]\parallel f_X - u\parallel[/math]. Given any

event [math]T[/math] (which is a subset of

[math]S_n[/math]), we can calculate its probability under the process [math]X[/math] and under the uniform

process. For example, we can imagine that [math]T[/math] represents the set of all permutations

in which the first player in a 7-player poker game is dealt a straight flush (five

consecutive cards in the same suit). It is interesting to consider how much the

probability of this event after a certain number of shuffles differs from the

probability of this event if all permutations are equally likely. This difference can

be thought of as describing how close the process [math]X[/math] is to the random process with

respect to the event [math]T[/math].

Now consider the event [math]T[/math] such that the absolute value of the difference between

these two probabilities is as large as possible. It can be shown that this absolute

value is the variation distance between the process [math]X[/math] and the uniform process. (The

reader is asked to prove this fact in Exercise.)

We have just seen that, for a deck of 52 cards, the variation distance between

the 7-riffle shuffle process and the random process is about [math].334[/math]. It is of

interest to find an event [math]T[/math] such that the difference between the probabilities

that the two processes produce [math]T[/math] is close to

[math].334[/math]. An event with this property can be described in terms of the game called

New-Age Solitaire.

New-Age Solitaire

This game was invented by Peter Doyle. It is played with a standard 52-card deck. We deal the cards face up, one at a time, onto a discard pile. If an ace is encountered, say the ace of Hearts, we use it to start a Heart pile. Each suit pile must be built up in order, from ace to king, using only subsequently dealt cards. Once we have dealt all of the cards, we pick up the discard pile and continue. We define the Yin suits to be Hearts and Clubs, and the Yang suits to be Diamonds and Spades. The game ends when either both Yin suit piles have been completed, or both Yang suit piles have been completed. It is clear that if the ordering of the deck is produced by the random process, then the probability that the Yin suit piles are completed first is exactly 1/2.

Now suppose that we buy a new deck of cards, break the seal on the package, and

riffle shuffle the deck 7 times. If one tries this, one finds that the Yin suits win

about 75% of the time. This is 25% more than we would get if the deck were in truly

random order. This deviation is reasonably close to the theoretical maximum of

[math]33.4[/math]% obtained above.

Why do the Yin suits win so often? In a brand new deck of cards, the suits are

in the following order, from top to bottom: ace through king of Hearts, ace through

king of Clubs, king through ace of Diamonds, and king through ace of Spades. Note

that if the cards were not shuffled at all, then the Yin suit piles would be completed

on the first pass, before any Yang suit cards are even seen. If we were to continue

playing the game until the Yang suit piles are completed, it would take 13 passes

through the deck to do this. Thus, one can see that in a new deck, the Yin suits are

in the most advantageous order and the Yang suits are in the least advantageous

order. Under 7 riffle shuffles, the relative advantage of the Yin suits over the Yang

suits is preserved to a certain extent.

General references

Doyle, Peter G. (2006). "Grinstead and Snell's Introduction to Probability" (PDF). Retrieved June 6, 2024.