
The method of '''least squares''' is a standard approach in [[regression analysis|regression analysis]] to approximate the solution of [[overdetermined system|overdetermined system]]s (sets of equations in which there are more equations than unknowns) by minimizing the sum of the squares of the [[Residuals (statistics)|residuals]] (a residual being the difference between an observed value and the fitted value provided by a model) made in the results of each individual equation.

The most important application is in [[curve fitting|data fitting]]. When the problem has substantial uncertainties in the independent variable (the <math>X</math> variable), then simple regression and least-squares methods have problems; in such cases, the methodology required for fitting [[errors-in-variables models|errors-in-variables models]] may be considered instead of that for least squares.

Least-squares problems fall into two categories: linear or [[ordinary least squares|ordinary least squares]] and [[nonlinear least squares|nonlinear least squares]], depending on whether or not the residuals are linear in all unknowns. The linear least-squares problem occurs in statistical [[regression analysis|regression analysis]]; it has a closed-form solution. The nonlinear problem is usually solved by iterative refinement; at each iteration the system is approximated by a linear one, and thus the core calculation is similar in both cases.

The following discussion is mostly presented in terms of linear functions but the use of least squares is valid and practical for more general families of functions. Also, by iteratively applying local quadratic approximation to the likelihood (through the [[Fisher information|Fisher information]]), the least-squares method may be used to fit a [[generalized linear model|generalized linear model]].

The least-squares method was officially discovered and published by [[Adrien-Marie Legendre|Adrien-Marie Legendre]] (1805),<ref>Mansfield Merriman, "A List of Writings Relating to the Method of Least Squares"</ref> though it is usually also co-credited to [[Carl Friedrich Gauss|Carl Friedrich Gauss]] (1795)<ref name=brertscher>{{cite book |last=Bretscher |first=Otto |title=Linear Algebra With Applications |edition=3rd |publisher=Prentice Hall |year=1995 |location=Upper Saddle River, NJ}}</ref><ref>{{cite journal |first=Stephen M. |last=Stigler |year=1981 |title=Gauss and the Invention of Least Squares |journal=Ann. Stat. |volume=9 |issue=3 |pages=465–474 |doi=10.1214/aos/1176345451 |doi-access=free }}</ref> who contributed significant theoretical advances to the method and may have previously used it in his work.<ref>Britannica, "Least squares method"</ref><ref>Studies in the History of Probability and Statistics. XXIX: The Discovery of the Method of Least Squares, R. L. Plackett</ref>

==Problem statement==

The objective consists of adjusting the parameters of a model function to best fit a data set. A simple data set consists of <math>n</math> points (data pairs) <math>(x_i,y_i)\!</math>, <math>i=1,\ldots,n</math>, where <math>x_i</math> is an independent variable and <math>y_i</math> is a [[dependent variable|dependent variable]] whose value is found by observation. The model function has the form <math>f(x, \boldsymbol \beta)</math>, where <math>m</math> adjustable parameters are held in the vector <math>\boldsymbol \beta</math>. The goal is to find the parameter values for the model that "best" fits the data. The fit of a model to a data point is measured by its [[errors and residuals in statistics|residual]], defined as the difference between the observed value of the dependent variable and the value predicted by the model:

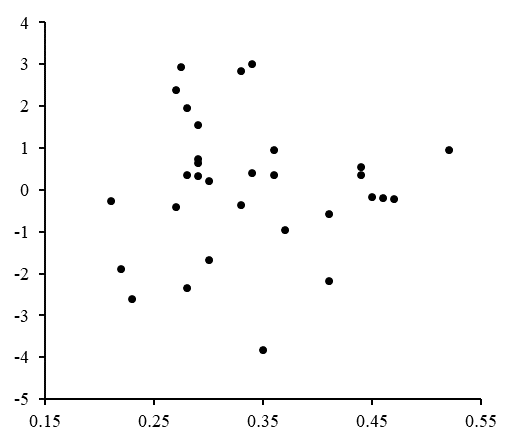

<math display="block">r_i = y_i - f(x_i, \boldsymbol \beta).</math>[[File:Linear Residual Plot Graph.png|thumb|The residuals are plotted against corresponding <math>x</math> values. The random fluctuations about <math>r_i=0</math> indicate a linear model is appropriate.|251x251px]]

The least-squares method finds the optimal parameter values by minimizing the [[sum of squared residuals|sum of squared residuals]], <math>S</math>:<ref name=":0" />

<math display="block">S=\sum_{i=1}^{n}r_i^2.</math>

An example of a model in two dimensions is that of the straight line. Denoting the y-intercept as <math>\beta_0</math> and the slope as <math>\beta_1</math>, the model function is given by <math>f(x,\boldsymbol \beta)=\beta_0+\beta_1 x</math>. See [[#Linear Least Squares #Example|linear least squares]] for a fully worked-out example of this model.
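The residual and sum-of-squares definitions above can be sketched for the straight-line model as follows. This is a minimal illustration, assuming NumPy is available; the data values and the function names <code>model</code> and <code>sum_of_squares</code> are hypothetical and chosen only for the example.

```python
import numpy as np

# Hypothetical data: five (x_i, y_i) pairs lying exactly on y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.0, 3.0, 5.0, 7.0, 9.0])

def model(x, beta):
    """Straight-line model f(x, beta) = beta_0 + beta_1 * x."""
    return beta[0] + beta[1] * x

def sum_of_squares(beta, x, y):
    """S = sum of squared residuals r_i = y_i - f(x_i, beta)."""
    r = y - model(x, beta)
    return float(np.sum(r ** 2))

# Parameters that reproduce the data give S = 0.
S_exact = sum_of_squares(np.array([1.0, 2.0]), x, y)
# Shifting the intercept by 1 makes every residual equal to 1, so S = 5.
S_off = sum_of_squares(np.array([0.0, 2.0]), x, y)
```

Any other choice of <math>\boldsymbol \beta</math> gives a larger <math>S</math> than the exact fit, which is what the least-squares criterion selects against.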

A data point may consist of more than one independent variable. For example, when fitting a plane to a set of height measurements, the plane is a function of two independent variables, <math>X</math> and ''z'', say. In the most general case there may be one or more independent variables and one or more dependent variables at each data point.

==Limitations==

This regression formulation considers only observational errors in the dependent variable (but the alternative [[total least squares|total least squares]] regression can account for errors in both variables). There are two rather different contexts with different implications:

*Regression for prediction. Here a model is fitted to provide a prediction rule for application in a situation similar to the one from which the fitting data were drawn. The dependent variables in such future applications would be subject to the same types of observation error as those in the data used for fitting. It is therefore logically consistent to use the least-squares prediction rule for such data.
*Regression for fitting a "true relationship". In standard [[regression analysis|regression analysis]] that leads to fitting by least squares there is an implicit assumption that errors in the independent variable are zero or strictly controlled so as to be negligible. When errors in the independent variable are non-negligible, [[Errors-in-variables models|models of measurement error]] can be used; such methods can lead to [[parameter estimation|parameter estimates]], [[hypothesis testing|hypothesis testing]] and [[confidence interval|confidence interval]]s that take into account the presence of observation errors in the independent variables.<ref>For a good introduction to error-in-variables, please see {{cite book |last=Fuller |first=W. A. |author-link=Wayne Fuller |title=Measurement Error Models |publisher=John Wiley & Sons |year=1987 |isbn=978-0-471-86187-4 }}</ref> An alternative approach is to fit a model by [[total least squares|total least squares]]; this can be viewed as taking a pragmatic approach to balancing the effects of the different sources of error in formulating an objective function for use in model-fitting.

==Linear Least Squares==

'''Linear least squares''' ('''LLS''') is the [[least squares approximation|least squares approximation]] of [[linear functions|linear functions]] to data.

It is a set of formulations for solving statistical problems involved in [[linear regression|linear regression]], including variants for [[#Ordinary Least Squares (OLS)|ordinary]] (unweighted), [[Weighted least squares|weighted]], and [[Generalized least squares|generalized]] (correlated) [[residuals (statistics)|residuals]].

===Main formulations===

The three main linear least squares formulations are:

{| class="table table-bordered"
|-
! Formulation !! Description
|-
| '''[[Ordinary least squares|Ordinary least squares]]''' (OLS) || Is the most common estimator. OLS estimates are commonly used to analyze both [[experiment|experiment]]al and [[observational study|observational]] data. The OLS method minimizes the sum of squared [[Errors and residuals in statistics|residuals]], and leads to a closed-form expression for the estimated value of the unknown parameter vector <math>\beta</math>:
<math display="block">
\hat{\boldsymbol\beta} = (\mathbf{X}^\mathsf{T}\mathbf{X})^{-1} \mathbf{X}^\mathsf{T} \mathbf{y},
</math> where <math>\mathbf{y}</math> is a vector whose <math>i</math>th element is the <math>i</math>th observation of the [[dependent variable|dependent variable]], and <math>\mathbf{X}</math> is a matrix whose <math>ij</math> element is the <math>i</math>th observation of the <math>j</math>th independent variable. The estimator is [[bias of an estimator|unbiased]] and [[consistent estimator|consistent]] if the errors have finite variance and are uncorrelated with the regressors:<ref>{{cite journal | last1=Lai | first1=T.L. | last2=Robbins | first2=H. | last3=Wei | first3=C.Z. | journal=[[Proceedings of the National Academy of Sciences|PNAS]] | year=1978 | volume=75 | title=Strong consistency of least squares estimates in multiple regression | issue=7 | pages=3034–3036 | doi= 10.1073/pnas.75.7.3034 | pmid=16592540 | jstor=68164 | bibcode=1978PNAS...75.3034L | pmc=392707 | doi-access=free }}</ref> <math display="block">
\operatorname{E}[\,\mathbf{x}_i\varepsilon_i\,] = 0,
</math> where <math>\mathbf{x}_i</math> is the transpose of row <math>i</math> of the matrix <math>\mathbf{X}.</math> It is also [[efficiency (statistics)|efficient]] under the assumption that the errors have finite variance and are [[Homoscedasticity|homoscedastic]], meaning that <math>\operatorname{E}[\varepsilon_i^2|x_i]</math> does not depend on <math>i</math>. The condition that the errors are uncorrelated with the regressors will generally be satisfied in an experiment, but in the case of observational data, it is difficult to exclude the possibility of an omitted covariate <math>z</math> that is related to both the observed covariates and the response variable. The existence of such a covariate will generally lead to a correlation between the regressors and the error term, and hence to an inconsistent estimator of <math>\beta</math>. The condition of homoscedasticity can fail with either experimental or observational data. If the goal is either inference or predictive modeling, the performance of OLS estimates can be poor if [[multicollinearity|multicollinearity]] is present, unless the sample size is large.

|-
| '''[[Weighted least squares|Weighted least squares]]''' (WLS) || Used when [[heteroscedasticity|heteroscedasticity]] is present in the error terms of the model.
|-
| '''[[Generalized least squares|Generalized least squares]]''' (GLS) || Is an extension of the OLS method that allows efficient estimation of <math>\beta</math> when either [[heteroscedasticity|heteroscedasticity]], or correlations, or both are present among the error terms of the model, as long as the form of heteroscedasticity and correlation is known independently of the data. To handle heteroscedasticity when the error terms are uncorrelated with each other, GLS minimizes a weighted analogue to the sum of squared residuals from OLS regression, where the weight for the <math>i</math><sup>th</sup> case is inversely proportional to <math>\operatorname{Var}(\varepsilon_i)</math>. This special case of GLS is called "weighted least squares". The GLS solution to an estimation problem is <math display="block">
\hat{\boldsymbol\beta} = (\mathbf{X}^\mathsf{T} \boldsymbol\Omega^{-1} \mathbf{X})^{-1}\mathbf{X}^\mathsf{T}\boldsymbol\Omega^{-1}\mathbf{y},
</math> where <math>\Omega</math> is the covariance matrix of the errors. GLS can be viewed as applying a linear transformation to the data so that the assumptions of OLS are met for the transformed data. For GLS to be applied, the covariance structure of the errors must be known up to a multiplicative constant.
|}
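The closed-form OLS and GLS expressions in the table can be sketched numerically as follows. This is an illustration only, assuming NumPy; the helper names <code>ols</code> and <code>gls</code>, the data, and the diagonal covariance matrix are hypothetical. Linear systems are solved directly instead of forming explicit inverses, which is a common numerical choice and not something the formulas themselves require.

```python
import numpy as np

def ols(X, y):
    """OLS estimate: solve (X^T X) beta = X^T y."""
    return np.linalg.solve(X.T @ X, X.T @ y)

def gls(X, y, omega):
    """GLS estimate: solve (X^T Omega^-1 X) beta = X^T Omega^-1 y."""
    omega_inv_X = np.linalg.solve(omega, X)
    omega_inv_y = np.linalg.solve(omega, y)
    return np.linalg.solve(X.T @ omega_inv_X, X.T @ omega_inv_y)

# Hypothetical data: intercept column plus one regressor.
X = np.column_stack([np.ones(4), np.array([0.0, 1.0, 2.0, 3.0])])
y = np.array([1.0, 2.9, 5.2, 7.1])

beta_ols = ols(X, y)

# With Omega equal to the identity, GLS reduces to OLS.
beta_gls_identity = gls(X, y, np.eye(4))

# Diagonal Omega (uncorrelated, heteroscedastic errors) is the WLS case.
omega = np.diag([1.0, 1.0, 4.0, 4.0])
beta_wls = gls(X, y, omega)

# Equivalent view: rescale each row by 1/sqrt(Var(eps_i)) and apply OLS.
s = np.sqrt(np.diag(omega))
beta_transformed = ols(X / s[:, None], y / s)
```

The last two estimates agree, which illustrates the remark that GLS amounts to a linear transformation of the data followed by OLS.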

===Properties===

If the experimental errors, <math>\varepsilon</math>, are uncorrelated, have a mean of zero and a constant variance, <math>\sigma^2</math>, the [[Gauss–Markov theorem|Gauss–Markov theorem]] states that the least-squares estimator, <math>\hat{\boldsymbol{\beta}}</math>, has the minimum variance of all unbiased estimators that are linear combinations of the observations. In this sense it is the best, or optimal, estimator of the parameters. Note particularly that this property is independent of the statistical [[Cumulative distribution function|distribution function]] of the errors. In other words, ''the distribution function of the errors need not be a [[normal distribution|normal distribution]]''. However, for some probability distributions, there is no guarantee that the least-squares solution is even possible given the observations; still, in such cases it is the best estimator that is both linear and unbiased.

For example, it is easy to show that the [[arithmetic mean|arithmetic mean]] of a set of measurements of a quantity is the least-squares estimator of the value of that quantity. If the conditions of the Gauss–Markov theorem apply, the arithmetic mean is optimal, whatever the distribution of errors of the measurements might be.
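The arithmetic-mean statement can be checked numerically: fitting a single constant to repeated measurements is a least-squares problem whose design matrix is one column of ones. The sketch below assumes NumPy, and the measurement values are hypothetical.

```python
import numpy as np

# Hypothetical repeated measurements of one quantity.
measurements = np.array([9.8, 10.1, 10.4, 9.9, 10.3])

# Model y_i = beta + error: the design matrix is a single column of ones.
X = np.ones((measurements.size, 1))

# Least-squares fit of the single parameter beta.
beta_hat, *_ = np.linalg.lstsq(X, measurements, rcond=None)

# The fitted constant coincides with the arithmetic mean.
mean_value = measurements.mean()
```

Here the normal equations reduce to <math>n\hat\beta = \sum_i y_i</math>, so the estimate is the sample mean.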

However, in the case that the experimental errors do belong to a normal distribution, the least-squares estimator is also a [[maximum likelihood|maximum likelihood]] estimator.<ref>{{cite book |title=The Mathematics of Physics and Chemistry |last=Margenau |first=Henry | author2=Murphy, George Moseley |year=1956 | publisher=Van Nostrand |location=Princeton |url=https://archive.org/details/mathematicsofphy0002marg| url-access=registration }}</ref>

These properties underpin the use of the method of least squares for all types of data fitting, even when the assumptions are not strictly valid.

===Limitations===

An assumption underlying the treatment given above is that the independent variable, <math>X</math>, is free of error. In practice, the errors on the measurements of the independent variable are usually much smaller than the errors on the dependent variable and can therefore be ignored. When this is not the case, [[total least squares|total least squares]] or more generally errors-in-variables models, or ''rigorous least squares'', should be used. This can be done by adjusting the weighting scheme to take into account errors on both the dependent and independent variables and then following the standard procedure.<ref name="pg">{{cite book |title=Data fitting in the Chemical Sciences |last=Gans |first=Peter |year=1992 |publisher=Wiley |location=New York |isbn=978-0-471-93412-7 }}</ref><ref>{{cite book |title=Statistical adjustment of Data |last=Deming |first=W. E. |year=1943 |publisher=Wiley | location=New York }}</ref>

In some cases the (weighted) normal equations matrix <math>X^{\mathsf{T}}X</math> is [[ill-conditioned|ill-conditioned]]. When fitting polynomials, the design matrix <math>X</math> is a [[Vandermonde matrix|Vandermonde matrix]], and Vandermonde matrices become increasingly ill-conditioned as the order of the matrix increases. In these cases, the least squares estimate amplifies the measurement noise and may be grossly inaccurate. Various [[regularization (mathematics)|regularization]] techniques can be applied in such cases, the most common of which is called [[Tikhonov regularization|ridge regression]]. If further information about the parameters is known, for example, a range of possible values of <math>\hat{\boldsymbol{\beta}}</math>, then various techniques can be used to increase the stability of the solution. For example, see [[#Constrained_linear_least_squares|constrained least squares]].
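The growth in ill-conditioning for polynomial fits, and the stabilizing effect of ridge regression, can be illustrated with a short sketch. It assumes NumPy; the sample points, the polynomial degrees, the penalty value <code>lam</code> and the helper name <code>ridge</code> are all hypothetical choices made for the example.

```python
import numpy as np

# Hypothetical sample points on [0, 1].
x = np.linspace(0.0, 1.0, 20)

# Condition number of the normal matrix V^T V for increasing degree,
# where V is the Vandermonde design matrix of the polynomial fit.
conds = {}
for degree in (2, 5, 8):
    V = np.vander(x, degree + 1, increasing=True)
    conds[degree] = np.linalg.cond(V.T @ V)

def ridge(X, y, lam):
    """Ridge estimate: solve (X^T X + lam * I) beta = X^T y."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)

# Adding lam * I to the normal matrix lowers its condition number.
V8 = np.vander(x, 9, increasing=True)
lam = 1e-3
cond_ridge = np.linalg.cond(V8.T @ V8 + lam * np.eye(9))
```

The penalty trades a small bias in the coefficients for a much better conditioned linear system; the appropriate value of <code>lam</code> depends on the problem and is not specified by the text above.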

Another drawback of the least squares estimator is the fact that the norm of the residuals, <math>\| \mathbf y - X\hat{\boldsymbol{\beta}} \|</math>, is minimized, whereas in some cases one is truly interested in obtaining small error in the parameter <math>\hat{\boldsymbol{\beta}}</math>, e.g., a small value of <math>\|{\boldsymbol{\beta}}-\hat{\boldsymbol{\beta}}\|</math>. However, since the true parameter <math>{\boldsymbol{\beta}}</math> is necessarily unknown, this quantity cannot be directly minimized. If a [[prior probability|prior probability]] on <math>\boldsymbol{\beta}</math> is known, then a [[Minimum mean square error|Bayes estimator]] can be used to minimize the [[mean squared error|mean squared error]], <math>E \left\{ \| {\boldsymbol{\beta}} - \hat{\boldsymbol{\beta}} \|^2 \right\} </math>. The least squares method is often applied when no prior is known. Surprisingly, when several parameters are being estimated jointly, better estimators can be constructed, an effect known as [[Stein's phenomenon|Stein's phenomenon]]. For example, if the measurement error is [[Normal distribution|Gaussian]], several estimators are known which [[dominating decision rule|dominate]], or outperform, the least squares technique; the best known of these is the [[James–Stein estimator|James–Stein estimator]]. This is an example of more general [[shrinkage estimator|shrinkage estimator]]s that have been applied to regression problems.

==Ordinary Least Squares (OLS)==

In [[statistics|statistics]], '''ordinary least squares''' ('''OLS''') is a type of [[linear least squares|linear least squares]] method for estimating the unknown [[statistical parameter|parameters]] in a [[linear regression|linear regression]] model. OLS chooses the parameters of a [[linear function|linear function]] of a set of [[explanatory variable|explanatory variable]]s by the principle of [[least squares|least squares]]: minimizing the sum of the squares of the differences between the observed [[dependent variable|dependent variable]] (values of the variable being observed) in the given [[dataset|dataset]] and those predicted by the linear function of the independent variable.

Geometrically, this is seen as the sum of the squared distances, parallel to the axis of the dependent variable, between each data point in the set and the corresponding point on the regression surface; the smaller the differences, the better the model fits the data. The resulting [[Statistical estimation|estimator]] can be expressed by a simple formula, especially in the case of a [[simple linear regression|simple linear regression]], in which there is a single [[regressor|regressor]] on the right side of the regression equation.

The OLS estimator is [[consistent estimator|consistent]] when the regressors are [[exogenous|exogenous]], and, by the [[Gauss–Markov theorem|Gauss–Markov theorem]], [[best linear unbiased estimator|optimal in the class of linear unbiased estimators]] when the [[statistical error|error]]s are [[homoscedastic|homoscedastic]] and [[autocorrelation|serially uncorrelated]]. Under these conditions, the method of OLS provides [[UMVU|minimum-variance mean-unbiased]] estimation when the errors have finite [[variance|variance]]s. Under the additional assumption that the errors are [[normal distribution|normally distributed]], OLS is the [[maximum likelihood estimator|maximum likelihood estimator]].

=== Linear model ===

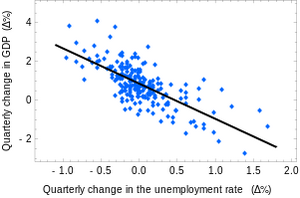

[[File:Okuns law quarterly differences.svg|300px|thumb|[[Okun's law|Okun's law]] in [[macroeconomics|macroeconomics]] states that in an economy the [[GDP|GDP]] growth should depend linearly on the changes in the unemployment rate. Here the ordinary least squares method is used to construct the regression line describing this law.]]

Suppose the data consists of <math>n</math> [[statistical unit|observations]] <math>\left\{\mathbf{x}_i, y_i\right\}_{i=1}^n</math>. Each observation <math>i</math> includes a scalar response <math>y_i</math> and a column vector <math>\mathbf{x}_i</math> of <math>p</math> parameters (regressors), i.e., <math>\mathbf{x}_i=\left[x_{i1}, x_{i2}, \dots, x_{ip}\right]^\mathsf{T}</math>. In a [[linear regression model|linear regression model]], the response variable, <math>y_i</math>, is a linear function of the regressors:

<math display="block">y_i = \beta_1\ x_{i1} + \beta_2\ x_{i2} + \cdots + \beta_p\ x_{ip} + \varepsilon_i,</math>

or in [[row and column vectors|vector]] form,

<math display = "block"> y_i = \mathbf{x}_i^\mathsf{T} \boldsymbol{\beta} + \varepsilon_i, \, </math>

where <math>\mathbf{x}_i</math>, as introduced previously, is a column vector of the <math>i</math>-th observation of all the explanatory variables; <math>\boldsymbol{\beta}</math> is a <math>p \times 1</math> vector of unknown parameters; and the scalar <math>\varepsilon_i</math> represents unobserved random variables ([[errors and residuals in statistics|errors]]) of the <math>i</math>-th observation. <math>\varepsilon_i</math> accounts for the influences upon the responses <math>y_i</math> from sources other than the explanators <math>\mathbf{x}_i</math>. This model can also be written in matrix notation as

<math display = "block"> \mathbf{y} = \mathrm{X} \boldsymbol{\beta} + \boldsymbol{\varepsilon}, \, </math>

where <math>\mathbf{y}</math> and <math>\boldsymbol{\varepsilon}</math> are <math>n \times 1</math> vectors of the response variables and the errors of the <math> n </math> observations, and <math>\mathrm{X}</math> is an <math>n \times p</math> matrix of regressors, also sometimes called the [[design matrix|design matrix]], whose row <math>i</math> is <math>\mathbf{x}_i^\mathsf{T}</math> and contains the <math>i</math>-th observations on all the explanatory variables.

As a rule, the constant term is always included in the set of regressors <math>\mathrm{X}</math>, say, by taking <math>x_{i1}=1</math> for all <math>i=1, \dots, n</math>. The coefficient <math>\beta_1</math> corresponding to this regressor is called the ''intercept''.
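The construction of the design matrix with a leading column of ones can be sketched as follows. The example assumes NumPy; the raw regressor values and the coefficient vector are hypothetical and serve only to show the shapes involved.

```python
import numpy as np

# Hypothetical raw data: n = 4 observations of 2 explanatory variables.
raw = np.array([
    [2.0, 1.0],
    [3.0, 5.0],
    [4.0, 2.0],
    [5.0, 7.0],
])

# Prepend the constant regressor x_{i1} = 1, giving an n x p matrix with p = 3.
X = np.column_stack([np.ones(raw.shape[0]), raw])

# Hypothetical coefficients: beta[0] plays the role of the intercept beta_1.
beta = np.array([1.0, 0.5, -2.0])

# Noise-free responses y = X beta (the error term is omitted here).
y_noise_free = X @ beta
```

Row <math>i</math> of <code>X</code> is <math>\mathbf{x}_i^\mathsf{T}</math>, so the matrix product evaluates all <math>n</math> linear equations at once.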

====Matrix/vector formulation====

Consider an [[overdetermined system|overdetermined system]]

<math display="block">\sum_{j=1}^{p} X_{ij} \beta_j = y_i,\ (i=1, 2, \dots, n),</math>

of <math> n </math> [[linear equation|linear equation]]s in <math>p</math> unknown [[coefficients|coefficients]], <math> \beta_1, \beta_2, \dots, \beta_p </math>, with <math> n > p </math>. (Note: for a linear model as above, not all elements in <math> \mathrm{X} </math> contain information on the data points. The first column is populated with ones, <math>X_{i1} = 1</math>. Only the other columns contain actual data. So here <math>p</math> is equal to the number of regressors plus one.) This can be written in [[matrix (mathematics)|matrix]] form as

<math display="block">\mathrm{X} \boldsymbol{\beta} = \mathbf {y},</math>

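where

<math display="block">\mathrm{X}=\begin{bmatrix}
X_{11} & X_{12} & \cdots & X_{1p} \\
X_{21} & X_{22} & \cdots & X_{2p} \\
\vdots & \vdots & \ddots & \vdots \\
X_{n1} & X_{n2} & \cdots & X_{np}
\end{bmatrix} , \qquad
\boldsymbol \beta = \begin{bmatrix} \beta_1 \\ \beta_2 \\ \vdots \\ \beta_p \end{bmatrix} , \qquad
\mathbf y = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n
\end{bmatrix}. </math>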

Such a system usually has no exact solution, so the goal is instead to find the coefficients <math>\boldsymbol{\beta}</math> which fit the equations "best", in the sense of solving the quadratic minimization problem

<math display="block">\hat{\boldsymbol{\beta}} = \underset{\boldsymbol{\beta}}{\operatorname{arg\,min}}\,S(\boldsymbol{\beta}), </math>

where the objective function <math>S</math> is given by

<math display="block">S(\boldsymbol{\beta}) = \sum_{i=1}^n \biggl| y_i - \sum_{j=1}^p X_{ij}\beta_j\biggr|^2 = \bigl\|\mathbf y - \mathrm{X} \boldsymbol \beta \bigr\|^2.</math>

A justification for choosing this criterion is given in [[ | A justification for choosing this criterion is given in [[#Properties|Properties]] below. This minimization problem has a unique solution, provided that the <math>p</math> columns of the matrix <math> \mathrm{X} </math> are [[linearly independent|linearly independent]], given by solving the so-called ''normal equations'': | ||

<math display="block">(\mathrm{X}^{\mathsf T} \mathrm{X} )\hat{\boldsymbol{\beta}} = \mathrm{X}^{\mathsf T} \mathbf y\ .</math> | <math display="block">(\mathrm{X}^{\mathsf T} \mathrm{X} )\hat{\boldsymbol{\beta}} = \mathrm{X}^{\mathsf T} \mathbf y\ .</math> | ||

The matrix <math>\mathrm{X}^{\mathsf T} \mathrm X</math> is known as the ''normal matrix'' or [[ | The matrix <math>\mathrm{X}^{\mathsf T} \mathrm X</math> is known as the ''normal matrix'' or [[Gram matrix|Gram matrix]] and the matrix <math>\mathrm{X}^{\mathsf T} \mathbf y</math> is known as the [[moment matrix|moment matrix]] of regressand by regressors.<ref>{{cite book |first=Arthur S. |last=Goldberger |author-link=Arthur Goldberger |chapter=Classical Linear Regression |title=Econometric Theory |location=New York |publisher=John Wiley & Sons |year=1964 |isbn=0-471-31101-4 |pages=[https://archive.org/details/econometrictheor0000gold/page/158 158] |chapter-url=https://books.google.com/books?id=KZq5AAAAIAAJ&pg=PA156 |url=https://archive.org/details/econometrictheor0000gold/page/158 }}</ref> Finally, <math>\hat{\boldsymbol{\beta}}</math> is the coefficient vector of the least-squares [[hyperplane|hyperplane]], expressed as | ||

<math display="block">\hat{\boldsymbol{\beta}}= \left( \mathrm{X}^{\mathsf T} \mathrm{X} \right)^{-1} \mathrm{X}^{\mathsf T} \mathbf y.</math> | <math display="block">\hat{\boldsymbol{\beta}}= \left( \mathrm{X}^{\mathsf T} \mathrm{X} \right)^{-1} \mathrm{X}^{\mathsf T} \mathbf y.</math> | ||
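The closed-form solution above can be checked numerically. The following is a minimal sketch, assuming NumPy and synthetic data; the names <code>beta_true</code>, <code>beta_normal</code> and <code>beta_lstsq</code> are illustrative and do not come from the article. It solves the normal equations directly and compares the result with NumPy's general least-squares routine.

```python
import numpy as np

# Synthetic data (an assumption for illustration): n observations, p regressors.
rng = np.random.default_rng(0)
n, p = 50, 3
X = rng.normal(size=(n, p))
beta_true = np.array([1.0, -2.0, 0.5])
y = X @ beta_true + 0.1 * rng.normal(size=n)

# Solve the normal equations (X^T X) beta = X^T y without forming an explicit inverse.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Reference solution from NumPy's least-squares solver.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# The gradient of S(beta) vanishes at the solution: X^T (y - X beta) = 0.
gradient = X.T @ (y - X @ beta_normal)
```

In practice, solvers based on QR or singular value decomposition (as used by <code>np.linalg.lstsq</code>) are usually preferred over forming <math>\mathrm{X}^{\mathsf T} \mathrm{X}</math> explicitly, because forming the normal matrix squares the condition number of the problem.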

| Line 171: | Line 152: | ||

== Estimation == | == Estimation == | ||

Suppose <math>b</math> is a "candidate" value for the parameter vector <math>\beta</math>. The quantity <math>y_i-x_i^{\mathsf{T}}b</math>, called the '''[[ | Suppose <math>b</math> is a "candidate" value for the parameter vector <math>\beta</math>. The quantity <math>y_i-x_i^{\mathsf{T}}b</math>, called the '''[[errors and residuals in statistics|residual]]''' for the <math>i</math>-th observation, measures the vertical distance between the data point <math>(x_i,y_i)</math> and the hyperplane <math>y=x^{\mathsf{T}}b</math>, and thus assesses the degree of fit between the actual data and the model. The '''sum of squared residuals''' ('''SSR''') (also called the '''error sum of squares''' ('''ESS''') or '''residual sum of squares''' ('''RSS'''))<ref>{{cite book |last=Hayashi |first=Fumio |author-link=Fumio Hayashi |title=Econometrics |publisher=Princeton University Press |year=2000 |page=15 }}</ref> is a measure of the overall model fit: | ||

<math display = "block"> | <math display = "block"> | ||

S(b) = \sum_{i=1}^n (y_i - x_i ^\mathrm{T} b)^2 = (y-Xb)^\mathrm{T}(y-Xb), | S(b) = \sum_{i=1}^n (y_i - x_i ^\mathrm{T} b)^2 = (y-Xb)^\mathrm{T}(y-Xb), | ||

</math> | </math> | ||

where <math>\mathsf{T}</math> denotes the matrix [[ | where <math>\mathsf{T}</math> denotes the matrix [[transpose|transpose]], and the rows of <math>X</math>, denoting the values of all the independent variables associated with a particular value of the dependent variable, are ''X<sub>i</sub> = x<sub>i</sub>''<sup>T</sup>. The value of ''b'' which minimizes this sum is called the '''OLS estimator for <math>\beta</math>'''. The function <math>S(b)</math> is quadratic in <math>b</math> with positive-definite [[Hessian matrix|Hessian]], and therefore this function possesses a unique global minimum at <math>b =\hat\beta</math>, which can be given by the explicit formula:<ref>{{harvtxt|Hayashi|2000|loc=page 18}}</ref> | ||

<math display = "block"> | <math display = "block"> | ||

| Line 181: | Line 162: | ||

</math> | </math> | ||

The product <math>N=X^{\mathsf{T}}X</math> is a [[ | The product <math>N=X^{\mathsf{T}}X</math> is a [[Gram matrix|Gram matrix]] and its inverse, <math>Q=N^{-1}</math>, is the ''cofactor matrix'' of <math>\beta</math>,<ref>{{Cite book|url=https://books.google.com/books?id=hZ4mAOXVowoC&pg=PA160|title=Adjustment Computations: Spatial Data Analysis|isbn=9780471697282|last1=Ghilani|first1=Charles D.|last2=Paul r. Wolf|first2=Ph. D.|date=12 June 2006}}</ref><ref>{{Cite book|url=https://books.google.com/books?id=Np7y43HU_m8C&pg=PA263|title=GNSS – Global Navigation Satellite Systems: GPS, GLONASS, Galileo, and more|isbn=9783211730171|last1=Hofmann-Wellenhof|first1=Bernhard|last2=Lichtenegger|first2=Herbert|last3=Wasle|first3=Elmar|date=20 November 2007}}</ref><ref>{{Cite book|url=https://books.google.com/books?id=peYFZ69HqEsC&pg=PA134|title=GPS: Theory, Algorithms and Applications|isbn=9783540727156|last1=Xu|first1=Guochang|date=5 October 2007}}</ref> closely related to its [[#Covariance matrix|covariance matrix]], <math>C_{\beta}</math>. | ||

The matrix <math display = "block">(X^{\mathsf{T}}X)^{-1}X^{\mathsf{T}}=QX^{\mathsf{T}}</math> is called the [[ | The matrix <math display = "block">(X^{\mathsf{T}}X)^{-1}X^{\mathsf{T}}=QX^{\mathsf{T}}</math> is called the [[Moore–Penrose pseudoinverse|Moore–Penrose pseudoinverse]] matrix of <math>X</math>. This formulation highlights the point that estimation can be carried out if, and only if, there is no perfect [[multicollinearity|multicollinearity]] between the explanatory variables (which would cause the Gram matrix to have no inverse). | ||
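The relationship between the pseudoinverse and multicollinearity can be illustrated with a short sketch, assuming NumPy and a randomly generated design matrix (the variable names <code>X_plus</code>, <code>X_bad</code> and <code>rank_bad</code> are hypothetical). When <math>X</math> has full column rank, <math>QX^{\mathsf{T}}</math> agrees with the pseudoinverse computed by <code>np.linalg.pinv</code>; appending a column that is an exact linear combination of others makes the design rank-deficient, so the Gram matrix becomes singular.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(20, 4))          # full column rank almost surely

# Cofactor matrix Q = (X^T X)^{-1} and the matrix Q X^T from the text.
Q = np.linalg.inv(X.T @ X)
X_plus = Q @ X.T

# NumPy's SVD-based Moore-Penrose pseudoinverse, for comparison.
X_pinv = np.linalg.pinv(X)

# Perfect multicollinearity: the new column is the sum of the first two columns.
X_bad = np.column_stack([X, X[:, 0] + X[:, 1]])
rank_bad = np.linalg.matrix_rank(X_bad)   # smaller than the number of columns
```

Here <code>X_bad</code> has five columns but rank four, so <math>X^{\mathsf{T}}X</math> for that design has no inverse and the explicit formula cannot be applied.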

After we have estimated <math>\beta</math>, the '''fitted values''' (or '''predicted values''') from the regression will be | After we have estimated <math>\beta</math>, the '''fitted values''' (or '''predicted values''') from the regression will be | ||

| Line 188: | Line 169: | ||

\hat{y} = X\hat\beta = Py, | \hat{y} = X\hat\beta = Py, | ||

</math> | </math> | ||

where <math display = "block">P=X(X^{\mathsf{T}}X)^{-1}X^{\mathsf{T}}</math> is the [[ | where <math display = "block">P=X(X^{\mathsf{T}}X)^{-1}X^{\mathsf{T}}</math> is the [[projection matrix|projection matrix]] onto the space <math>V</math> spanned by the columns of <math>X</math>. This matrix <math>P</math> is also sometimes called the [[hat matrix|hat matrix]] because it "puts a hat" onto the variable <math>y</math>. Another matrix, closely related to <math>P</math>, is the ''annihilator'' matrix <math>M=I_n-P</math>; this is a projection matrix onto the space orthogonal to <math>V</math>. Both matrices <math>P</math> and <math>M</math> are [[symmetric matrix|symmetric]] and [[idempotent matrix|idempotent]] (meaning that <math>P^2 = P</math> and <math>M^2=M</math>), and relate to the data matrix <math>X</math> via the identities <math>PX=X</math> and <math>MX=0</math>.<ref name="Hayashi 2000 loc=page 19">{{harvtxt|Hayashi|2000|loc=page 19}}</ref> The matrix <math>M</math> creates the '''residuals''' from the regression: | ||

<math display = "block"> | <math display = "block"> | ||

\hat\varepsilon = y - \hat y = y - X\hat\beta = My = M(X\beta+\varepsilon) = (MX)\beta + M\varepsilon = M\varepsilon. | \hat\varepsilon = y - \hat y = y - X\hat\beta = My = M(X\beta+\varepsilon) = (MX)\beta + M\varepsilon = M\varepsilon. | ||

</math> | </math> | ||
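The stated properties of <math>P</math> and <math>M</math> can be verified numerically. The sketch below assumes NumPy and small random data; it builds both matrices explicitly, which is reasonable only for small <math>n</math>, since they are <math>n\times n</math>.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p = 12, 3
X = rng.normal(size=(n, p))
y = rng.normal(size=n)

# Projection ("hat") matrix P and annihilator matrix M = I_n - P.
P = X @ np.linalg.inv(X.T @ X) @ X.T
M = np.eye(n) - P

y_hat = P @ y      # fitted values
resid = M @ y      # residuals

# The trace of a projection matrix equals the dimension of the space it projects onto.
trace_P = np.trace(P)
```

The fitted values and residuals decompose <math>y</math> exactly, <math>y = Py + My</math>, and the trace of <math>P</math> equals <math>p</math>, the dimension of the column space of <math>X</math>.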

Using these residuals we can estimate the value of <math>\sigma^2</math> using the '''[[ | Using these residuals we can estimate the value of <math>\sigma^2</math> using the '''[[reduced chi-squared|reduced chi-squared]]''' statistic: | ||

<math display = "block"> | <math display = "block"> | ||

s^2 = \frac{\hat\varepsilon ^\mathrm{T} \hat\varepsilon}{n-p} = \frac{(My)^\mathrm{T} My}{n-p} = \frac{y^\mathrm{T} M^\mathrm{T}My}{n-p}= \frac{y ^\mathrm{T} My}{n-p} = \frac{S(\hat\beta)}{n-p},\qquad | s^2 = \frac{\hat\varepsilon ^\mathrm{T} \hat\varepsilon}{n-p} = \frac{(My)^\mathrm{T} My}{n-p} = \frac{y^\mathrm{T} M^\mathrm{T}My}{n-p}= \frac{y ^\mathrm{T} My}{n-p} = \frac{S(\hat\beta)}{n-p},\qquad | ||

\hat\sigma^2 = \frac{n-p}{n}\;s^2 | \hat\sigma^2 = \frac{n-p}{n}\;s^2 | ||

</math> | </math> | ||

The denominator, <math>n-p</math>, is the [[ | The denominator, <math>n-p</math>, is the [[Degrees of freedom (statistics)|statistical degrees of freedom]]. The first quantity, <math>s^2</math>, is the OLS estimate for <math>\sigma^2</math>, whereas the second, <math style="vertical-align:0">\scriptstyle\hat\sigma^2</math>, is the maximum likelihood estimate (MLE) for <math>\sigma^2</math>. The two estimators are quite similar in large samples; the first estimator is always [[estimator bias|unbiased]], while the second estimator is biased but has a smaller [[mean squared error|mean squared error]]. In practice <math>s^2</math> is used more often, since it is more convenient for hypothesis testing. The square root of <math>s^2</math> is called the '''regression standard error''',<ref>[https://cran.r-project.org/doc/contrib/Faraway-PRA.pdf Julian Faraway (2000), ''Practical Regression and Anova using R'']</ref> '''standard error of the regression''',<ref>{{cite book |last1=Kenney |first1=J. |last2=Keeping |first2=E. S. |year=1963 |title=Mathematics of Statistics |publisher=van Nostrand |page=187 }}</ref><ref>{{cite book |last=Zwillinger |first=D. |year=1995 |title=Standard Mathematical Tables and Formulae |publisher=Chapman&Hall/CRC |isbn=0-8493-2479-3 |page=626 }}</ref> or '''standard error of the equation'''.<ref name="Hayashi 2000 loc=page 19"/> | ||
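The two variance estimates differ only in their denominators, as the following sketch shows. It assumes NumPy and simulated data with a known error standard deviation (<code>sigma = 0.5</code> is an arbitrary choice for illustration, as are the coefficient values).

```python
import numpy as np

rng = np.random.default_rng(3)
n, p, sigma = 200, 3, 0.5

# Design matrix with an intercept column and two random regressors.
X = np.column_stack([np.ones(n), rng.normal(size=(n, p - 1))])
beta = np.array([1.0, 2.0, -1.0])
y = X @ beta + sigma * rng.normal(size=n)

beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
resid = y - X @ beta_hat
rss = resid @ resid               # S(beta_hat), the residual sum of squares

s2 = rss / (n - p)                # unbiased OLS estimate of sigma^2
sigma2_mle = rss / n              # maximum likelihood estimate of sigma^2
se_regression = np.sqrt(s2)       # regression standard error
```

Because <math>n-p < n</math>, the maximum likelihood estimate is always slightly smaller than <math>s^2</math>; the gap shrinks as <math>n</math> grows relative to <math>p</math>.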

It is common to assess the goodness-of-fit of the OLS regression by comparing how much the initial variation in the sample can be reduced by regressing onto <math>X</math>. The '''[[ | It is common to assess the goodness-of-fit of the OLS regression by comparing how much the initial variation in the sample can be reduced by regressing onto <math>X</math>. The '''[[coefficient of determination|coefficient of determination]]''' <math>R^2</math> is defined as a ratio of "explained" variance to the "total" variance of the dependent variable <math>y</math>, in the cases where the regression sum of squares equals the sum of squares of residuals:<ref>{{harvtxt|Hayashi|2000|loc=page 20}}</ref> | ||

<math display = "block"> | <math display = "block"> | ||

R^2 = \frac{\sum(\hat y_i-\overline{y})^2}{\sum(y_i-\overline{y})^2} = \frac{y ^\mathrm{T} P ^\mathrm{T} LPy}{y ^\mathrm{T} Ly} = 1 - \frac{y ^\mathrm{T} My}{y ^\mathrm{T} Ly} = 1 - \frac{\rm RSS}{\rm TSS} | R^2 = \frac{\sum(\hat y_i-\overline{y})^2}{\sum(y_i-\overline{y})^2} = \frac{y ^\mathrm{T} P ^\mathrm{T} LPy}{y ^\mathrm{T} Ly} = 1 - \frac{y ^\mathrm{T} My}{y ^\mathrm{T} Ly} = 1 - \frac{\rm RSS}{\rm TSS} | ||

</math> | </math> | ||

where TSS is the '''[[ | where TSS is the '''[[total sum of squares|total sum of squares]]''' for the dependent variable, <math display="inline">L=I_n-\frac{1}{n}J_n</math>, and <math display="inline">J_n</math> is an <math>n\times n</math> matrix of ones. (<math>L</math> is a [[centering matrix|centering matrix]] which is equivalent to regression on a constant; it simply subtracts the mean from a variable.) In order for <math>R^2</math> to be meaningful, the matrix <math>X</math> of data on regressors must contain a column vector of ones to represent the constant whose coefficient is the regression intercept. In that case, <math>R^2</math> will always be a number between 0 and 1, with values close to 1 indicating a good degree of fit. | ||
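As a sketch of how the two expressions for <math>R^2</math> agree when the regression includes an intercept, the following example (assuming NumPy and simulated data; the coefficients 2 and 3 are arbitrary) computes the explained-variance ratio and the <math>1 - \mathrm{RSS}/\mathrm{TSS}</math> form separately, using the centering matrix <math>L</math> for the total sum of squares.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 100
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])      # column of ones gives the intercept
y = 2.0 + 3.0 * x + rng.normal(size=n)

beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat = X @ beta_hat

# Centering matrix L = I_n - (1/n) J_n subtracts the sample mean.
L = np.eye(n) - np.ones((n, n)) / n

tss = y @ L @ y                            # total sum of squares
rss = np.sum((y - y_hat) ** 2)             # residual sum of squares
ess = np.sum((y_hat - y.mean()) ** 2)      # explained sum of squares

r2_explained = ess / tss
r2_residual = 1.0 - rss / tss
```

Without the column of ones the identity TSS = ESS + RSS generally fails, and the two expressions can differ.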

The variance in the prediction of the dependent variable as a function of the independent variable is given in the article [[ | The variance in the prediction of the dependent variable as a function of the independent variable is given in the article [[Polynomial least squares|Polynomial least squares]]. | ||

=== Simple linear regression model === | === Simple linear regression model === | ||

| Line 224: | Line 205: | ||

=== Alternative derivations === | === Alternative derivations === | ||

In the previous section the least squares estimator <math>\hat\beta</math> was obtained as a value that minimizes the sum of squared residuals of the model. However it is also possible to derive the same estimator from other approaches. In all cases the formula for OLS estimator remains the same: <math>\hat{\beta}=(X^{\mathsf{T}}X)^{-1}X^{\mathsf{T}}y</math>; the only difference is in how we interpret this result. | In the previous section the least squares estimator <math>\hat\beta</math> was obtained as a value that minimizes the sum of squared residuals of the model. However it is also possible to derive the same estimator from other approaches. In all cases the formula for OLS estimator remains the same: <math display = "block">\hat{\beta}=(X^{\mathsf{T}}X)^{-1}X^{\mathsf{T}}y</math>; the only difference is in how we interpret this result. | ||

==== Projection ==== | ==== Projection ==== | ||

| Line 233: | Line 214: | ||

\hat\beta = {\rm arg}\min_\beta\,\lVert y - X\beta \rVert, | \hat\beta = {\rm arg}\min_\beta\,\lVert y - X\beta \rVert, | ||

</math> | </math> | ||

where <math>||\cdot||</math> is the standard [[ | where <math>||\cdot||</math> is the standard [[Norm (mathematics)#Euclidean norm|''L''<sup>2</sup> norm]] in the <math>n</math>-dimensional [[Euclidean space|Euclidean space]] <math>\mathbb{R}^n</math>. The predicted quantity <math>X\beta</math> is just a certain linear combination of the vectors of regressors. Thus, the residual vector <math>y-X\beta</math> will have the smallest length when <math>y</math> is [[projection (linear algebra)|projected orthogonally]] onto the [[linear subspace|linear subspace]] [[linear span|spanned]] by the columns of <math>X</math>. The OLS estimator <math>\hat\beta</math> in this case can be interpreted as the coefficients of [[vector decomposition|vector decomposition]] of <math>\hat{y}=Py</math> along the basis of <math>X</math>. | ||

In other words, the gradient equations at the minimum can be written as: | In other words, the gradient equations at the minimum can be written as: | ||

| Line 239: | Line 220: | ||

<math display="block">(\mathbf y - X \hat{\boldsymbol{\beta}})^{\rm T} X=0.</math> | <math display="block">(\mathbf y - X \hat{\boldsymbol{\beta}})^{\rm T} X=0.</math> | ||

A geometrical interpretation of these equations is that the vector of residuals, <math>\mathbf y - X \hat{\boldsymbol{\beta}}</math>, is orthogonal to the [[ | A geometrical interpretation of these equations is that the vector of residuals, <math>\mathbf y - X \hat{\boldsymbol{\beta}}</math>, is orthogonal to the [[column space|column space]] of <math>X</math>, since the dot product <math>(\mathbf y-X\hat{\boldsymbol{\beta}})\cdot X \mathbf v</math> is equal to zero for ''any'' conformable vector, <math>\mathbf{v}</math>. This means that <math>\mathbf y - X \boldsymbol{\hat \beta}</math> is the shortest of all possible vectors <math>\mathbf{y}- X \boldsymbol \beta</math>, that is, the variance of the residuals is the minimum possible. This is illustrated at the right. | ||
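The orthogonality argument can be sketched numerically, assuming NumPy and random data (the perturbation <code>d</code> and the vector <code>v</code> are arbitrary illustrative choices). Because the residual vector is orthogonal to every vector <math>X\mathbf{v}</math>, moving away from <math>\hat{\boldsymbol\beta}</math> by any <math>\mathbf d</math> increases the squared residual length by exactly <math>\|X\mathbf d\|^2</math>, which is the Pythagorean theorem applied to the projection.

```python
import numpy as np

rng = np.random.default_rng(5)
n, p = 40, 3
X = rng.normal(size=(n, p))
y = rng.normal(size=n)

beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
r = y - X @ beta_hat                     # residual vector at the minimum

# Orthogonality to an arbitrary vector X v in the column space of X.
v = rng.normal(size=p)
dot_with_column_space = r @ (X @ v)

# Residual at a perturbed coefficient vector beta_hat + d.
d = rng.normal(size=p)
r_perturbed = y - X @ (beta_hat + d)

lhs = r_perturbed @ r_perturbed          # squared length of the perturbed residual
rhs = r @ r + (X @ d) @ (X @ d)          # ||r||^2 + ||X d||^2
```

Since <math>\|X\mathbf d\|^2 > 0</math> for every nonzero <math>\mathbf d</math> when <math>X</math> has full column rank, the minimizer is unique.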

Introducing <math>\hat{\boldsymbol{\gamma}}</math> and a matrix <math>K</math> with the assumption that a matrix <math>[X \ K]</math> is non-singular and <math>K^{\mathsf{T}}X=0</math> (cf. [[ | Introducing <math>\hat{\boldsymbol{\gamma}}</math> and a matrix <math>K</math> with the assumption that a matrix <math>[X \ K]</math> is non-singular and <math>K^{\mathsf{T}}X=0</math> (cf. [[Linear projection#Orthogonal projections|Orthogonal projections]]), the residual vector should satisfy the following equation: | ||

<math display="block">\hat{\mathbf{r}} \triangleq \mathbf{y} - X \hat{\boldsymbol{\beta}} = K \hat{{\boldsymbol{\gamma}}}.</math> | <math display="block">\hat{\mathbf{r}} \triangleq \mathbf{y} - X \hat{\boldsymbol{\beta}} = K \hat{{\boldsymbol{\gamma}}}.</math> | ||

The equation and solution of linear least squares are thus described as follows: | The equation and solution of linear least squares are thus described as follows: | ||

| Line 250: | Line 231: | ||

==== Maximum likelihood ==== | ==== Maximum likelihood ==== | ||

The OLS estimator is identical to the [[ | The OLS estimator is identical to the [[maximum likelihood estimator|maximum likelihood estimator]] (MLE) under the normality assumption for the error terms.<ref>{{harvtxt|Hayashi|2000|loc=page 49}}</ref> From the properties of MLE, we can infer that the OLS estimator is asymptotically efficient (in the sense of attaining the [[Cramér–Rao bound|Cramér–Rao bound]] for variance) if the normality assumption is satisfied.<ref name="Hayashi 2000 loc=page 52">{{harvtxt|Hayashi|2000|loc=page 52}}</ref> | ||

=== Properties === | === Properties === | ||

| Line 256: | Line 237: | ||

==== Assumptions ==== | ==== Assumptions ==== | ||

There are several different frameworks in which the [[ | There are several different frameworks in which the [[linear regression model|linear regression model]] can be cast in order to make the OLS technique applicable. Each of these settings produces the same formulas and same results. The only difference is the interpretation and the assumptions which have to be imposed in order for the method to give meaningful results. The choice of the applicable framework depends mostly on the nature of data in hand, and on the inference task which has to be performed. | ||

One of the lines of difference in interpretation is whether to treat the regressors as random variables, or as predefined constants. In the first case ('''random design''') the regressors <math>x_i</math> are random and sampled together with the <math>y_i</math>'s from some [[ | One of the lines of difference in interpretation is whether to treat the regressors as random variables, or as predefined constants. In the first case ('''random design''') the regressors <math>x_i</math> are random and sampled together with the <math>y_i</math>'s from some [[statistical population|population]], as in an [[observational study|observational study]]. This approach allows for more natural study of the [[asymptotic theory (statistics)|asymptotic properties]] of the estimators. In the other interpretation ('''fixed design'''), the regressors <math>X</math> are treated as known constants set by a [[design of experiments|design]], and <math>y</math> is sampled conditionally on the values of <math>X</math> as in an [[experiment|experiment]]. For practical purposes, this distinction is often unimportant, since estimation and inference are carried out while conditioning on <math>X</math>. All results stated in this article are within the random design framework. | ||

===== Classical linear regression model ===== | ===== Classical linear regression model ===== | ||

The classical model focuses on the "finite sample" estimation and inference, meaning that the number of observations ''n'' is fixed. This contrasts with the other approaches, which study the [[ | The classical model focuses on the "finite sample" estimation and inference, meaning that the number of observations ''n'' is fixed. This contrasts with the other approaches, which study the [[asymptotic theory (statistics)|asymptotic behavior]] of OLS, and in which the number of observations is allowed to grow to infinity. | ||

{| class="table" | {| class="table table-bordered" | ||

|- | |- | ||

! Condition !! Description | ! Condition !! Description | ||

| Line 269: | Line 250: | ||

| Correct specification|| The linear functional form must coincide with the form of the actual data-generating process. | | Correct specification|| The linear functional form must coincide with the form of the actual data-generating process. | ||

|- | |- | ||

| Strict exogeneity || The errors in the regression should have [[ | | Strict exogeneity || The errors in the regression should have [[conditional expectation|conditional mean]] zero:<ref>{{harvtxt|Hayashi|2000|loc=page 7}}</ref><math> | ||

\operatorname{E}[\,\varepsilon\mid X\,] = 0. | \operatorname{E}[\,\varepsilon\mid X\,] = 0. | ||

</math>The immediate consequence of the exogeneity assumption is that the errors have mean zero: <math>\operatorname{E}[\epsilon] = 0 </math>, and that the regressors are uncorrelated with the errors: <math>\operatorname{E}[X^{\mathsf{T}}\epsilon] = 0</math>. | </math>The immediate consequence of the exogeneity assumption is that the errors have mean zero: <math>\operatorname{E}[\epsilon] = 0 </math>, and that the regressors are uncorrelated with the errors: <math>\operatorname{E}[X^{\mathsf{T}}\epsilon] = 0</math>. | ||

|- | |- | ||

| Linear Independence || The regressors in <math>X</math> must all be [[ | | Linear Independence || The regressors in <math>X</math> must all be [[linearly independent|linearly independent]]. Mathematically, this means that the matrix <math>X</math> must have full [[column rank|column rank]] almost surely:<ref name="Hayashi 2000 loc=page 10">{{harvtxt|Hayashi|2000|loc=page 10}}</ref><math display = "block"> | ||

\Pr\!\big[\,\operatorname{rank}(X) = p\,\big] = 1. | \Pr\!\big[\,\operatorname{rank}(X) = p\,\big] = 1. | ||

</math>Usually, it is also assumed that the regressors have finite moments up to at least the second moment. Then the matrix <math>Q_{xx}=\operatorname{E}[X^{\mathsf{T}}X]/n</math> is finite and positive semi-definite. When this assumption is violated the regressors are called linearly dependent or [[ | </math>Usually, it is also assumed that the regressors have finite moments up to at least the second moment. Then the matrix <math>Q_{xx}=\operatorname{E}[X^{\mathsf{T}}X]/n</math> is finite and positive semi-definite. When this assumption is violated the regressors are called linearly dependent or [[multicollinearity|perfectly multicollinear]]. In such a case the value of the regression coefficient <math>\beta</math> cannot be learned, although prediction of <math>y</math> values is still possible for new values of the regressors that lie in the same linearly dependent subspace. | ||

|- | |- | ||

| Spherical Errors<ref name="Hayashi 2000 loc=page 10"/> || <math display = "block"> | | Spherical Errors<ref name="Hayashi 2000 loc=page 10"/> || <math display = "block"> | ||

\operatorname{Var}[\,\varepsilon \mid X\,] = \sigma^2 I_n, | \operatorname{Var}[\,\varepsilon \mid X\,] = \sigma^2 I_n, | ||

</math> | </math> | ||

:where <math>I_n</math> is the [[ | :where <math>I_n</math> is the [[identity matrix|identity matrix]] in dimension <math>n</math>, and <math>\sigma^2</math> is a parameter which determines the variance of each observation. This <math>\sigma^2</math> is considered a [[nuisance parameter|nuisance parameter]] in the model, although usually it is also estimated. If this assumption is violated then the OLS estimates are still valid, but no longer efficient. | ||

:It is customary to split this assumption into two parts: | :It is customary to split this assumption into two parts: | ||

:* '''[[ | :* '''[[Homoscedasticity|Homoscedasticity]]''': <math>\operatorname{E}[\epsilon_i^2|X] = \sigma^2</math>, which means that the error term has the same variance <math>\sigma^2</math> in each observation. When this requirement is violated, this is called [[heteroscedasticity|heteroscedasticity]]; in such a case a more efficient estimator would be [[weighted least squares|weighted least squares]]. If the errors have infinite variance then the OLS estimates will also have infinite variance (although by the [[law of large numbers|law of large numbers]] they will nonetheless tend toward the true values so long as the errors have zero mean). In this case, [[robust regression|robust estimation]] techniques are recommended. | ||

:* '''No [[ | :* '''No [[autocorrelation|autocorrelation]]''': the errors are [[correlation|uncorrelated]] between observations: <math>\operatorname{E}[\epsilon_i\epsilon_j|X]=0</math> for <math>i\neq j</math>. This assumption may be violated in the context of [[guide:662dfe6591|time series]] data, [[panel data|panel data]], cluster samples, hierarchical data, repeated measures data, longitudinal data, and other data with dependencies. In such cases [[generalized least squares|generalized least squares]] provides a better alternative than the OLS. Another expression for autocorrelation is ''serial correlation''. | ||

|- | |- | ||

| Normality || It is sometimes additionally assumed that the errors have [[ | | Normality || It is sometimes additionally assumed that the errors have [[multivariate normal distribution|normal distribution]] conditional on the regressors:<ref>{{harvtxt|Hayashi|2000|loc=page 34}}</ref> | ||

<math display = "block"> | <math display = "block"> | ||

\varepsilon \mid X\sim \mathcal{N}(0, \sigma^2I_n). | \varepsilon \mid X\sim \mathcal{N}(0, \sigma^2I_n). | ||

</math>This assumption is not needed for the validity of the OLS method, although certain additional finite-sample properties can be established when it holds (especially in the area of hypothesis testing). Also, when the errors are normal, the OLS estimator is equivalent to the [[ | </math>This assumption is not needed for the validity of the OLS method, although certain additional finite-sample properties can be established when it holds (especially in the area of hypothesis testing). Also, when the errors are normal, the OLS estimator is equivalent to the [[maximum likelihood estimator|maximum likelihood estimator]] (MLE), and therefore it is asymptotically efficient in the class of all [[regular estimator|regular estimator]]s. Importantly, the normality assumption applies only to the error terms; contrary to a popular misconception, the response (dependent) variable is not required to be normally distributed.<ref>{{cite journal|last1=Williams|first1=M. N|last2=Grajales|first2=C. A. G|last3=Kurkiewicz|first3=D|title=Assumptions of multiple regression: Correcting two misconceptions|journal=Practical Assessment, Research & Evaluation|date=2013|volume=18|issue=11|url=https://scholarworks.umass.edu/cgi/viewcontent.cgi?article=1308&context=pare}}</ref> | ||

|} | |} | ||

| Line 294: | Line 275: | ||

===== Independent and identically distributed (iid) ===== | ===== Independent and identically distributed (iid) ===== | ||

In some applications | In some applications an additional assumption is imposed — that all observations are independent and identically distributed. This means that all observations are taken from a [[random sample|random sample]] which makes all the assumptions listed earlier simpler and easier to interpret. The list of assumptions in this case is: | ||

{| class="table" | {| class="table" | ||

| Line 300: | Line 281: | ||

! Assumption !! Description | ! Assumption !! Description | ||

|- | |- | ||

| iid observations || <math>(x_i,y_i)</math> is [[ | | iid observations || <math>(x_i,y_i)</math> is [[independent random variables|independent]] from, and has the same [[Probability distribution|distribution]] as, <math>(x_j,y_j)</math> for all <math>i\neq j</math>. | ||

|- | |- | ||

| no perfect multicollinearity || <math>Q_{xx}=\operatorname{E}[x_ix_i^{\mathsf{T}}]</math> is a [[ | | no perfect multicollinearity || <math>Q_{xx}=\operatorname{E}[x_ix_i^{\mathsf{T}}]</math> is a [[positive-definite matrix|positive-definite matrix]] | ||

|- | |- | ||

| Exogeneity || <math>\operatorname{E}[\varepsilon_ix_i]=0</math> | | Exogeneity || <math>\operatorname{E}[\varepsilon_ix_i]=0</math> | ||

| Line 310: | Line 291: | ||

==== Finite sample properties ==== | ==== Finite sample properties ==== | ||

First of all, under the ''strict exogeneity'' assumption the OLS estimators <math>\scriptstyle\hat\beta</math> and <math>s^2</math> are [[ | First of all, under the ''strict exogeneity'' assumption the OLS estimators <math>\scriptstyle\hat\beta</math> and <math>s^2</math> are [[Bias of an estimator|unbiased]], meaning that their expected values coincide with the true values of the parameters:<ref>{{harvtxt|Hayashi|2000|loc=pages 27, 30}}</ref> | ||

<math display = "block"> | <math display = "block"> | ||

\operatorname{E}[\, \hat\beta \mid X \,] = \beta, \quad \operatorname{E}[\,s^2 \mid X\,] = \sigma^2. | \operatorname{E}[\, \hat\beta \mid X \,] = \beta, \quad \operatorname{E}[\,s^2 \mid X\,] = \sigma^2. | ||

</math> | </math> | ||

If the strict exogeneity does not hold (as is the case with many [[ | If the strict exogeneity does not hold (as is the case with many [[time series|time series]] models, where exogeneity is assumed only with respect to the past shocks but not the future ones), then these estimators will be biased in finite samples. | ||

The ''[[ | The ''[[variance-covariance matrix|variance-covariance matrix]]'' (or simply ''covariance matrix'') of <math>\scriptstyle\hat\beta</math> is equal to<ref name="HayashiFSP">{{harvtxt|Hayashi|2000|loc=page 27}}</ref> | ||

<math display = "block"> | <math display = "block"> | ||

\operatorname{Var}[\, \hat\beta \mid X \,] = \sigma^2(X ^T X)^{-1} = \sigma^2 Q. | \operatorname{Var}[\, \hat\beta \mid X \,] = \sigma^2(X ^T X)^{-1} = \sigma^2 Q. | ||

| Line 330: | Line 311: | ||

</math> | </math> | ||
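The unbiasedness of <math>\hat\beta</math> and the covariance formula <math>\sigma^2(X^{\mathsf T}X)^{-1}</math> given above can be illustrated with a small Monte Carlo experiment. The sketch below assumes NumPy, a design matrix held fixed across replications, and independent normal errors with <math>\sigma = 1</math>; the sample size, number of replications and coefficient values are arbitrary choices, and agreement with the theory holds only up to simulation error.

```python
import numpy as np

rng = np.random.default_rng(6)
n, reps, sigma = 30, 20000, 1.0

# Fixed design: intercept plus one regressor, conditioned on throughout.
X = np.column_stack([np.ones(n), rng.normal(size=n)])
beta = np.array([1.0, 2.0])
Q = np.linalg.inv(X.T @ X)                 # cofactor matrix

# Each row of Y is one simulated sample y = X beta + eps with spherical errors.
E = sigma * rng.normal(size=(reps, n))
Y = X @ beta + E

# Row k of B is the OLS estimate Q X^T y for sample k (Q is symmetric).
B = Y @ X @ Q

emp_mean = B.mean(axis=0)                  # should be close to beta
emp_cov = np.cov(B, rowvar=False)          # should be close to sigma^2 Q
theo_cov = sigma ** 2 * Q
```

Increasing <code>reps</code> tightens the agreement between the empirical and theoretical covariance matrices, while changing the error distribution to one with correlated or unequal-variance errors would break it.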

The ''[[ | The ''[[Gauss–Markov theorem|Gauss–Markov theorem]]'' states that under the ''spherical errors'' assumption (that is, the errors should be [[uncorrelated|uncorrelated]] and [[homoscedastic|homoscedastic]]) the estimator <math>\scriptstyle\hat\beta</math> is efficient in the class of linear unbiased estimators. This is called the ''best linear unbiased estimator'' (BLUE). Efficiency should be understood as follows: if we were to find some other estimator <math>\scriptstyle\tilde\beta</math> which is linear in <math>y</math> and unbiased, then<ref name="HayashiFSP"/> | ||

<math display = "block"> | <math display = "block"> | ||

\operatorname{Var}[\, \tilde\beta \mid X \,] - \operatorname{Var}[\, \hat\beta \mid X \,] \geq 0 | \operatorname{Var}[\, \tilde\beta \mid X \,] - \operatorname{Var}[\, \hat\beta \mid X \,] \geq 0 | ||

</math> | </math> | ||

in the sense that this is a [[ | in the sense that this is a [[nonnegative-definite matrix|nonnegative-definite matrix]]. This theorem establishes optimality only in the class of linear unbiased estimators, which is quite restrictive. Depending on the distribution of the error terms ''ε'', other, non-linear estimators may provide better results than OLS. | ||

===== Assuming normality ===== | ===== Assuming normality ===== | ||

| Line 343: | Line 324: | ||

\hat\beta\ \sim\ \mathcal{N}\big(\beta,\ \sigma^2(X ^\mathrm{T} X)^{-1}\big) | \hat\beta\ \sim\ \mathcal{N}\big(\beta,\ \sigma^2(X ^\mathrm{T} X)^{-1}\big) | ||

</math> | </math> | ||

where <math>Q</math> is the | where <math>Q</math> is the cofactor matrix. This estimator reaches the [[Cramér–Rao bound|Cramér–Rao bound]] for the model, and thus is optimal in the class of all unbiased estimators.<ref name="Hayashi 2000 loc=page 52"/> Note that unlike the [[Gauss–Markov theorem|Gauss–Markov theorem]], this result establishes optimality among both linear and non-linear estimators, but only in the case of normally distributed error terms. | ||

The estimator <math>s^2</math> will be proportional to the [[ | The estimator <math>s^2</math> will be proportional to the [[chi-squared distribution|chi-squared distribution]]:<ref>{{harvtxt|Amemiya|1985|loc=page 14}}</ref> | ||

<math display = "block"> | <math display = "block"> | ||

s^2\ \sim\ \frac{\sigma^2}{n-p} \cdot \chi^2_{n-p} | s^2\ \sim\ \frac{\sigma^2}{n-p} \cdot \chi^2_{n-p} | ||

</math> | </math> | ||

The variance of this estimator is equal to <math>2\sigma^4/(n-p)</math>, which does not attain the [[ | The variance of this estimator is equal to <math>2\sigma^4/(n-p)</math>, which does not attain the [[Cramér–Rao bound|Cramér–Rao bound]] of <math>2\sigma^4/n</math>. However it was shown that there are no unbiased estimators of <math>\sigma^2</math> with variance smaller than that of the estimator <math>s^2</math>.<ref>{{cite book |first=C. R. |last=Rao |author-link=C. R. Rao |title=Linear Statistical Inference and its Applications |location=New York |publisher=J. Wiley & Sons |year=1973 |edition=Second |page=319 |isbn=0-471-70823-2 }}</ref> If we are willing to allow biased estimators, and consider the class of estimators that are proportional to the sum of squared residuals (SSR) of the model, then the best (in the sense of the [[mean squared error|mean squared error]]) estimator in this class will be <math>\sim \sigma^2/(n-p+2)</math>, which even beats the Cramér–Rao bound in case when there is only one regressor (<math>p=1</math>).<ref>{{harvtxt|Amemiya|1985|loc=page 20}}</ref> | ||

Moreover, the estimators <math>\hat{\beta}</math> and <math>s^2</math> are [[independent random variables|independent]],<ref>{{harvtxt|Amemiya|1985|loc=page 27}}</ref> a fact that is useful when constructing the t- and F-tests for the regression. | |||

==== Large sample properties ==== | ==== Large sample properties ==== | ||

The least squares estimators are [[point estimate|point estimate]]s of the linear regression model parameters <math>\beta</math>. However, we generally also want to know how close those estimates might be to the true values of the parameters. In other words, we want to construct [[interval estimate|interval estimate]]s.

Since we have not made any assumption about the distribution of the error term <math>\epsilon_i</math>, it is impossible to infer the distribution of the estimators <math>\hat\beta</math> and <math>\hat\sigma^2</math>. Nevertheless, we can apply the [[central limit theorem|central limit theorem]] to derive their ''asymptotic'' properties as the sample size <math>n</math> goes to infinity. While the sample size is necessarily finite, it is customary to assume that <math>n</math> is "large enough" so that the true distribution of the OLS estimator is close to its asymptotic limit.

We can show that under the model assumptions, the least squares estimator for <math>\beta</math> is [[consistent estimator|consistent]] (that is, <math>\hat\beta</math> [[Convergence of random variables#Convergence in probability|converges in probability]] to <math>\beta</math>) and asymptotically normal:

<math display = "block">(\hat\beta - \beta)\ \xrightarrow{d}\ \mathcal{N}\big(0,\;\sigma^2Q_{xx}^{-1}\big),</math>

where <math>Q_{xx} = X ^T X.</math>

| Line 401: | Line 352: | ||

\bigg] | \bigg] | ||

</math> at the <math>1-\alpha</math> confidence level, | </math> at the <math>1-\alpha</math> confidence level, | ||

where <math>Q</math> denotes the [[quantile function|quantile function]] of the standard normal distribution, and [·]<sub>''jj''</sub> is the <math>j</math>-th diagonal element of a matrix.
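As a rough illustration, such intervals can be computed as follows. This is a sketch under stated assumptions, not a definitive implementation: it assumes the interval takes the usual normal-approximation form (estimate plus or minus a standard normal quantile times the square root of the estimated variance times the <math>j</math>-th diagonal element of <math>Q_{xx}^{-1}</math>), it uses <math>s^2</math> as the variance estimate, and the function name and data are hypothetical.

```python
from statistics import NormalDist

import numpy as np


def asymptotic_confidence_intervals(X, y, alpha=0.05):
    """Normal-approximation confidence intervals for each OLS coefficient."""
    n, p = X.shape
    q_xx = X.T @ X                                   # Q_xx = X^T X, as in the text
    beta_hat = np.linalg.solve(q_xx, X.T @ y)        # OLS estimate
    residuals = y - X @ beta_hat
    sigma2_hat = residuals @ residuals / (n - p)     # assumption: s^2 estimates sigma^2
    z = NormalDist().inv_cdf(1 - alpha / 2)          # standard normal quantile
    half_width = z * np.sqrt(sigma2_hat * np.diag(np.linalg.inv(q_xx)))
    return beta_hat, beta_hat - half_width, beta_hat + half_width


# Hypothetical data: intercept 1, slope 2, small deterministic perturbations
x = np.arange(10.0)
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x + 0.1 * np.array([1, -1, 1, -1, 1, -1, 1, -1, 1, -1])
beta_hat, lower, upper = asymptotic_confidence_intervals(X, y)
```

The arrays `lower` and `upper` hold one interval endpoint per coefficient, in the same order as the columns of `X`.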

Similarly, the least squares estimator for <math>\sigma^2</math> is also consistent and asymptotically normal (provided that the fourth moment of <math>\epsilon_i</math> exists) with limiting distribution

<math display = "block">(\hat{\sigma}^2 - \sigma^2)\ \xrightarrow{d}\ \mathcal{N} \left(0,\;\operatorname{E}\left[\varepsilon_i^4\right] - \sigma^4\right). </math>

These asymptotic distributions can be used for prediction, testing hypotheses, constructing other estimators, etc. As an example, consider the problem of prediction. Suppose <math>x_0</math> is some point within the domain of distribution of the regressors, and one wants to know what the response variable would have been at that point. The [[mean response|mean response]] is the quantity <math>y_0 = x_0^\mathrm{T} \beta</math>, whereas the [[predicted response|predicted response]] is <math>\hat{y}_0 = x_0^\mathrm{T} \hat\beta</math>. Clearly the predicted response is a random variable; its distribution can be derived from that of <math>\hat{\beta}</math>:

<math display = "block">\left(\hat{y}_0 - y_0\right)\ \xrightarrow{d}\ \mathcal{N}\left(0,\;\sigma^2 x_0^\mathrm{T} Q_{xx}^{-1} x_0\right),</math>
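The variance in this limiting distribution suggests a simple standard error for the predicted response. The sketch below is illustrative only: it plugs <math>s^2</math> in for <math>\sigma^2</math> (an assumption, since the text does not say which estimate to use), and the function name and data are hypothetical.

```python
import numpy as np


def mean_response_std_error(X, y, x0):
    """Predicted response x0^T beta_hat and its approximate standard error."""
    n, p = X.shape
    q_xx = X.T @ X                                   # Q_xx = X^T X, as in the text
    beta_hat = np.linalg.solve(q_xx, X.T @ y)
    residuals = y - X @ beta_hat
    sigma2_hat = residuals @ residuals / (n - p)     # assumption: s^2 estimates sigma^2
    y0_hat = x0 @ beta_hat
    # sqrt(sigma^2 * x0^T Q_xx^{-1} x0), computed without forming the inverse
    std_error = np.sqrt(sigma2_hat * (x0 @ np.linalg.solve(q_xx, x0)))
    return y0_hat, std_error


# Hypothetical data: intercept 1, slope 2, small deterministic perturbations
x = np.arange(10.0)
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x + 0.1 * np.array([1, -1, 1, -1, 1, -1, 1, -1, 1, -1])

y_center, se_center = mean_response_std_error(X, y, np.array([1.0, 4.5]))
y_far, se_far = mean_response_std_error(X, y, np.array([1.0, 20.0]))
```

The standard error is smallest near the centre of the observed regressors and grows as <math>x_0</math> moves away from them.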

Latest revision as of 02:22, 12 April 2024

The method of least squares is a standard approach in regression analysis to approximate the solution of overdetermined systems (sets of equations in which there are more equations than unknowns) by minimizing the sum of the squares of the residuals (a residual being the difference between an observed value and the fitted value provided by a model) made in the results of each individual equation.

The most important application is in data fitting. When the problem has substantial uncertainties in the independent variable (the [math]X[/math] variable), then simple regression and least-squares methods have problems; in such cases, the methodology required for fitting errors-in-variables models may be considered instead of that for least squares.

Least-squares problems fall into two categories: linear or ordinary least squares and nonlinear least squares, depending on whether or not the residuals are linear in all unknowns. The linear least-squares problem occurs in statistical regression analysis; it has a closed-form solution. The nonlinear problem is usually solved by iterative refinement; at each iteration the system is approximated by a linear one, and thus the core calculation is similar in both cases.

The following discussion is mostly presented in terms of linear functions but the use of least squares is valid and practical for more general families of functions. Also, by iteratively applying local quadratic approximation to the likelihood (through the Fisher information), the least-squares method may be used to fit a generalized linear model.

The least-squares method was officially discovered and published by Adrien-Marie Legendre (1805),[1] though it is usually also co-credited to Carl Friedrich Gauss (1795)[2][3] who contributed significant theoretical advances to the method and may have previously used it in his work.[4][5]

Problem statement

The objective consists of adjusting the parameters of a model function to best fit a data set. A simple data set consists of [math]n[/math] points (data pairs) [math](x_i,y_i)\![/math], [math]i=1,\ldots,n[/math], where [math]x_i[/math] is an independent variable and [math]y_i[/math] is a dependent variable whose value is found by observation. The model function has the form [math]f(x, \boldsymbol \beta)[/math], where [math]m[/math] adjustable parameters are held in the vector [math]\boldsymbol \beta[/math]. The goal is to find the parameter values for the model that "best" fits the data. The fit of a model to a data point is measured by its residual, defined as the difference between the observed value of the dependent variable and the value predicted by the model:

[[math]]

r_i = y_i - f(x_i, \boldsymbol \beta).

[[/math]]

The least-squares method finds the optimal parameter values by minimizing the sum of squared residuals, [math]S[/math]:[6]

[[math]]

S = \sum_{i=1}^{n} r_i^2.

[[/math]]

An example of a model in two dimensions is that of the straight line. Denoting the y-intercept as [math]\beta_0[/math] and the slope as [math]\beta_1[/math], the model function is given by [math]f(x,\boldsymbol \beta)=\beta_0+\beta_1 x[/math]. See linear least squares for a fully worked out example of this model.
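For the straight-line model, the quantity being minimized can be written out directly. The following is a minimal sketch, taking the residual to be the observed value minus the model value and [math]S[/math] to be the sum of the squared residuals; the data points and function names are hypothetical.

```python
import numpy as np


def line_model(x, beta):
    """Straight-line model f(x, beta) = beta_0 + beta_1 * x."""
    return beta[0] + beta[1] * x


def sum_of_squared_residuals(x, y, beta):
    """S = sum_i r_i^2 with residuals r_i = y_i - f(x_i, beta)."""
    residuals = y - line_model(x, beta)
    return float(np.sum(residuals**2))


# Hypothetical data points (x_i, y_i)
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([6.0, 5.0, 7.0, 10.0])

s_guess = sum_of_squared_residuals(x, y, (0.0, 2.0))   # an arbitrary trial line
s_best = sum_of_squared_residuals(x, y, (3.5, 1.4))    # the least-squares line for these points
```

For these four points the trial line gives [math]S = 22[/math], while the least-squares line gives [math]S = 4.2[/math].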

A data point may consist of more than one independent variable. For example, when fitting a plane to a set of height measurements, the plane is a function of two independent variables, [math]x[/math] and [math]z[/math], say. In the most general case there may be one or more independent variables and one or more dependent variables at each data point.

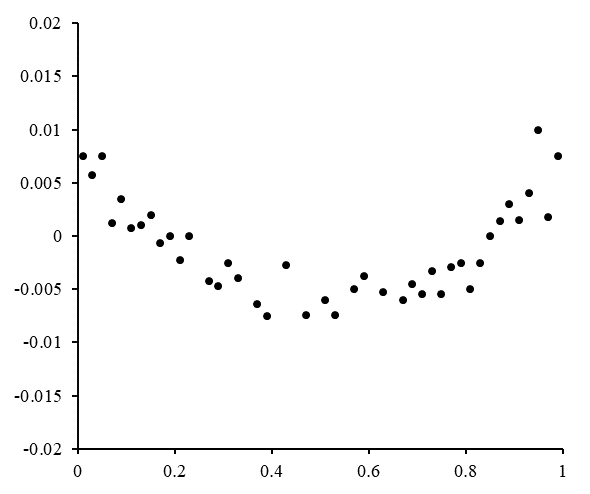

To the right is a residual plot illustrating random fluctuations about [math]r_i=0[/math], indicating that a linear model [math](Y_i = \alpha + \beta x_i + U_i)[/math] is appropriate. [math]U_i[/math] is an independent, random variable.[6]

If the residual points had some sort of a shape and were not randomly fluctuating, a linear model would not be appropriate. For example, if the residual plot had a parabolic shape as seen to the right, a parabolic model [math](Y_i = \alpha + \beta x_i + \gamma x_i^2 + U_i)[/math] would be appropriate for the data. The residuals for a parabolic model can be calculated via [math]r_i=y_i-\hat{\alpha}-\hat{\beta} x_i-\widehat{\gamma} x_i^2[/math].[6]
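The diagnostic described above can be reproduced numerically. The sketch below uses hypothetical noise-free data generated from a parabola, so the pattern in the residuals is easy to see; `numpy.polyfit` is used here simply as a convenient least-squares polynomial fit.

```python
import numpy as np

# Hypothetical data generated from a parabola, so a straight line is misspecified
x = np.linspace(-3.0, 3.0, 13)
y = 1.0 + 0.5 * x + 2.0 * x**2

linear_coeffs = np.polyfit(x, y, deg=1)       # least-squares straight line
parabolic_coeffs = np.polyfit(x, y, deg=2)    # least-squares parabola

linear_residuals = y - np.polyval(linear_coeffs, x)
parabolic_residuals = y - np.polyval(parabolic_coeffs, x)
```

The residuals of the straight-line fit are positive at both ends of the range and negative in the middle, which is the parabolic shape that signals a missing quadratic term, while the residuals of the parabolic fit are zero up to rounding.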

Limitations

This regression formulation considers only observational errors in the dependent variable (but the alternative total least squares regression can account for errors in both variables). There are two rather different contexts with different implications:

- Regression for prediction. Here a model is fitted to provide a prediction rule for application in a similar situation to which the data used for fitting apply. Here the dependent variables corresponding to such future application would be subject to the same types of observation error as those in the data used for fitting. It is therefore logically consistent to use the least-squares prediction rule for such data.

- Regression for fitting a "true relationship". In standard regression analysis that leads to fitting by least squares there is an implicit assumption that errors in the independent variable are zero or strictly controlled so as to be negligible. When errors in the independent variable are non-negligible, models of measurement error can be used; such methods can lead to parameter estimates, hypothesis testing and confidence intervals that take into account the presence of observation errors in the independent variables.[7] An alternative approach is to fit a model by total least squares; this can be viewed as taking a pragmatic approach to balancing the effects of the different sources of error in formulating an objective function for use in model-fitting.

Linear Least Squares

Linear least squares (LLS) is the least squares approximation of linear functions to data. It is a set of formulations for solving statistical problems involved in linear regression, including variants for ordinary (unweighted), weighted, and generalized (correlated) residuals.

Main formulations

The three main linear least squares formulations are:

| Formulation | Description |

|---|---|

| Ordinary least squares (OLS) | Is the most common estimator. OLS estimates are commonly used to analyze both experimental and observational data. The OLS method minimizes the sum of squared residuals, and leads to a closed-form expression for the estimated value of the unknown parameter vector [math]\beta[/math]:

[[math]]

\hat{\boldsymbol\beta} = (\mathbf{X}^\mathsf{T}\mathbf{X})^{-1} \mathbf{X}^\mathsf{T} \mathbf{y},

[[/math]] where [math]\mathbf{y}[/math] is a vector whose [math]i[/math]th element is the [math]i[/math]th observation of the dependent variable, and [math]\mathbf{X}[/math] is a matrix whose [math]ij[/math] element is the [math]i[/math]th observation of the [math]j[/math]th independent variable. The estimator is unbiased and consistent if the errors have finite variance and are uncorrelated with the regressors:[8] [[math]]

\operatorname{E}[\,\mathbf{x}_i\varepsilon_i\,] = 0,

[[/math]] where [math]\mathbf{x}_i[/math] is the transpose of row [math]i[/math] of the matrix [math]\mathbf{X}.[/math] It is also efficient under the assumption that the errors have finite variance and are homoscedastic, meaning that [math]\operatorname{E}[\varepsilon_i^2|x_i][/math] does not depend on [math]i[/math]. The condition that the errors are uncorrelated with the regressors will generally be satisfied in an experiment, but in the case of observational data, it is difficult to exclude the possibility of an omitted covariate [math]z[/math] that is related to both the observed covariates and the response variable. The existence of such a covariate will generally lead to a correlation between the regressors and the error term, and hence to an inconsistent estimator of [math]\beta[/math]. The condition of homoscedasticity can fail with either experimental or observational data. If the goal is either inference or predictive modeling, the performance of OLS estimates can be poor if multicollinearity is present, unless the sample size is large.

|

| Weighted least squares (WLS) | Used when heteroscedasticity is present in the error terms of the model. |

| Generalized least squares (GLS) | Is an extension of the OLS method, that allows efficient estimation of [math]\beta[/math] when either heteroscedasticity, or correlations, or both are present among the error terms of the model, as long as the form of heteroscedasticity and correlation is known independently of the data. To handle heteroscedasticity when the error terms are uncorrelated with each other, GLS minimizes a weighted analogue to the sum of squared residuals from OLS regression, where the weight for the [math]i[/math]th case is inversely proportional to [math]\operatorname{Var}(\varepsilon_i)[/math]. This special case of GLS is called "weighted least squares". The GLS solution to an estimation problem is [[math]]

\hat{\boldsymbol\beta} = (\mathbf{X}^\mathsf{T} \boldsymbol\Omega^{-1} \mathbf{X})^{-1}\mathbf{X}^\mathsf{T}\boldsymbol\Omega^{-1}\mathbf{y},

[[/math]] where [math]\Omega[/math] is the covariance matrix of the errors. GLS can be viewed as applying a linear transformation to the data so that the assumptions of OLS are met for the transformed data. For GLS to be applied, the covariance structure of the errors must be known up to a multiplicative constant.

|
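The closed-form expressions in the table translate directly into a few lines of linear algebra. The following is a minimal sketch, assuming a full-rank design matrix and a known, invertible error covariance matrix; the function names `ols` and `gls` and the data are hypothetical.

```python
import numpy as np


def ols(X, y):
    """beta_hat = (X^T X)^{-1} X^T y, solved without forming the inverse."""
    return np.linalg.solve(X.T @ X, X.T @ y)


def gls(X, y, omega):
    """beta_hat = (X^T Omega^{-1} X)^{-1} X^T Omega^{-1} y."""
    omega_inv_X = np.linalg.solve(omega, X)
    omega_inv_y = np.linalg.solve(omega, y)
    return np.linalg.solve(X.T @ omega_inv_X, X.T @ omega_inv_y)


# Hypothetical design: an intercept column plus one regressor
x = np.array([1.0, 2.0, 3.0, 4.0])
X = np.column_stack([np.ones_like(x), x])
y = np.array([6.0, 5.0, 7.0, 10.0])

beta_ols = ols(X, y)
# Weighted least squares is GLS with a diagonal Omega (uncorrelated errors)
beta_wls = gls(X, y, np.diag([1.0, 1.0, 4.0, 4.0]))
```

Calling `gls` with an identity covariance matrix, or any positive multiple of it, reproduces the OLS estimate, which reflects the remark that the covariance structure only needs to be known up to a multiplicative constant. Solving the linear systems rather than inverting matrices explicitly is a common numerical choice.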

Properties

If the experimental errors, [math]\varepsilon[/math], are uncorrelated, have a mean of zero and a constant variance, [math]\sigma^2[/math], the Gauss–Markov theorem states that the least-squares estimator, [math]\hat{\boldsymbol{\beta}}[/math], has the minimum variance of all unbiased estimators that are linear combinations of the observations. In this sense it is the best, or optimal, estimator of the parameters. Note particularly that this property is independent of the statistical distribution function of the errors. In other words, the distribution function of the errors need not be a normal distribution. However, for some probability distributions, there is no guarantee that the least-squares solution is even possible given the observations; still, in such cases it is the best estimator that is both linear and unbiased.

For example, it is easy to show that the arithmetic mean of a set of measurements of a quantity is the least-squares estimator of the value of that quantity. If the conditions of the Gauss–Markov theorem apply, the arithmetic mean is optimal, whatever the distribution of errors of the measurements might be.
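The claim about the arithmetic mean is easy to check numerically. In the sketch below, which uses hypothetical measurements, the model is a single unknown constant, so the design matrix is one column of ones.

```python
import numpy as np

# Hypothetical repeated measurements of a single quantity
measurements = np.array([9.8, 10.1, 10.0, 10.3, 9.9])

# Model y_i = mu + error: the design matrix is a single column of ones
X = np.ones((measurements.size, 1))
mu_hat, *_ = np.linalg.lstsq(X, measurements, rcond=None)
```

Here `mu_hat[0]` agrees with `measurements.mean()` up to rounding.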

However, in the case that the experimental errors do belong to a normal distribution, the least-squares estimator is also a maximum likelihood estimator.[9]
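The link to maximum likelihood can be seen in one step. As a sketch, assume the errors are independent and normally distributed with zero mean and common variance [math]\sigma^2[/math] (assumptions made here for illustration), and write [math]S(\boldsymbol\beta)[/math] for the sum of squared residuals of a model [math]f(x, \boldsymbol\beta)[/math]. The log-likelihood of the [math]n[/math] observations is then

[[math]]

\ln L(\boldsymbol\beta, \sigma^2) = -\frac{n}{2}\ln\left(2\pi\sigma^2\right) - \frac{1}{2\sigma^2}\sum_{i=1}^{n}\left(y_i - f(x_i, \boldsymbol\beta)\right)^2 = -\frac{n}{2}\ln\left(2\pi\sigma^2\right) - \frac{S(\boldsymbol\beta)}{2\sigma^2}.

[[/math]]

For any fixed [math]\sigma^2[/math], the first term does not depend on [math]\boldsymbol\beta[/math], so maximizing the likelihood over [math]\boldsymbol\beta[/math] is the same as minimizing [math]S(\boldsymbol\beta)[/math].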

These properties underpin the use of the method of least squares for all types of data fitting, even when the assumptions are not strictly valid.