guide:B85f6bf6f2: Difference between revisions


| (9 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

<div class="d-none"> | <div class="d-none"> | ||

<math> | <math> | ||

% Generic syms | % Generic syms | ||

\newcommand\defeq{:=} | \newcommand\defeq{:=} | ||

| Line 202: | Line 201: | ||

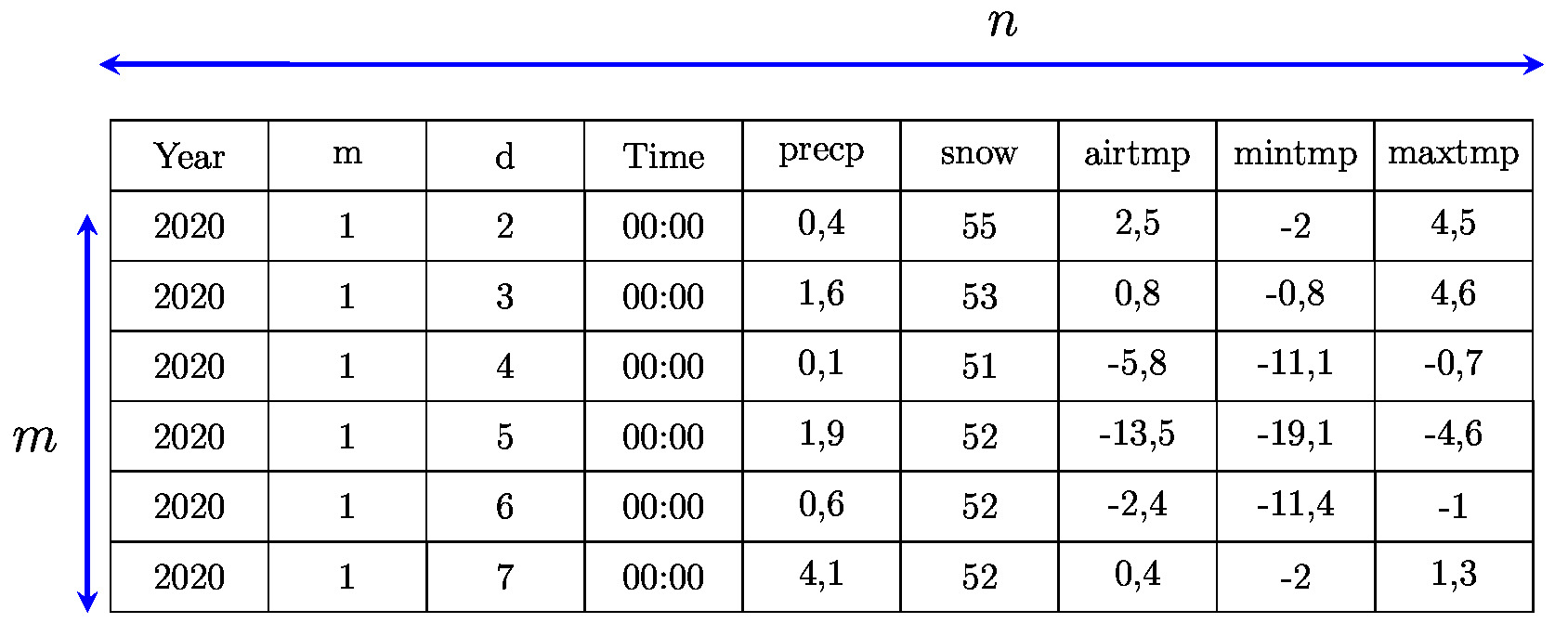

* <span class="mw-gls" data-name ="data">data</span> as collections of individual <span class="mw-gls mw-gls-first" data-name ="datapoint">data point</span>s that are characterized by <span class="mw-gls mw-gls-first" data-name ="features">features</span> (see Section [[#sec_feature_space |Features]]) and <span class="mw-gls mw-gls-first" data-name ="label">label</span>s (see Section [[#sec_labels | Labels]]) | * <span class="mw-gls" data-name ="data">data</span> as collections of individual <span class="mw-gls mw-gls-first" data-name ="datapoint">data point</span>s that are characterized by <span class="mw-gls mw-gls-first" data-name ="features">features</span> (see Section [[#sec_feature_space |Features]]) and <span class="mw-gls mw-gls-first" data-name ="label">label</span>s (see Section [[#sec_labels | Labels]]) | ||

* a <span class="mw-gls" data-name ="model">model</span> or <span class="mw-gls mw-gls-first" data-name ="hypospace">hypothesis space</span> that consists of computationally feasible hypothesis maps from feature space to <span class="mw-gls mw-gls-first" data-name ="labelspace">label space</span> (see Section [[ | * a <span class="mw-gls" data-name ="model">model</span> or <span class="mw-gls mw-gls-first" data-name ="hypospace">hypothesis space</span> that consists of computationally feasible hypothesis maps from feature space to <span class="mw-gls mw-gls-first" data-name ="labelspace">label space</span> (see Section [[#sec_hypo_space | The Model ]]) | ||

* a <span class="mw-gls" data-name ="lossfunc">loss function</span> (see Section [[ | * a <span class="mw-gls" data-name ="lossfunc">loss function</span> (see Section [[#sec_lossfct | The Loss ]]) to measure the quality of a hypothesis map. | ||

An ML problem involves specific design choices for <span class="mw-gls" data-name ="datapoint">data point</span>s, their features and labels, the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> and the <span class="mw-gls" data-name ="lossfunc">loss function</span> to measure the quality of a particular hypothesis.

Similar to ML problems (or applications), we can also characterize ML methods as combinations of these three components. This chapter discusses in some depth each of the above ML components and their combination within some widely-used ML methods <ref name="JMLR:v12:pedregosa11a"/>. | Similar to ML problems (or applications), we can also characterize ML methods as combinations of these three components. This chapter discusses in some depth each of the above ML components and their combination within some widely-used ML methods <ref name="JMLR:v12:pedregosa11a">F. Pedregosa. Scikit-learn: Machine learning in python. ''Journal of Machine Learning Research'' 12(85):2825--2830, 2011</ref>. | ||

We detail in Chapter [[guide:013ef4b5cd | The Landscape of ML ]] how some of the most popular ML methods, | We detail in Chapter [[guide:013ef4b5cd | The Landscape of ML ]] how some of the most popular ML methods, | ||

including <span class="mw-gls mw-gls-first" data-name ="linreg">linear regression</span> (see Section [[guide:013ef4b5cd#sec_lin_reg | Linear Regression ]]) as well as deep learning methods (see Section [[guide:013ef4b5cd#sec_deep_learning | Deep Learning ]]), are obtained by specific design choices for the three components. Chapter [[guide:2c0f621d22 | Empirical Risk Minimization ]] will then introduce <span class="mw-gls" data-name ="erm">ERM</span> as a main principle for how to operationally combine the individual ML components. Within the <span class="mw-gls" data-name ="erm">ERM</span> principle, ML problems become optimization problems and ML methods become optimization methods. | including <span class="mw-gls mw-gls-first" data-name ="linreg">linear regression</span> (see Section [[guide:013ef4b5cd#sec_lin_reg | Linear Regression ]]) as well as deep learning methods (see Section [[guide:013ef4b5cd#sec_deep_learning | Deep Learning ]]), are obtained by specific design choices for the three components. Chapter [[guide:2c0f621d22 | Empirical Risk Minimization ]] will then introduce <span class="mw-gls" data-name ="erm">ERM</span> as a main principle for how to operationally combine the individual ML components. Within the <span class="mw-gls" data-name ="erm">ERM</span> principle, ML problems become optimization problems and ML methods become optimization methods. | ||

==The Data== | ==<span id="sec_the_data"/>The Data== | ||

''' Data as Collections of <span class="mw-gls mw-gls-first" data-name ="datapoint">Data point</span>s.''' Maybe the most important component of any ML problem | ''' Data as Collections of <span class="mw-gls mw-gls-first" data-name ="datapoint">Data point</span>s.''' Maybe the most important component of any ML problem | ||

| Line 220: | Line 219: | ||

<div class="d-flex justify-content-center"> | <div class="d-flex justify-content-center"> | ||

<span id="fig:image "></span> | <span id="fig:image "></span> | ||

[[File:BergSee.jpg | | [[File:BergSee.jpg | 300px | thumb | A snapshot taken at the beginning of a mountain hike. ]] | ||

</div> | </div> | ||

| Line 233: | Line 232: | ||

Consider a <span class="mw-gls" data-name ="datapoint">data point</span> that represents a vaccine. A full characterization of such a <span class="mw-gls" data-name ="datapoint">data point</span> would require specifying its chemical composition down to the level of molecules and atoms. Moreover, there are properties of a vaccine that depend on the patient who received the vaccine.

We find it useful to distinguish between two different groups of properties of a <span class="mw-gls" data-name ="datapoint">data point</span>. The first group of properties is referred to as <span class="mw-gls" data-name ="features">features</span> and the second group of properties is referred to as <span class="mw-gls" data-name ="label">label</span>s. Roughly speaking, features are low-level properties of a <span class="mw-gls" data-name ="datapoint">data point</span> that can be measured or computed easily in an automated fashion. In contrast, labels are high-level properties of a <span class="mw-gls" data-name ="datapoint">data point</span> that represent some quantity of interest. Determining the label value of a <span class="mw-gls" data-name ="datapoint">data point</span> often requires human labour, e.g., a domain expert who has to examine the <span class="mw-gls" data-name ="datapoint">data point</span>. Some widely used synonyms for <span class="mw-gls" data-name ="features">features</span> are “covariate”, “explanatory variable”, “independent variable”, “input (variable)”, “predictor (variable)” or “regressor” <ref name="Gujarati2021">D. Gujarati and D. Porter. ''Basic Econometrics'' Mc-Graw Hill, 2009</ref><ref name="Dodge2003">Y. Dodge. ''The Oxford Dictionary of Statistical Terms'' Oxford University Press, 2003</ref><ref name="Everitt2022">B. Everitt. ''Cambridge Dictionary of Statistics'' Cambridge University Press, 2002</ref>. Some widely used synonyms for the label of a <span class="mw-gls" data-name ="datapoint">data point</span> are “response variable”, “output variable” or “target” <ref name="Gujarati2021"/><ref name="Dodge2003"/><ref name="Everitt2022"/>.

We will discuss the concepts of features and labels in somewhat more detail in Sections [[#sec_feature_space |Features]] and [[#sec_labels | Labels]]. | We will discuss the concepts of features and labels in somewhat more detail in Sections [[#sec_feature_space |Features]] and [[#sec_labels | Labels]]. | ||

| Line 240: | Line 239: | ||

Assume we have a list of <span class="mw-gls" data-name ="datapoint">data point</span>s, each of which is characterized by several properties that could, in principle, be measured easily (by sensors). These properties would be first candidates for being used as features of the <span class="mw-gls" data-name ="datapoint">data point</span>s. However, some of these properties are unknown (missing) for a small set of <span class="mw-gls" data-name ="datapoint">data point</span>s (e.g., due to broken sensors). We could then define the properties which are missing for some <span class="mw-gls" data-name ="datapoint">data point</span>s as labels and try to predict these labels using the remaining properties (which are known for all <span class="mw-gls" data-name ="datapoint">data point</span>s) as features. The task of determining missing values of properties that could be measured easily in principle is referred to as imputation <ref name="Abayomi2008DiagnosticsFM">K. Abayomi, A. Gelman, and M. A. Levy. Diagnostics for multivariate imputations. ''Journal of The Royal Statistical Society Series C-applied Statistics'' 57:273--291, 2008</ref>.
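The imputation idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name <code>impute_missing</code>, the toy sensor readings, and the choice of a linear model fitted by least squares are all hypothetical and not prescribed by the text. Any other hypothesis space could be used to predict the missing property.

```python
import numpy as np

def impute_missing(properties, target_col):
    """Fill NaN entries of one column using a linear least-squares fit on the other columns."""
    properties = np.asarray(properties, dtype=float)
    missing = np.isnan(properties[:, target_col])
    feature_cols = [c for c in range(properties.shape[1]) if c != target_col]
    # Features: the properties known for all data points, plus a constant for the intercept.
    X = np.column_stack([properties[:, feature_cols], np.ones(len(properties))])
    y = properties[:, target_col]
    # Learn the weights only from data points whose label (the target property) is known.
    weights, *_ = np.linalg.lstsq(X[~missing], y[~missing], rcond=None)
    filled = properties.copy()
    # Predict the label for the data points where it is missing.
    filled[missing, target_col] = X[missing] @ weights
    return filled

# Hypothetical sensor readings; the third property is missing (NaN) for the last data point.
readings = np.array([
    [1.0, 2.0, 5.0],
    [2.0, 0.0, 5.0],
    [0.0, 1.0, 2.0],
    [3.0, 1.0, 8.0],
    [1.0, 1.0, np.nan],
])
completed = impute_missing(readings, target_col=2)
```

Here the third property plays the role of the label and the first two properties play the role of the features.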

Missing data might also arise in image processing applications. Consider <span class="mw-gls" data-name ="datapoint">data point</span>s being images (snapshots) | Missing data might also arise in image processing applications. Consider <span class="mw-gls" data-name ="datapoint">data point</span>s being images (snapshots) | ||

| Line 261: | Line 261: | ||

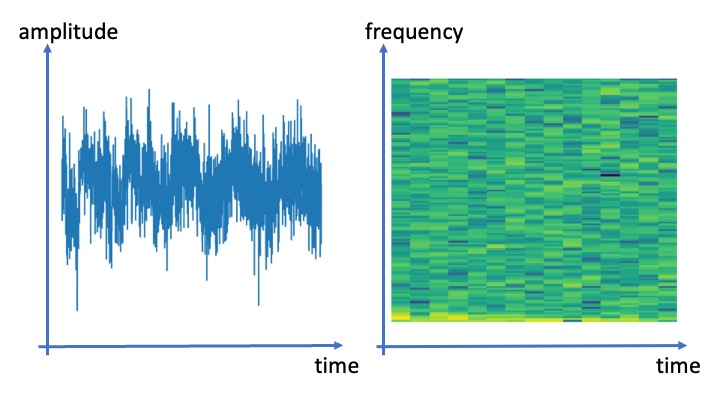

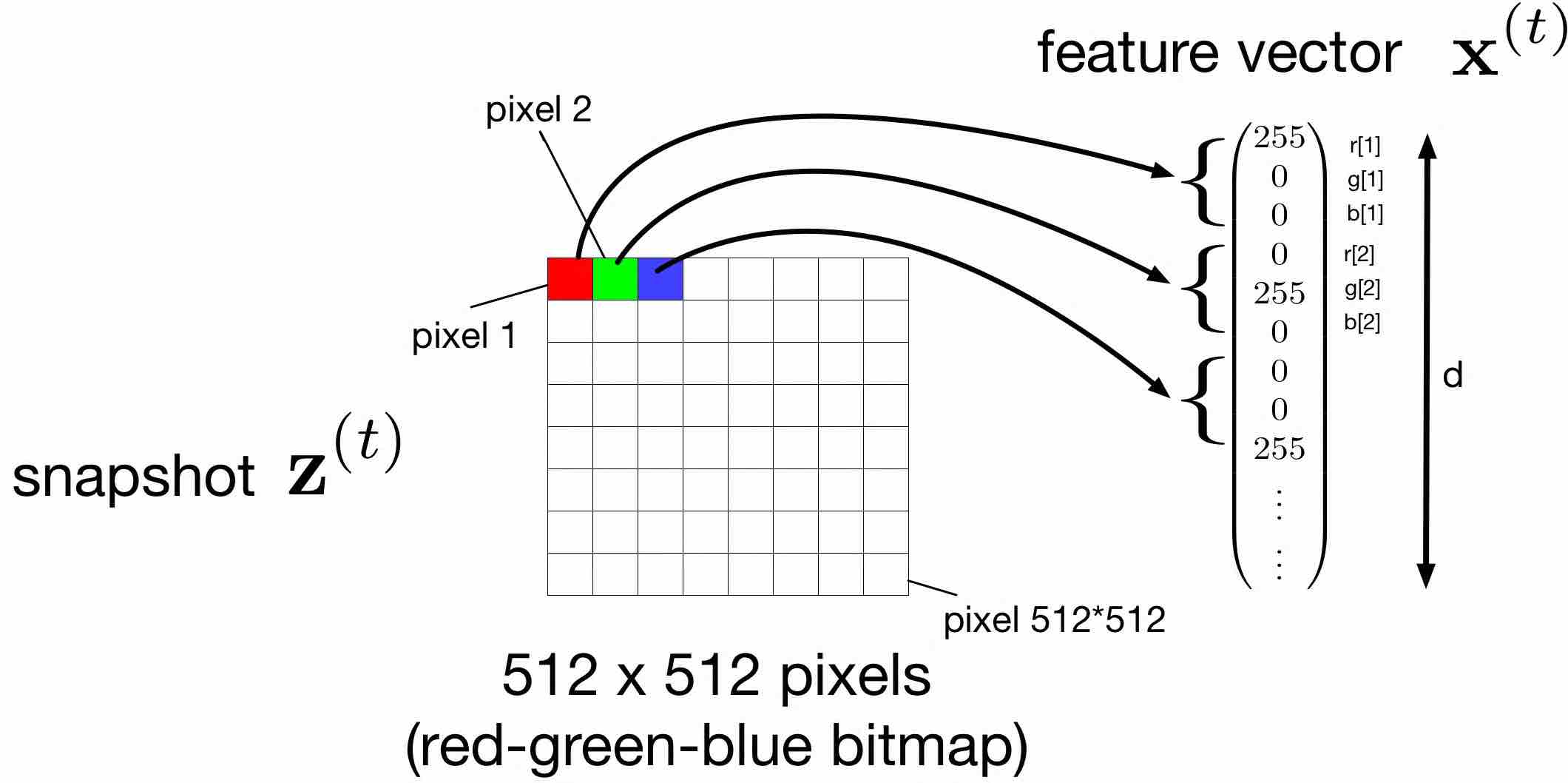

<span class="mw-gls" data-name ="datapoint">data point</span>s representing audio recordings (of a given duration) we might use the signal amplitudes at regular sampling instants (e.g., using sampling frequency <math>44</math> kHz) as features. For <span class="mw-gls" data-name ="datapoint">data point</span>s representing images it seems natural to use the colour (red, green and blue) intensity levels of each pixel as a feature (see Figure [[#fig_snapshot_pixels|fig_snapshot_pixels]]).

The feature construction for images depicted in Figure [[#fig_snapshot_pixels|fig_snapshot_pixels]] can be extended to other types of <span class="mw-gls" data-name ="datapoint">data point</span>s as long as they can be visualized efficiently <ref name="Friendly:06:hbook">M. Friendly. A brief history of data visualization. In C. Chen, W. Härdle, and A. Unwin, editors, ''Handbook of Computational Statistics: Data Visualization'' volume III. Springer-Verlag, 2006</ref>.
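As a minimal sketch of using pixel colour intensities as features, the following Python snippet stacks the red, green and blue levels of a tiny image into one feature vector. The image size, the random pixel values, and the ordering of the entries (row by row, with the three colour channels of each pixel next to each other) are assumptions made for illustration only.

```python
import numpy as np

# Hypothetical tiny snapshot: 4 x 6 pixels with red, green and blue intensity levels in 0..255.
height, width = 4, 6
rng = np.random.default_rng(0)
snapshot = rng.integers(0, 256, size=(height, width, 3), dtype=np.uint8)

# Stack all colour intensities into a single feature vector and rescale them to [0, 1].
features = snapshot.astype(float).ravel() / 255.0
```

The resulting feature vector has one entry per pixel and colour channel, so its length is three times the number of pixels.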

Audio recordings are typically available as a sequence of signal amplitudes <math>a_{\timeidx}</math> collected regularly at time instants <math>\timeidx=1,\ldots,\featuredim</math> with sampling frequency <math>\approx 44</math> kHz. From a signal processing perspective, it seems natural to directly use the signal amplitudes as features, <math>\feature_{\featureidx} = a_{\featureidx}</math> for <math>\featureidx=1,\ldots,\featurelen</math>. However, another choice for the features would be the pixel RGB values of some visualization of the audio recording.

| Line 270: | Line 272: | ||

</div> | </div> | ||

Figure [[#fig_visualization_audio|fig_visualization_audio]] depicts two possible visualizations of an audio signal. The first visualization is obtained from a line plot of the signal amplitudes as a function of time <math>\timeidx</math>. Another visualization of an audio recording is obtained from an intensity plot of its <span class="mw-gls" data-name ="spectogram">spectrogram</span><ref name="TimeFrequencyAnalysisBoashash">B. Boashash, editor. ''Time Frequency Signal Analysis and Processing: A Comprehensive Reference'' Elsevier, Amsterdam, The Netherlands, 2003</ref><ref name="MallatBook">S. G. Mallat. ''A Wavelet Tour of Signal Processing -- The Sparse Way'' Academic Press, San Diego, CA, 3 edition, 2009</ref>. We can then use the pixel RGB intensities of these visualizations as the features for an audio recording. Using this trick we can transform any ML method for image data into an ML method for audio data. In the same spirit, we can apply ML methods for image segmentation to the <span class="mw-gls mw-gls-first" data-name ="scatterplot">scatterplot</span> of a <span class="mw-gls" data-name ="dataset">dataset</span> in order to <span class="mw-gls mw-gls-first" data-name ="cluster">cluster</span> the <span class="mw-gls" data-name ="dataset">dataset</span> (see Chapter [[guide:1a7f020d42 | Clustering ]]).
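To make the spectrogram-based features more concrete, here is a rough Python sketch that turns a sequence of signal amplitudes into a time-frequency array and flattens it into a feature vector. It is a simplification under stated assumptions: the function name <code>spectrogram_features</code>, the frame length, the hop size and the synthetic 440 Hz test tone are hypothetical, and the sketch uses the spectrogram magnitudes directly instead of the pixel RGB values of a rendered intensity plot.

```python
import numpy as np

def spectrogram_features(amplitudes, frame_len=256, hop=128):
    """Compute magnitude spectra of overlapping frames and flatten them into a feature vector."""
    amplitudes = np.asarray(amplitudes, dtype=float)
    window = np.hanning(frame_len)
    starts = range(0, len(amplitudes) - frame_len + 1, hop)
    # Cut the recording into overlapping, windowed frames.
    frames = np.stack([amplitudes[s:s + frame_len] * window for s in starts])
    # Rows index time frames, columns index frequency bins.
    magnitudes = np.abs(np.fft.rfft(frames, axis=1))
    return magnitudes, magnitudes.ravel()

# Hypothetical one-second recording of a pure tone, sampled at 44 kHz.
sample_rate = 44_000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440.0 * t)
magnitudes, features = spectrogram_features(tone)
```

The two-dimensional array <code>magnitudes</code> can be treated like a greyscale image, which is what allows methods designed for image data to be reused for audio recordings.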

<div class="d-flex justify-content-center"> | <div class="d-flex justify-content-center"> | ||

| Line 288: | Line 291: | ||

The feature space is a design choice as it depends on what properties of a <span class="mw-gls" data-name ="datapoint">data point</span> we use as its features. This design choice should take into account the statistical properties of the data as well as the available computational infrastructure. If the computational infrastructure allows for efficient numerical linear algebra, then using <math>\featurespace = \mathbb{R}^{\featurelen}</math> might be a good choice. | The feature space is a design choice as it depends on what properties of a <span class="mw-gls" data-name ="datapoint">data point</span> we use as its features. This design choice should take into account the statistical properties of the data as well as the available computational infrastructure. If the computational infrastructure allows for efficient numerical linear algebra, then using <math>\featurespace = \mathbb{R}^{\featurelen}</math> might be a good choice. | ||

The <span class="mw-gls mw-gls-first" data-name ="euclidspace">Euclidean space</span> <math>\mathbb{R}^{\featuredim}</math> is an example of a feature space with a rich geometric and algebraic structure <ref name="RudinBookPrinciplesMatheAnalysis"/>. | The <span class="mw-gls mw-gls-first" data-name ="euclidspace">Euclidean space</span> <math>\mathbb{R}^{\featuredim}</math> is an example of a feature space with a rich geometric and algebraic structure <ref name="RudinBookPrinciplesMatheAnalysis">W. Rudin. ''Principles of Mathematical Analysis'' McGraw-Hill, New York, 3 edition, 1976</ref>. | ||

The algebraic structure of <math>\mathbb{R}^{\featuredim}</math> is defined by vector addition and multiplication of vectors with scalars. The geometric structure of <math>\mathbb{R}^{\featuredim}</math> is obtained from the Euclidean norm, which measures the distance between two elements of <math>\mathbb{R}^{\featuredim}</math> as the norm of their difference.

The algebraic and geometric structure of <math>\mathbb{R}^{\featuredim}</math> often enables an efficient search over <math>\mathbb{R}^{\featuredim}</math> to find elements with desired properties. Chapter [[guide:2c0f621d22#sec_ERM_lin_reg | ERM for Linear Regression ]] discusses examples of such search problems in the context of learning an optimal hypothesis. | The algebraic and geometric structure of <math>\mathbb{R}^{\featuredim}</math> often enables an efficient search over <math>\mathbb{R}^{\featuredim}</math> to find elements with desired properties. Chapter [[guide:2c0f621d22#sec_ERM_lin_reg | ERM for Linear Regression ]] discusses examples of such search problems in the context of learning an optimal hypothesis. | ||
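The algebraic and geometric operations mentioned above map directly onto numerical linear algebra routines. The following short Python sketch uses two arbitrary example vectors (chosen here only for illustration) to show vector addition, multiplication with a scalar, and the Euclidean distance computed as the norm of the difference.

```python
import numpy as np

# Two hypothetical feature vectors in three-dimensional Euclidean space.
x = np.array([1.0, 2.0, 2.0])
z = np.array([4.0, 6.0, 2.0])

# Algebraic structure: vector addition and multiplication with a scalar.
vector_sum = x + z
scaled = 2.5 * x

# Geometric structure: Euclidean distance as the norm of the difference.
distance = np.linalg.norm(x - z)
```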

| Line 294: | Line 297: | ||

Modern information technology, including smartphones or wearables, allows us to measure a huge number of properties of <span class="mw-gls" data-name ="datapoint">data point</span>s in many application domains. Consider a <span class="mw-gls" data-name ="datapoint">data point</span> representing the book author “Alex Jung”. Alex uses a smartphone to take roughly five snapshots per day (sometimes more, e.g., during a mountain hike) resulting in more than <math>1000</math> snapshots per year. Each snapshot contains around <math>10^{6}</math> pixels whose greyscale levels we can use as features of the <span class="mw-gls" data-name ="datapoint">data point</span>. This results in more than <math>10^{9}</math> features (per year!).
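The feature count quoted above follows from simple arithmetic, which the following Python lines spell out. The value of 365 days per year is the only number not taken from the text.

```python
snapshots_per_day = 5           # "roughly five snapshots per day"
days_per_year = 365             # assumption: a non-leap year
pixels_per_snapshot = 10**6     # "around 10^6 pixels" per snapshot

snapshots_per_year = snapshots_per_day * days_per_year
features_per_year = snapshots_per_year * pixels_per_snapshot
```

With these values, <code>snapshots_per_year</code> is 1825 and <code>features_per_year</code> is 1825000000, consistent with the bounds stated in the text.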

As indicated above, many important ML applications involve <span class="mw-gls" data-name ="datapoint">data point</span>s represented by very long feature vectors. To process such high-dimensional data, modern ML methods rely on concepts from high-dimensional statistics <ref name="BuhlGeerBook"/><ref name="Wain2019"/>. One such concept from high-dimensional statistics is the notion of sparsity. Section [[guide:013ef4b5cd#sec_lasso | The Lasso ]] discusses methods that exploit the tendency of high-dimensional <span class="mw-gls" data-name ="datapoint">data point</span>s, which are characterized by a large number <math>\featuredim</math> of features, to concentrate near low-dimensional subspaces in the <span class="mw-gls" data-name ="featurespace">feature space</span> <ref name="VidalMag"/>. | As indicated above, many important ML applications involve <span class="mw-gls" data-name ="datapoint">data point</span>s represented by very long feature vectors. To process such high-dimensional data, modern ML methods rely on concepts from high-dimensional statistics <ref name="BuhlGeerBook">P. Bühlmann and S. van de Geer. ''Statistics for High-Dimensional Data'' Springer, New York, 2011</ref><ref name="Wain2019">M. Wainwright. ''High-Dimensional Statistics: A Non-Asymptotic Viewpoint'' Cambridge: Cambridge University Press, 2019</ref>. One such concept from high-dimensional statistics is the notion of sparsity. Section [[guide:013ef4b5cd#sec_lasso | The Lasso ]] discusses methods that exploit the tendency of high-dimensional <span class="mw-gls" data-name ="datapoint">data point</span>s, which are characterized by a large number <math>\featuredim</math> of features, to concentrate near low-dimensional subspaces in the <span class="mw-gls" data-name ="featurespace">feature space</span> <ref name="VidalMag">R. Vidal. Subspace clustering. ''IEEE Signal Processing Magazine'' 52, March 2011</ref>. | ||

At first sight it might seem that “the more features the better” since using more features might convey more relevant information to achieve the overall goal. However, as we discuss in Chapter [[guide:50be9327aa | Regularization ]], it can be detrimental for the performance of ML methods to use an excessive number of (irrelevant) features.

| Line 309: | Line 312: | ||

Besides the available computational infrastructure, the statistical properties of datasets must also be taken into account when choosing the feature space. The linear algebraic structure of <math>\mathbb{R}^{\featuredim}</math> allows us to efficiently represent and approximate datasets that are well aligned along linear subspaces. Section [[guide:4f25e79970#sec_pca | Principal Component Analysis]] discusses a basic method to optimally approximate datasets by linear subspaces of a given dimension. The geometric structure of <math>\mathbb{R}^{\featuredim}</math> is also used in Chapter [[guide:1a7f020d42 | Clustering ]] to decompose a <span class="mw-gls" data-name ="dataset">dataset</span> into a few groups or clusters that consist of similar <span class="mw-gls" data-name ="datapoint">data point</span>s.

Throughout this book we will mainly use the feature space <math>\mathbb{R}^{\featuredim}</math> with dimension <math>\featuredim</math> being the number of features of a <span class="mw-gls" data-name ="datapoint">data point</span>. This <span class="mw-gls" data-name ="featurespace">feature space</span> has proven useful in many ML applications due to the availability of efficient software and hardware for numerical linear algebra. Moreover, the algebraic and geometric structure of <math>\mathbb{R}^{\featuredim}</math> reflects the intrinsic structure of data arising in many important application domains. This should not be too surprising as the <span class="mw-gls" data-name ="euclidspace">Euclidean space</span> has evolved as a useful mathematical abstraction of physical phenomena <ref name="KibbleBerkshireBook">T. Kibble and F. Berkshire. ''Classical Mechanics'' Imperial College Press, 5 edition, 2011</ref>.

In general there is no mathematically correct choice for which properties of a <span class="mw-gls" data-name ="datapoint">data point</span> to use as its features. Most application domains allow for some design freedom in the choice of features. Let us illustrate this design freedom with a personalized health-care application. This application involves <span class="mw-gls" data-name ="datapoint">data point</span>s that represent audio recordings with a fixed duration of three seconds. These recordings are obtained via smartphone microphones and used to detect coughing <ref name="CougDetection2019">F. Barata, K. Kipfer, M. Weber, P. Tinschert, E. Fleisch, and T. Kowatsch. Towards device-agnostic mobile cough detection with convolutional neural networks. In ''2019 IEEE International Conference on Healthcare Informatics (ICHI)'' pages 1--11, 2019</ref>.

===<span id="sec_labels"/>Labels=== | ===<span id="sec_labels"/>Labels=== | ||

| Line 359: | Line 364: | ||

We refer to a <span class="mw-gls" data-name ="datapoint">data point</span> as being ''labeled'' if, besides its features <math>\featurevec</math>, the value of its label <math>\truelabel</math> is known. The acquisition of labeled <span class="mw-gls" data-name ="datapoint">data point</span>s typically involves human labour, such as verifying if an image shows a cat. In other applications, acquiring labels might require sending out a team of marine biologists to the Baltic Sea <ref name="MLMarineBiology">S. Smoliski and K. Radtke. Spatial prediction of demersal fish diversity in the baltic sea: comparison of machine learning and regression-based techniques. ''ICES Journal of Marine Science'' 74(1):102--111, 2017</ref>, running a particle physics experiment at the European Organization for Nuclear Research (CERN) <ref name="MLCERN">S. Carrazza. Machine learning challenges in theoretical HEP. ''arXiv'' 2018</ref>, or conducting animal trials in pharmacology <ref name="MLPharma">M. Gao, H. Igata, A. Takeuchi, K. Sato, and Y. Ikegaya. Machine learning-based prediction of adverse drug effects: An example of seizure-inducing compounds. ''Journal of Pharmacological Sciences'' 133(2):70 -- 78, 2017</ref>.

Let us also point out online marketplaces for a human labelling workforce <ref name="Mort2018"/>. These marketplaces | Let us also point out online marketplaces for a human labelling workforce <ref name="Mort2018">K. Mortensen and T. Hughes. Comparing amazon's mechanical turk platform to conventional data | ||

collection methods in the health and medical research literature. ''J. Gen. Intern Med.'' 33(4):533--538, 2018</ref>. These marketplaces | |||

allow users to upload <span class="mw-gls" data-name ="datapoint">data point</span>s, such as collections of images or audio recordings, and then offer an hourly rate to humans who label the <span class="mw-gls" data-name ="datapoint">data point</span>s. This labeling work might amount to marking images that show a cat. | allow users to upload <span class="mw-gls" data-name ="datapoint">data point</span>s, such as collections of images or audio recordings, and then offer an hourly rate to humans who label the <span class="mw-gls" data-name ="datapoint">data point</span>s. This labeling work might amount to marking images that show a cat. | ||

Many applications involve <span class="mw-gls" data-name ="datapoint">data point</span>s whose features can be determined easily, but whose labels are known for few <span class="mw-gls" data-name ="datapoint">data point</span>s only. Labeled data is a scarce resource. Some of the most successful ML methods have been devised in application domains where label information can be acquired easily <ref name="UnreasonableData"/>. ML methods for speech recognition and machine translation can make use of massive labeled datasets that are freely available <ref name="Koehn2005"/>. | Many applications involve <span class="mw-gls" data-name ="datapoint">data point</span>s whose features can be determined easily, but whose labels are known for few <span class="mw-gls" data-name ="datapoint">data point</span>s only. Labeled data is a scarce resource. Some of the most successful ML methods have been devised in application domains where label information can be acquired easily <ref name="UnreasonableData">A. Halevy, P. Norvig, and F. Pereira. The unreasonable effectiveness of data. ''IEEE Intelligent Systems'' March/April 2009</ref>. ML methods for speech recognition and machine translation can make use of massive labeled datasets that are freely available <ref name="Koehn2005">P. Koehn. Europarl: A parallel corpus for statistical machine translation. In ''The 10th Machine Translation Summit, page 79--86., AAMT,'' | ||

Phuket, Thailand, 2005</ref>. | |||

In the extreme case, we do not know the label of any single <span class="mw-gls" data-name ="datapoint">data point</span>. Even in the absence of any labeled data, ML methods can be useful for extracting relevant information from features only. We refer to ML methods which do not require any labeled <span class="mw-gls" data-name ="datapoint">data point</span>s as “unsupervised” ML methods. We discuss some of the most important unsupervised ML methods in Chapter [[guide:1a7f020d42 | Clustering ]] | In the extreme case, we do not know the label of any single <span class="mw-gls" data-name ="datapoint">data point</span>. Even in the absence of any labeled data, ML methods can be useful for extracting relevant information from features only. We refer to ML methods which do not require any labeled <span class="mw-gls" data-name ="datapoint">data point</span>s as “unsupervised” ML methods. We discuss some of the most important unsupervised ML methods in Chapter [[guide:1a7f020d42 | Clustering ]] | ||

| Line 371: | Line 380: | ||

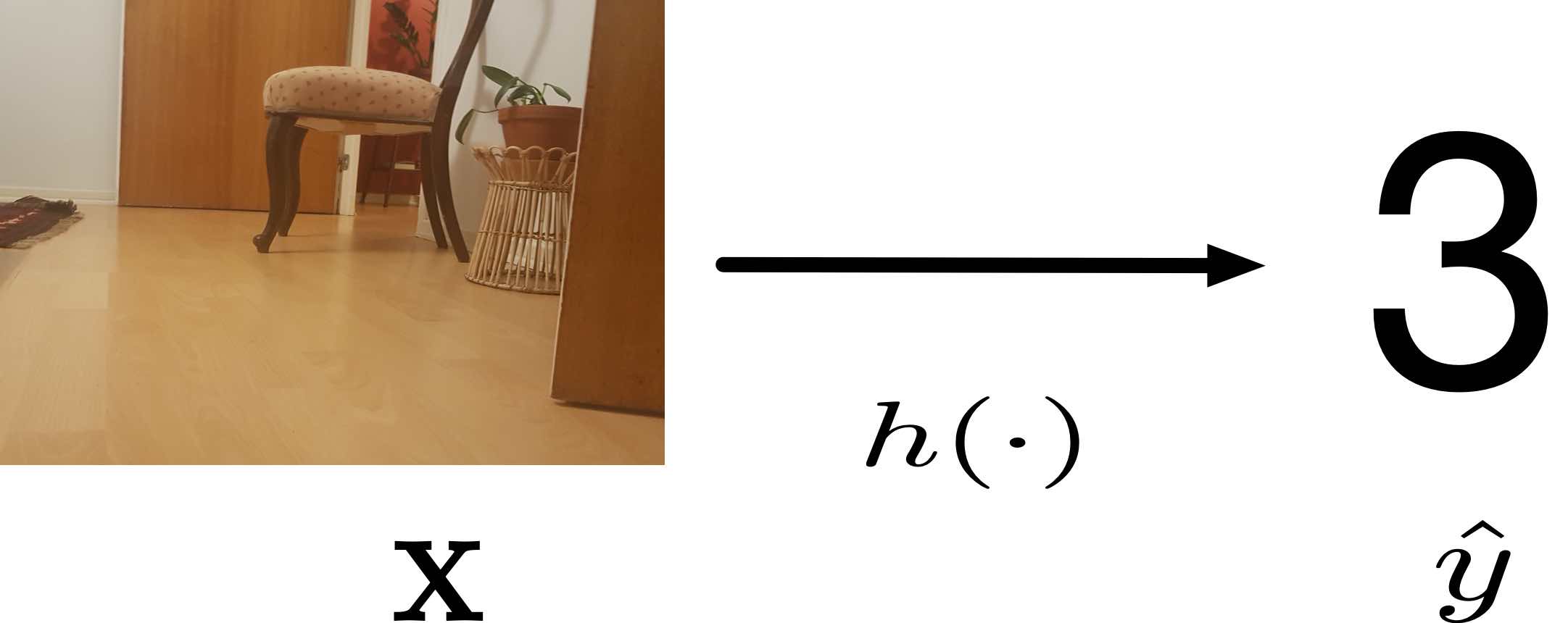

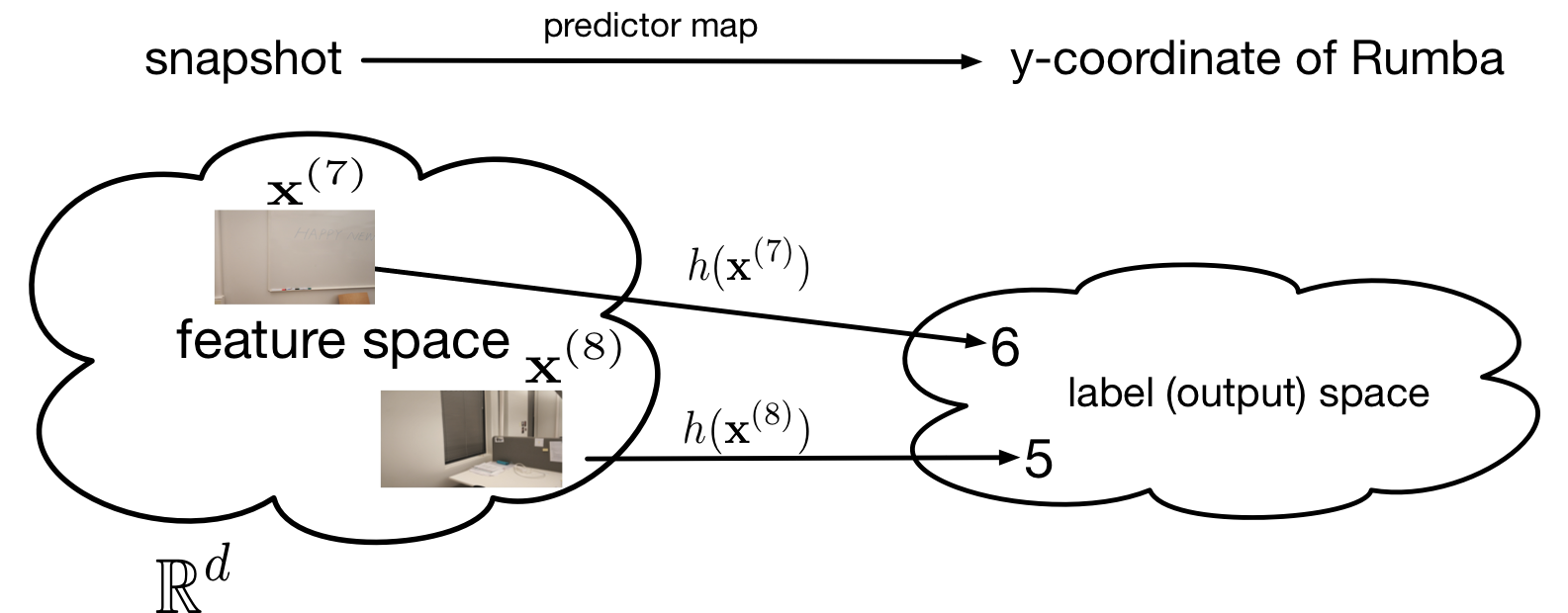

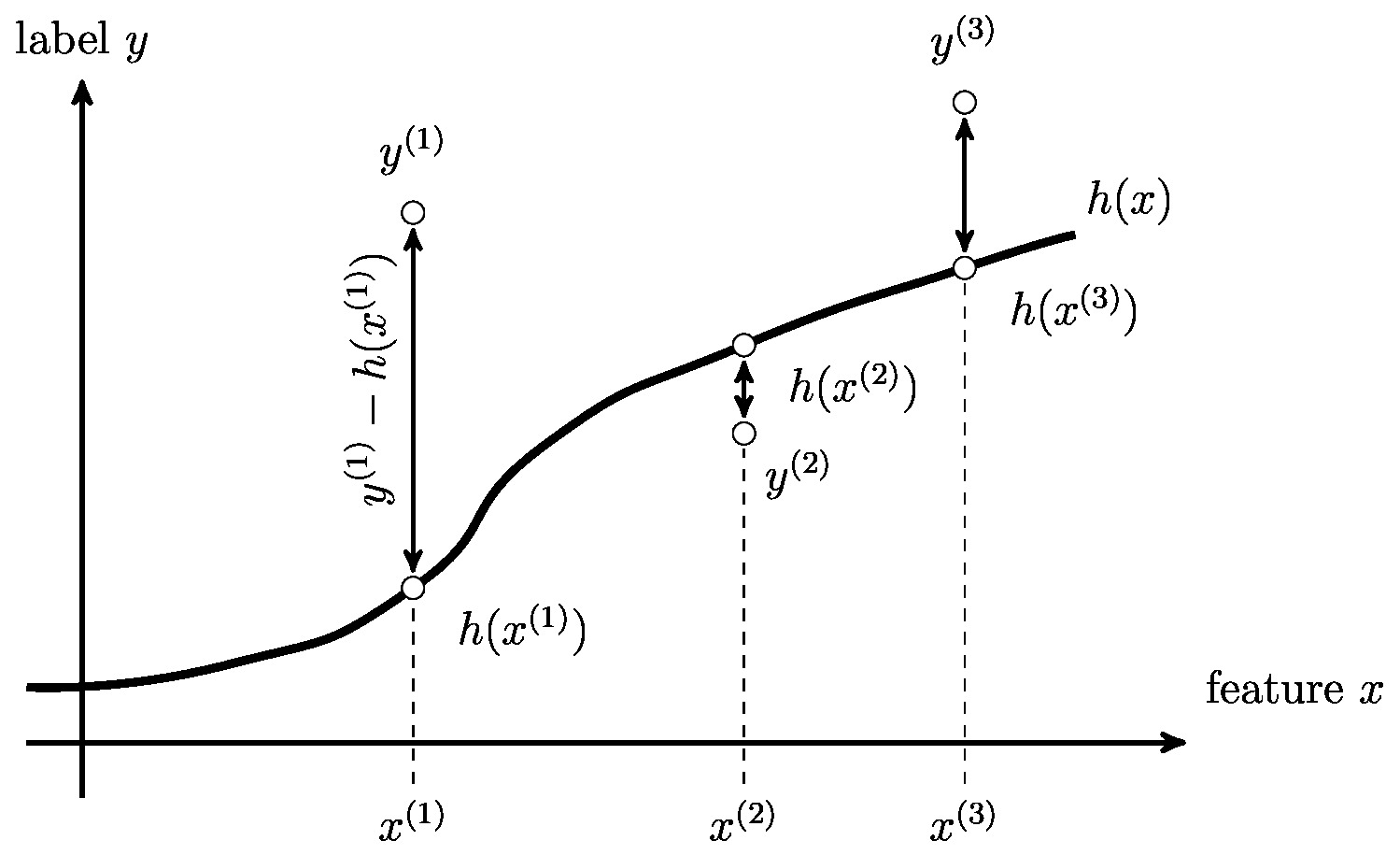

ML methods learn (or search for) a “good” predictor <math>h: \featurespace \rightarrow \labelspace</math> which takes the features <math>\featurevec \in \featurespace</math> of a <span class="mw-gls" data-name ="datapoint">data point</span> as its input and outputs a predicted label (or output, or target) <math>\hat{\truelabel} = h(\featurevec) \in \labelspace</math>. A good predictor should be such that <math>\hat{\truelabel} \approx \truelabel</math>, i.e., the predicted label <math>\hat{\truelabel}</math> is close (with small error <math>\hat{\truelabel} - \truelabel</math>) to the true underlying label <math>\truelabel</math>. | ML methods learn (or search for) a “good” predictor <math>h: \featurespace \rightarrow \labelspace</math> which takes the features <math>\featurevec \in \featurespace</math> of a <span class="mw-gls" data-name ="datapoint">data point</span> as its input and outputs a predicted label (or output, or target) <math>\hat{\truelabel} = h(\featurevec) \in \labelspace</math>. A good predictor should be such that <math>\hat{\truelabel} \approx \truelabel</math>, i.e., the predicted label <math>\hat{\truelabel}</math> is close (with small error <math>\hat{\truelabel} - \truelabel</math>) to the true underlying label <math>\truelabel</math>. | ||

===Scatterplot=== | ===<span id="equ_subsection_scatterplot"/>Scatterplot=== | ||

Consider <span class="mw-gls" data-name ="datapoint">data point</span>s characterized by a single numeric feature <math>\feature</math> and single numeric label <math>\truelabel</math>. | Consider <span class="mw-gls" data-name ="datapoint">data point</span>s characterized by a single numeric feature <math>\feature</math> and single numeric label <math>\truelabel</math>. | ||

| Line 391: | Line 400: | ||

However, this interpretation allows us to use the properties of the <span class="mw-gls" data-name ="probdist">probability distribution</span> to characterize overall properties of entire datasets, i.e., large collections of <span class="mw-gls" data-name ="datapoint">data point</span>s. | However, this interpretation allows us to use the properties of the <span class="mw-gls" data-name ="probdist">probability distribution</span> to characterize overall properties of entire datasets, i.e., large collections of <span class="mw-gls" data-name ="datapoint">data point</span>s. | ||

The <span class="mw-gls" data-name ="probdist">probability distribution</span> <math>p(\feature)</math> underlying the <span class="mw-gls" data-name ="datapoint">data point</span>s within the <span class="mw-gls mw-gls-first" data-name ="iidasspt">i.i.d. assumption</span> is either known (based on some domain expertise) or estimated from data. It is often enough to estimate only some parameters of the distribution <math>p(\feature)</math>. Section [[guide:013ef4b5cd#sec_max_iikelihood | Maximum Likelihood ]] discusses a principled approach to estimate the parameters of a <span class="mw-gls" data-name ="probdist">probability distribution</span> from a given set of <span class="mw-gls" data-name ="datapoint">data point</span>s. This approach is sometimes referred to as maximum likelihood and aims at finding (parameter of) a <span class="mw-gls" data-name ="probdist">probability distribution</span> <math>p(\feature)</math> such that the probability (density) of observing the given <span class="mw-gls" data-name ="datapoint">data point</span>s is maximized <ref name="LC"/><ref name="kay"/><ref name="BertsekasProb"/>. | The <span class="mw-gls" data-name ="probdist">probability distribution</span> <math>p(\feature)</math> underlying the <span class="mw-gls" data-name ="datapoint">data point</span>s within the <span class="mw-gls mw-gls-first" data-name ="iidasspt">i.i.d. assumption</span> is either known (based on some domain expertise) or estimated from data. It is often enough to estimate only some parameters of the distribution <math>p(\feature)</math>. Section [[guide:013ef4b5cd#sec_max_iikelihood | Maximum Likelihood ]] discusses a principled approach to estimate the parameters of a <span class="mw-gls" data-name ="probdist">probability distribution</span> from a given set of <span class="mw-gls" data-name ="datapoint">data point</span>s. 
This approach is sometimes referred to as maximum likelihood and aims at finding (parameter of) a <span class="mw-gls" data-name ="probdist">probability distribution</span> <math>p(\feature)</math> such that the probability (density) of observing the given <span class="mw-gls" data-name ="datapoint">data point</span>s is maximized <ref name="LC">E. L. Lehmann and G. Casella. ''Theory of Point Estimation'' Springer, New York, 2nd edition, 1998</ref><ref name="kay">S. M. Kay. ''Fundamentals of Statistical Signal Processing: Estimation | ||

Theory'' Prentice Hall, Englewood Cliffs, NJ, 1993</ref><ref name="BertsekasProb">D. Bertsekas and J. Tsitsiklis. ''Introduction to Probability'' Athena Scientific, 2 edition, 2008</ref>. | |||

Two of the most basic and widely used parameters of a <span class="mw-gls" data-name ="probdist">probability distribution</span> <math>p(\feature)</math> are the expected value or <span class="mw-gls mw-gls-first" data-name ="mean">mean</span> <ref name="BillingsleyProbMeasure"/> | Two of the most basic and widely used parameters of a <span class="mw-gls" data-name ="probdist">probability distribution</span> <math>p(\feature)</math> are the expected value or <span class="mw-gls mw-gls-first" data-name ="mean">mean</span> <ref name="BillingsleyProbMeasure">P. Billingsley. ''Probability and Measure'' Wiley, New York, 3 edition, 1995</ref> | ||

<math>\mu_{\feature} = \expect\{\feature\} \defeq \int_{\feature'} \feature' p(\feature') d\feature'</math> and the <span class="mw-gls mw-gls-first" data-name ="variance">variance</span> <math>\sigma_{\feature}^{2} \defeq \expect\big\{\big(\feature-\expect\{\feature\}\big)^{2}\big\}.</math> These parameters can be estimated using the sample mean (average) and sample variance, | <math>\mu_{\feature} = \expect\{\feature\} \defeq \int_{\feature'} \feature' p(\feature') d\feature'</math> and the <span class="mw-gls mw-gls-first" data-name ="variance">variance</span> <math>\sigma_{\feature}^{2} \defeq \expect\big\{\big(\feature-\expect\{\feature\}\big)^{2}\big\}.</math> These parameters can be estimated using the sample mean (average) and sample variance, | ||

| Line 403: | Line 413: | ||

</math> | </math> | ||

The sample mean and sample variance \eqref{equ_sample_mean_var} are the <span class="mw-gls mw-gls-first" data-name ="ml">maximum likelihood</span> estimators for the mean and variance of a normal (Gaussian) distribution <math>p(\feature)</math> (see <ref name="BishopBook"/>{{rp | at=Chapter 2.3.4}}). | The sample mean and sample variance \eqref{equ_sample_mean_var} are the <span class="mw-gls mw-gls-first" data-name ="ml">maximum likelihood</span> estimators for the mean and variance of a normal (Gaussian) distribution <math>p(\feature)</math> (see <ref name="BishopBook">C. M. Bishop. ''Pattern Recognition and Machine Learning'' Springer, 2006</ref>{{rp | at=Chapter 2.3.4}}). | ||
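As a minimal sketch (not taken from the book), the following Python snippet computes the sample mean and sample variance of a small dataset. It assumes that the sample variance is normalised by the number <math>\samplesize</math> of <span class="mw-gls" data-name ="datapoint">data point</span>s, which is the form obtained as the maximum likelihood estimator for a Gaussian distribution, rather than by <math>\samplesize-1</math>. The array <code>x</code> and the function name <code>sample_mean_var</code> are hypothetical.

```python
import numpy as np

def sample_mean_var(x):
    """Return the sample mean and the (1/m)-normalised sample variance."""
    x = np.asarray(x, dtype=float)
    m = x.shape[0]                      # number of data points
    mean = x.sum() / m                  # sample mean (average)
    var = ((x - mean) ** 2).sum() / m   # sample variance, normalised by m (assumption)
    return mean, var

# Hypothetical feature values of four data points.
x = np.array([1.0, 2.0, 3.0, 4.0])
mean, var = sample_mean_var(x)
print(mean, var)
```

The same quantities are returned by <code>np.mean(x)</code> and <code>np.var(x)</code>, since <code>np.var</code> normalises by the number of entries by default.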

Most of the ML methods discussed in this book are motivated by an <span class="mw-gls" data-name ="iidasspt">i.i.d. assumption</span>. It is important to note that this <span class="mw-gls" data-name ="iidasspt">i.i.d. assumption</span> is only a modelling assumption (or hypothesis). There is no means to verify if an arbitrary set of <span class="mw-gls" data-name ="datapoint">data point</span>s are “exactly” realizations of <span class="mw-gls" data-name ="iid">iid</span> <span class="mw-gls" data-name ="rv">RV</span>s. However, there are principled statistical methods (hypothesis tests) that allow us to test whether a given set of <span class="mw-gls" data-name ="datapoint">data point</span>s can be well approximated as realizations of <span class="mw-gls" data-name ="iid">iid</span> <span class="mw-gls" data-name ="rv">RV</span>s <ref name="Luetkepol2005"/>. Alternatively, we can enforce the <span class="mw-gls" data-name ="iidasspt">i.i.d. assumption</span> if we generate synthetic data using a random number generator. Such synthetic <span class="mw-gls" data-name ="iid">iid</span> <span class="mw-gls" data-name ="datapoint">data point</span>s could be obtained by sampling algorithms that incrementally build a synthetic dataset by adding randomly chosen raw <span class="mw-gls" data-name ="datapoint">data point</span>s <ref name="Efron97"/>. | Most of the ML methods discussed in this book are motivated by an <span class="mw-gls" data-name ="iidasspt">i.i.d. assumption</span>. It is important to note that this <span class="mw-gls" data-name ="iidasspt">i.i.d. assumption</span> is only a modelling assumption (or hypothesis). There is no means to verify if an arbitrary set of <span class="mw-gls" data-name ="datapoint">data point</span>s are “exactly” realizations of <span class="mw-gls" data-name ="iid">iid</span> <span class="mw-gls" data-name ="rv">RV</span>s. 
However, there are principled statistical methods (hypothesis tests) that allow us to test whether a given set of <span class="mw-gls" data-name ="datapoint">data point</span>s can be well approximated as realizations of <span class="mw-gls" data-name ="iid">iid</span> <span class="mw-gls" data-name ="rv">RV</span>s <ref name="Luetkepol2005">H. Lütkepohl. ''New Introduction to Multiple Time Series Analysis'' Springer, New York, 2005</ref>. Alternatively, we can enforce the <span class="mw-gls" data-name ="iidasspt">i.i.d. assumption</span> if we generate synthetic data using a random number generator. Such synthetic <span class="mw-gls" data-name ="iid">iid</span> <span class="mw-gls" data-name ="datapoint">data point</span>s could be obtained by sampling algorithms that incrementally build a synthetic dataset by adding randomly chosen raw <span class="mw-gls" data-name ="datapoint">data point</span>s <ref name="Efron97">B. Efron and R. Tibshirani. Improvements on cross-validation: The 632+ bootstrap method. ''Journal of the American Statistical Association'' | ||

92(438):548--560, 1997</ref>. | |||
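The sampling idea described above can be sketched as follows. This is only an illustration under stated assumptions: the raw <span class="mw-gls" data-name ="datapoint">data point</span>s are stored in a NumPy array, each synthetic data point is drawn uniformly at random with replacement (as in the bootstrap), and the names <code>bootstrap_sample</code>, <code>raw</code> and <code>seed</code> are hypothetical.

```python
import numpy as np

def bootstrap_sample(raw, size, seed=0):
    """Build a synthetic dataset by drawing raw data points uniformly with replacement."""
    rng = np.random.default_rng(seed)                # seeded random number generator
    raw = np.asarray(raw)
    idx = rng.integers(0, raw.shape[0], size=size)   # independent, uniformly distributed indices
    return raw[idx]

# Hypothetical raw data points (one numeric feature each).
raw = np.array([0.3, 1.7, 2.2, 4.1, 5.0])
synthetic = bootstrap_sample(raw, size=8)
print(synthetic)
```

Because the indices are drawn independently from the same distribution, the resulting data points are i.i.d. draws from the empirical distribution of the raw data, by construction.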

==The Model== | ==<span id="sec_hypo_space"/>The Model== | ||

Consider some ML application that generates <span class="mw-gls" data-name ="datapoint">data point</span>s, each characterized by features <math>\featurevec \in \featurespace</math> and label <math>\truelabel \in \labelspace</math>. The goal of a ML method is to learn a hypothesis map <math>h: \featurespace \rightarrow \labelspace</math> such that | Consider some ML application that generates <span class="mw-gls" data-name ="datapoint">data point</span>s, each characterized by features <math>\featurevec \in \featurespace</math> and label <math>\truelabel \in \labelspace</math>. The goal of a ML method is to learn a hypothesis map <math>h: \featurespace \rightarrow \labelspace</math> such that | ||

| Line 414: | Line 425: | ||

\label{equ_approx_label_pred} | \label{equ_approx_label_pred} | ||

\truelabel \approx \underbrace{h(\featurevec)}_{\hat{\truelabel}} \mbox{ for any data point}. | \truelabel \approx \underbrace{h(\featurevec)}_{\hat{\truelabel}} \mbox{ for any data point}. | ||

\end{equation}</math> The informal goal \eqref{equ_approx_label_pred} will be made precise in several aspects throughout the rest of our book. First, we need to quantify the approximation error \eqref{equ_approx_label_pred} incurred by a given hypothesis map <math>h</math>. Second, we need to make precise what we actually mean by requiring \eqref{equ_approx_label_pred} to hold for “any” <span class="mw-gls" data-name ="datapoint">data point</span>. We solve the first issue by the concept of a loss function in Section [[ | \end{equation}</math> The informal goal \eqref{equ_approx_label_pred} will be made precise in several aspects throughout the rest of our book. First, we need to quantify the approximation error \eqref{equ_approx_label_pred} incurred by a given hypothesis map <math>h</math>. Second, we need to make precise what we actually mean by requiring \eqref{equ_approx_label_pred} to hold for “any” <span class="mw-gls" data-name ="datapoint">data point</span>. We solve the first issue by the concept of a loss function in Section [[#sec_lossfct | The Loss ]]. The second issue is then solved in Chapter [[guide:2c0f621d22 | Empirical Risk Minimization ]] by using a simple probabilistic model for data. | ||

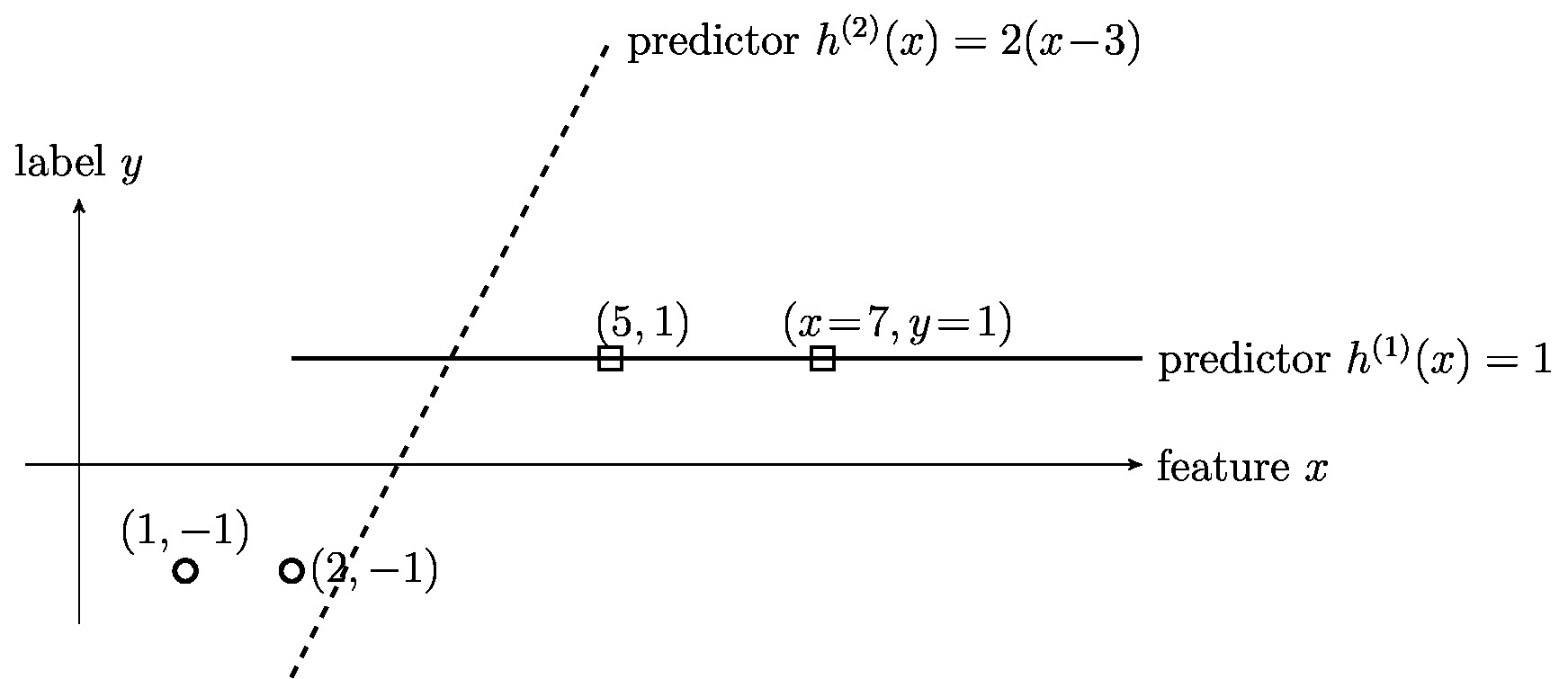

Let us assume for the time being that we have found a reasonable hypothesis <math>h</math> in the sense of \eqref{equ_approx_label_pred}. We can then use this hypothesis to predict the label of any <span class="mw-gls" data-name ="datapoint">data point</span> for which we know its features. The prediction <math>\hat{\truelabel}=h(\featurevec)</math> is obtained by evaluating the hypothesis for the features <math>\featurevec</math> of a <span class="mw-gls" data-name ="datapoint">data point</span> (see Figure [[#fig_feature_map_eval|fig_feature_map_eval]] and [[#fig:Hypothesis_Map|fig:Hypothesis_Map]]). | Let us assume for the time being that we have found a reasonable hypothesis <math>h</math> in the sense of \eqref{equ_approx_label_pred}. We can then use this hypothesis to predict the label of any <span class="mw-gls" data-name ="datapoint">data point</span> for which we know its features. The prediction <math>\hat{\truelabel}=h(\featurevec)</math> is obtained by evaluating the hypothesis for the features <math>\featurevec</math> of a <span class="mw-gls" data-name ="datapoint">data point</span> (see Figure [[#fig_feature_map_eval|fig_feature_map_eval]] and [[#fig:Hypothesis_Map|fig:Hypothesis_Map]]). | ||

| Line 435: | Line 446: | ||

In general, the set <math>\labelspace^{\featurespace}</math> is far too large to be searched by any practical ML method. | In general, the set <math>\labelspace^{\featurespace}</math> is far too large to be searched by any practical ML method. | ||

As a case in point, consider <span class="mw-gls" data-name ="datapoint">data point</span>s characterized by a single numeric feature <math>\feature \in \mathbb{R}</math> and label <math>\truelabel \in \mathbb{R}</math>. The set of all real-valued maps <math>h(\feature)</math> of a real-valued argument already contains uncountably many different hypothesis maps <ref name="HalmosSet"/>. | As a case in point, consider <span class="mw-gls" data-name ="datapoint">data point</span>s characterized by a single numeric feature <math>\feature \in \mathbb{R}</math> and label <math>\truelabel \in \mathbb{R}</math>. The set of all real-valued maps <math>h(\feature)</math> of a real-valued argument already contains uncountably many different hypothesis maps <ref name="HalmosSet">P. Halmos. ''Naive set theory'' Springer-Verlag, 1974</ref>. | ||

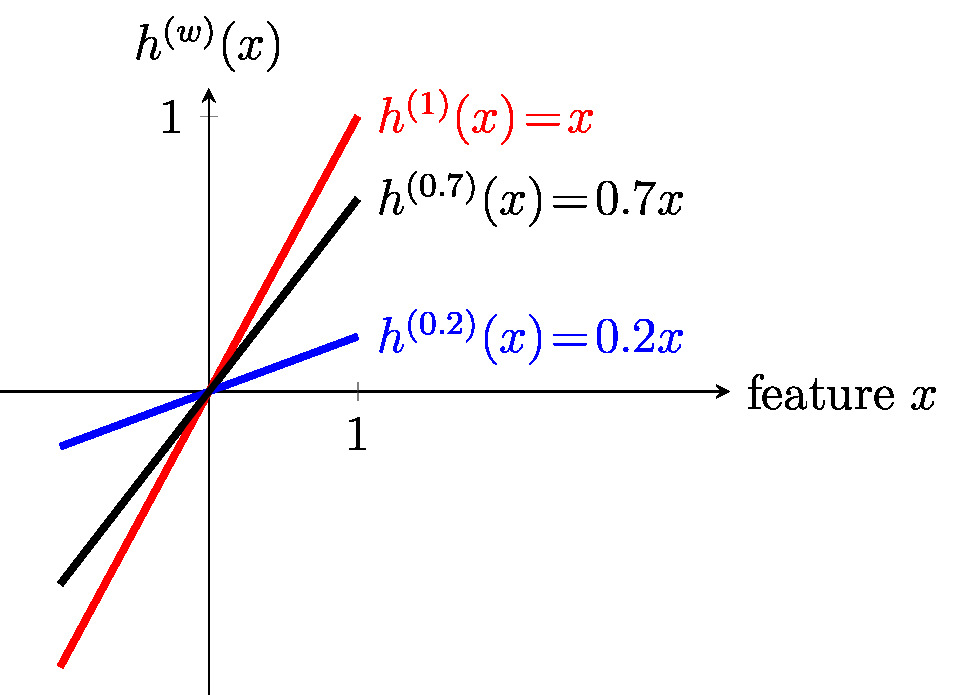

Practical ML methods can search and evaluate only a (tiny) subset of all possible hypothesis maps. This subset of computationally feasible (“affordable”) hypothesis maps is referred to as the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> or <span class="mw-gls" data-name ="model">model</span> underlying a ML method. As depicted in Figure [[#fig_hypo_space|fig_hypo_space]], ML methods typically use a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math> that is a tiny subset of <math>\labelspace^{\featurespace}</math>. Similar to the features and labels used to characterize | Practical ML methods can search and evaluate only a (tiny) subset of all possible hypothesis maps. This subset of computationally feasible (“affordable”) hypothesis maps is referred to as the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> or <span class="mw-gls" data-name ="model">model</span> underlying a ML method. As depicted in Figure [[#fig_hypo_space|fig_hypo_space]], ML methods typically use a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math> that is a tiny subset of <math>\labelspace^{\featurespace}</math>. Similar to the features and labels used to characterize | ||

| Line 465: | Line 476: | ||

* It has to be sufficiently large such that it contains at least one accurate predictor map <math>\hat{h} \in \hypospace</math>. A hypothesis space <math>\hypospace</math> that is too small might fail to include a predictor map required to reproduce the (potentially highly non-linear) relation between features and label. Consider the task of grouping or classifying images into “cat” images and “no cat image”. The classification of each image is based solely on the feature vector obtained from the pixel colour intensities. The relation between features and label (<math>\truelabel \in \{ \mbox{cat}, \mbox{no cat} \}</math>) is highly non-linear. Any ML method that uses a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> consisting only of linear maps will most likely fail to learn a good predictor (classifier). We say that a ML method is underfitting if it uses a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> that does not contain any hypotheses maps that can accurately predict the label of any <span class="mw-gls" data-name ="datapoint">data point</span>s. | * It has to be sufficiently large such that it contains at least one accurate predictor map <math>\hat{h} \in \hypospace</math>. A hypothesis space <math>\hypospace</math> that is too small might fail to include a predictor map required to reproduce the (potentially highly non-linear) relation between features and label. Consider the task of grouping or classifying images into “cat” images and “no cat image”. The classification of each image is based solely on the feature vector obtained from the pixel colour intensities. The relation between features and label (<math>\truelabel \in \{ \mbox{cat}, \mbox{no cat} \}</math>) is highly non-linear. Any ML method that uses a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> consisting only of linear maps will most likely fail to learn a good predictor (classifier). 
We say that a ML method is underfitting if it uses a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> that does not contain any hypothesis map that can accurately predict the labels of <span class="mw-gls" data-name ="datapoint">data point</span>s. | ||

* It has to be sufficiently small such that its processing fits the available computational resources (memory, bandwidth, processing time). We must be able to efficiently search over the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> to find good predictors (see Section [[ | * It has to be sufficiently small such that its processing fits the available computational resources (memory, bandwidth, processing time). We must be able to efficiently search over the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> to find good predictors (see Section [[#sec_lossfct | The Loss ]] and Chapter [[guide:2c0f621d22 | Empirical Risk Minimization ]]). This requirement implies also that the maps <math>h(\featurevec)</math> contained in <math>\hypospace</math> can be evaluated (computed) efficiently <ref name="Austin2018">P. Austin, P. Kaski, and K. Kubjas. Tensor network complexity of multilinear maps. ''arXiv'' 2018</ref>. Another important reason for using a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math> that is not too large is to avoid overfitting (see Chapter [[guide:50be9327aa | Regularization ]]). If the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math> is too large, then we can easily find a hypothesis which (almost) perfectly predicts the labels of <span class="mw-gls" data-name ="datapoint">data point</span>s in a <span class="mw-gls mw-gls-first" data-name ="trainset">training set</span> which is used to learn a hypothesis. However, such a hypothesis might deliver poor predictions for labels of <span class="mw-gls" data-name ="datapoint">data point</span>s outside the <span class="mw-gls" data-name ="trainset">training set</span>. We say that the hypothesis does not generalize well. | ||
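The two requirements above can be illustrated with a small numerical experiment. The sketch below is not from the book: it assumes hypothetical data generated from a noisy sine curve and uses polynomials of increasing degree as stand-ins for hypothesis spaces of increasing size. The names <code>x_train</code>, <code>x_val</code> and <code>mse</code> are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: noisy samples of the non-linear relation y = sin(2*pi*x).
x_train = np.linspace(0.0, 1.0, 8)
y_train = np.sin(2 * np.pi * x_train) + 0.1 * rng.standard_normal(8)
x_val = np.linspace(0.05, 0.95, 50)
y_val = np.sin(2 * np.pi * x_val) + 0.1 * rng.standard_normal(50)

def mse(coeffs, x, y):
    """Average squared error of the polynomial with coefficients `coeffs` on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

errors = {}
for degree in (1, 3, 7):
    # Least-squares fit within the space of polynomials of the given degree.
    coeffs = np.polyfit(x_train, y_train, degree)
    errors[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_val, y_val))

for degree, (train_err, val_err) in errors.items():
    print(f"degree {degree}: training MSE {train_err:.4f}, validation MSE {val_err:.4f}")
```

The space of degree-1 polynomials (linear maps plus offset) is too small for this relation, so its training error stays large. The space of degree-7 polynomials is large enough to fit all eight training points (almost) perfectly. Whether the degree-7 fit also predicts well outside the training set depends on the noise realisation, which is why the validation error is reported separately.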

===Parametrized Hypothesis spaces=== | ===Parametrized Hypothesis spaces=== | ||

| Line 471: | Line 482: | ||

A wide range of current scientific computing environments allow for efficient numerical linear algebra. | A wide range of current scientific computing environments allow for efficient numerical linear algebra. | ||

This hardware and software allows us to efficiently process data that is provided in the form of numeric arrays such as vectors, matrices or tensors <ref name="JMLR:v12:pedregosa11a"/>. To take advantage of such computational infrastructure, many ML methods use the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> | This hardware and software allows us to efficiently process data that is provided in the form of numeric arrays such as vectors, matrices or tensors <ref name="JMLR:v12:pedregosa11a"/>. To take advantage of such computational infrastructure, many ML methods use the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> | ||

<span id="equ_def_hypo_linear_pred"/> | |||

<math display = "block">\begin{align} | <math display = "block">\begin{align} | ||

\label{equ_def_hypo_linear_pred} | \label{equ_def_hypo_linear_pred} | ||

| Line 532: | Line 545: | ||

\end{equation}</math> The construction \eqref{equ_def_enlarged_hypospace} can be used for arbitrary combinations of a feature map <math>\featuremap(\cdot)</math> and a “base” <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math>. The only requirement is that the output of the feature map can be used as input for a hypothesis <math>h \in \hypospace</math>. More formally, the range of the feature map must belong to the domain of the maps in <math>\hypospace</math>. Examples of ML methods that use a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> of the form \eqref{equ_def_enlarged_hypospace} include polynomial regression (see Section [[guide:013ef4b5cd#sec_polynomial_regression | Polynomial Regression ]]), | \end{equation}</math> The construction \eqref{equ_def_enlarged_hypospace} can be used for arbitrary combinations of a feature map <math>\featuremap(\cdot)</math> and a “base” <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math>. The only requirement is that the output of the feature map can be used as input for a hypothesis <math>h \in \hypospace</math>. More formally, the range of the feature map must belong to the domain of the maps in <math>\hypospace</math>. Examples of ML methods that use a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> of the form \eqref{equ_def_enlarged_hypospace} include polynomial regression (see Section [[guide:013ef4b5cd#sec_polynomial_regression | Polynomial Regression ]]), | ||

Gaussian basis regression (see Section [[guide:013ef4b5cd#sec_linbasreg | Gaussian Basis Regression ]]) and the important family of kernel methods (see Section [[guide:013ef4b5cd#sec_kernel_methods | Kernel Methods ]]). | Gaussian basis regression (see Section [[guide:013ef4b5cd#sec_linbasreg | Gaussian Basis Regression ]]) and the important family of kernel methods (see Section [[guide:013ef4b5cd#sec_kernel_methods | Kernel Methods ]]). | ||

The feature map in \eqref{equ_def_enlarged_hypospace} might also be obtained from <span class="mw-gls mw-gls-first" data-name ="clustering">clustering</span> or feature learning methods (see Section [[guide:1a7f020d42#sec_clust_preproc | Clustering as Preprocessing ]] and Section | The feature map in \eqref{equ_def_enlarged_hypospace} might also be obtained from <span class="mw-gls mw-gls-first" data-name ="clustering">clustering</span> or feature learning methods (see Section [[guide:1a7f020d42#sec_clust_preproc | Clustering as Preprocessing ]] and Section [[guide:4f25e79970#sec_comb_PcA_Linreg|Combining PCA with linear regression]]). | ||
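The composition of a feature map with a base hypothesis can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the book's implementation: the feature map is a hypothetical polynomial map of a single numeric feature, the base hypothesis is a linear map, and the function names <code>feature_map</code>, <code>linear_hypothesis</code> and <code>compose</code> are made up for this example.

```python
import numpy as np

def feature_map(x, degree=2):
    """Hypothetical feature map: scalar x -> (1, x, ..., x**degree)."""
    x = np.atleast_1d(np.asarray(x, dtype=float))
    return np.vander(x, N=degree + 1, increasing=True)

def linear_hypothesis(w):
    """Base hypothesis h(z) = w^T z, which is linear in its input z."""
    w = np.asarray(w, dtype=float)
    return lambda z: z @ w

def compose(h, phi):
    """Hypothesis of the enlarged space: x -> h(phi(x))."""
    return lambda x: h(phi(x))

h = linear_hypothesis([1.0, 0.0, 2.0])     # weights for the features (1, x, x**2)
h_enlarged = compose(h, feature_map)
predictions = h_enlarged([0.0, 1.0, 2.0])  # evaluates 1 + 2 * x**2
print(predictions)
```

The composed map is non-linear in the original feature although the base hypothesis is linear. The only requirement checked implicitly here is that the length of the weight vector matches the number of features produced by the feature map.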

For the special case of the linear <span class="mw-gls" data-name ="hypospace">hypothesis space</span> \eqref{equ_def_hypo_linear_pred}, the resulting enlarged | For the special case of the linear <span class="mw-gls" data-name ="hypospace">hypothesis space</span> \eqref{equ_def_hypo_linear_pred}, the resulting enlarged | ||

| Line 544: | Line 557: | ||

''' Non-Numeric Features.''' | ''' Non-Numeric Features.''' | ||

The <span class="mw-gls" data-name ="hypospace">hypothesis space</span> \eqref{equ_def_hypo_linear_pred} can only be used for <span class="mw-gls" data-name ="datapoint">data point</span>s whose features are numeric vectors <math>\featurevec = (\feature_{1},\ldots,\feature_{\featuredim})^{T} \in \mathbb{R}^{\featuredim}</math>. In some application domains, such as natural language processing, there is no obvious natural choice for numeric features. However, since ML methods based on the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> \eqref{equ_def_hypo_linear_pred} are well developed (using numerical linear algebra), | The <span class="mw-gls" data-name ="hypospace">hypothesis space</span> \eqref{equ_def_hypo_linear_pred} can only be used for <span class="mw-gls" data-name ="datapoint">data point</span>s whose features are numeric vectors <math>\featurevec = (\feature_{1},\ldots,\feature_{\featuredim})^{T} \in \mathbb{R}^{\featuredim}</math>. In some application domains, such as natural language processing, there is no obvious natural choice for numeric features. However, since ML methods based on the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> \eqref{equ_def_hypo_linear_pred} are well developed (using numerical linear algebra), | ||

it might be useful to construct numerical features even for non-numeric data (such as text). For text data, there has been significant progress recently on methods that map a human-generated text into sequences of vectors (see <ref name="Goodfellow-et-al-2016"/>{{rp|at=Chap. 12}} for more details). Moreover, Section [[guide:4f25e79970#sec_discrete_embeddings | | it might be useful to construct numerical features even for non-numeric data (such as text). For text data, there has been significant progress recently on methods that map a human-generated text into sequences of vectors (see <ref name="Goodfellow-et-al-2016">I. Goodfellow, Y. Bengio, and A. Courville. ''Deep Learning'' MIT Press, 2016</ref>{{rp|at=Chap. 12}} for more details). Moreover, Section [[guide:4f25e79970#sec_discrete_embeddings | Feature Learning for Non-Numeric Data]] will discuss an approach to generate numeric features for <span class="mw-gls" data-name ="datapoint">data point</span>s that have an intrinsic notion of similarity. | ||

===The Size of a Hypothesis Space=== | ===The Size of a Hypothesis Space=== | ||

| Line 557: | Line 569: | ||

We define the <span class="mw-gls" data-name ="effdim">effective dimension</span> <math>\effdim{\hypospace}</math> of <math>\hypospace</math> as the maximum number <math>\sizehypospace \in \mathbb{N}</math> such that for any set <math>\dataset = \big\{ \big(\featurevec^{(1)},\truelabel^{(1)}\big), \ldots, \big(\featurevec^{(\sizehypospace)},\truelabel^{(\sizehypospace)}\big) \}</math> of <math>\sizehypospace</math> <span class="mw-gls" data-name ="datapoint">data point</span>s with different features, we can always find a hypothesis <math>h \in \hypospace</math> that perfectly fits the labels, <math>\truelabel^{(\sampleidx)} = h\big( \featurevec^{(\sampleidx)} \big)</math> for <math>\sampleidx=1,\ldots,\sizehypospace</math>. | We define the <span class="mw-gls" data-name ="effdim">effective dimension</span> <math>\effdim{\hypospace}</math> of <math>\hypospace</math> as the maximum number <math>\sizehypospace \in \mathbb{N}</math> such that for any set <math>\dataset = \big\{ \big(\featurevec^{(1)},\truelabel^{(1)}\big), \ldots, \big(\featurevec^{(\sizehypospace)},\truelabel^{(\sizehypospace)}\big) \}</math> of <math>\sizehypospace</math> <span class="mw-gls" data-name ="datapoint">data point</span>s with different features, we can always find a hypothesis <math>h \in \hypospace</math> that perfectly fits the labels, <math>\truelabel^{(\sampleidx)} = h\big( \featurevec^{(\sampleidx)} \big)</math> for <math>\sampleidx=1,\ldots,\sizehypospace</math>. | ||
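To make this definition concrete, consider (as an assumption for this sketch) the hypothesis space of polynomials of degree at most <math>\sizehypospace-1</math> in a single numeric feature. For <math>\sizehypospace</math> data points with pairwise different feature values, the Vandermonde system below has a unique solution, so a perfectly fitting polynomial always exists. With one more data point this is in general no longer possible. The function name <code>fit_exactly</code> and the data values are hypothetical.

```python
import numpy as np

def fit_exactly(x, y):
    """Coefficients (lowest order first) of the degree-(len(x)-1) polynomial through (x, y)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    V = np.vander(x, N=x.shape[0], increasing=True)   # rows are (1, x, x**2, ...)
    return np.linalg.solve(V, y)                      # unique solution for distinct x

# Four data points with different feature values and arbitrary labels.
x = np.array([-1.0, 0.0, 1.0, 2.0])
y = np.array([3.0, -2.0, 5.0, 0.5])
coeffs = fit_exactly(x, y)
fitted = np.vander(x, N=x.shape[0], increasing=True) @ coeffs
print(np.allclose(fitted, y))
```

Changing the labels <code>y</code> to any other values still yields a perfect fit, which is exactly the property the definition asks for.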

The <span class="mw-gls" data-name ="effdim">effective dimension</span> of a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> is closely related to the <span class="mw-gls mw-gls-first" data-name ="vcdim">Vapnik–Chervonenkis (VC) dimension</span> <ref name="VapnikBook">V. N. Vapnik. ''The Nature of Statistical Learning Theory''. Springer, 1999</ref>.

The <span class="mw-gls" data-name ="vcdim">VC dimension</span> is perhaps the most widely used concept for measuring the size of infinite <span class="mw-gls" data-name ="hypospace">hypothesis space</span>s <ref name="VapnikBook"/><ref name="ShalevMLBook">S. Shalev-Shwartz and S. Ben-David. ''Understanding Machine Learning -- from Theory to Algorithms''. Cambridge University Press, 2014</ref><ref name="BishopBook"/><ref name="hastie01statisticallearning">T. Hastie, R. Tibshirani, and J. Friedman. ''The Elements of Statistical Learning''. Springer Series in Statistics. Springer, New York, NY, USA, 2001</ref>.

However, the precise definition of the <span class="mw-gls" data-name ="vcdim">VC dimension</span> is beyond the scope of this book. Moreover, the <span class="mw-gls" data-name ="effdim">effective dimension</span> captures most of the relevant properties of the <span class="mw-gls" data-name ="vcdim">VC dimension</span> for our purposes. For a precise definition of the <span class="mw-gls" data-name ="vcdim">VC dimension</span> and a discussion of its applications in ML we refer to <ref name="ShalevMLBook"/>. Let us illustrate the concept of <span class="mw-gls" data-name ="effdim">effective dimension</span> as a measure for the size of a <span class="mw-gls" data-name ="hypospace">hypothesis space</span> with two examples: <span class="mw-gls" data-name ="linreg">linear regression</span> and polynomial regression.
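The following Python sketch gives a small numerical illustration of the polynomial case. It assumes scalar features and the hypothesis space of polynomials with maximum degree four (five coefficients); the helper names <code>fit_polynomial</code> and <code>predict</code> as well as the concrete feature values are our own choices for illustration. The sketch checks that five data points with different features and arbitrary labels can be fit perfectly, which is what the definition of the effective dimension asks for.

```python
import numpy as np

def fit_polynomial(x, y):
    """Coefficients of the polynomial of degree len(x)-1 that passes through all (x, y)."""
    # Vandermonde matrix with columns x^0, x^1, ..., x^(m-1); it is invertible
    # whenever all feature values in x are different.
    V = np.vander(x, N=len(x), increasing=True)
    return np.linalg.solve(V, y)

def predict(coeffs, x):
    """Evaluate the polynomial hypothesis with the given coefficients at the features x."""
    V = np.vander(x, N=len(coeffs), increasing=True)
    return V @ coeffs

rng = np.random.default_rng(0)
x = np.array([-1.0, -0.5, 0.0, 0.5, 1.0])  # five different (hypothetical) feature values
y = rng.normal(size=x.shape)               # arbitrary labels

coeffs = fit_polynomial(x, y)
max_fit_error = np.max(np.abs(predict(coeffs, x) - y))
print(max_fit_error)  # zero up to floating-point rounding
```

With a sixth data point, the same five coefficients can in general no longer fit all labels, since a polynomial of degree at most four is already determined by its values at five different features.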


</div> | </div> | ||

==<span id="sec_lossfct"/>The Loss==

Every ML method uses a (more or less explicit) <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math> which consists of all computationally feasible predictor maps <math>h</math>. Which predictor map <math>h</math> out of all the maps in the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math> is the best for the ML problem at hand? To answer this question,


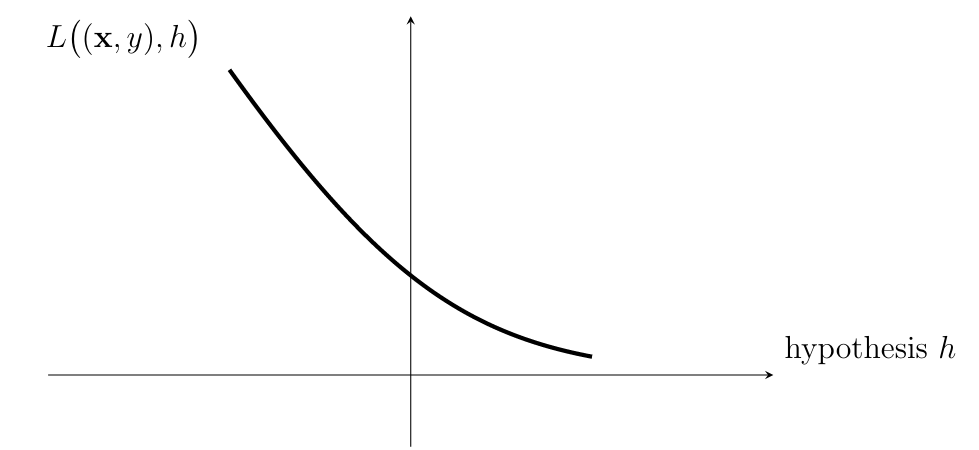

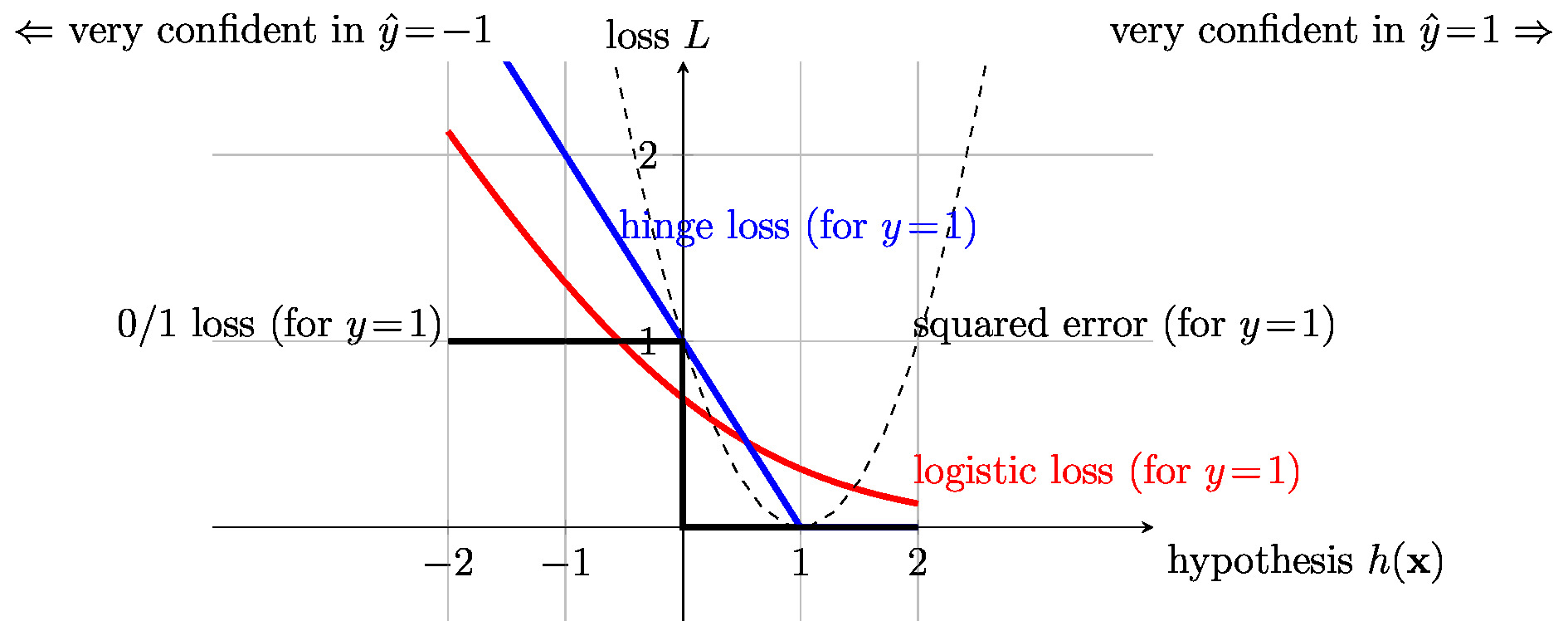

The loss value <math>\loss{(\featurevec,\truelabel)}{h}</math> quantifies the discrepancy between the true label <math>\truelabel</math> and the predicted label <math>h(\featurevec)</math>. A small (close to zero) value <math>\loss{(\featurevec,\truelabel)}{h}</math> indicates a low discrepancy between predicted <span class="mw-gls" data-name ="label">label</span> and true label of a <span class="mw-gls" data-name ="datapoint">data point</span>. Figure [[#fig_loss_function|fig_loss_function]] depicts a loss function for a given <span class="mw-gls" data-name ="datapoint">data point</span>, with features <math>\featurevec</math> and label <math>\truelabel</math>, as a function of the hypothesis <math>h \in \hypospace</math>.
The basic principle of ML methods can then be formulated as: Learn (find) a hypothesis out of a given <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math> that incurs a minimum loss <math>\loss{(\featurevec,\truelabel)}{h}</math> for any <span class="mw-gls" data-name ="datapoint">data point</span> (see Chapter [[guide:2c0f621d22 | Empirical Risk Minimization ]]).

Much like the choice of the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> <math>\hypospace</math> used in an ML method, the loss function is also a design choice. We will discuss some widely used examples of <span class="mw-gls" data-name ="lossfunc">loss function</span>s in Section [[#sec_loss_numeric_label |Loss Functions for Numeric Labels]] and Section [[#sec_loss_categorical |Loss Functions for Categorical Labels]]. The choice of the <span class="mw-gls" data-name ="lossfunc">loss function</span> should take into account the computational complexity of searching the <span class="mw-gls" data-name ="hypospace">hypothesis space</span> for a hypothesis with minimum loss. Consider an ML method that uses a parametrized <span class="mw-gls" data-name ="hypospace">hypothesis space</span> and a <span class="mw-gls" data-name ="lossfunc">loss function</span> that is a convex and differentiable (smooth) function of the parameters of a hypothesis. In this case, searching for a hypothesis with small <span class="mw-gls mw-gls-first" data-name ="loss">loss</span> can be done efficiently using the <span class="mw-gls mw-gls-first" data-name ="gdmethods">gradient-based methods</span> discussed in Chapter [[guide:Cc42ad1ea4 | Gradient-Based Learning ]]. The minimization of a <span class="mw-gls" data-name ="lossfunc">loss function</span> that is either non-convex or non-differentiable is typically computationally much more difficult.
Section [[guide:2c0f621d22#sec_comp_stat_ERM | Computational and Statistical Aspects of ERM ]] discusses the computational complexities of different types of <span class="mw-gls" data-name ="lossfunc">loss function</span>s in more detail.
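To make the computational argument concrete, the following Python sketch runs plain gradient descent on a convex and differentiable loss. It is only a minimal illustration under our own assumptions: a linear hypothesis <math>h(\featurevec) = \weights^{T} \featurevec</math>, the average squared error loss over a synthetic dataset, and a hand-picked step size and iteration count. The function names are hypothetical and do not refer to any particular library.

```python
import numpy as np

def average_squared_loss(w, X, y):
    """Average squared error loss of the linear hypothesis h(x) = w^T x."""
    return np.mean((y - X @ w) ** 2)

def gradient(w, X, y):
    """Gradient of the average squared error loss with respect to w."""
    return -2.0 * X.T @ (y - X @ w) / len(y)

# Synthetic, noise-free data (an assumption made only for this illustration).
rng = np.random.default_rng(1)
X = rng.normal(size=(50, 2))       # 50 data points with two features each
w_true = np.array([1.0, -2.0])     # parameter vector that generated the labels
y = X @ w_true

w = np.zeros(2)                    # initial guess for the parameter vector
step_size = 0.1                    # hand-picked; too large a value would diverge
for _ in range(500):
    w = w - step_size * gradient(w, X, y)

final_loss = average_squared_loss(w, X, y)
print(w, final_loss)
```

Because the loss is convex and smooth in <math>\weights</math>, each step moves the parameter vector towards a global minimizer as long as the step size is small enough. For a non-convex or non-differentiable loss, no such simple guarantee is available.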

Besides computational aspects, the choice of the <span class="mw-gls" data-name ="lossfunc">loss function</span> should also take into account statistical aspects. For example, some <span class="mw-gls" data-name ="lossfunc">loss function</span>s result in ML methods that are more robust against <span class="mw-gls mw-gls-first" data-name ="outlier">outlier</span>s (see Section [[guide:013ef4b5cd#sec_lad | Least Absolute Deviation Regression ]] and Section [[guide:013ef4b5cd#sec_SVM | Support Vector Machines ]]). The choice of <span class="mw-gls" data-name ="lossfunc">loss function</span> might also be guided by probabilistic models for the data generated in an ML application. Section [[guide:013ef4b5cd#sec_max_iikelihood | Maximum Likelihood ]] details how the <span class="mw-gls" data-name ="ml">maximum likelihood</span> principle of statistical inference provides an explicit construction of <span class="mw-gls" data-name ="lossfunc">loss function</span>s in terms of an (assumed) <span class="mw-gls" data-name ="probdist">probability distribution</span> for <span class="mw-gls" data-name ="datapoint">data point</span>s.


<div class="d-flex justify-content-center"> | <div class="d-flex justify-content-center"> | ||

<span id="fig_loss_function_and_metric "></span> | <span id="fig_loss_function_and_metric "></span> | ||

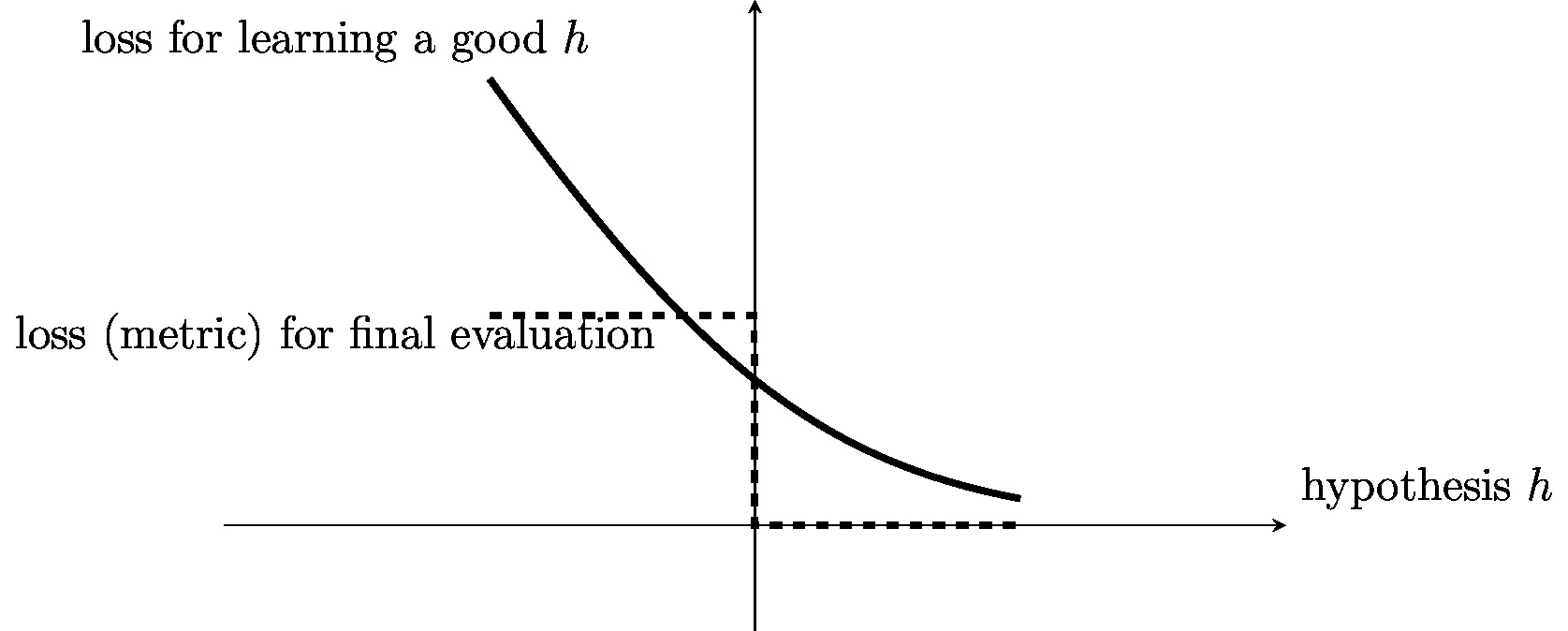

[[File:fig_loss_function_and_metric.jpg | 500px | thumb | Two different loss functions for a given <span class="mw-gls" data-name ="datapoint">data point</span> and varying hypothesis <math>h</math>. | [[File:fig_loss_function_and_metric.jpg | 500px | thumb | Two different loss functions for a given <span class="mw-gls" data-name ="datapoint">data point</span> and varying hypothesis <math>h</math>. One of these <span class="mw-gls" data-name ="loss">loss</span> functions (solid curve) is used to learn a good hypothesis by minimizing the loss. | ||

The other <span class="mw-gls" data-name ="loss">loss</span> function (dashed curve) is used to evaluate the performance of the learnt hypothesis. The <span class="mw-gls" data-name ="lossfunc">loss function</span> used for this final performance evaluation is sometimes referred to as a <span class="mw-gls" data-name ="metric">metric</span>. ]] | |||

</div> | </div> | ||

=== <span id="sec_loss_numeric_label "/>Loss Functions for Numeric Labels===

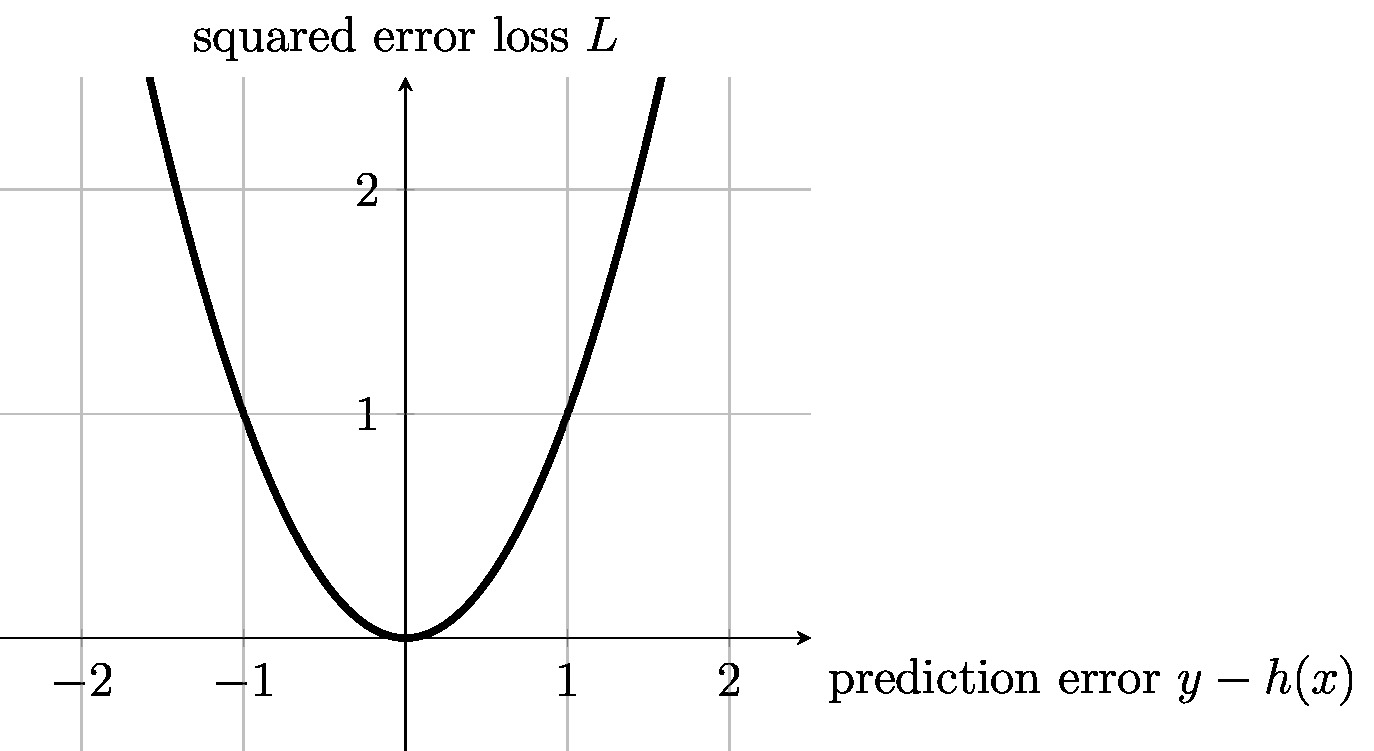

For ML problems involving <span class="mw-gls" data-name ="datapoint">data point</span>s with a numeric label <math>\truelabel \in \mathbb{R}</math>, i.e., for regression problems (see Section [[#sec_labels | Labels]]), a widely used first choice for the loss function is the squared error <span class="mw-gls" data-name ="loss">loss</span>

<span id = "equ_squared_loss"/>

<math display="block">\begin{equation}
\label{equ_squared_loss}
\loss{(\featurevec,\truelabel)}{h} \defeq \big(\truelabel - \underbrace{h(\featurevec)}_{=\predictedlabel} \big)^{2}.
\end{equation}</math>


</div> | </div> | ||

The squared error loss \eqref{equ_squared_loss} has appealing computational and statistical properties. For linear predictor maps <math>h(\featurevec) = \weights^{T} \featurevec</math>, the squared error loss is a convex and differentiable function of the parameter vector <math>\weights</math>. This, in turn, allows us to search for the optimal linear predictor using efficient iterative optimization methods (see Chapter [[guide:Cc42ad1ea4 | Gradient-Based Learning ]]). The squared error loss also has a useful interpretation in terms of a probabilistic model for the features and labels. Minimizing the squared error loss is equivalent to maximum likelihood estimation within a linear Gaussian model <ref name="hastie01statisticallearning"/> {{rp | at=Sec. 2.6.3}}.

Another loss function used in regression problems is the absolute error loss <math>|\hat{y} - y|</math>. Using this loss function to guide the learning of a predictor results in methods that are robust against a few <span class="mw-gls" data-name ="outlier">outlier</span>s in the <span class="mw-gls" data-name ="trainset">training set</span> (see Section [[guide:013ef4b5cd#sec_lad | Least Absolute Deviation Regression ]]). However, this improved robustness comes at the expense of increased computational complexity: minimizing the (non-differentiable) absolute error loss is harder than minimizing the (differentiable) squared error loss \eqref{equ_squared_loss}.
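The robustness of the absolute error loss can be seen in a small numerical experiment. The Python sketch below is only an illustration under simplifying assumptions: it uses constant predictors <math>h(\featurevec) = c</math>, a made-up set of five labels of which one is an outlier, and a brute-force grid search instead of a proper optimization method.

```python
import numpy as np

# Hypothetical labels; the last one is an outlier.
labels = np.array([1.0, 2.0, 3.0, 4.0, 100.0])

# Candidate constant predictions c on a fine grid (step 0.01).
candidates = np.linspace(0.0, 100.0, 10001)

# Average loss of each candidate over all data points.
avg_squared = np.array([np.mean((labels - c) ** 2) for c in candidates])
avg_absolute = np.array([np.mean(np.abs(labels - c)) for c in candidates])

best_squared = candidates[np.argmin(avg_squared)]    # close to the mean 22.0
best_absolute = candidates[np.argmin(avg_absolute)]  # close to the median 3.0

print(best_squared, best_absolute)
```

The minimizer of the average squared error loss is the sample mean, which the single outlier drags far away from the bulk of the labels. The minimizer of the average absolute error loss is the sample median, which stays among the typical labels.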

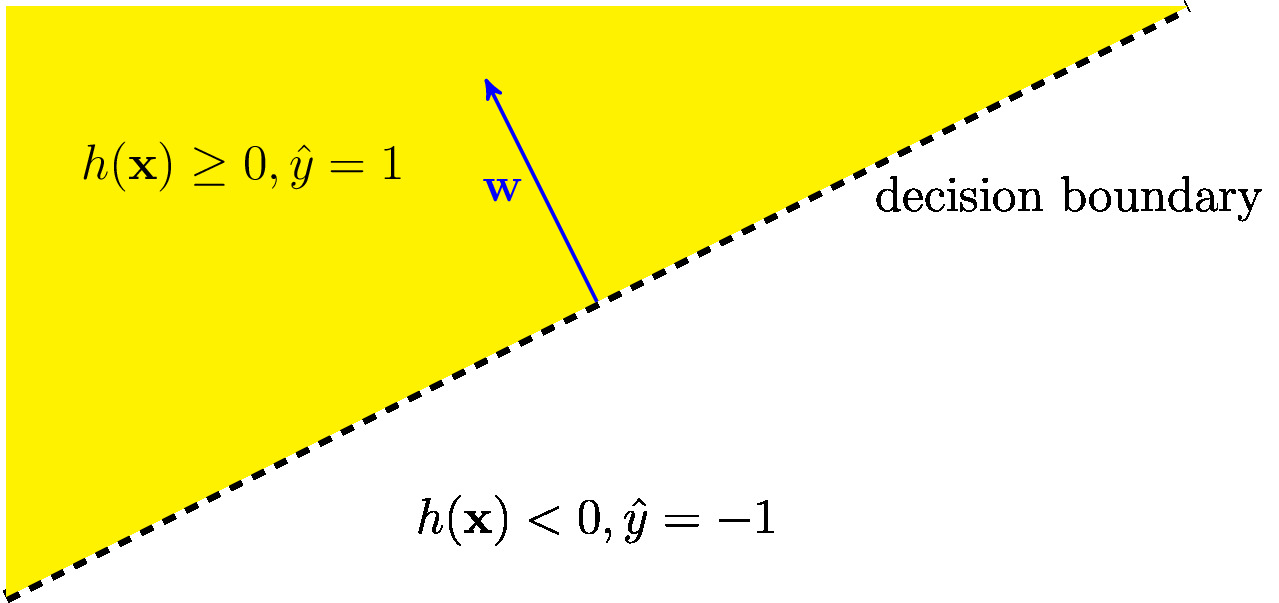

=== <span id="sec_loss_categorical"/>Loss Functions for Categorical Labels===


To avoid the non-convexity of the <math>0/1</math> loss \eqref{equ_def_0_1} we might approximate it by a convex <span class="mw-gls" data-name ="lossfunc">loss function</span>. One popular convex approximation of the <math>0/1</math> loss is the <span class="mw-gls mw-gls-first" data-name ="hingeloss">hinge loss</span>

<span id = "equ_hinge_loss" />

<math display="block">
\begin{equation}
\label{equ_hinge_loss}
\loss{(\featurevec,\truelabel)}{h} \defeq \max \{ 0 , 1 - \truelabel h(\featurevec) \}.
\end{equation}
</math>


However, in contrast to the <span class="mw-gls" data-name ="hingeloss">hinge loss</span>, the <span class="mw-gls" data-name ="logloss">logistic loss</span> \eqref{equ_log_loss} is also a differentiable function of the parameter vector <math>\weights</math>.
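The difference in smoothness can be checked numerically. The Python sketch below compares one-sided difference quotients of both losses at the point <math>\truelabel h(\featurevec) = 1</math>, where the hinge loss has its kink. It rests on two assumptions of ours: the logistic loss is taken in the common form <math>\log\big(1 + \exp(-\truelabel h(\featurevec))\big)</math> with labels <math>\truelabel \in \{-1,1\}</math>, and the losses are differentiated with respect to the prediction <math>h(\featurevec)</math> rather than the parameter vector <math>\weights</math>. The function names are hypothetical.

```python
import numpy as np

def hinge_loss(y, h_x):
    """Hinge loss max{0, 1 - y*h(x)} for a label y in {-1, 1}."""
    return np.maximum(0.0, 1.0 - y * h_x)

def logistic_loss(y, h_x):
    """Logistic loss log(1 + exp(-y*h(x))), evaluated in a numerically stable way."""
    return np.logaddexp(0.0, -y * h_x)

def one_sided_slopes(loss, y, h_x, eps=1e-6):
    """Left and right difference quotients of the loss with respect to h(x)."""
    left = (loss(y, h_x) - loss(y, h_x - eps)) / eps
    right = (loss(y, h_x + eps) - loss(y, h_x)) / eps
    return left, right

# Evaluate both losses at y = 1 and h(x) = 1, the kink of the hinge loss.
hinge_left, hinge_right = one_sided_slopes(hinge_loss, 1.0, 1.0)
log_left, log_right = one_sided_slopes(logistic_loss, 1.0, 1.0)

print(hinge_left, hinge_right)  # the two slopes differ: no derivative at the kink
print(log_left, log_right)      # the two slopes agree up to discretization error
```

For a linear hypothesis <math>h(\featurevec) = \weights^{T} \featurevec</math>, the kink of the hinge loss carries over to the parameter vector <math>\weights</math>, while the logistic loss stays differentiable.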