guide:5fcedc2288: Difference between revisions

No edit summary |

mNo edit summary |

||

| (2 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

<div class="d-none"><math> | |||

\newcommand{\indep}[0]{\ensuremath{\perp\!\!\!\perp}} | |||

\newcommand{\dpartial}[2]{\frac{\partial #1}{\partial #2}} | |||

\newcommand{\abs}[1]{\left| #1 \right|} | |||

\newcommand\autoop{\left(} | |||

\newcommand\autocp{\right)} | |||

\newcommand\autoob{\left[} | |||

\newcommand\autocb{\right]} | |||

\newcommand{\vecbr}[1]{\langle #1 \rangle} | |||

\newcommand{\ui}{\hat{\imath}} | |||

\newcommand{\uj}{\hat{\jmath}} | |||

\newcommand{\uk}{\hat{k}} | |||

\newcommand{\V}{\vec{V}} | |||

\newcommand{\half}[1]{\frac{#1}{2}} | |||

\newcommand{\recip}[1]{\frac{1}{#1}} | |||

\newcommand{\invsqrt}[1]{\recip{\sqrt{#1}}} | |||

\newcommand{\halfpi}{\half{\pi}} | |||

\newcommand{\windbar}[2]{\Big|_{#1}^{#2}} | |||

\newcommand{\rightinfwindbar}[0]{\Big|_{0}^\infty} | |||

\newcommand{\leftinfwindbar}[0]{\Big|_{-\infty}^0} | |||

\newcommand{\state}[1]{\large\protect\textcircled{\textbf{\small#1}}} | |||

\newcommand{\shrule}{\\ \centerline{\rule{13cm}{0.4pt}}} | |||

\newcommand{\tbra}[1]{$\bra{#1}$} | |||

\newcommand{\tket}[1]{$\ket{#1}$} | |||

\newcommand{\tbraket}[2]{$\braket{1}{2}$} | |||

\newcommand{\infint}[0]{\int_{-\infty}^{\infty}} | |||

\newcommand{\rightinfint}[0]{\int_0^\infty} | |||

\newcommand{\leftinfint}[0]{\int_{-\infty}^0} | |||

\newcommand{\wavefuncint}[1]{\infint|#1|^2} | |||

\newcommand{\ham}[0]{\hat{H}} | |||

\newcommand{\mathds}{\mathbb}</math></div> | |||

===<span id="sec:ipw"></span>Inverse Probability Weighting=== | |||

<div class="d-flex justify-content-center"> | |||

[[File:tikzd2fc6.png | 400px | thumb | ]] | |||

</div> | |||

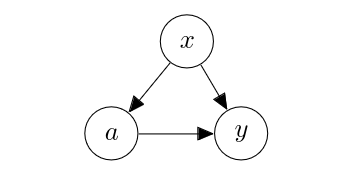

Let us come back to the original graph <math>G</math> that consists of three variables; <math>a</math>, <math>y</math> and <math>x</math>, with the following joint probability: | |||

<math display="block"> | |||

\begin{align} | |||

p^*(a, y, x) = p^*(x) p^*(a|x) p^*(y|a, x). | |||

\end{align} | |||

</math> | |||

We also assume that we have a set <math>D</math> of triplets <math>(a_n, y_n, x_n)</math> drawn from this underlying graph <math>G</math>. In other words, we assume that we observe the confounder in this case. | |||

If we have a large such set, i.e. <math>N=|D| \gg 1</math>, we can use regression, as in [[guide:Ffdca025ef#sec:regression |Regression: Causal Inference can be Trivial]], to approximate <math>p^*(y | a, x)</math> in order to compute the causal effect as | |||

<math display="block"> | |||

\begin{align} | |||

\label{eq:regression} | |||

\mathbb{E}_G\left[ y | \mathrm{do}(a=\hat{a})\right] | |||

&\approx | |||

\sum_{x} | |||

p^*(x) | |||

\sum_{y} | |||

y | |||

\hat{p}(y | \hat{a}, x) | |||

\\ | |||

&\approx | |||

\frac{1} | |||

{N} | |||

\sum_{n=1}^N | |||

\mathbb{E}_{\hat{p}(y | \hat{a}, x_n)}[y]. | |||

\end{align} | |||

</math> | |||

Although this regression-based approach is straightforward, this approach has a disadvantage of having to fit a regressor on the concatenation of the action <math>a</math> and confounder <math>x</math>. The issue is exactly that of RCT with partially observed confounders, that is, we must have enough data points for each action-covariate combination in order for regression to have a low variance. We can use regularization to reduce the variance, which may unfortunately introduce a bias. | |||

We can reduce the variance by using regression to estimate a simpler quantity. In particular, we consider approximating <math>p^*(a|x)</math>. Because this is a map from <math>\mathcal{X}</math>, we just need enough data points for each covariate configuration rather than the action-covariate combination. Approximating <math>p^*(a|x)</math> allows us to estimate the causal effect using data points drawn from the original graph <math>G</math> rather than the modified graph <math>\overline{G}</math> from [[guide:Eb6d7b34e1#sec:randomized-controlled-trials |Randomized Controlled Trials]], because | |||

<math display="block"> | |||

\begin{align} | |||

\mathbb{E}_G\left[ y | \mathrm{do}(a=\hat{a})\right] | |||

&= | |||

\sum_{x} | |||

p^*(x) | |||

\sum_{a} | |||

\mathds{1}(a = \hat{a}) | |||

\sum_{y} | |||

p^*(y | \hat{a}, x) | |||

y | |||

\\ | |||

&= | |||

\sum_{x} | |||

\sum_{a} | |||

\sum_{y} | |||

p^*(x) | |||

p^*(a|x) | |||

\mathds{1}(a = \hat{a}) | |||

p^*(y | \hat{a}, x) | |||

\frac{1}{p^*(a|x)} | |||

y | |||

\\ | |||

&= | |||

\frac{1}{\sum_{n'=1}^N | |||

\mathds{1}(a_{n'}= \hat{a})} | |||

\sum_{n=1}^N | |||

\mathds{1}(a_n = \hat{a}) | |||

\frac{y_n} | |||

{p^*(\hat{a} | x_n)}. | |||

\end{align} | |||

</math> | |||

Instead of the true <math>p^*(\hat{a}|x_n)</math>, we plug in the regression-based approximation <math>\hat{p}(\hat{a} | x_n)</math> and arrive at | |||

<math display="block"> | |||

\begin{align} | |||

\label{eq:ipw} | |||

\mathbb{E}_G\left[ y | \mathrm{do}(a=\hat{a})\right] | |||

& | |||

\approx | |||

\frac{\sum_{n=1}^N | |||

\mathds{1}(a_n = \hat{a}) | |||

\frac{y_n} | |||

{\hat{p}(\hat{a} | x_n)}}{ | |||

\sum_{n'=1}^N | |||

\mathds{1}(a_{n'}= \hat{a})}. | |||

\end{align} | |||

</math> | |||

In words, we look for all data points within the previously-collected set <math>D</math> that are associated with the action <math>\hat{a}</math> of interest. The simple average of the associated outcomes would be a biased estimate of the casual effect, since it combines the effects of <math>a</math> on <math>y</math> via two paths; (causal) <math>a \to y</math> and (spurious) <math>a \leftarrow x \to y</math>. We correct this bias by weighting each data point, or the associated outcome, by the inverse probability of the action given the confounder, <math>\frac{1}{\hat{p}(\hat{a} | x_n)}</math>. This approach is thus called '' inverse probability weighting'' (IPW), and <math>p^*(a|x)</math> is often referred to as a propensity score. | |||

'''It is fine to have missing outcomes.''' | |||

One important advantage of the IPW-based approach is that we can approximate <math>p^*(a|x)</math> using all <math>(a,x)</math> pairs even if they are not associated with the outcome <math>y</math>, unlike the earlier regression-based approach which required having all three variables observed <math>(a,y,x)</math>. Imagine using clinical notes and measurements from the electronic health record (EHR) of a large hospital in order to estimate the causal effect of a particular drug on a target disease. Of course, the prescription of the drug <math>a</math> is not made blindly but based on the patient information which includes their underlying health condition. Since the existing health conditions affect the outcome <math>y</math> of almost any kind of a disease, such patient information is a confounder <math>x</math>. Some patients often do not return to the same hospital for follow-up checks, meaning that the EHR does not record the outcome of these patients, leaving us only with <math>(a,x)</math>. We can then use all the prescriptions to approximate the propensity score <math>p(a|x)</math> and then use a subset of these prescriptions for which the patients' outcomes are recorded (i.e. they came back for follow-up visits) to compute the causal effect. | |||

Mathematically, it means that we solve two separate optimization problems using two different data sets (though, one is a superset of the other,) as follows: | |||

<math display="block"> | |||

\begin{align} | |||

&\hat{p}(a|x) = \arg\max_{p} \sum_{(a',x') \in D_{\pi}} \log p(a'|x'), | |||

\\ | |||

&\hat{y}(\hat{a}) = | |||

\frac{\sum_{(a',x',y') \in D_{\bar{y}}} | |||

\mathds{1}(a' = \hat{a}) | |||

\frac{y_n} | |||

{\hat{p}(\hat{a} | x_n)}}{ | |||

\sum_{(a'',x'',y'') \in D_{\bar{y}}} | |||

\mathds{1}(a'' = \hat{a}) | |||

}, | |||

\label{eq:ipw2} | |||

\end{align} | |||

</math> | |||

where <math>D_{\bar{y}} \subseteq D_{\pi}</math>. | |||

'''A doubly robust estimator.''' | |||

A major issue with the IPW-based approach is that the variance of the estimator can be very large, even if the variance of estimating the propensity score is low, because the propensity scores shows up in the denominator. On the other hand, the regression-based approach has a low variance due to the missing division by the propensity score. It is however likely a biased estimator, as we cannot easily guarantee that the choice of a non-parametric regressor can identify the correct conditional probability, due to a variety of issues, such as the lack of realizability. | |||

If the regression-based approach is correct for an instance <math>(a,y,x)</math>, <math>\tilde{y}(a, x)</math> would coincide with <math>y</math>, and we would just use <math>\tilde{y}(a, x)</math> as it is and prefer to avoid the IPW-based estimate due to the potentially large variance arising from the denominator. Otherwise, we want to rely on the IPW-based estimate to correct for the incorrectness arising from the regression-based approach. This can be expressed as | |||

<math display="block"> | |||

\begin{align} | |||

\hat{y}(\hat{a}) | |||

= | |||

\sum_x p(x) | |||

\sum_a | |||

\left( | |||

\frac{1}{|\mathcal{A}|} | |||

\tilde{y}(a,x) | |||

+ | |||

\underbrace{ | |||

p^*(a|x) | |||

\frac{\tfrac{1}{|\mathcal{A}|}}{\hat{p}(a|x)} | |||

}_{(b)} | |||

\left( | |||

\underbrace{ | |||

y^*(a|x) | |||

- | |||

\tilde{y}(a,x) | |||

}_{\mathrm{(a)}} | |||

\right) | |||

\right). | |||

\end{align} | |||

</math> | |||

If <math>\tilde{y}(a,x)</math> is perfect, (a) disappears, as expected. If our estimate of the propensity score is perfect, (b) is <math>1</math>, resulting in using the true <math>y^*(a,x)</math> while ignoring the regression-based approach.<ref group="Notes" > | |||

<math>y^*(a,x)</math> is the true expected outcome given the action <math>a</math> and the covariate <math>x</math>. Since expectation is linear, we can push the expectation all the way inside to obtain this expression. | |||

</ref> | |||

Since we are provided with data rather than the actual probability distributions, we end up with | |||

<math display="block"> | |||

\begin{align} | |||

\hat{y}(\hat{a}) | |||

= | |||

\frac{1}{Z(\hat{a})} | |||

\sum_{(a',x',y') \in D} | |||

\mathds{1}(a' = \hat{a}) | |||

\left( | |||

\tilde{y}(\hat{a}, x_n) | |||

+ | |||

\frac{1} | |||

{\hat{p}(\hat{a} | x_n)} | |||

\left(y_n - \tilde{y}(\hat{a}, x_n)\right) | |||

\right), | |||

\end{align} | |||

</math> | |||

where <math>Z(\hat{a}) = \sum_{(a'',x'',y'') \in D} | |||

\mathds{1}(a'' = \hat{a}).</math> This estimator is called a '' doubly robust estimator''. | |||

===<span id="sec:matching"></span>Matching.=== | |||

Instead of estimating <math>p^*(a|x)</math> and multiplying the observed outcome with its inverse, we can achieve a similar outcome by manipulating data itself. When <math>p^*(a|x) = p^*(a)</math>, that is, the action is independent of the covariate, the IPW-based estimate coincides with simple averaging, just like in RCT from [[guide:Eb6d7b34e1#sec:randomized-controlled-trials |Randomized Controlled Trials]]. This happens when the ratio of actions associated with each unique <math>x</math> in the data is the same across the data set. To make it simpler, let us express this ratio of actions by assigning the minimal number of each action in relation to the other actions. For instance, if our desired ratio between the treatment and placebo is <math>0.8:0.2</math>, we would express it for instance as <math>4:1</math>. | |||

Starting from the original data set <math>D=\left\{(a_1, x_1, y_1), \ldots, (a_N, x_N, y_N) \right\}</math>, we go through each <math>x_n</math> by collecting as many <math>(a,x_n,y) \in D</math> as <math>n_a</math>, where <math>n_a</math> is the target number of examples of action <math>a</math>, for instance <math>4</math> above. This collection can be done randomly or by following some fixed strategy, such as round-robin scheduling. Sometimes it is necessary to choose the same triplet multiple times, which is not ideal but may be necessary. By aggregating these collections, we arrive at a new dataset <math>\tilde{D}</math>. | |||

Under this new dataset <math>\tilde{D}</math>, the propensity score is guaranteed to be | |||

<math display="block"> | |||

\begin{align} | |||

\hat{p}(a|x) \propto n_a, | |||

\end{align} | |||

</math> | |||

regardless of <math>x</math>. Furthermore, <math>\hat{p}(a|x) = \hat{p}(a)</math> as well. Meanwhile, <math>\hat{p}(x)</math> stays the same as that under the original data set <math>D</math>. Then, the expected causal outcome of any given action <math>a</math> under this dataset is | |||

<math display="block"> | |||

\begin{align} | |||

\hat{y}(\hat{a}) = \frac{1}{N} \sum_{n=1}^N \mathds{1}(a'_n=\hat{a}) y'_n, | |||

\end{align} | |||

</math> | |||

where <math>(a'_n, y'_n, x'_n) \in \tilde{D}</math>. This avoids the issue of the high variance arising from the IPW-based approach. This however does not mean that this approach always works. | |||

The most obvious issue with this approach is that the original data set may not have enough triplets associated with each <math>x</math> to ensure that <math>p^*(a|x)</math> is identical for all <math>x</math>. Furthermore, even if we have enough associated triplets for each <math>x</math>, we may end up with discarding many triples from the original data set to form the new data set. We never want to discard data points we have. This approach is thus appropriate when data is already well balanced and the goal is to further ensure that the propensity score is constant. | |||

A relatively simple variant of this approach, called ‘matching’ because we match triplets based on <math>x</math>, is to relax the constraint that we only consider the exact match of the covariate <math>x</math>. The original formulation samples the triplets according to the target counts with replacement from the following multiset:<ref group="Notes" > | |||

It is a multiset, since there can be duplicates. | |||

</ref> | |||

<math display="block"> | |||

\begin{align} | |||

D(x) = \left\{ | |||

(a',y',x') \in D | | |||

x' = x | |||

\right\}. | |||

\end{align} | |||

</math> | |||

This condition of <math>x' = x</math> may be too strict, leaving us with only a very small <math>D(x)</math>. | |||

We can instead relax this condition using a predefined notion of similarity such that | |||

<math display="block"> | |||

\begin{align} | |||

\tilde{D}(x) = \left\{ | |||

(a',y',x') \in D | | |||

s(x',x) < \epsilon | |||

\right\}, | |||

\end{align} | |||

</math> | |||

where <math>s(x',x)</math> is a predefined distance function and <math>\epsilon</math> a distance threshold. | |||

==General references== | |||

{{cite arXiv|last1=Cho|first1=Kyunghyun|year=2024|title=A Brief Introduction to Causal Inference in Machine Learning|eprint=2405.08793|class=cs.LG}} | |||

==Notes== | |||

{{Reflist|group=Notes}} | |||

Latest revision as of 13:45, 19 May 2024

Inverse Probability Weighting

Let us come back to the original graph [math]G[/math] that consists of three variables; [math]a[/math], [math]y[/math] and [math]x[/math], with the following joint probability:

We also assume that we have a set [math]D[/math] of triplets [math](a_n, y_n, x_n)[/math] drawn from this underlying graph [math]G[/math]. In other words, we assume that we observe the confounder in this case. If we have a large such set, i.e. [math]N=|D| \gg 1[/math], we can use regression, as in Regression: Causal Inference can be Trivial, to approximate [math]p^*(y | a, x)[/math] in order to compute the causal effect as

Although this regression-based approach is straightforward, this approach has a disadvantage of having to fit a regressor on the concatenation of the action [math]a[/math] and confounder [math]x[/math]. The issue is exactly that of RCT with partially observed confounders, that is, we must have enough data points for each action-covariate combination in order for regression to have a low variance. We can use regularization to reduce the variance, which may unfortunately introduce a bias.

We can reduce the variance by using regression to estimate a simpler quantity. In particular, we consider approximating [math]p^*(a|x)[/math]. Because this is a map from [math]\mathcal{X}[/math], we just need enough data points for each covariate configuration rather than the action-covariate combination. Approximating [math]p^*(a|x)[/math] allows us to estimate the causal effect using data points drawn from the original graph [math]G[/math] rather than the modified graph [math]\overline{G}[/math] from Randomized Controlled Trials, because

Instead of the true [math]p^*(\hat{a}|x_n)[/math], we plug in the regression-based approximation [math]\hat{p}(\hat{a} | x_n)[/math] and arrive at

In words, we look for all data points within the previously-collected set [math]D[/math] that are associated with the action [math]\hat{a}[/math] of interest. The simple average of the associated outcomes would be a biased estimate of the casual effect, since it combines the effects of [math]a[/math] on [math]y[/math] via two paths; (causal) [math]a \to y[/math] and (spurious) [math]a \leftarrow x \to y[/math]. We correct this bias by weighting each data point, or the associated outcome, by the inverse probability of the action given the confounder, [math]\frac{1}{\hat{p}(\hat{a} | x_n)}[/math]. This approach is thus called inverse probability weighting (IPW), and [math]p^*(a|x)[/math] is often referred to as a propensity score.

It is fine to have missing outcomes. One important advantage of the IPW-based approach is that we can approximate [math]p^*(a|x)[/math] using all [math](a,x)[/math] pairs even if they are not associated with the outcome [math]y[/math], unlike the earlier regression-based approach which required having all three variables observed [math](a,y,x)[/math]. Imagine using clinical notes and measurements from the electronic health record (EHR) of a large hospital in order to estimate the causal effect of a particular drug on a target disease. Of course, the prescription of the drug [math]a[/math] is not made blindly but based on the patient information which includes their underlying health condition. Since the existing health conditions affect the outcome [math]y[/math] of almost any kind of a disease, such patient information is a confounder [math]x[/math]. Some patients often do not return to the same hospital for follow-up checks, meaning that the EHR does not record the outcome of these patients, leaving us only with [math](a,x)[/math]. We can then use all the prescriptions to approximate the propensity score [math]p(a|x)[/math] and then use a subset of these prescriptions for which the patients' outcomes are recorded (i.e. they came back for follow-up visits) to compute the causal effect. Mathematically, it means that we solve two separate optimization problems using two different data sets (though, one is a superset of the other,) as follows:

where [math]D_{\bar{y}} \subseteq D_{\pi}[/math].

A doubly robust estimator.

A major issue with the IPW-based approach is that the variance of the estimator can be very large, even if the variance of estimating the propensity score is low, because the propensity scores shows up in the denominator. On the other hand, the regression-based approach has a low variance due to the missing division by the propensity score. It is however likely a biased estimator, as we cannot easily guarantee that the choice of a non-parametric regressor can identify the correct conditional probability, due to a variety of issues, such as the lack of realizability.

If the regression-based approach is correct for an instance [math](a,y,x)[/math], [math]\tilde{y}(a, x)[/math] would coincide with [math]y[/math], and we would just use [math]\tilde{y}(a, x)[/math] as it is and prefer to avoid the IPW-based estimate due to the potentially large variance arising from the denominator. Otherwise, we want to rely on the IPW-based estimate to correct for the incorrectness arising from the regression-based approach. This can be expressed as

If [math]\tilde{y}(a,x)[/math] is perfect, (a) disappears, as expected. If our estimate of the propensity score is perfect, (b) is [math]1[/math], resulting in using the true [math]y^*(a,x)[/math] while ignoring the regression-based approach.[Notes 1] Since we are provided with data rather than the actual probability distributions, we end up with

where [math]Z(\hat{a}) = \sum_{(a'',x'',y'') \in D} \mathds{1}(a'' = \hat{a}).[/math] This estimator is called a doubly robust estimator.

Matching.

Instead of estimating [math]p^*(a|x)[/math] and multiplying the observed outcome with its inverse, we can achieve a similar outcome by manipulating data itself. When [math]p^*(a|x) = p^*(a)[/math], that is, the action is independent of the covariate, the IPW-based estimate coincides with simple averaging, just like in RCT from Randomized Controlled Trials. This happens when the ratio of actions associated with each unique [math]x[/math] in the data is the same across the data set. To make it simpler, let us express this ratio of actions by assigning the minimal number of each action in relation to the other actions. For instance, if our desired ratio between the treatment and placebo is [math]0.8:0.2[/math], we would express it for instance as [math]4:1[/math]. Starting from the original data set [math]D=\left\{(a_1, x_1, y_1), \ldots, (a_N, x_N, y_N) \right\}[/math], we go through each [math]x_n[/math] by collecting as many [math](a,x_n,y) \in D[/math] as [math]n_a[/math], where [math]n_a[/math] is the target number of examples of action [math]a[/math], for instance [math]4[/math] above. This collection can be done randomly or by following some fixed strategy, such as round-robin scheduling. Sometimes it is necessary to choose the same triplet multiple times, which is not ideal but may be necessary. By aggregating these collections, we arrive at a new dataset [math]\tilde{D}[/math]. Under this new dataset [math]\tilde{D}[/math], the propensity score is guaranteed to be

regardless of [math]x[/math]. Furthermore, [math]\hat{p}(a|x) = \hat{p}(a)[/math] as well. Meanwhile, [math]\hat{p}(x)[/math] stays the same as that under the original data set [math]D[/math]. Then, the expected causal outcome of any given action [math]a[/math] under this dataset is

where [math](a'_n, y'_n, x'_n) \in \tilde{D}[/math]. This avoids the issue of the high variance arising from the IPW-based approach. This however does not mean that this approach always works. The most obvious issue with this approach is that the original data set may not have enough triplets associated with each [math]x[/math] to ensure that [math]p^*(a|x)[/math] is identical for all [math]x[/math]. Furthermore, even if we have enough associated triplets for each [math]x[/math], we may end up with discarding many triples from the original data set to form the new data set. We never want to discard data points we have. This approach is thus appropriate when data is already well balanced and the goal is to further ensure that the propensity score is constant. A relatively simple variant of this approach, called ‘matching’ because we match triplets based on [math]x[/math], is to relax the constraint that we only consider the exact match of the covariate [math]x[/math]. The original formulation samples the triplets according to the target counts with replacement from the following multiset:[Notes 2]

This condition of [math]x' = x[/math] may be too strict, leaving us with only a very small [math]D(x)[/math]. We can instead relax this condition using a predefined notion of similarity such that

where [math]s(x',x)[/math] is a predefined distance function and [math]\epsilon[/math] a distance threshold.

General references

Cho, Kyunghyun (2024). "A Brief Introduction to Causal Inference in Machine Learning". arXiv:2405.08793 [cs.LG].