guide:Ec36399528: Difference between revisions

No edit summary |

mNo edit summary |

||

| (5 intermediate revisions by the same user not shown) | |||

| Line 127: | Line 127: | ||

\newcommand{\outrn}[1]{\mathcal{O}_{\sP_n}[#1]} | \newcommand{\outrn}[1]{\mathcal{O}_{\sP_n}[#1]} | ||

\newcommand{\email}[1]{\texttt{#1}} | \newcommand{\email}[1]{\texttt{#1}} | ||

\newcommand{\possessivecite}[1]{ | \newcommand{\possessivecite}[1]{\citeauthor{#1}'s \citeyear{#1}} | ||

\newcommand\xqed[1]{% | \newcommand\xqed[1]{% | ||

\leavevmode\unskip\penalty9999 \hbox{}\nobreak\hfill | \leavevmode\unskip\penalty9999 \hbox{}\nobreak\hfill | ||

| Line 147: | Line 147: | ||

</math> | </math> | ||

</div> | </div> | ||

The literature reviewed in this chapter starts with the analysis of what can be learned about functionals of probability distributions that are well-defined in the absence of a model. | The literature reviewed in this chapter starts with the analysis of what can be learned about functionals of probability distributions that are well-defined in the absence of a model. | ||

The approach is nonparametric, and it is typically ''constructive'', in the sense that it leads to | The approach is nonparametric, and it is typically ''constructive'', in the sense that it leads to “plug-in” formulae for the bounds on the functionals of interest. | ||

==<span id="subsec:missing_data"></span>Selectively Observed Data== | |||

As in <ref name="man89"></ref>, suppose that a researcher is interested in learning the probability that an individual who is homeless at a given date has a home six months later. | As in <ref name="man89"><span style="font-variant-caps:small-caps">Manski, C.F.</span> (1989): “Anatomy of the Selection Problem” ''The Journal of Human Resources'', 24(3), 343--360.</ref>, suppose that a researcher is interested in learning the probability that an individual who is homeless at a given date has a home six months later. | ||

Here the population of interest is the people who are homeless at the initial date, and the outcome of interest <math>\ey</math> is an indicator of whether the individual has a home six months later (so that <math>\ey=1</math>) or remains homeless (so that <math>\ey=0</math>). | Here the population of interest is the people who are homeless at the initial date, and the outcome of interest <math>\ey</math> is an indicator of whether the individual has a home six months later (so that <math>\ey=1</math>) or remains homeless (so that <math>\ey=0</math>). | ||

A random sample of homeless individuals is interviewed at the initial date, so that individual background attributes <math>\ex</math> are observed, but six months later only a subset of the individuals originally sampled can be located. | A random sample of homeless individuals is interviewed at the initial date, so that individual background attributes <math>\ex</math> are observed, but six months later only a subset of the individuals originally sampled can be located. | ||

| Line 158: | Line 158: | ||

Let <math>\ed</math> be an indicator of whether the individual can be located (hence <math>\ed=1</math>) or not (hence <math>\ed=0</math>). | Let <math>\ed</math> be an indicator of whether the individual can be located (hence <math>\ed=1</math>) or not (hence <math>\ed=0</math>). | ||

The question is what can the researcher learn about <math>\E_\sQ(\ey|\ex=x)</math>, with <math>\sQ</math> the distribution of <math>(\ey,\ex)</math>? | The question is what can the researcher learn about <math>\E_\sQ(\ey|\ex=x)</math>, with <math>\sQ</math> the distribution of <math>(\ey,\ex)</math>? | ||

<ref name="man89" | <ref name="man89"/> showed that <math>\E_\sQ(\ey|\ex=x)</math> is not point identified in the absence of additional assumptions, but informative nonparametric bounds on this quantity can be obtained. | ||

In this section I review his approach, and discuss several important extensions of his original idea. | In this section I review his approach, and discuss several important extensions of his original idea. | ||

Throughout the chapter, I formally state the structure of the problem under study as an | Throughout the chapter, I formally state the structure of the problem under study as an “Identification Problem”, and then provide a solution, either in the form of a sharp identification region, or of an outer region. | ||

To set the stage, and at the cost of some repetition, I do the same here, slightly generalizing the question stated in the previous paragraph. | To set the stage, and at the cost of some repetition, I do the same here, slightly generalizing the question stated in the previous paragraph. | ||

{{proofcard|Identification Problem (Conditional Expectation of Selectively Observed Data)|IP:bounds:mean:md| | |||

Let <math>\ey \in \mathcal{Y}\subset \R</math> and <math>\ex \in \mathcal{X}\subset \R^d</math> be, respectively, an outcome variable and a vector of covariates with support <math>\cY</math> and <math>\cX</math> respectively, with <math>\cY</math> a compact set. | Let <math>\ey \in \mathcal{Y}\subset \R</math> and <math>\ex \in \mathcal{X}\subset \R^d</math> be, respectively, an outcome variable and a vector of covariates with support <math>\cY</math> and <math>\cX</math> respectively, with <math>\cY</math> a compact set. | ||

Let <math>\ed \in \{0,1\}</math>. | Let <math>\ed \in \{0,1\}</math>. | ||

| Line 171: | Line 170: | ||

Let <math>y_{j}\in\cY</math> be such that <math>g(y_j)=g_j</math>, <math>j=0,1</math>.<ref group="Notes" >The bounds <math>g_0,g_1</math> and the values <math>y_0,y_1</math> at which they are attained may differ for different functions <math>g(\cdot)</math>.</ref> | Let <math>y_{j}\in\cY</math> be such that <math>g(y_j)=g_j</math>, <math>j=0,1</math>.<ref group="Notes" >The bounds <math>g_0,g_1</math> and the values <math>y_0,y_1</math> at which they are attained may differ for different functions <math>g(\cdot)</math>.</ref> | ||

In the absence of additional information, what can the researcher learn about <math>\E_\sQ(g(\ey)|\ex=x)</math>, with <math>\sQ</math> the distribution of <math>(\ey,\ex)</math>? | In the absence of additional information, what can the researcher learn about <math>\E_\sQ(g(\ey)|\ex=x)</math>, with <math>\sQ</math> the distribution of <math>(\ey,\ex)</math>? | ||

|}} | |||

<ref name="man89" | <ref name="man89"/>’s analysis of this problem begins with a simple application of the law of total probability, that yields | ||

<math display="block"> | <math display="block"> | ||

| Line 184: | Line 183: | ||

Hence, <math>\sQ(\ey|\ex=x)</math> is not point identified. | Hence, <math>\sQ(\ey|\ex=x)</math> is not point identified. | ||

If one were to assume ''exogenous selection'' (or data missing at random conditional on <math>\ex</math>), i.e., <math>\sR(\ey|\ex,\ed=0)=\sP(\ey|\ex,\ed=1)</math>, point identification would obtain. | If one were to assume ''exogenous selection'' (or data missing at random conditional on <math>\ex</math>), i.e., <math>\sR(\ey|\ex,\ed=0)=\sP(\ey|\ex,\ed=1)</math>, point identification would obtain. | ||

However, that assumption is non-refutable and it is well known that it may fail in [[guide:7b0105e1fc#sec:misspec | | However, that assumption is non-refutable and it is well known that it may fail in applications <ref group="Notes" >[[guide:7b0105e1fc#sec:misspec |Section]] discusses the consequences of model misspecification (with respect to refutable assumptions)</ref>. | ||

Let <math>\cT</math> denote the space of all probability measures with support in <math>\cY</math>. The unknown functional vector is <math>\{\tau(x),\upsilon(x)\}\equiv \{\sQ(\ey|\ex=x),\sR(\ey|\ex=x,\ed=0)\}</math>. | Let <math>\cT</math> denote the space of all probability measures with support in <math>\cY</math>. The unknown functional vector is <math>\{\tau(x),\upsilon(x)\}\equiv \{\sQ(\ey|\ex=x),\sR(\ey|\ex=x,\ed=0)\}</math>. | ||

What the researcher can learn, in the absence of additional restrictions on <math>\sR(\ey|\ex=x,\ed=0)</math>, is the region of ''observationally equivalent'' distributions for <math>\ey|\ex=x</math>, and the associated set of expectations taken with respect to these distributions. | What the researcher can learn, in the absence of additional restrictions on <math>\sR(\ey|\ex=x,\ed=0)</math>, is the region of ''observationally equivalent'' distributions for <math>\ey|\ex=x</math>, and the associated set of expectations taken with respect to these distributions. | ||

{{proofcard|Theorem (Conditional Expectations of Selectively Observed Data)|SIR:prob:E:md|Under the assumptions in Identification [[#IP:bounds:mean:md |Problem]], | |||

Under the assumptions in Identification [[#IP:bounds:mean:md |Problem]], | |||

<math display="block"> | <math display="block"> | ||

| Line 198: | Line 196: | ||

</math> | </math> | ||

is the sharp identification region for <math>\E_\sQ(g(\ey)|\ex=x)</math>. | is the sharp identification region for <math>\E_\sQ(g(\ey)|\ex=x)</math>. | ||

| | |||

Due to the discussion following equation \eqref{eq:LTP_md}, the collection of observationally equivalent distribution functions for <math>\ey|\ex=x</math> is | Due to the discussion following equation \eqref{eq:LTP_md}, the collection of observationally equivalent distribution functions for <math>\ey|\ex=x</math> is | ||

| Line 210: | Line 208: | ||

Next, observe that the lower bound in equation \eqref{eq:bounds:mean:md} is achieved by integrating <math>g(\ey)</math> against the distribution <math>\tau(x)</math> that results when <math>\upsilon(x)</math> places probability one on <math>y_0</math>. The upper bound is achieved by integrating <math>g(\ey)</math> against the distribution <math>\tau(x)</math> that results when <math>\upsilon(x)</math> places probability one on <math>y_1</math>. | Next, observe that the lower bound in equation \eqref{eq:bounds:mean:md} is achieved by integrating <math>g(\ey)</math> against the distribution <math>\tau(x)</math> that results when <math>\upsilon(x)</math> places probability one on <math>y_0</math>. The upper bound is achieved by integrating <math>g(\ey)</math> against the distribution <math>\tau(x)</math> that results when <math>\upsilon(x)</math> places probability one on <math>y_1</math>. | ||

Both are contained in the set <math>\idr{\sQ(\ey|\ex=x)}</math> in equation \eqref{eq:Tau_md}. | Both are contained in the set <math>\idr{\sQ(\ey|\ex=x)}</math> in equation \eqref{eq:Tau_md}. | ||

}} | |||

These are the ''worst case bounds'', so called because they are assumption free and therefore represent the widest possible range of values for the parameter of interest that are consistent with the observed data. | These are the ''worst case bounds'', so called because they are assumption free and therefore represent the widest possible range of values for the parameter of interest that are consistent with the observed data. | ||

A simple | A simple “plug-in” estimator for <math>\idr{\E_\sQ(g(\ey)|\ex=x)}</math> replaces all unknown quantities in \eqref{eq:bounds:mean:md} with consistent estimators, obtained, e.g., by kernel or sieve regression. | ||

I return to consistent estimation of partially identified parameters in [[guide:6d1a428897#sec:inference |Section]]. | I return to consistent estimation of partially identified parameters in [[guide:6d1a428897#sec:inference |Section]]. | ||

Here I emphasize that identification problems are fundamentally distinct from finite sample inference problems. | Here I emphasize that identification problems are fundamentally distinct from finite sample inference problems. | ||

The latter are typically reduced as sample size increases (because, e.g., the variance of the estimator becomes smaller). | The latter are typically reduced as sample size increases (because, e.g., the variance of the estimator becomes smaller). | ||

The former do not improve, unless a different and better type of data is collected, e.g. with a smaller prevalence of missing data (see <ref name="dom:man17"></ref>{{rp|at=for a discussion}}). | The former do not improve, unless a different and better type of data is collected, e.g. with a smaller prevalence of missing data (see <ref name="dom:man17"><span style="font-variant-caps:small-caps">Dominitz, J., <span style="font-variant-caps:normal">and</span> C.F. Manski</span> (2017): “More Data or Better Data? A Statistical Decision Problem” ''The Review of Economic Studies'', 84(4), 1583--1605.</ref>{{rp|at=for a discussion}}). | ||
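As a rough illustration of the plug-in idea described above, the following sketch computes worst case bounds within a single covariate cell, using sample frequencies in place of kernel or sieve estimators. It assumes the bounds take the standard form <math>\E_\sP(g(\ey)|\ex=x,\ed=1)\sP(\ed=1|\ex=x)+g_j\sP(\ed=0|\ex=x)</math>, <math>j=0,1</math>, which is what the proof of the theorem describes. The function name <code>worst_case_bounds</code> and the toy data are hypothetical and serve only as an example.

```python
from statistics import mean

def worst_case_bounds(y, d, g=lambda v: v, g0=0.0, g1=1.0):
    """Plug-in worst case bounds on E[g(y)] within one covariate cell.

    y      : outcomes; entries with d == 0 are ignored because y is unobserved there
    d      : 1 if the outcome is observed, 0 if it is missing
    g0, g1 : assumed known lower and upper bounds of g on the support of y
    """
    n = len(d)
    observed = [g(yi) for yi, di in zip(y, d) if di == 1]
    p_obs = len(observed) / n                      # estimate of P(d = 1)
    mean_obs = mean(observed) if observed else 0.0  # estimate of E[g(y) | d = 1]
    # Missing outcomes are assigned the smallest (largest) possible value of g.
    lower = mean_obs * p_obs + g0 * (1 - p_obs)
    upper = mean_obs * p_obs + g1 * (1 - p_obs)
    return lower, upper

# Hypothetical cell: 6 individuals sampled, 4 located, 3 of whom have a home.
y = [1, 1, 0, 1, None, None]
d = [1, 1, 1, 1, 0, 0]
lower, upper = worst_case_bounds(y, d)
```

In this toy cell the width of the interval, <code>upper - lower</code>, equals the estimated share of missing outcomes times <math>g_1-g_0</math>. A larger sample does not shrink it unless fewer outcomes are missing, which is the distinction between identification and finite sample inference drawn above.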

<ref name="man03"></ref>{{rp|at=Section 1.3}} shows that the proof of Theorem [[#SIR:prob:E:md |SIR-]] can be extended to obtain the smallest and largest points in the sharp identification region of any parameter that respects stochastic dominance.<ref group="Notes" > | <ref name="man03"><span style="font-variant-caps:small-caps">Manski, C.F.</span> (2003): ''Partial Identification of Probability Distributions'', Springer Series in Statistics. Springer.</ref>{{rp|at=Section 1.3}} shows that the proof of Theorem [[#SIR:prob:E:md |SIR-]] can be extended to obtain the smallest and largest points in the sharp identification region of any parameter that respects stochastic dominance.<ref group="Notes" > | ||

Recall that a probability distribution <math>\sF\in\cT</math> stochastically dominates <math>\sF^\prime\in\cT</math> if <math>\sF(-\infty,t]\le \sF^\prime(-\infty,t]</math> for all <math>t\in\R</math>. A real-valued functional <math>\sd:\cT\to\R</math> respects stochastic dominance if <math>\sd(\sF)\ge \sd(\sF^\prime)</math> whenever <math>\sF</math> stochastically dominates <math>\sF^\prime</math>.</ref> | Recall that a probability distribution <math>\sF\in\cT</math> stochastically dominates <math>\sF^\prime\in\cT</math> if <math>\sF(-\infty,t]\le \sF^\prime(-\infty,t]</math> for all <math>t\in\R</math>. A real-valued functional <math>\sd:\cT\to\R</math> respects stochastic dominance if <math>\sd(\sF)\ge \sd(\sF^\prime)</math> whenever <math>\sF</math> stochastically dominates <math>\sF^\prime</math>.</ref> | ||

This is especially useful to bound the quantiles of <math>\ey|\ex=x</math>. | This is especially useful to bound the quantiles of <math>\ey|\ex=x</math>. | ||

| Line 242: | Line 240: | ||

By comparison, for any value of <math>\alpha</math>, <math>r(\alpha,x)</math> and <math>s(\alpha,x)</math> are | By comparison, for any value of <math>\alpha</math>, <math>r(\alpha,x)</math> and <math>s(\alpha,x)</math> are | ||

generically informative if, respectively, <math>\sP(\ed=1|\ex=x) > 1-\alpha</math> and <math>\sP(\ed=1|\ex=x) \ge \alpha</math>, regardless of the range of <math>g</math>. | generically informative if, respectively, <math>\sP(\ed=1|\ex=x) > 1-\alpha</math> and <math>\sP(\ed=1|\ex=x) \ge \alpha</math>, regardless of the range of <math>g</math>. | ||

<ref name="sto10"></ref> further extends partial identification analysis to the study of spread parameters in the presence of missing data (as well as interval data, data combinations, and other applications). | <ref name="sto10"><span style="font-variant-caps:small-caps">Stoye, J.</span> (2010): “Partial identification of spread parameters” ''Quantitative Economics'', 1(2), 323--357.</ref> further extends partial identification analysis to the study of spread parameters in the presence of missing data (as well as interval data, data combinations, and other applications). | ||

These parameters include ones that respect second order stochastic dominance, such as the variance, the Gini coefficient, and other inequality measures, as well as other measures of dispersion which do not respect second order stochastic dominance, such as interquartile range and ratio.<ref group="Notes" > | These parameters include ones that respect second order stochastic dominance, such as the variance, the Gini coefficient, and other inequality measures, as well as other measures of dispersion which do not respect second order stochastic dominance, such as interquartile range and ratio.<ref group="Notes" > | ||

Earlier related work includes, e.g., | Earlier related work includes, e.g., {{ref|name=gas72}} and {{ref|name=cow91}}, who obtain worst case bounds on the sample Gini coefficient under the assumption that one knows the income bracket but not the exact income of every household.</ref> | ||

<ref name="sto10" | <ref name="sto10"/> shows that the sharp identification region for these parameters can be obtained by fixing the mean or quantile of the variable of interest at a specific value within its sharp identification region, and deriving a distribution consistent with this value which is “compressed” with respect to the ones which bound the cumulative distribution function (CDF) of the variable of interest, and one which is “dispersed” with respect to them. | ||

Heuristically, the compressed distribution minimizes spread, while the dispersed one maximizes it (the sense in which this optimization occurs is formally defined in the paper). | Heuristically, the compressed distribution minimizes spread, while the dispersed one maximizes it (the sense in which this optimization occurs is formally defined in the paper). | ||

The intuition for this is that a compressed CDF is first below and then above any non-compressed one; a dispersed CDF is first above and then below any non-dispersed one. | The intuition for this is that a compressed CDF is first below and then above any non-compressed one; a dispersed CDF is first above and then below any non-dispersed one. | ||

| Line 251: | Line 249: | ||

The main results of the paper are sharp identification regions for the expectation and variance, for the median and interquartile ratio, and for many other combinations of parameters. | The main results of the paper are sharp identification regions for the expectation and variance, for the median and interquartile ratio, and for many other combinations of parameters. | ||

'''Key Insight (Identification is not a binary event):''' | |||

<span id="big_idea:id_not_binary"/> | |||

Identification [[#IP:bounds:mean:md |Problem]] is mathematically simple, but it puts forward a new approach to empirical research. | <i>Identification [[#IP:bounds:mean:md |Problem]] is mathematically simple, but it puts forward a new approach to empirical research. | ||

The traditional approach aims at finding a sufficient (possibly minimal) set of assumptions guaranteeing point identification of parameters, viewing identification as an | The traditional approach aims at finding a sufficient (possibly minimal) set of assumptions guaranteeing point identification of parameters, viewing identification as an “all or nothing” notion, where either the functional of interest can be learned exactly or nothing of value can be learned. | ||

The partial identification approach pioneered by <ref name="man89" | The partial identification approach pioneered by <ref name="man89"/> points out that much can be learned from combinations of data and assumptions that restrict the functionals of interest to a set of observationally equivalent values, even if this set is not a singleton. | ||

Along the way, <ref name="man89" | Along the way, <ref name="man89"/> points out that in Identification [[#IP:bounds:mean:md |Problem]] the observed outcome is the singleton <math>\ey</math> when <math>\ed=1</math>, and the set <math>\cY</math> when <math>\ed=0</math>. | ||

This is a random closed set, see [[guide:379e0dcd67#def:rcs |Definition]]. | This is a random closed set, see [[guide:379e0dcd67#def:rcs |Definition]]. | ||

I return to this connection in Section [[#subsec:interval_data |Interval Data]]. | I return to this connection in Section [[#subsec:interval_data |Interval Data]].</i> | ||

Despite how transparent the framework in Identification [[#IP:bounds:mean:md |Problem]] is, important subtleties arise even in this seemingly simple context. | Despite how transparent the framework in Identification [[#IP:bounds:mean:md |Problem]] is, important subtleties arise even in this seemingly simple context. | ||

For a given <math>t\in\R</math>, consider the function <math>g(\ey)=\one(\ey\le t)</math>, with <math>\one(A)</math> the indicator function taking the value one if the logical condition in parentheses holds and zero otherwise. | For a given <math>t\in\R</math>, consider the function <math>g(\ey)=\one(\ey\le t)</math>, with <math>\one(A)</math> the indicator function taking the value one if the logical condition in parentheses holds and zero otherwise. | ||

| Line 271: | Line 269: | ||

\end{multline} | \end{multline} | ||

</math> | </math> | ||

Yet, the collection of CDFs that belong to the band defined by \eqref{eq:pointwise_bounds_F_md} is ''not'' the sharp identification region for the CDF of <math>\ey|\ex=x</math>. Rather, it constitutes an ''outer region'', as originally pointed out by <ref name="man94"></ref>{{rp|at=p. 149 and note 2}}. | Yet, the collection of CDFs that belong to the band defined by \eqref{eq:pointwise_bounds_F_md} is ''not'' the sharp identification region for the CDF of <math>\ey|\ex=x</math>. Rather, it constitutes an ''outer region'', as originally pointed out by <ref name="man94"><span style="font-variant-caps:small-caps">Manski, C.F.</span> (1994): “The selection problem” in ''Advances in Econometrics: Sixth World Congress'', ed. by C.A. Sims, vol.1 of ''Econometric Society Monographs'', pp. 143--170. Cambridge University Press.</ref>{{rp|at=p. 149 and note 2}}. | ||

{{proofcard|Theorem (Cumulative Distribution Function of Selectively Observed Data)|OR:CDF_md|Let <math>\cC</math> denote the collection of cumulative distribution functions on <math>\cY</math>. | |||

Let <math>\cC</math> denote the collection of cumulative distribution functions on <math>\cY</math>. | |||

Then, under the assumptions in Identification [[#IP:bounds:mean:md |Problem]], | Then, under the assumptions in Identification [[#IP:bounds:mean:md |Problem]], | ||

| Line 285: | Line 282: | ||

</math> | </math> | ||

is an outer region for the CDF of <math>\ey|\ex=x</math>. | is an outer region for the CDF of <math>\ey|\ex=x</math>.| | ||

Any admissible CDF for <math>\ey|\ex=x</math> belongs to the family of functions in equation \eqref{eq:outer_cdf_md}. However, the bound in equation \eqref{eq:outer_cdf_md} does not impose the restriction that for any <math>t_0\le t_1</math>, | Any admissible CDF for <math>\ey|\ex=x</math> belongs to the family of functions in equation \eqref{eq:outer_cdf_md}. However, the bound in equation \eqref{eq:outer_cdf_md} does not impose the restriction that for any <math>t_0\le t_1</math>, | ||

| Line 297: | Line 292: | ||

</math> | </math> | ||

This restriction is implied by the maintained assumptions, but is not necessarily satisfied by all CDFs in <math>\outr{\sF(\ey|\ex=x)}</math>, as illustrated in the following simple example. | This restriction is implied by the maintained assumptions, but is not necessarily satisfied by all CDFs in <math>\outr{\sF(\ey|\ex=x)}</math>, as illustrated in the following simple example. | ||

}} | |||

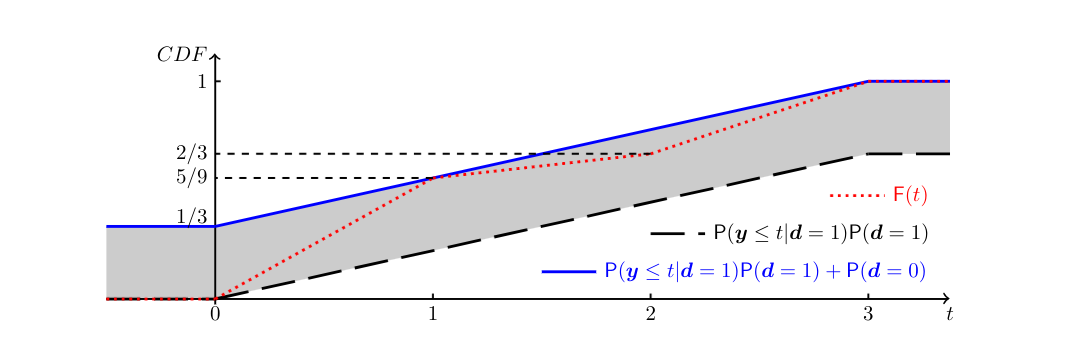

<div id="fig:boundsCDF:md" class="d-flex justify-content-center"> | <div id="fig:boundsCDF:md" class="d-flex justify-content-center"> | ||

[[File:guide_d9532_fig_boundsCDF_md.png | | [[File:guide_d9532_fig_boundsCDF_md.png | 700px | thumb | The tube defined by inequalities \eqref{eq:pointwise_bounds_F_md} in the set-up of [[#example:CDF_md |Example]], and the CDF in \eqref{eq:CDF_counterexample_md}. ]] | ||

</div> | </div> | ||

<span id="example:CDF_md"/> | |||

'''Example''' | |||

Omit <math>\ex</math> for simplicity, let <math>\sP(\ed=1)=\frac{2}{3}</math>, and let | Omit <math>\ex</math> for simplicity, let <math>\sP(\ed=1)=\frac{2}{3}</math>, and let | ||

<math display="block"> | <math display="block"> | ||

\sP(\ey\le t|\ed=1)=\left\{ | \sP(\ey\le t|\ed=1)=\left\{ | ||

\begin{ | \begin{array}{lll} | ||

0 & \textrm{if} & t < 0,\\ | |||

\frac{1}{3}t & \textrm{if} & 0\le t < 3,\\ | |||

1 & \textrm{if} & t\ge 3. | |||

\end{ | \end{array} | ||

\right. | \right. | ||

</math> | </math> | ||

The bounding functions and associated tube from the inequalities in \eqref{eq:pointwise_bounds_F_md} are depicted in [[#fig:boundsCDF:md|Figure]]. | The bounding functions and associated tube from the inequalities in \eqref{eq:pointwise_bounds_F_md} are depicted in [[#fig:boundsCDF:md|Figure]]. | ||

Consider the cumulative distribution function | Consider the cumulative distribution function | ||

| Line 324: | Line 322: | ||

\sF(t)= | \sF(t)= | ||

\left\{ | \left\{ | ||

\begin{ | \begin{array}{lll} | ||

0 & \textrm{if}\,\, & t < 0,\\ | |||

\frac{5}{9}t & \textrm{if} & 0\le t < 1,\\ | |||

\frac{1}{9}t+\frac{4}{9} & \textrm{if} & 1\le t < 2,\\ | |||

\frac{1}{3}t & \textrm{if} & 2\le t < 3,\\ | |||

1 & \textrm{if} & t\ge 3. | |||

\end{ | \end{array} | ||

\right. | \right. | ||

\end{align} | \end{align} | ||

</math> | </math> | ||

For each <math>t\in\R</math>, <math>\sF(t)</math> lies in the tube defined by equation \eqref{eq:pointwise_bounds_F_md}. | For each <math>t\in\R</math>, <math>\sF(t)</math> lies in the tube defined by equation \eqref{eq:pointwise_bounds_F_md}. | ||

However, it cannot be the CDF of <math>\ey</math>, because <math>\sF(2)-\sF(1)=\frac{1}{9} < \sP(1\le\ey\le 2|\ed=1)\sP(\ed=1)</math>, directly contradicting equation \eqref{eq:CDF_md_Kinterval}. | However, it cannot be the CDF of <math>\ey</math>, because <math>\sF(2)-\sF(1)=\frac{1}{9} < \sP(1\le\ey\le 2|\ed=1)\sP(\ed=1)</math>, directly contradicting equation \eqref{eq:CDF_md_Kinterval}. | ||
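The arithmetic in this example can be checked with exact fractions. The sketch below assumes that the tube in \eqref{eq:pointwise_bounds_F_md} has the usual form, with lower envelope <math>\sP(\ey\le t|\ed=1)\sP(\ed=1)</math> and upper envelope equal to the lower one plus <math>\sP(\ed=0)</math>. The helper names and the evaluation grid are illustrative choices, not part of the original example.

```python
from fractions import Fraction as Fr

P_D1 = Fr(2, 3)  # P(d = 1) from the example

def cdf_observed(t):
    """P(y <= t | d = 1) from the example: uniform on [0, 3]."""
    if t < 0:
        return Fr(0)
    if t < 3:
        return Fr(t) / 3
    return Fr(1)

def candidate_cdf(t):
    """The candidate CDF F(t) from the example."""
    if t < 0:
        return Fr(0)
    if t < 1:
        return Fr(5, 9) * t
    if t < 2:
        return Fr(1, 9) * t + Fr(4, 9)
    if t < 3:
        return Fr(t) / 3
    return Fr(1)

def in_tube(t):
    """Check the pointwise bounds at t (assumed form of the tube)."""
    lower = cdf_observed(t) * P_D1
    upper = lower + (1 - P_D1)
    return lower <= candidate_cdf(t) <= upper

# Pointwise check on a fine grid covering [-1, 4].
grid = [Fr(k, 100) for k in range(-100, 401)]
stays_in_tube = all(in_tube(t) for t in grid)

# Mass that F assigns to [1, 2] versus the mass the observed data require.
mass_F = candidate_cdf(Fr(2)) - candidate_cdf(Fr(1))                        # 1/9
required_mass = (cdf_observed(Fr(2)) - cdf_observed(Fr(1))) * P_D1  # 2/9
```

The candidate passes every pointwise check on the grid, yet it assigns mass <math>\frac{1}{9}</math> to <math>[1,2]</math> while the observed outcomes alone already account for mass <math>\frac{2}{9}</math> on that interval.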

How can one characterize the sharp identification region for the CDF of <math>\ey|\ex=x</math> under the assumptions in Identification [[#IP:bounds:mean:md |Problem]]? | How can one characterize the sharp identification region for the CDF of <math>\ey|\ex=x</math> under the assumptions in Identification [[#IP:bounds:mean:md |Problem]]? | ||

In general, there is not a single answer to this question: different methodologies can be used. | In general, there is not a single answer to this question: different methodologies can be used. | ||

Here I use results in <ref name="man03" | Here I use results in <ref name="man03"/>{{rp|at=Corollary 1.3.1}} and <ref name="mol:mol18"><span style="font-variant-caps:small-caps">Molchanov, I., <span style="font-variant-caps:normal">and</span> F.Molinari</span> (2018): ''Random Sets in Econometrics''. Econometric Society Monograph Series, Cambridge University Press, Cambridge UK.</ref>{{rp|at=Theorem 2.25}}, which yield an alternative characterization of <math>\idr{\sQ(\ey|\ex=x)}</math> that translates directly into a characterization of <math>\idr{\sF(\ey|\ex=x)}</math>.<ref group="Notes" >Whereas {{ref|name=man94}} is very clear that the collection of CDFs in \eqref{eq:pointwise_bounds_F_md} is an outer region for the CDF of <math>\ey|\ex=x</math>, and {{ref|name=man03}} provides the sharp characterization in \eqref{eq:sharp_id_P_md_Manski}, {{ref|name=man07a}}{{rp|at=p. 39}} does not state all the requirements that characterize <math>\idr{\sF(\ey|\ex=x)}</math>.</ref> | ||

{{proofcard|Theorem (Conditional Distribution and CDF of Selectively Observed Data)|SIR:CDF_md| | |||

Given <math>\tau\in\cT</math>, let <math>\tau_K(x)</math> denote the probability that distribution <math>\tau</math> assigns to set <math>K</math> conditional on <math>\ex=x</math>, with <math>\tau_y(x)\equiv\tau_{\{y\}}(x)</math>. | Given <math>\tau\in\cT</math>, let <math>\tau_K(x)</math> denote the probability that distribution <math>\tau</math> assigns to set <math>K</math> conditional on <math>\ex=x</math>, with <math>\tau_y(x)\equiv\tau_{\{y\}}(x)</math>. | ||

Under the assumptions in Identification [[#IP:bounds:mean:md |Problem]], | Under the assumptions in Identification [[#IP:bounds:mean:md |Problem]], | ||

| Line 367: | Line 365: | ||

\end{multline} | \end{multline} | ||

</math> | </math> | ||

| | |||

The characterization in \eqref{eq:sharp_id_P_md_Manski} follows from equation \eqref{eq:Tau_md}, observing that if <math>\tau(x)\in\idr{\sQ(\ey|\ex=x)}</math> as defined in equation \eqref{eq:Tau_md}, then there exists a distribution <math>\upsilon(x)\in\cT</math> such that <math>\tau(x) = \sP(\ey|\ex=x,\ed=1)\sP(\ed=1|\ex=x)+\upsilon(x)\sP(\ed=0|\ex=x)</math>. | The characterization in \eqref{eq:sharp_id_P_md_Manski} follows from equation \eqref{eq:Tau_md}, observing that if <math>\tau(x)\in\idr{\sQ(\ey|\ex=x)}</math> as defined in equation \eqref{eq:Tau_md}, then there exists a distribution <math>\upsilon(x)\in\cT</math> such that <math>\tau(x) = \sP(\ey|\ex=x,\ed=1)\sP(\ed=1|\ex=x)+\upsilon(x)\sP(\ed=0|\ex=x)</math>. | ||

Hence, by construction <math>\tau_K(x) \ge \sP(\ey\in K|\ex=x,\ed=1)\sP(\ed=1|\ex=x)</math>, <math>\forall K\subset \cY</math>. Conversely, if one has <math>\tau_K(x) \ge \sP(\ey\in K|\ex=x,\ed=1)\sP(\ed=1|\ex=x)</math>, <math>\forall K\subset \cY</math>, one can define <math>\upsilon(x)=\frac{\tau(x) - \sP(\ey|\ex=x,\ed=1)\sP(\ed=1|\ex=x)}{\sP(\ed=0|\ex=x)}</math>. | Hence, by construction <math>\tau_K(x) \ge \sP(\ey\in K|\ex=x,\ed=1)\sP(\ed=1|\ex=x)</math>, <math>\forall K\subset \cY</math>. Conversely, if one has <math>\tau_K(x) \ge \sP(\ey\in K|\ex=x,\ed=1)\sP(\ed=1|\ex=x)</math>, <math>\forall K\subset \cY</math>, one can define <math>\upsilon(x)=\frac{\tau(x) - \sP(\ey|\ex=x,\ed=1)\sP(\ed=1|\ex=x)}{\sP(\ed=0|\ex=x)}</math>. | ||

| Line 380: | Line 378: | ||

\end{multline} | \end{multline} | ||

</math> | </math> | ||

The result in equation \eqref{eq:sharp_id_P_md_interval} is proven in <ref name="mol:mol18" | The result in equation \eqref{eq:sharp_id_P_md_interval} is proven in <ref name="mol:mol18"/>{{rp|at=Theorem 2.25}} using elements of random set theory, to which I return in Section [[#subsec:interval_data |Interval Data]]. | ||

Using elements of random set theory, it is also possible to show that the characterization in \eqref{eq:sharp_id_P_md_Manski} only requires checking the inequalities for <math>K</math> ranging over the compact subsets of <math>\cY</math>. | Using elements of random set theory, it is also possible to show that the characterization in \eqref{eq:sharp_id_P_md_Manski} only requires checking the inequalities for <math>K</math> ranging over the compact subsets of <math>\cY</math>. | ||

}} | |||
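The converse step of the proof, which recovers <math>\upsilon(x)</math> from a candidate <math>\tau(x)</math>, can be sketched for an outcome with finite support. On a finite support, additivity implies that the inequalities for all sets <math>K</math> hold as soon as they hold for singletons, so it suffices to check that the implied <math>\upsilon</math> has no negative mass. The function name and the numbers below are hypothetical; the sketch assumes <math>\sP(\ed=1)<1</math> and that the candidate <math>\tau</math> sums to one.

```python
from fractions import Fraction as Fr

def implied_missing_distribution(tau, p_obs_dist, p_d1):
    """Return upsilon = (tau - P(y | d=1) P(d=1)) / P(d=0) as a dict,
    or None if some atom gets negative mass, i.e. tau is not in the
    sharp identification region.

    tau        : candidate distribution of y, as {value: probability}
    p_obs_dist : distribution of y given d = 1, as {value: probability}
    p_d1       : P(d = 1), assumed strictly less than one
    """
    support = set(tau) | set(p_obs_dist)
    upsilon = {}
    for y in support:
        mass = (tau.get(y, Fr(0)) - p_obs_dist.get(y, Fr(0)) * p_d1) / (1 - p_d1)
        if mass < 0:
            return None  # tau_y < P(y | d=1) P(d=1): inequality violated
        upsilon[y] = mass
    return upsilon

# Hypothetical binary outcome: P(y=1 | d=1) = 3/4 and P(d=1) = 2/3.
p_obs_dist = {0: Fr(1, 4), 1: Fr(3, 4)}
p_d1 = Fr(2, 3)

# Candidate with P(y=1) = 2/3, inside the worst case bounds [1/2, 5/6].
upsilon_ok = implied_missing_distribution({0: Fr(1, 3), 1: Fr(2, 3)}, p_obs_dist, p_d1)

# Candidate with P(y=1) = 9/10, above the upper bound 5/6.
upsilon_bad = implied_missing_distribution({0: Fr(1, 10), 1: Fr(9, 10)}, p_obs_dist, p_d1)
```

For the first candidate the implied distribution of the missing outcomes is a proper probability distribution, so the candidate is observationally equivalent to the data. For the second, no such distribution exists.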

This section provides sharp identification regions and outer regions for a variety of functionals of interest. | This section provides sharp identification regions and outer regions for a variety of functionals of interest. | ||

The computational complexity of these characterizations varies widely. | The computational complexity of these characterizations varies widely. | ||

| Line 389: | Line 387: | ||

A sharp identification region on the CDF requires evaluating the probability that a certain distribution assigns to all intervals. | A sharp identification region on the CDF requires evaluating the probability that a certain distribution assigns to all intervals. | ||

I return to computational challenges in partial identification in [[guide:A85a6b6ff1#sec:computations |Section]]. | I return to computational challenges in partial identification in [[guide:A85a6b6ff1#sec:computations |Section]]. | ||

==<span id="subsec:programme:eval"></span>Treatment Effects with and without Instrumental Variables== | |||

The discussion of partial identification of probability distributions of selectively observed data naturally leads to the question of its implications for program evaluation. | The discussion of partial identification of probability distributions of selectively observed data naturally leads to the question of its implications for program evaluation. | ||

The literature on program evaluation is vast. | The literature on program evaluation is vast. | ||

| Line 396: | Line 395: | ||

To keep this chapter to a manageable length, I discuss only partial identification of the average response to a treatment and of the average treatment effect (ATE). | To keep this chapter to a manageable length, I discuss only partial identification of the average response to a treatment and of the average treatment effect (ATE). | ||

Many other parameters have received much interest in the literature. | Many other parameters have received much interest in the literature. | ||

Examples include the ''local average treatment effect'' of <ref name="imb:ang94"></ref> and the ''marginal treatment effect'' of | Examples include the ''local average treatment effect'' of <ref name="imb:ang94"><span style="font-variant-caps:small-caps">Imbens, G.W., <span style="font-variant-caps:normal">and</span> J.D. Angrist</span> (1994): “Identification and Estimation of Local Average Treatment Effects” ''Econometrica'', 62(2), 467--475.</ref> and the ''marginal treatment effect'' of | ||

<ref name="hec:vyt99"></ref><ref name="hec:vyt01"></ref><ref name="hec:vyt05"></ref>. | <ref name="hec:vyt99"><span style="font-variant-caps:small-caps">Heckman, J.J., <span style="font-variant-caps:normal">and</span> E.J. Vytlacil</span> (1999): “Local Instrumental Variables and Latent Variable Models for Identifying and Bounding Treatment Effects” ''Proceedings of the National Academy of Sciences of the United States of America'', 96(8), 4730--4734.</ref><ref name="hec:vyt01"><span style="font-variant-caps:small-caps">Heckman, J.J., <span style="font-variant-caps:normal">and</span> E.J. Vytlacil</span> (2001): “Instrumental variables, selection models, and tight bounds on the average treatment effect” in ''Econometric Evaluation of Labour Market Policies'', ed. by M.Lechner, <span style="font-variant-caps:normal">and</span> F.Pfeiffer, pp. 1--15, Heidelberg. Physica-Verlag HD.</ref><ref name="hec:vyt05"><span style="font-variant-caps:small-caps">Heckman, J.J., <span style="font-variant-caps:normal">and</span> E.J. Vytlacil</span> (2005): “Structural Equations, Treatment Effects, and Econometric Policy Evaluation” ''Econometrica'', 73(3), 669--738.</ref>. | ||

For thorough discussions of the literature on program evaluation, I refer to the textbook treatments in <ref name="man95"></ref><ref name="man03" | For thorough discussions of the literature on program evaluation, I refer to the textbook treatments in <ref name="man95"><span style="font-variant-caps:small-caps">Manski, C.F.</span> (1995): ''Identification Problems in the Social Sciences''. Harvard University Press.</ref><ref name="man03"/><ref name="man07a"><span style="font-variant-caps:small-caps">Manski, C.F.</span> (2007a): ''Identification for Prediction and Decision''. Harvard University Press.</ref> and <ref name="imb:rub15"><span style="font-variant-caps:small-caps">Imbens, G.W., <span style="font-variant-caps:normal">and</span> D.B. Rubin</span> (2015): ''Causal Inference for Statistics, Social, and Biomedical Sciences: An Introduction''. Cambridge University Press.</ref>, to the Handbook chapters by <ref name="hec:vyt07I"><span style="font-variant-caps:small-caps">Heckman, J.J., <span style="font-variant-caps:normal">and</span> E.J. Vytlacil</span> (2007a): “Chapter 70 -- Econometric Evaluation of Social Programs, Part I: Causal Models, Structural Models and Econometric Policy Evaluation” in ''Handbook of Econometrics'', ed. by J.J. Heckman, <span style="font-variant-caps:normal">and</span> E.E. Leamer, vol.6, pp. 4779 -- 4874. Elsevier.</ref><ref name="hec:vyt07II"><span style="font-variant-caps:small-caps">Heckman, J.J., <span style="font-variant-caps:normal">and</span> E.J. Vytlacil</span> (2007b): “Chapter 71 -- Econometric Evaluation of Social Programs, Part II: Using the Marginal Treatment Effect to Organize Alternative Econometric Estimators to Evaluate Social Programs, and to Forecast their Effects in New Environments” in ''Handbook of Econometrics'', ed. by J.J. Heckman, <span style="font-variant-caps:normal">and</span> E.E. Leamer, vol.6, pp. 4875 -- 5143. 
Elsevier.</ref> and <ref name="abb:hec07"><span style="font-variant-caps:small-caps">Abbring, J.H., <span style="font-variant-caps:normal">and</span> J.J. Heckman</span> (2007): “Chapter 72 -- Econometric Evaluation of Social Programs, Part III: Distributional Treatment Effects, Dynamic Treatment Effects, Dynamic Discrete Choice, and General Equilibrium Policy Evaluation” in ''Handbook of Econometrics'', ed. by J.J. Heckman, <span style="font-variant-caps:normal">and</span> E.E. Leamer, vol.6, pp. 5145 -- 5303. Elsevier.</ref>, and to the review articles by <ref name="imb:woo09"><span style="font-variant-caps:small-caps">Imbens, G.W., <span style="font-variant-caps:normal">and</span> J.M. Wooldridge</span> (2009): “Recent Developments in the Econometrics of Program Evaluation” ''Journal of Economic Literature'', 47(1), 5--86.</ref> and <ref name="mog:tor18"><span style="font-variant-caps:small-caps">Mogstad, M., <span style="font-variant-caps:normal">and</span> A.Torgovitsky</span> (2018): “Identification and Extrapolation of Causal Effects with Instrumental Variables” ''Annual Review of Economics'', 10(1), 577--613.</ref>. | ||

Using standard notation (e.g., <ref name="ney23"></ref>), let <math>\ey:\T \mapsto \cY</math> be an individual-specific response function, with <math>\T=\{0,1,\dots,T\}</math> a finite set of mutually exclusive and exhaustive treatments, and let <math>\es</math> denote the individual's received treatment (taking its realizations in <math>\T</math>).<ref group="Notes" >Here the treatment response is a function only of the (scalar) treatment received by the given individual, an assumption known as ''stable unit treatment value assumption'' | |||

Using standard notation (e.g., <ref name="ney23"><span style="font-variant-caps:small-caps">Neyman, J.S.</span> (1923): “On the Application of Probability Theory to Agricultural Experiments. Essay on Principles. Section 9.” ''Roczniki Nauk Rolniczych'', X, 1--51, reprinted in ''Statistical Science'', 5(4), 465--472, translated and edited by D. M. Dabrowska and T. P. Speed from the Polish original.</ref>), let <math>\ey:\T \mapsto \cY</math> be an individual-specific response function, with <math>\T=\{0,1,\dots,T\}</math> a finite set of mutually exclusive and exhaustive treatments, and let <math>\es</math> denote the individual's received treatment (taking its realizations in <math>\T</math>).<ref group="Notes" >Here the treatment response is a function only of the (scalar) treatment received by the given individual, an assumption known as ''stable unit treatment value assumption'' {{ref|name=rub78}}.</ref> | |||

The researcher observes data <math>(\ey,\es,\ex)\sim\sP</math>, with <math>\ey\equiv\ey(\es)</math> the outcome corresponding to the received treatment <math>\es</math>, and <math>\ex</math> a vector of covariates. | The researcher observes data <math>(\ey,\es,\ex)\sim\sP</math>, with <math>\ey\equiv\ey(\es)</math> the outcome corresponding to the received treatment <math>\es</math>, and <math>\ex</math> a vector of covariates. | ||

The outcome <math>\ey(t)</math> for <math>\es\neq t</math> is counterfactual, and hence can be conceptualized as missing. | The outcome <math>\ey(t)</math> for <math>\es\neq t</math> is counterfactual, and hence can be conceptualized as missing. | ||

Therefore, we are in the framework of Identification [[#IP:bounds:mean:md |Problem]] and all the results from Section [[#subsec:missing_data |Selectively Observed Data]] apply in this context too, subject to adjustments in notation.<ref group="Notes" > | Therefore, we are in the framework of Identification [[#IP:bounds:mean:md |Problem]] and all the results from Section [[#subsec:missing_data |Selectively Observed Data]] apply in this context too, subject to adjustments in notation.<ref group="Notes" >{{ref|name=ber:mol:mol12}} and {{ref|name=mol:mol18}}{{rp|at=Section 2.5}} provide a characterization of the sharp identification region for the joint distribution of <math>[\ey(t),t\in\T]</math>.</ref> | ||

For example, using Theorem [[#SIR:prob:E:md |SIR-]], | For example, using Theorem [[#SIR:prob:E:md |SIR-]], | ||

| Line 431: | Line 431: | ||

The resulting bounds have width equal to <math>(y_1-y_0)[2-\sP(\es=t_1|\ex=x)-\sP(\es=t_0|\ex=x)]\in[(y_1-y_0),2(y_1-y_0)]</math>, and hence are informative only if both <math>y_0 > -\infty</math> and <math>y_1 < \infty</math>. | The resulting bounds have width equal to <math>(y_1-y_0)[2-\sP(\es=t_1|\ex=x)-\sP(\es=t_0|\ex=x)]\in[(y_1-y_0),2(y_1-y_0)]</math>, and hence are informative only if both <math>y_0 > -\infty</math> and <math>y_1 < \infty</math>. | ||

In the absence of information from data, the ATE can logically be no larger than <math>(y_1-y_0)</math> and no smaller than <math>-(y_1-y_0)</math>. Because the sharp bounds lie within this range and have width at least <math>(y_1-y_0)</math>, the sharp bounds on the ATE always cover zero. | In the absence of information from data, the ATE can logically be no larger than <math>(y_1-y_0)</math> and no smaller than <math>-(y_1-y_0)</math>. Because the sharp bounds lie within this range and have width at least <math>(y_1-y_0)</math>, the sharp bounds on the ATE always cover zero. | ||
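The width calculation above can be checked numerically. The following is a minimal sketch, not the chapter's own code: it assumes a single covariate cell (so conditioning on <math>\ex=x</math> is implicit), replaces population quantities with sample averages, and uses hypothetical function names and toy data.

```python
import numpy as np

def worst_case_bounds(y, s, t, y0, y1):
    """Worst-case bounds on E[y(t)]: keep y where s == t, impute y0 / y1 elsewhere."""
    y = np.asarray(y, dtype=float)
    s = np.asarray(s)
    lower = np.where(s == t, y, y0).mean()  # counterfactuals set to the smallest value
    upper = np.where(s == t, y, y1).mean()  # counterfactuals set to the largest value
    return lower, upper

def worst_case_ate_bounds(y, s, t1, t0, y0, y1):
    """Bounds on E[y(t1)] - E[y(t0)] built from the two sets of mean bounds."""
    lo1, up1 = worst_case_bounds(y, s, t1, y0, y1)
    lo0, up0 = worst_case_bounds(y, s, t0, y0, y1)
    return lo1 - up0, up1 - lo0

# Hypothetical toy data: binary outcome, half the sample receives each treatment.
y = np.array([1, 0, 1, 1, 0, 0, 1, 0])
s = np.array([1, 1, 1, 1, 0, 0, 0, 0])
ate_lo, ate_up = worst_case_ate_bounds(y, s, t1=1, t0=0, y0=0.0, y1=1.0)
width = ate_up - ate_lo
```

With these toy data the width equals <math>(y_1-y_0)[2-0.5-0.5]</math>, and the interval contains zero, as the argument in the text implies.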

How should one think about the finding on the size of the worst case bounds on the ATE? | '''Key Insight:''' <i>How should one think about the finding on the size of the worst case bounds on the ATE? | ||

On the one hand, if both <math>y_0 > -\infty</math> and <math>y_1 < \infty</math> the bounds are informative, because they are a strict subset of the ATE's possible realizations. | On the one hand, if both <math>y_0 > -\infty</math> and <math>y_1 < \infty</math> the bounds are informative, because they are a strict subset of the ATE's possible realizations. | ||

On the other hand, they reveal that the data alone are silent on the sign of the ATE. | On the other hand, they reveal that the data alone are silent on the sign of the ATE. | ||

This means that assumptions play a crucial role in delivering stronger conclusions about this policy relevant parameter. | This means that assumptions play a crucial role in delivering stronger conclusions about this policy relevant parameter. | ||

The partial identification approach to empirical research recommends that as assumptions are added to the analysis, one systematically reports how each contributes to shrinking the bounds, making transparent their role in shaping inference. | The partial identification approach to empirical research recommends that as assumptions are added to the analysis, one systematically reports how each contributes to shrinking the bounds, making transparent their role in shaping inference. </i> | ||

What assumptions may researchers bring to bear to learn more about treatment effects of interest? | What assumptions may researchers bring to bear to learn more about treatment effects of interest? | ||

The literature has provided a wide array of well motivated and useful restrictions. | The literature has provided a wide array of well motivated and useful restrictions. | ||

Here I consider two examples. | Here I consider two examples. | ||

The first one entails ''shape restrictions'' on the treatment response function, leaving selection unrestricted. | The first one entails ''shape restrictions'' on the treatment response function, leaving selection unrestricted. | ||

<ref name="man97:monotone"></ref> obtains bounds on treatment effects under the assumption that the response functions are monotone, semi-monotone, or concave-monotone. | <ref name="man97:monotone"><span style="font-variant-caps:small-caps">Manski, C.F.</span> (1997b): “Monotone Treatment Response” ''Econometrica'', 65(6), 1311--1334.</ref> obtains bounds on treatment effects under the assumption that the response functions are monotone, semi-monotone, or concave-monotone. | ||

These restrictions are motivated by economic theory, where it is commonly presumed, e.g., that demand functions are downward sloping and supply functions are upward sloping. | These restrictions are motivated by economic theory, where it is commonly presumed, e.g., that demand functions are downward sloping and supply functions are upward sloping. | ||

Let the set <math>\T</math> be ordered in terms of degree of intensity. | Let the set <math>\T</math> be ordered in terms of degree of intensity. | ||

Then <ref name="man97:monotone" | Then <ref name="man97:monotone"/>'s ''monotone treatment response'' assumption requires that | ||

<math display="block"> | <math display="block"> | ||

| Line 464: | Line 464: | ||

\end{align} | \end{align} | ||

</math> | </math> | ||

Hence, the sharp bounds on <math>\E_\sQ(\ey(t)|\ex=x)</math> are <ref name="man97:monotone" | Hence, the sharp bounds on <math>\E_\sQ(\ey(t)|\ex=x)</math> are <ref name="man97:monotone"/>{{rp|at=Proposition M1}} | ||

<math display="block"> | <math display="block"> | ||

| Line 475: | Line 475: | ||

Under the monotone treatment response assumption, the bounds on <math>\E_\sQ(\ey(t)|\ex=x)</math> are obtained using information from all <math>(\ey,\es)</math> pairs (given <math>\ex=x</math>), while the bounds in \eqref{eq:WCB:treat} only use the information provided by <math>(\ey,\es)</math> pairs for which <math>\es=t</math> (given <math>\ex=x</math>). | Under the monotone treatment response assumption, the bounds on <math>\E_\sQ(\ey(t)|\ex=x)</math> are obtained using information from all <math>(\ey,\es)</math> pairs (given <math>\ex=x</math>), while the bounds in \eqref{eq:WCB:treat} only use the information provided by <math>(\ey,\es)</math> pairs for which <math>\es=t</math> (given <math>\ex=x</math>). | ||

As a consequence, the bounds in \eqref{eq:MTR:treat} are informative even if <math>\sP(\es= t|\ex=x)=0</math>, whereas the worst case bounds are not. | As a consequence, the bounds in \eqref{eq:MTR:treat} are informative even if <math>\sP(\es= t|\ex=x)=0</math>, whereas the worst case bounds are not. | ||

Concerning the ATE with <math>t_1 > t_0</math>, under monotone treatment response its lower bound is zero, and its upper bound is obtained by subtracting the lower bound on <math>\E_\sQ(\ey(t_0)|\ex=x)</math> from the upper bound on <math>\E_\sQ(\ey(t_1)|\ex=x)</math>, where both bounds are obtained as in \eqref{eq:MTR:treat} <ref name="man97:monotone" | Concerning the ATE with <math>t_1 > t_0</math>, under monotone treatment response its lower bound is zero, and its upper bound is obtained by subtracting the lower bound on <math>\E_\sQ(\ey(t_0)|\ex=x)</math> from the upper bound on <math>\E_\sQ(\ey(t_1)|\ex=x)</math>, where both bounds are obtained as in \eqref{eq:MTR:treat} <ref name="man97:monotone"/>{{rp|at=Proposition M2}}. | ||
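To make the contrast with the worst-case bounds concrete, here is a minimal sketch of the monotone treatment response bounds. It assumes the weakly increasing form of the assumption (a higher treatment never lowers the outcome), a single covariate cell, and sample averages in place of population expectations; the function names and data are hypothetical.

```python
import numpy as np

def mtr_bounds(y, s, t, y0, y1):
    """Bounds on E[y(t)] when the response function is weakly increasing in t."""
    y = np.asarray(y, dtype=float)
    s = np.asarray(s)
    # If s <= t, then y(t) >= y(s) = y; otherwise only y(t) >= y0 is known.
    lower = np.where(s <= t, y, y0).mean()
    # If s >= t, then y(t) <= y(s) = y; otherwise only y(t) <= y1 is known.
    upper = np.where(s >= t, y, y1).mean()
    return lower, upper

def mtr_ate_bounds(y, s, t1, t0, y0, y1):
    """ATE bounds for t1 > t0: zero below, upper(t1) minus lower(t0) above."""
    _, up1 = mtr_bounds(y, s, t1, y0, y1)
    lo0, _ = mtr_bounds(y, s, t0, y0, y1)
    return 0.0, up1 - lo0

# Hypothetical toy data with three ordered treatment levels.
y = np.array([2, 3, 5, 4, 6, 8])
s = np.array([0, 0, 1, 1, 2, 2])
lo, up = mtr_bounds(y, s, t=1, y0=0.0, y1=10.0)
ate_lo, ate_up = mtr_ate_bounds(y, s, t1=2, t0=0, y0=0.0, y1=10.0)

# Nobody in this subsample receives t = 1, yet the bounds are still informative.
keep = s != 1
lo_unobs, up_unobs = mtr_bounds(y[keep], s[keep], t=1, y0=0.0, y1=10.0)
```

The last two lines illustrate the point made in the text: observations with <math>\es\neq t</math> still contribute, so the bounds remain strictly inside <math>[y_0,y_1]</math> even when no unit receives treatment <math>t</math>.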

The second example of assumptions used to tighten worst case bounds is that of ''exclusion restrictions'', as in, e.g., <ref name="man90"></ref>. | The second example of assumptions used to tighten worst case bounds is that of ''exclusion restrictions'', as in, e.g., <ref name="man90"><span style="font-variant-caps:small-caps">Manski, C.F.</span> (1990): “Nonparametric Bounds on Treatment Effects” ''The American Economic Review Papers and Proceedings'', 80(2), 319--323.</ref>. | ||

Suppose the researcher observes a random variable <math>\ez</math>, taking its realizations in <math>\cZ</math>, such that<ref group="Notes" >Stronger exclusion restrictions include statistical independence of the response function at each <math>t</math> with <math>\ez</math>: <math>\sQ(\ey(t)|\ez,\ex)=\sQ(\ey(t)|\ex)~\forall t \in\T,~\ex</math>-a.s.; and statistical independence of the entire response function with <math>\ez</math>: <math>\sQ([\ey(t),t \in\T]|\ez,\ex)=\sQ([\ey(t),t \in\T]|\ex),~\ex</math>-a.s. | Suppose the researcher observes a random variable <math>\ez</math>, taking its realizations in <math>\cZ</math>, such that<ref group="Notes" >Stronger exclusion restrictions include statistical independence of the response function at each <math>t</math> with <math>\ez</math>: <math>\sQ(\ey(t)|\ez,\ex)=\sQ(\ey(t)|\ex)~\forall t \in\T,~\ex</math>-a.s.; and statistical independence of the entire response function with <math>\ez</math>: <math>\sQ([\ey(t),t \in\T]|\ez,\ex)=\sQ([\ey(t),t \in\T]|\ex),~\ex</math>-a.s. | ||

Examples of partial identification analysis under these conditions can be found in | Examples of partial identification analysis under these conditions can be found in {{ref|name=bal:pea97}}, {{ref|name=man03}}, {{ref|name=kit09}}, {{ref|name=ber:mol:mol12}}, {{ref|name=mac:sha:vyt18}}, and many others.</ref> | ||

<math display="block"> | <math display="block"> | ||

| Line 500: | Line 500: | ||

If the instrument affects the probability of being selected into treatment, or the average outcome for the subpopulation receiving treatment <math>t</math>, the bounds on <math>\E_\sQ(\ey(t)|\ex=x)</math> shrink. | If the instrument affects the probability of being selected into treatment, or the average outcome for the subpopulation receiving treatment <math>t</math>, the bounds on <math>\E_\sQ(\ey(t)|\ex=x)</math> shrink. | ||

If the bounds are empty, the mean independence assumption can be refuted (see [[guide:7b0105e1fc#sec:misspec |Section]] for a discussion of misspecification in partial identification). | If the bounds are empty, the mean independence assumption can be refuted (see [[guide:7b0105e1fc#sec:misspec |Section]] for a discussion of misspecification in partial identification). | ||
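A minimal sketch of how a mean-independent instrument tightens the worst-case bounds follows. It assumes a discrete instrument, a single covariate cell, and sample analogues of the population quantities: the worst-case bounds are computed separately at each instrument value and then intersected. Names and data are hypothetical, and with sample data an empty intersection may reflect sampling noise rather than a refuted assumption.

```python
import numpy as np

def iv_mean_bounds(y, s, z, t, y0, y1):
    """Intersect worst-case bounds on E[y(t)] across the values of the instrument z."""
    y = np.asarray(y, dtype=float)
    s = np.asarray(s)
    z = np.asarray(z)
    lowers, uppers = [], []
    for v in np.unique(z):
        cell = z == v
        # Worst-case bounds within the subsample with z == v.
        lowers.append(np.where(s[cell] == t, y[cell], y0).mean())
        uppers.append(np.where(s[cell] == t, y[cell], y1).mean())
    lower, upper = max(lowers), min(uppers)
    is_empty = lower > upper  # the assumption is refuted (in population) if True
    return lower, upper, is_empty

# Hypothetical toy data: the instrument shifts the probability of receiving t = 1.
z = np.array([0, 0, 0, 0, 1, 1, 1, 1])
s = np.array([1, 0, 0, 0, 1, 1, 1, 0])
y = np.array([1, 0, 1, 0, 1, 1, 0, 0])
iv_lo, iv_up, iv_empty = iv_mean_bounds(y, s, z, t=1, y0=0.0, y1=1.0)
```

In this example the bounds are [0.25, 1] at one instrument value and [0.5, 0.75] at the other, so the intersection is the narrower second interval.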

<ref name="man:pep00"></ref><ref name="man:pep09"></ref> generalize the notion of instrumental variable to ''monotone'' instrumental variable, and show how these can be used to obtain tighter bounds on treatment effect parameters.<ref group="Notes" >See | <ref name="man:pep00"><span style="font-variant-caps:small-caps">Manski, C.F., <span style="font-variant-caps:normal">and</span> J.V. Pepper</span> (2000): “Monotone Instrumental Variables: With an Application to the Returns to Schooling” ''Econometrica'', 68(4), 997--1010.</ref><ref name="man:pep09"><span style="font-variant-caps:small-caps">Manski, C.F., <span style="font-variant-caps:normal">and</span> J.V. Pepper</span> (2009): “More on monotone instrumental variables” ''The Econometrics Journal'', 12(s1), S200--S216.</ref> generalize the notion of instrumental variable to ''monotone'' instrumental variable, and show how these can be used to obtain tighter bounds on treatment effect parameters.<ref group="Notes" >See {{ref|name=che:ros19}}{{rp|at=Chapter XXX in this Volume}} for further discussion.</ref> | ||

They also show how shape restrictions and exclusion restrictions can jointly further tighten the bounds. | They also show how shape restrictions and exclusion restrictions can jointly further tighten the bounds. | ||

<ref name="man13social"></ref> generalizes these findings to the case where treatment response may have social interactions -- that is, each individual's outcome depends on the treatment received by all other individuals. | <ref name="man13social"><span style="font-variant-caps:small-caps">Manski, C.F.</span> (2013a): “Identification of treatment response with social interactions” ''The Econometrics Journal'', 16(1), S1--S23.</ref> generalizes these findings to the case where treatment response may have social interactions -- that is, each individual's outcome depends on the treatment received by all other individuals. | ||

==<span id="subsec:interval_data"></span>Interval Data== | |||

Identification [[#IP:bounds:mean:md |Problem]], as well as the treatment evaluation problem in Section [[#subsec:programme:eval |Treatment Effects with and without Instrumental Variables]], is an instance of the more general question of what can be learned about (functionals of) probability distributions of interest, in the presence of interval valued outcome and/or covariate data. | Identification [[#IP:bounds:mean:md |Problem]], as well as the treatment evaluation problem in Section [[#subsec:programme:eval |Treatment Effects with and without Instrumental Variables]], is an instance of the more general question of what can be learned about (functionals of) probability distributions of interest, in the presence of interval valued outcome and/or covariate data. | ||

Such data have become commonplace in Economics. | Such data have become commonplace in Economics. | ||

For example, since the early 1990s the Health and Retirement Study has collected income data from survey respondents in the form of brackets, with degenerate (singleton) intervals for individuals who opt to fully reveal their income (see, e.g., <ref name="jus:suz95"></ref>). | For example, since the early 1990s the Health and Retirement Study has collected income data from survey respondents in the form of brackets, with degenerate (singleton) intervals for individuals who opt to fully reveal their income (see, e.g., <ref name="jus:suz95"><span style="font-variant-caps:small-caps">Juster, F.T., <span style="font-variant-caps:normal">and</span> R.Suzman</span> (1995): “An Overview of the Health and Retirement Study” ''Journal of Human Resources'', 30 (Supplement), S7--S56.</ref>). | ||

Due to concerns for privacy, public use tax data are recorded as the number of taxpayers who belong to each of a finite number of cells (see, e.g., <ref name="pic05"></ref>). | Due to concerns for privacy, public use tax data are recorded as the number of taxpayers who belong to each of a finite number of cells (see, e.g., <ref name="pic05"><span style="font-variant-caps:small-caps">Piketty, T.</span> (2005): “Top Income Shares in the Long Run: An Overview” ''Journal of the European Economic Association'', 3, 382--392.</ref>). | ||

The Occupational Employment Statistics (OES) program at the Bureau of Labor Statistics <ref name="BLS"></ref> collects wage data from employers as intervals, and uses these data to construct estimates | The Occupational Employment Statistics (OES) program at the Bureau of Labor Statistics <ref name="BLS"><span style="font-variant-caps:small-caps">{Bureau of Labor Statistics}</span> (2018): “Occupational Employment Statistics” U.S. Department of Labor, available online at [www.bls.gov/oes/ www.bls.gov/oes/]; accessed 1/28/2018.</ref> collects wage data from employers as intervals, and uses these data to construct estimates | ||

for wage and salary workers in more than 800 detailed occupations. | for wage and salary workers in more than 800 detailed occupations. | ||

<ref name="man:mol10"></ref> and <ref name="giu:man:mol19round"></ref> document the extensive prevalence of rounding in survey responses to probabilistic expectation questions, and propose to use a person's response pattern across different questions to infer his rounding practice, the result being interpretation of reported numerical values as interval data. | <ref name="man:mol10"><span style="font-variant-caps:small-caps">Manski, C.F., <span style="font-variant-caps:normal">and</span> F.Molinari</span> (2010): “Rounding Probabilistic Expectations in Surveys” ''Journal of Business and Economic Statistics'', 28(2), 219--231.</ref> and <ref name="giu:man:mol19round"><span style="font-variant-caps:small-caps">Giustinelli, P., C.F. Manski, <span style="font-variant-caps:normal">and</span> F.Molinari</span> (2019b): “Tail and Center Rounding of Probabilistic Expectations in the Health and Retirement Study” available at [http://faculty.wcas.northwestern.edu/cfm754/gmm_rounding.pdf http://faculty.wcas.northwestern.edu/cfm754/gmm_rounding.pdf].</ref> document the extensive prevalence of rounding in survey responses to probabilistic expectation questions, and propose to use a person's response pattern across different questions to infer his rounding practice, the result being interpretation of reported numerical values as interval data. | ||

Other instances abound. | Other instances abound. | ||

Here I focus first on the case of interval outcome data. | Here I focus first on the case of interval outcome data. | ||

{{proofcard|Identification Problem (Interval Outcome Data)|IP:interval_outcome|Assume that in addition to being compact, either <math>\cY</math> is countable or <math>\cY=[y_0,y_1]</math>, with <math>y_0=\min_{y\in\cY}y</math> and <math>y_1=\max_{y\in\cY}y</math>. | |||

Let <math>(\yL,\yU,\ex)\sim\sP</math> be observable random variables and <math>\ey</math> be an unobservable random variable whose distribution (or features thereof) is of interest, with <math>\yL,\yU,\ey\in\cY</math>. | Let <math>(\yL,\yU,\ex)\sim\sP</math> be observable random variables and <math>\ey</math> be an unobservable random variable whose distribution (or features thereof) is of interest, with <math>\yL,\yU,\ey\in\cY</math>. | ||

Suppose that <math>(\yL,\yU,\ey)</math> are such that <math>\sR(\yL\le\ey\le\yU)=1</math>.<ref group="Notes" > | Suppose that <math>(\yL,\yU,\ey)</math> are such that <math>\sR(\yL\le\ey\le\yU)=1</math>.<ref group="Notes" ><span id="fn:missing_special_case_interval"/>In Identification [[#IP:bounds:mean:md |Problem]] the observable variables are <math>(\ey\ed,\ed,\ex)</math>, and <math>(\yL,\yU)</math> are determined as follows: <math>\yL=\ey\ed+y_0(1-\ed)</math>, <math>\yU=\ey\ed+y_1(1-\ed)</math>. For the analysis in Section [[#subsec:programme:eval |Treatment Effects with and without Instrumental Variables]], the data is <math>(\ey,\es,\ex)</math> and <math>\yL=\ey\one(\es=t)+y_0\one(\es\ne t)</math>, <math>\yU=\ey\one(\es=t)+y_1\one(\es\ne t)</math>. | ||

Hence, <math>\sP(\yL\le\ey\le\yU)=1</math> by construction.</ref> | Hence, <math>\sP(\yL\le\ey\le\yU)=1</math> by construction.</ref> | ||

In the absence of additional information, what can the researcher learn about features of <math>\sQ(\ey|\ex=x)</math>, the conditional distribution of <math>\ey</math> given <math>\ex=x</math>? | In the absence of additional information, what can the researcher learn about features of <math>\sQ(\ey|\ex=x)</math>, the conditional distribution of <math>\ey</math> given <math>\ex=x</math>? | ||

|}} | |||
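As a minimal sketch of this setup, the code below builds the interval endpoints for the missing-data special case described in the note above and averages them to bound the mean of <math>\ey</math>. It assumes a single covariate cell and sample averages in place of expectations; the function names and data are hypothetical.

```python
import numpy as np

def interval_from_missing(y, d, y0, y1):
    """Endpoints (yL, yU): the observed y when d == 1, the logical range [y0, y1] when d == 0."""
    y = np.asarray(y, dtype=float)
    d = np.asarray(d)
    yL = np.where(d == 1, y, y0)
    yU = np.where(d == 1, y, y1)
    return yL, yU

def interval_mean_bounds(yL, yU):
    """Bounds on the mean of y implied by yL <= y <= yU: average each endpoint."""
    return float(np.mean(yL)), float(np.mean(yU))

# Hypothetical toy data: two observed outcomes, two missing (recorded as NaN).
y = np.array([0.2, np.nan, 0.8, np.nan])
d = np.array([1, 0, 1, 0])
yL, yU = interval_from_missing(y, d, y0=0.0, y1=1.0)
mean_lo, mean_up = interval_mean_bounds(yL, yU)
```

The same two functions apply unchanged to genuinely bracketed data, where <math>(\yL,\yU)</math> are recorded directly rather than constructed.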

It is immediate to obtain the sharp identification region | It is immediate to obtain the sharp identification region | ||

| Line 549: | Line 550: | ||

\end{align*} | \end{align*} | ||

</math> | </math> | ||

Then <math>\eY</math> is a random closed set according to [[guide:379e0dcd67#def:rcs |Definition]].<ref group="Notes" >For a proof of this statement, see | Then <math>\eY</math> is a random closed set according to [[guide:379e0dcd67#def:rcs |Definition]].<ref group="Notes" >For a proof of this statement, see {{ref|name=mol:mol18}}{{rp|at=Example 1.11}}.</ref> The requirement <math>\sR(\yL\le\ey\le\yU)=1</math> can be equivalently expressed as | ||

<math display="block"> | <math display="block"> | ||

| Line 558: | Line 559: | ||

</math> | </math> | ||

Equation \eqref{eq:y_in_Y}, together with knowledge of <math>\sP</math>, exhausts all the information in the data and maintained assumptions. | Equation \eqref{eq:y_in_Y}, together with knowledge of <math>\sP</math>, exhausts all the information in the data and maintained assumptions. | ||

In order to harness such information to characterize the set of observationally equivalent probability distributions for <math>\ey</math>, one can leverage a result due to <ref name="art83"></ref> (and <ref name="nor92"></ref>), reported in [[guide:379e0dcd67#thr:artstein |Theorem]] in [[guide:379e0dcd67#app:RCS |Appendix]], which allows one to translate \eqref{eq:y_in_Y} into a collection of conditional moment inequalities. | In order to harness such information to characterize the set of observationally equivalent probability distributions for <math>\ey</math>, one can leverage a result due to <ref name="art83"><span style="font-variant-caps:small-caps">Artstein, Z.</span> (1983): “Distributions of random sets and random selections” ''Israel Journal of Mathematics'', 46, 313--324.</ref> (and <ref name="nor92"><span style="font-variant-caps:small-caps">Norberg, T.</span> (1992): “On the existence of ordered couplings of random sets --- with applications” ''Israel Journal of Mathematics'', 77, 241--264.</ref>), reported in [[guide:379e0dcd67#thr:artstein |Theorem]] in [[guide:379e0dcd67#app:RCS |Appendix]], which allows one to translate \eqref{eq:y_in_Y} into a collection of conditional moment inequalities. | ||

Specifically, let <math>\cT</math> denote the space of all probability measures with support in <math>\cY</math>. | Specifically, let <math>\cT</math> denote the space of all probability measures with support in <math>\cY</math>. | ||

{{proofcard|Theorem (Conditional Distribution of Interval-Observed Outcome Data)|SIR:CDF_id| | |||

Given <math>\tau\in\cT</math>, let <math>\tau_K(x)</math> denote the probability that distribution <math>\tau</math> assigns to set <math>K</math> conditional on <math>\ex=x</math>. | Given <math>\tau\in\cT</math>, let <math>\tau_K(x)</math> denote the probability that distribution <math>\tau</math> assigns to set <math>K</math> conditional on <math>\ex=x</math>. | ||

Under the assumptions in Identification [[#IP:interval_outcome |Problem]], the sharp identification region for <math>\sQ(\ey|\ex=x)</math> is | Under the assumptions in Identification [[#IP:interval_outcome |Problem]], the sharp identification region for <math>\sQ(\ey|\ex=x)</math> is | ||

| Line 577: | Line 577: | ||

\end{align} | \end{align} | ||

</math> | </math> | ||

| | |||

[[guide:379e0dcd67#thr:artstein |Theorem]] yields \eqref{eq:sharp_id_P_interval_1}. | [[guide:379e0dcd67#thr:artstein |Theorem]] yields \eqref{eq:sharp_id_P_interval_1}. | ||

If <math>\cY=[y_0,y_1]</math>, <ref name="mol:mol18" | If <math>\cY=[y_0,y_1]</math>, <ref name="mol:mol18"/>{{rp|at=Theorem 2.25}} show that it suffices to verify the inequalities in \eqref{eq:sharp_id_P_interval_2} for sets <math>K</math> that are intervals. | ||

}} | |||

Compare equation \eqref{eq:sharp_id_P_interval_1} with equation \eqref{eq:sharp_id_P_md_Manski}. | Compare equation \eqref{eq:sharp_id_P_interval_1} with equation \eqref{eq:sharp_id_P_md_Manski}. | ||

Under the set-up of Identification [[#IP:bounds:mean:md |Problem]], when <math>\ed=1</math> we have <math>\eY=\{\ey\}</math> and when <math>\ed=0</math> we have <math>\eY=\cY</math>. | Under the set-up of Identification [[#IP:bounds:mean:md |Problem]], when <math>\ed=1</math> we have <math>\eY=\{\ey\}</math> and when <math>\ed=0</math> we have <math>\eY=\cY</math>. | ||

Hence, for any <math>K \subsetneq \cY</math>, <math>\sP(\eY \subset K|\ex=x)=\sP(\ey\in K|\ex=x,\ed=1)\sP(\ed=1|\ex=x)</math>.<ref group="Notes" >For <math>K = \cY</math>, both \eqref{eq:sharp_id_P_interval_1} and \eqref{eq:sharp_id_P_md_Manski} hold trivially.</ref> | Hence, for any <math>K \subsetneq \cY</math>, <math>\sP(\eY \subset K|\ex=x)=\sP(\ey\in K|\ex=x,\ed=1)\sP(\ed=1|\ex=x)</math>.<ref group="Notes" >For <math>K = \cY</math>, both \eqref{eq:sharp_id_P_interval_1} and \eqref{eq:sharp_id_P_md_Manski} hold trivially.</ref> | ||

It follows that the characterizations in \eqref{eq:sharp_id_P_interval_1} and \eqref{eq:sharp_id_P_md_Manski} are equivalent. | It follows that the characterizations in \eqref{eq:sharp_id_P_interval_1} and \eqref{eq:sharp_id_P_md_Manski} are equivalent. | ||

If <math>\cY</math> is countable, it is easy to show that \eqref{eq:sharp_id_P_interval_1} simplifies to \eqref{eq:sharp_id_P_md_Manski} (see, e.g., <ref name="ber:mol:mol12"></ref>{{rp|at=Proposition 2.2}}). | If <math>\cY</math> is countable, it is easy to show that \eqref{eq:sharp_id_P_interval_1} simplifies to \eqref{eq:sharp_id_P_md_Manski} (see, e.g., <ref name="ber:mol:mol12"><span style="font-variant-caps:small-caps">Beresteanu, A., I.Molchanov, <span style="font-variant-caps:normal">and</span> F.Molinari</span> (2012): “Partial identification using random set theory” ''Journal of Econometrics'', 166(1), 17 -- 32, with errata at [https://molinari.economics.cornell.edu/docs/NOTE_BMM2012_v3.pdf https://molinari.economics.cornell.edu/docs/NOTE_BMM2012_v3.pdf].</ref>{{rp|at=Proposition 2.2}}). | ||
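For a small finite <math>\cY</math>, the inequalities <math>\tau_K(x)\ge\sP(\eY\subset K|\ex=x)</math> can be verified by brute force. The sketch below is illustrative only: it assumes a single covariate cell, treats the empirical distribution of the observed intervals as <math>\sP</math>, and enumerates every nonempty subset <math>K</math>, which is feasible only for very small supports. All names and data are hypothetical.

```python
from itertools import combinations

def satisfies_containment(intervals, support, tau, tol=1e-12):
    """Return True if tau(K) >= P(Y subset K) for every nonempty K within the support."""
    n = len(intervals)
    for size in range(1, len(support) + 1):
        for combo in combinations(support, size):
            K = set(combo)
            tau_K = sum(tau[v] for v in K)
            # Share of observed intervals whose support points all lie in K.
            contained = sum(
                all(v in K for v in support if lo <= v <= hi)
                for lo, hi in intervals
            ) / n
            if tau_K < contained - tol:
                return False
    return True

# Hypothetical toy data: four equally likely intervals on the support {0, 1, 2}.
support = [0, 1, 2]
intervals = [(0, 0), (0, 1), (1, 2), (2, 2)]

# Obtained by selecting 0 from [0, 1] and 2 from [1, 2]: should be accepted.
tau_selection = {0: 0.5, 1: 0.0, 2: 0.5}
# Puts too little mass on {0}, which contains an interval with probability 1/4.
tau_rejected = {0: 0.1, 1: 0.8, 2: 0.1}

ok_selection = satisfies_containment(intervals, support, tau_selection)
ok_rejected = satisfies_containment(intervals, support, tau_rejected)
```

The number of subsets grows exponentially in the size of the support, which is one reason results that restrict attention to a smaller class of sets, such as intervals when <math>\cY=[y_0,y_1]</math>, matter in practice.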

'''Key Insight (Random set theory and partial identification):'''<span id="big_idea:pi_and_rs"/><i>The mathematical framework for the analysis of random closed sets embodied in random set theory is naturally suited to conduct identification analysis and statistical inference in partially identified models. | |||

The mathematical framework for the analysis of random closed sets embodied in random set theory is naturally suited to conduct identification analysis and statistical inference in partially identified models. | This is because, as argued by <ref name="ber:mol08"><span style="font-variant-caps:small-caps">Beresteanu, A., <span style="font-variant-caps:normal">and</span> F.Molinari</span> (2008): “Asymptotic Properties for a Class of Partially Identified Models” ''Econometrica'', 76(4), 763--814.</ref> and <ref name="ber:mol:mol11"><span style="font-variant-caps:small-caps">Beresteanu, A., I.Molchanov, <span style="font-variant-caps:normal">and</span> F.Molinari</span> (2011): “Sharp identification regions in models with convex moment predictions” ''Econometrica'', 79(6), 1785--1821.</ref><ref name="ber:mol:mol12"/>, lack of point identification can often be traced back to a collection of random variables that are consistent with the available data and maintained assumptions. | ||

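This is because, as argued by <ref name="ber:mol08"></ref> and <ref name="ber:mol:mol11"></ref><ref name="ber:mol:mol12"/>, lack of point identification can often be traced back to a collection of random variables that are consistent with the available data and maintained assumptions. | |||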

In turn, this collection of random variables is equal to the family of selections of a properly specified random closed set, so that random set theory applies. | In turn, this collection of random variables is equal to the family of selections of a properly specified random closed set, so that random set theory applies. | ||

The interval data case is a simple example that illustrates this point. | The interval data case is a simple example that illustrates this point. | ||

More examples are given throughout this chapter. | More examples are given throughout this chapter. | ||

As mentioned in the Introduction, the exercise of defining the random closed set that is relevant for the problem under consideration is routinely carried out in partial identification analysis, even when random set theory is not applied. | As mentioned in the Introduction, the exercise of defining the random closed set that is relevant for the problem under consideration is routinely carried out in partial identification analysis, even when random set theory is not applied. | ||

For example, in the case of treatment effect analysis with monotone response function, <ref name="man97:monotone"/> derived the set in the right-hand-side of \eqref{eq:RCS:MTR}, which satisfies Definition [[guide:379e0dcd67#def:rcs |def:rcs]]. | For example, in the case of treatment effect analysis with monotone response function, <ref name="man97:monotone"/> derived the set in the right-hand-side of \eqref{eq:RCS:MTR}, which satisfies Definition [[guide:379e0dcd67#def:rcs |def:rcs]].</i> | ||

An attractive feature of the characterization in \eqref{eq:sharp_id_P_interval_1} is that it holds regardless of the specific assumptions on <math>\yL,\,\yU</math>, and <math>\cY</math>. | An attractive feature of the characterization in \eqref{eq:sharp_id_P_interval_1} is that it holds regardless of the specific assumptions on <math>\yL,\,\yU</math>, and <math>\cY</math>. | ||

Later sections in this chapter illustrate how [[guide:379e0dcd67#thr:artstein |Theorem]] delivers the sharp identification region in other more complex instances of partial identification of probability distributions, as well as in structural models. | Later sections in this chapter illustrate how [[guide:379e0dcd67#thr:artstein |Theorem]] delivers the sharp identification region in other more complex instances of partial identification of probability distributions, as well as in structural models. | ||

In Chapter '''XXX''' in this Volume, <ref name="che:ros19"></ref> apply [[guide:379e0dcd67#thr:artstein |Theorem]] to obtain sharp identification regions for functionals of interest in the important class of ''generalized instrumental variable models''. To avoid repetitions, I do not systematically discuss that class of models in this chapter. | In Chapter '''XXX''' in this Volume, <ref name="che:ros19"><span style="font-variant-caps:small-caps">Chesher, A., <span style="font-variant-caps:normal">and</span> A.M. Rosen</span> (2019): “Generalized instrumental variable models, methods, and applications” in ''Handbook of Econometrics''. Elsevier.</ref> apply [[guide:379e0dcd67#thr:artstein |Theorem]] to obtain sharp identification regions for functionals of interest in the important class of ''generalized instrumental variable models''. To avoid repetitions, I do not systematically discuss that class of models in this chapter. | ||

When addressing questions about features of <math>\sQ(\ey|\ex=x)</math> in the presence of interval outcome data, an alternative approach (e.g. <ref name="tam10"></ref><ref name="pon:tam11"></ref>) looks at all (random) mixtures of <math>\yL,\yU</math>. | When addressing questions about features of <math>\sQ(\ey|\ex=x)</math> in the presence of interval outcome data, an alternative approach (e.g. <ref name="tam10"><span style="font-variant-caps:small-caps">Tamer, E.</span> (2010): “Partial Identification in Econometrics” ''Annual Review of Economics'', 2, 167--195.</ref><ref name="pon:tam11"><span style="font-variant-caps:small-caps">Ponomareva, M., <span style="font-variant-caps:normal">and</span> E.Tamer</span> (2011): “Misspecification in moment inequality models: back to moment equalities?” ''The Econometrics Journal'', 14(2), 186--203.</ref>) looks at all (random) mixtures of <math>\yL,\yU</math>. | ||

The approach is based on a random variable <math>\eu</math> (a ''selection mechanism'' that picks an element of <math>\eY</math>) with values in <math>[0,1]</math>, whose distribution conditional on <math>\yL,\yU</math> is left completely unspecified. | The approach is based on a random variable <math>\eu</math> (a ''selection mechanism'' that picks an element of <math>\eY</math>) with values in <math>[0,1]</math>, whose distribution conditional on <math>\yL,\yU</math> is left completely unspecified. | ||

Using this random variable, one defines | Using this random variable, one defines | ||

| Line 612: | Line 611: | ||

This is because each <math>\ey_\eu</math> is a (stochastic) convex combination of <math>\yL,\yU</math>, hence each of these random variables satisfies <math>\sR(\yL\le\ey_\eu\le\yU)=1</math>. | This is because each <math>\ey_\eu</math> is a (stochastic) convex combination of <math>\yL,\yU</math>, hence each of these random variables satisfies <math>\sR(\yL\le\ey_\eu\le\yU)=1</math>. | ||

While this characterization is sharp, it can be difficult to implement in practice, because it requires working with all possible random variables <math>\ey_\eu</math> built using all possible random variables <math>\eu</math> with support in <math>[0,1]</math>. | While this characterization is sharp, it can be difficult to implement in practice, because it requires working with all possible random variables <math>\ey_\eu</math> built using all possible random variables <math>\eu</math> with support in <math>[0,1]</math>. | ||

[[guide:379e0dcd67#thr:artstein |Theorem]] allows one to bypass the use of <math>\eu</math>, and obtain directly a characterization of the sharp identification region for <math>\sQ(\ey|\ex=x)</math> based on conditional moment inequalities.<ref group="Notes" >It can be shown that the collection of random variables <math>\ey_\eu</math> equals the collection of ''measurable selections'' of the random closed set <math>\eY\equiv [\yL,\yU]</math> (see [[guide:379e0dcd67#def:selection |Definition]]); see | [[guide:379e0dcd67#thr:artstein |Theorem]] allows one to bypass the use of <math>\eu</math>, and obtain directly a characterization of the sharp identification region for <math>\sQ(\ey|\ex=x)</math> based on conditional moment inequalities.<ref group="Notes" >It can be shown that the collection of random variables <math>\ey_\eu</math> equals the collection of ''measurable selections'' of the random closed set <math>\eY\equiv [\yL,\yU]</math> (see [[guide:379e0dcd67#def:selection |Definition]]); see {{ref|name=ber:mol:mol11}}{{rp|at=Lemma 2.1}}. | ||

[[guide:379e0dcd67#thr:artstein |Theorem]] provides a characterization of the distribution of any <math>\ey_\eu</math> that satisfies <math>\ey_\eu \in \eY</math> a.s., based on a dominance condition that relates the distribution of <math>\ey_\eu</math> to the distribution of the random set <math>\eY</math>. | [[guide:379e0dcd67#thr:artstein |Theorem]] provides a characterization of the distribution of any <math>\ey_\eu</math> that satisfies <math>\ey_\eu \in \eY</math> a.s., based on a dominance condition that relates the distribution of <math>\ey_\eu</math> to the distribution of the random set <math>\eY</math>. | ||

Such dominance condition is given by the inequalities in \eqref{eq:sharp_id_P_interval_1}. | Such dominance condition is given by the inequalities in \eqref{eq:sharp_id_P_interval_1}. | ||

</ref> | </ref> | ||
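To make the mixture construction concrete, the sketch below simulates a few selection mechanisms. It assumes that the definition elided above takes the convex-combination form <math>\ey_\eu=\eu\yL+(1-\eu)\yU</math>; the function names, the simulated intervals, and the three mechanisms are hypothetical and chosen only for illustration.

```python
import random


def mixture(y_lo, y_hi, u):
    """Convex combination u * y_lo + (1 - u) * y_hi, with u in [0, 1]."""
    if not 0.0 <= u <= 1.0:
        raise ValueError("u must lie in [0, 1]")
    return u * y_lo + (1.0 - u) * y_hi


def simulate(n, seed=0):
    """Draw n intervals [y_lo, y_hi] and apply three hypothetical selection mechanisms."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        y_lo = rng.uniform(0.0, 1.0)
        y_hi = y_lo + rng.uniform(0.0, 1.0)
        # A mechanism whose distribution depends on (y_lo, y_hi): nothing rules this out.
        u_dependent = 1.0 if y_hi - y_lo > 0.5 else rng.random()
        draws.append({
            "y_lo": y_lo,
            "y_hi": y_hi,
            "always_upper": mixture(y_lo, y_hi, 0.0),  # u = 0 picks y_hi
            "always_lower": mixture(y_lo, y_hi, 1.0),  # u = 1 picks y_lo
            "dependent": mixture(y_lo, y_hi, u_dependent),
        })
    return draws
```

Every mechanism produces a random variable that lies in <math>[\yL,\yU]</math> with probability one, but the three imply different distributions for <math>\ey_\eu</math>. Enumerating all such mechanisms is infeasible, which is the practical difficulty that the moment-inequality characterization avoids.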

<ref name="hor:man98"></ref><ref name="hor:man00"></ref> study nonparametric conditional prediction problems with missing outcome and/or missing covariate data. | <ref name="hor:man98"><span style="font-variant-caps:small-caps">Horowitz, J.L., <span style="font-variant-caps:normal">and</span> C.F. Manski</span> (1998): “Censoring of outcomes and regressors due to survey nonresponse: Identification and estimation using weights and imputations” ''Journal of Econometrics'', 84(1), 37 -- 58.</ref><ref name="hor:man00"><span style="font-variant-caps:small-caps">Horowitz, J.L., <span style="font-variant-caps:normal">and</span> C.F. Manski</span> (2000): “Nonparametric Analysis of Randomized Experiments with Missing Covariate and Outcome Data” ''Journal of the American Statistical Association'', 95(449), 77--84.</ref> study nonparametric conditional prediction problems with missing outcome and/or missing covariate data. | ||

Their analysis shows that this problem is considerably more pernicious than the case where only outcome data are missing. | Their analysis shows that this problem is considerably more pernicious than the case where only outcome data are missing. | ||

For the case of interval covariate data, <ref name="man:tam02"></ref> provide a set of sufficient conditions under which simple and elegant sharp bounds on functionals of <math>\sQ(\ey|\ex)</math> can be obtained, even in this substantially harder identification problem. | For the case of interval covariate data, <ref name="man:tam02"><span style="font-variant-caps:small-caps">Manski, C.F., <span style="font-variant-caps:normal">and</span> E.Tamer</span> (2002): “Inference on Regressions with Interval Data on a Regressor or Outcome” ''Econometrica'', 70(2), 519--546.</ref> provide a set of sufficient conditions under which simple and elegant sharp bounds on functionals of <math>\sQ(\ey|\ex)</math> can be obtained, even in this substantially harder identification problem. | ||

Their assumptions are listed in Identification [[#IP:interval_covariate |Problem]], and their result (with proof) in Theorem [[#SIR:man:tam:nonpar |SIR-]]. | Their assumptions are listed in Identification [[#IP:interval_covariate |Problem]], and their result (with proof) in Theorem [[#SIR:man:tam:nonpar |SIR-]]. | ||

{{proofcard|Identification Problem (Interval Covariate Data)|IP:interval_covariate| | |||

Let <math>(\ey,\xL,\xU)\sim\sP</math> be observable random variables in <math>\R\times\R\times\R</math> and <math>\ex\in\R</math> be an unobservable random variable. | Let <math>(\ey,\xL,\xU)\sim\sP</math> be observable random variables in <math>\R\times\R\times\R</math> and <math>\ex\in\R</math> be an unobservable random variable. | ||

Suppose that <math>\sR</math>, the joint distribution of <math>(\ey,\ex,\xL,\xU)</math>, is such that: (I) <math>\sR(\xL\le\ex\le\xU)=1</math>; (M) <math>\E_\sQ(\ey|\ex=x)</math> is weakly increasing in <math>x</math>; and (MI) <math>\E_{\sR}(\ey|\ex,\xL,\xU)=\E_\sQ(\ey|\ex)</math>. | Suppose that <math>\sR</math>, the joint distribution of <math>(\ey,\ex,\xL,\xU)</math>, is such that: (I) <math>\sR(\xL\le\ex\le\xU)=1</math>; (M) <math>\E_\sQ(\ey|\ex=x)</math> is weakly increasing in <math>x</math>; and (MI) <math>\E_{\sR}(\ey|\ex,\xL,\xU)=\E_\sQ(\ey|\ex)</math>. | ||

In the absence of additional information, what can the researcher learn about <math>\E_\sQ(\ey|\ex=x)</math> for given <math>x\in\cX</math>? | In the absence of additional information, what can the researcher learn about <math>\E_\sQ(\ey|\ex=x)</math> for given <math>x\in\cX</math>? | ||

|}} | |||

Compared to the earlier discussion for the interval outcome case, here there are two additional assumptions. | Compared to the earlier discussion for the interval outcome case, here there are two additional assumptions. | ||

The monotonicity condition (M) is a simple shape restriction, which however requires some prior knowledge about the joint distribution of <math>(\ey,\ex)</math>. | The monotonicity condition (M) is a simple shape restriction, which however requires some prior knowledge about the joint distribution of <math>(\ey,\ex)</math>. | ||

The mean independence restriction (MI) requires that if <math>\ex</math> were observed, knowledge of <math>(\xL,\xU)</math> would not affect the conditional expectation of <math>\ey|\ex</math>. | The mean independence restriction (MI) requires that if <math>\ex</math> were observed, knowledge of <math>(\xL,\xU)</math> would not affect the conditional expectation of <math>\ey|\ex</math>. | ||

The assumption is not innocuous, as pointed out by the authors. | The assumption is not innocuous, as pointed out by the authors. | ||

For example, it may fail if censoring is endogenous.<ref group="Notes" > | For example, it may fail if censoring is endogenous.<ref group="Notes" ><span id="foot:auc:bug:hot17"/>For the case of missing covariate data, which is a special case of interval covariate data similarly to arguments in [[#fn:missing_special_case_interval |footnote]], {{ref|name=auc:bug:hot17}} show that the MI restriction implies the assumption that data is missing at random.</ref> | ||
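Under (I), (M), and (MI), bounds on <math>\E_\sQ(\ey|\ex=x)</math> can be computed from the observable bracket means <math>\E_\sP(\ey|\xL,\xU)</math>. The sketch below assumes the bounds take the form usually attributed to <ref name="man:tam02"/>: the lower bound is the largest bracket mean among brackets lying entirely at or below <math>x</math> (<math>\xU\le x</math>), and the upper bound is the smallest bracket mean among brackets lying entirely at or above <math>x</math> (<math>\xL\ge x</math>). This form is an assumption of the sketch and should be checked against the theorem that follows; the function name <code>mt_bounds</code> and the data are hypothetical, and brackets are treated as finitely many discrete cells.

```python
import math
from collections import defaultdict


def mt_bounds(data, x):
    """Bounds on E(y | x) from (y, x_lo, x_hi) triples, under assumed (I), (M), (MI).

    Assumed form: lower = max of E(y | x_lo, x_hi) over brackets with x_hi <= x,
                  upper = min of E(y | x_lo, x_hi) over brackets with x_lo >= x.
    """
    totals = defaultdict(lambda: [0.0, 0])  # bracket -> [sum of y, count]
    for y, x_lo, x_hi in data:
        cell = totals[(x_lo, x_hi)]
        cell[0] += y
        cell[1] += 1
    means = {bracket: s / n for bracket, (s, n) in totals.items()}

    # With no qualifying bracket, the corresponding bound is uninformative.
    lower = max((m for (lo, hi), m in means.items() if hi <= x), default=-math.inf)
    upper = min((m for (lo, hi), m in means.items() if lo >= x), default=math.inf)
    return lower, upper


# Illustrative data (assumed): three brackets with means 1.0, 2.0 and 3.0.
data = [(0.5, 0, 10), (1.5, 0, 10), (2.0, 10, 20), (3.0, 20, 30)]
print(mt_bounds(data, 15))
```

The bracket containing <math>x</math> in its interior contributes to neither bound, because (M) alone cannot order its mean relative to <math>\E_\sQ(\ey|\ex=x)</math>; only brackets known to lie on one side of <math>x</math> are informative.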

{{proofcard|Theorem (Conditional Expectation with Interval-Observed Covariate Data)|SIR:man:tam:nonpar| | |||