guide:52e01d4de7: Difference between revisions

No edit summary |

mNo edit summary |

||

| (5 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

<div class="d-none"><math> | |||

\newcommand{\NA}{{\rm NA}} | |||

\newcommand{\mat}[1]{{\bf#1}} | |||

\newcommand{\exref}[1]{\ref{##1}} | |||

\newcommand{\secstoprocess}{\all} | |||

\newcommand{\NA}{{\rm NA}} | |||

\newcommand{\mathds}{\mathbb}</math></div> | |||

Most of our study of probability has dealt with independent trials processes. | |||

These processes are the basis of classical probability theory and much of | |||

statistics. We have discussed two of the principal theorems for these | |||

processes: the Law of Large Numbers and the Central Limit Theorem. | |||

We have seen that when a sequence of chance experiments forms an independent | |||

trials process, the possible outcomes for each experiment are the same and | |||

occur with the same probability. Further, knowledge of the outcomes of the | |||

previous experiments does not influence our predictions for the outcomes of the | |||

next experiment. The distribution for the outcomes of a single experiment is | |||

sufficient to construct a tree and a tree measure for a sequence of <math>n</math> | |||

experiments, and we can answer any probability question about these | |||

experiments by using this tree measure. | |||

Modern probability theory studies chance processes for which the knowledge of | |||

previous outcomes influences predictions for future experiments. In principle, | |||

when we observe a sequence of chance experiments, all of the past outcomes | |||

could influence our predictions for the next experiment. For example, this | |||

should be the case in predicting a student's grades on a sequence of exams in a | |||

course. But to allow this much generality would make it very difficult to | |||

prove general results. | |||

In 1907, A. A. Markov began the study of an important new type of chance | |||

process. In this process, the outcome of a given experiment can affect the | |||

outcome of the next experiment. This type of process is called a Markov | |||

chain. | |||

===Specifying a Markov Chain=== | |||

We describe a Markov chain as follows: We have a set of ''states,'' <math>S = | |||

\{s_1,s_2,\ldots,s_r\}</math>. The process starts in | |||

one of | |||

these states and moves successively from one state to another. Each | |||

move is called a ''step.'' If the chain is currently in state <math>s_i</math>, then | |||

it | |||

moves to state <math>s_j</math> at the next step with a probability denoted by <math>p_{ij}</math>, | |||

and this | |||

probability does not depend upon which states the chain was in before the | |||

current state. | |||

The probabilities <math>p_{ij}</math> are called ''transition | |||

probabilities.'' The process can remain in the state | |||

it is in, | |||

and this occurs with probability <math>p_{ii}</math>. An initial probability | |||

distribution, defined | |||

on <math>S</math>, specifies the starting state. Usually this is done by specifying a | |||

particular | |||

state as the starting state. | |||

R. A. Howard<ref group="Notes" >R. A. Howard, ''Dynamic Probabilistic Systems,'' | |||

vol. 1 | |||

(New York: John Wiley and Sons, 1971).</ref> provides us with a | |||

picturesque | |||

description of a Markov chain as a frog jumping on a set of lily pads. | |||

The frog starts on one of the pads and then jumps from lily pad to lily | |||

pad with the appropriate transition probabilities. | |||

<span id="exam 11.1.1"/> | |||

'''Example''' | |||

According to Kemeny, Snell, and Thompson,<ref group="Notes" >J. G. Kemeny, J. L. Snell, | |||

G. L. Thompson, ''Introduction to Finite Mathematics,'' 3rd ed. (Englewood Cliffs, NJ: Prentice-Hall, 1974).</ref> the Land of Oz is blessed by many | |||

things, but not by good weather. They never have two nice days in a row. If they have a | |||

nice day, | |||

they are just as likely to have snow as rain the next day. If they have snow | |||

or rain, they | |||

have an even chance of having the same the next day. If there is change from | |||

snow or | |||

rain, only half of the time is this a change to a nice day. With this | |||

information we form a | |||

Markov chain as follows. We take as states the kinds of weather R, N, and S. | |||

From the above information we determine the transition probabilities. These are most | |||

conveniently represented | |||

in a square array as | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| | |||

| <span style="margin-left:20px;margin-right:25px;"><math>\mbox{R}</math></span><span style="margin-right:25px;"><math>\mbox{N}</math></span> <span><math>\mbox{S}</math></span> | |||

|- | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{R} \\ | |||

\mbox{N}\\ | |||

\mbox{S} | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1/2 & 1/4 & 1/4 \\ | |||

1/2 & 0 & 1/2 \\ | |||

1/4 & 1/4 & 1/2 | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

===Transition Matrix=== | |||

The entries in the first row of the matrix <math>\mat {P}</math> in [[#exam 11.1.1|Example]] represent the probabilities for the various kinds of weather following a rainy day. Similarly, the entries in the second and third rows represent the probabilities for | |||

the various kinds of weather following nice and snowy days, respectively. | |||

Such a square array is called the ''matrix of transition | |||

probabilities'', or the ''transition matrix''. | |||

We consider the question of determining the probability that, given the chain | |||

is in state <math>i</math> today, it will be in state <math>j</math> two days from now. We denote this | |||

probability by <math>p_{ij}^{(2)}</math>. In [[#exam 11.1.1 |Example]], we see that if it | |||

is | |||

rainy today then the event that it is snowy two days from now is the disjoint | |||

union | |||

of the following three events: 1) it is rainy tomorrow and snowy two days from | |||

now, | |||

2) it is nice tomorrow and snowy two days from now, and 3) it is snowy tomorrow | |||

and | |||

snowy two days from now. The probability of the first of these events is the | |||

product | |||

of the conditional probability that it is rainy tomorrow, given that it is | |||

rainy today, | |||

and the conditional probability that it is snowy two days from now, given that | |||

it is | |||

rainy tomorrow. Using the transition matrix <math>\mat{P}</math>, we can write this | |||

product as | |||

<math>p_{11}p_{13}</math>. The other two events also have probabilities that can be | |||

written as products | |||

of entries of <math>\mat{P}</math>. Thus, we have | |||

<math display="block"> | |||

p_{13}^{(2)} = p_{11}p_{13} + p_{12}p_{23} + p_{13}p_{33}\ . | |||

</math> | |||

This equation should remind the reader of a dot product of two vectors; we are | |||

dotting the first | |||

row of <math> \mat {P}</math> with the third column of <math> \mat {P}</math>. This is just what is | |||

done in | |||

obtaining the <math>1,3</math>-entry of the product of <math> \mat {P}</math> with itself. | |||

In general, if a Markov chain has <math>r</math> states, then | |||

<math display="block"> | |||

p_{ij}^{(2)} = \sum_{k = 1}^r p_{ik}p_{kj}\ . | |||

</math> | |||

The following general theorem is easy to prove by using the above observation | |||

and | |||

induction. | |||

{{proofcard|Theorem|thm_11.1.1|Let <math> \mat {P}</math> be the transition matrix of a Markov chain. The <math>ij</math>th | |||

entry <math>p_{ij}^{(n)}</math> of the matrix <math> \mat {P}^n</math> gives the | |||

probability that the Markov chain, starting in state <math>s_i</math>, will be in | |||

state <math>s_j</math> after <math>n</math> steps.|The proof of this theorem is left as an exercise ([[exercise:9577f0da14 |Exercise]]).}} | |||

<span id="exam 11.1.1.5"/> | |||

'''Example''' | |||

Consider again the weather in the Land of Oz. We know that the powers of the | |||

transition matrix give us interesting information about the process as it | |||

evolves. We shall be particularly interested in the state of the chain after a | |||

large number of steps. The program ''' MatrixPowers''' computes | |||

the powers of <math>\mat{P}</math>. | |||

We have run the program ''' MatrixPowers''' for the Land of Oz example | |||

to compute the successive powers of <math>\mat{P}</math> from 1 to 6. The results are | |||

shown | |||

in [[#table 11.1 |Table]]. We note that after six days our weather predictions | |||

are, | |||

to three-decimal-place accuracy, independent of today's weather. The | |||

probabilities for the three | |||

types of weather, R, N, and S, are .4, .2, and .4 no matter where the chain | |||

started. This is an | |||

example of a type of Markov chain called a ''regular'' Markov chain. For | |||

this type of chain, | |||

it is true that long-range predictions are independent of the starting state. | |||

Not all chains are | |||

regular, but this is an important class of chains that we shall study in detail | |||

later. | |||

<div class="d-flex justify-content-center"> | |||

<span id="table 11.1"/> | |||

{| | |||

|+ Powers of the Land of Oz transition matrix. | |||

|- | |||

| || || <span style="width:40px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{Rain}</math></span><span style="width:40px;;text-align:center;display:inline-block;"><math>\mbox{Nice}</math></span> <span style="width:40px;;text-align:center;"><math>\mbox{Snow}</math></span> | |||

|- | |||

| <math>\mat{P}^1 =</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{Rain} \\ | |||

\mbox{Nice}\\ | |||

\mbox{Snow} | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.500 & .250 & .250 \\ | |||

.500 & .000 & .500 \\ | |||

.250 & .250 & .500 \\ | |||

\end{pmatrix}</math> | |||

|- | |||

| || || <span style="width:40px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{Rain}</math></span><span style="width:40px;;text-align:center;display:inline-block;"><math>\mbox{Nice}</math></span> <span style="width:40px;;text-align:center;"><math>\mbox{Snow}</math></span> | |||

|- | |||

| <math>\mat{P}^2=</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{Rain} \\ | |||

\mbox{Nice}\\ | |||

\mbox{Snow} | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.438 & .188 & .375 \\ | |||

.375 & .250 & .375 \\ | |||

.375 & .188 & .438 | |||

\end{pmatrix}</math> | |||

|- | |||

| || || <span style="width:40px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{Rain}</math></span><span style="width:40px;;text-align:center;display:inline-block;"><math>\mbox{Nice}</math></span> <span style="width:40px;;text-align:center;"><math>\mbox{Snow}</math></span> | |||

|- | |||

| <math>\mat{P}^3=</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{Rain} \\ | |||

\mbox{Nice}\\ | |||

\mbox{Snow} | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.406 & .203 & .391 \\ | |||

.406 & .188 & .406 \\ | |||

.391 & .203 & .406 | |||

\end{pmatrix}</math> | |||

|- | |||

| || || <span style="width:40px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{Rain}</math></span><span style="width:40px;;text-align:center;display:inline-block;"><math>\mbox{Nice}</math></span> <span style="width:40px;;text-align:center;"><math>\mbox{Snow}</math></span> | |||

|- | |||

| <math>\mat{P}^4=</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{Rain} \\ | |||

\mbox{Nice}\\ | |||

\mbox{Snow} | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.402 & .199 & .398 \\ | |||

.398 & .203 & .398 \\ | |||

.398 & .199 & .402 \\ | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

We now consider the long-term behavior of a Markov chain when it starts in a | |||

state chosen by a probability distribution | |||

on the set of states, which we will call a ''probability | |||

vector''. A probability vector with <math>r</math> components is | |||

a row | |||

vector whose entries are non-negative and sum to 1. If <math>\mat | |||

{u}</math> is a probability vector which represents the initial state of a Markov | |||

chain, then we | |||

think of the <math>i</math>th component of <math>\mat {u}</math> as representing the probability that | |||

the | |||

chain starts in state <math>s_i</math>. | |||

With this interpretation of random starting states, it is easy to prove the following theorem. | |||

{{proofcard|Theorem|thm_11.1.2|Let <math>\mat{P}</math> be the transition matrix of a Markov chain, and let <math>\mat {u}</math> be | |||

the | |||

probability vector which represents the starting distribution. Then the | |||

probability | |||

that the chain is in state <math>s_i</math> after <math>n</math> steps is the <math>i</math>th entry in the | |||

vector | |||

<math display="block"> | |||

\mat{u}^{(n)} = \mat{u}{\mat{P}^n}\ . | |||

</math>|The proof of this theorem is left as an exercise ([[exercise:A73106882e |Exercise]]).}} | |||

We note that if we want to examine the behavior of the chain under the assumption that it starts in a certain state <math>s_i</math>, we simply choose <math>\mat {u}</math> to be the probability vector with <math>i</math>th entry equal to 1 and all other entries equal to 0. | |||

<span id="exam 11.1.1.6"/> | |||

'''Example''' | |||

[[#exam 11.1.1 |(Example]]) let the initial probability vector <math>\mat {u}</math> equal <math>(1/3, 1/3, 1/3)</math>. Then we can | |||

calculate the distribution of the states after three days using [[#thm 11.1.2|Theorem]] and our previous calculation of <math>{\mat {P}^3}</math>. We obtain | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

{\mat {u}}^{(3)} = {\mat {u}}{\mat {P}^3} &=& \pmatrix{ 1/3,& 1/3,& 1/3} | |||

\pmatrix{ .406 & .203 & | |||

.391 \cr .406 & .188 & .406 \cr .391 & .203 & .406 } \cr | |||

&& \cr | |||

&=& \pmatrix{ .401,& .198,& .401} \ . | |||

\end{eqnarray*} | |||

</math> | |||

===Examples=== | |||

The following examples of Markov chains will be used throughout the chapter for exercises. | |||

<span id="exam 11.1.2"/> | |||

'''Example''' | |||

The President of the United States tells person A his or her intention to run | |||

or not to run in the next election. Then A relays the news to B, who in turn | |||

relays the message to C, and so forth, always to some new person. We assume that | |||

there is a probability <math>a</math> that a person will change the answer from yes to no when | |||

transmitting it to the next person and a probability <math>b</math> that he or she will | |||

change it from no to yes. We choose as states the message, either yes or no. The transition matrix is then | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="width:45px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{yes}</math></span><span style="width:45px;;text-align:center;display:inline-block;"><math>\mbox{no}</math></span> | |||

|- | |||

| <math>\mat{P} = \,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{yes} \\ | |||

\mbox{no} | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1 - a & a \\ | |||

b & 1 - b \\ | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

The initial state represents the President's choice. | |||

<span id="exam 11.1.3"/> | |||

'''Example''' | |||

Each time a certain horse runs in a three-horse race, he has probability 1/2 of winning, 1/4 of coming in second, and 1/4 of coming in third, independent of | |||

the outcome of any previous race. We have an independent trials process, but it can also be considered from the point of view of Markov chain theory. The transition matrix is | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="width:30px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{W}</math></span><span style="width:30px;;text-align:center;display:inline-block;"><math>\mbox{P}</math></span> <span style="width:30px;;text-align:center;display:inline-block;"><math>\mbox{S}</math></span> | |||

|- | |||

| <math>\mat{P} =</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{W} \\ | |||

\mbox{P}\\ | |||

\mbox{S}\\ | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.5 & .25 & .25 \\ | |||

.5 & .25 & .25 \\ | |||

.5 & .25 & .25 \\ | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

<span id="exam 11.1.4"/> | |||

'''Example''' | |||

In the Dark Ages, Harvard, Dartmouth, and Yale admitted only male students. | |||

Assume that, at that time, 80 percent of the sons of Harvard men went to Harvard and the rest went to Yale, 40 percent of the sons of Yale men went to Yale, and the rest split evenly between Harvard and Dartmouth; and of the sons of | |||

Dartmouth men, 70 percent went to Dartmouth, 20 percent to Harvard, and | |||

10 percent to Yale. We form a Markov chain with transition matrix | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="width:25px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{H}</math></span><span style="width:25px;;text-align:center;display:inline-block;"><math>\mbox{Y}</math></span> <span style="width:25px;;text-align:center;display:inline-block;"><math>\mbox{D}</math></span> | |||

|- | |||

| <math>\mat{P} =</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{H} \\ | |||

\mbox{Y}\\ | |||

\mbox{D}\\ | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.8 & .2 & 0 \\ | |||

.3 & .4 & .3 \\ | |||

.2 & .1 & .7 | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

<span id="exam 11.1.5"/> | |||

'''Example''' | |||

Modify [[#exam 11.1.4 |Example]] by assuming that the son of a Harvard man always went to Harvard. The transition matrix is now | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="width:25px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{H}</math></span><span style="width:25px;;text-align:center;display:inline-block;"><math>\mbox{Y}</math></span> <span style="width:25px;;text-align:center;display:inline-block;"><math>\mbox{D}</math></span> | |||

|- | |||

| <math>\mat{P} =</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{H} \\ | |||

\mbox{Y}\\ | |||

\mbox{D}\\ | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1 & 0 & 0 \\ | |||

.3 & .4 & .3 \\ | |||

.2 & .1 & .7 | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

<span id="exam 11.1.6"/> | |||

'''Example''' | |||

(Ehrenfest Model) The following is a special case of a model, called the | |||

Ehrenfest | |||

model,<ref group="Notes" >P. and T. Ehrenfest, “Über zwei bekannte Einwände gegen das Boltzmannsche H-Theorem,” ''Physikalishce Zeitschrift,'' vol. 8 (1907), | |||

pp. 311-314.</ref> | |||

that has been used to explain diffusion of gases. The general model will be | |||

discussed in detail in [[guide:8be2f96c1e|Mean First Passage Time for Ergodic Chains]]. We have two urns that, between | |||

them, contain four balls. At each step, one of the four balls is chosen at random and | |||

moved from the urn that it is in into the other urn. We choose, as states, the | |||

number of balls in the first urn. The transition matrix is then | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="width:40px;text-align:center;display:inline-block;margin-left:5px;"><math>0</math></span><span style="width:35px;;text-align:center;display:inline-block;"><math>1</math></span> <span style="width:35px;;text-align:center;display:inline-block;"><math>2</math></span> <span style="width:35px;text-align:center;display:inline-block;"><math>3</math></span> <span style="width:35px;;text-align:center;display:inline-block;"><math>4</math></span> | |||

|- | |||

| <math>\mat{P} =\,\,</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

0 \\ | |||

1\\ | |||

2\\ | |||

3\\ | |||

4 | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

0 & 1 & 0 & 0 & 0 \\ | |||

1/4 & 0 & 3/4 & 0 & 0 \\ | |||

0 & 1/2 & 0 & 1/2 & 0 \\ | |||

0 & 0 & 3/4 & 0 & 1/4 \\ | |||

0 & 0 & 0 & 1 & 0 \\ | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

<span id="exam 11.1.7"/> | |||

'''Example''' | |||

(Gene Model) The simplest type of inheritance of traits in animals occurs when | |||

a trait is | |||

governed by a pair of genes, each of which may be of two types, say G and g. | |||

An individual may have a GG combination or Gg (which is genetically the same as | |||

gG) or gg. Very often the GG and Gg types are indistinguishable in appearance, | |||

and then we say that the G gene dominates the g gene. An individual is called | |||

''dominant'' if he or she has GG genes, ''recessive'' if he or she has | |||

gg, and ''hybrid'' with a Gg mixture. | |||

In the mating of two animals, the offspring inherits one gene of the pair from | |||

each parent, and the basic assumption of genetics is that these genes are | |||

selected at random, independently of each other. This assumption determines | |||

the probability of occurrence of each type of offspring. The offspring of two | |||

purely dominant parents must be dominant, of two recessive parents must be | |||

recessive, and of one dominant and one recessive parent must be hybrid. | |||

In the mating of a dominant and a hybrid animal, each offspring must get a | |||

G gene from the former and has an equal chance of getting G or g from the | |||

latter. Hence there is an equal probability for getting a dominant or a hybrid | |||

offspring. Again, in the mating of a recessive and a hybrid, there is an even | |||

chance for getting either a recessive or a hybrid. In the mating of two | |||

hybrids, the offspring has an equal chance of getting G or g from each parent. | |||

Hence the probabilities are 1/4 for GG, 1/2 for Gg, and 1/4 for gg. | |||

Consider a process of continued matings. We start with an individual of | |||

known genetic character and mate it with a hybrid. We assume that there is at | |||

least one offspring. An offspring is chosen at random and is mated with a hybrid and | |||

this process repeated through a number of generations. The genetic type of the chosen | |||

offspring in successive generations can be represented by a Markov chain. The states are | |||

dominant, hybrid, and recessive, and indicated by GG, Gg, and gg respectively. | |||

The transition probabilities are | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="width:30px;text-align:center;display:inline-block;margin-left:10px;"><math>\mbox{GG}</math></span><span style="width:30px;;text-align:center;display:inline-block;"><math>\mbox{Gg}</math></span> <span style="width:30px;;text-align:center;display:inline-block;"><math>\mbox{gg}</math></span> | |||

|- | |||

| <math>\mat{P} =</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{GG} \\ | |||

\mbox{Gg}\\ | |||

\mbox{gg}\\ | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

.5 & .5 & 0 \\ | |||

.25 & .5 & .25 \\ | |||

0 & .5 & .5 | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

<span id="exam 11.1.8"/> | |||

'''Example''' | |||

Modify [[#exam 11.1.7 |Example]] as follows: Instead of mating the oldest | |||

offspring with a hybrid, we mate it with a dominant individual. The transition | |||

matrix | |||

is | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-right:10px;margin-left:5px;"><math>\mbox{GG}</math></span><span style="text-align:center;display:inline-block;margin-right:10px;"><math>\mbox{Gg}</math></span> <span style="text-align:center;display:inline-block;"><math>\mbox{gg}</math></span> | |||

|- | |||

| <math>\mat{P} =</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{GG} \\ | |||

\mbox{Gg}\\ | |||

\mbox{gg}\\ | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1 \,& 0\, & 0 \\ | |||

.5 \,& .5\, & 0 \\ | |||

0 \,& 1\, & 0\\ | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

<span id="exam 11.1.9"/> | |||

'''Example''' | |||

We start with two animals of opposite sex, mate them, select two of their offspring of opposite sex, and mate those, and so forth. To simplify the | |||

example, we will assume that the trait under consideration is independent of sex. | |||

Here a state is determined by a pair of animals. Hence, the states of our | |||

process will be: <math>s_1 = (\mbox{GG},\mbox{GG})</math>, <math>s_2 = (\mbox{GG},\mbox{Gg})</math>, | |||

<math>s_3 = (\mbox{GG},\mbox{gg})</math>, <math>s_4 = (\mbox{Gg},\mbox{Gg})</math>, <math>s_5 = | |||

(\mbox{Gg},\mbox{gg})</math>, and <math>s_6 = (\mbox{gg},\mbox{gg})</math>. | |||

We illustrate the calculation of transition probabilities in terms of the | |||

state <math>s_2</math>. When the process is in this state, one parent has GG genes, the | |||

other Gg. Hence, the probability of a dominant offspring is 1/2. Then the | |||

probability of transition to <math>s_1</math> (selection of two dominants) is 1/4, | |||

transition to <math>s_2</math> is 1/2, and to <math>s_4</math> is 1/4. The other states are treated | |||

the same way. The transition matrix of this chain is: | |||

<div class="d-flex justify-content-center"> | |||

{| | |||

|- | |||

| || || <span style="text-align:center;display:inline-block;margin-left:5px;padding-right:10px;"><math>\mbox{GG,GG}</math></span><span style=";text-align:center;display:inline-block;padding-right:10px;"><math>\mbox{GG,Gg}</math></span> <span style="text-align:center;display:inline-block;padding-right:10px;"><math> \mbox{GG,gg}</math></span> <span style="text-align:center;display:inline-block;padding-right:10px;"><math>\mbox{Gg,Gg}</math></span> <span style="text-align:center;display:inline-block;padding-right:10px;"><math>\mbox{Gg,gg}</math></span> <span style="text-align:center;display:inline-block;padding-left:5px;"><math>\mbox{gg,gg}</math></span> | |||

|- | |||

| <math>\mat{P}^1 =</math> | |||

| style= "padding:0px" |<math>\begin{array}{c c c c} | |||

\mbox{GG,GG} \\ | |||

\mbox{GG,Gg}\\ | |||

\mbox{GG,gg}\\ | |||

\mbox{Gg,Gg}\\ | |||

\mbox{Gg,gg}\\ | |||

\mbox{gg,gg} | |||

\end{array}</math> || <math>\begin{pmatrix} | |||

1.000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000\\ | |||

.250 &\,\,\,\,\, .500 &\,\,\,\,\, .000 &\,\,\,\,\, .250 &\,\,\,\,\, .000 &\,\,\,\,\, .000\\ | |||

.000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, 1.000 &\,\,\,\,\, .000 &\,\,\,\,\, .000\\ | |||

.062 &\,\,\,\,\, .250 &\,\,\,\,\, .125 &\,\,\,\,\, .250 &\,\,\,\,\, .250 &\,\,\,\,\, .062\\ | |||

.000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .250 &\,\,\,\,\, .500 &\,\,\,\,\, .250\\ | |||

.000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, 1.000 | |||

\end{pmatrix}</math> | |||

|} | |||

</div> | |||

<span id="exam 11.1.10"/> | |||

'''Example''' | |||

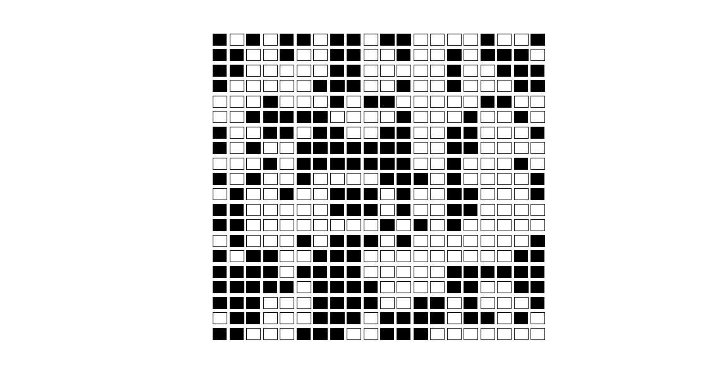

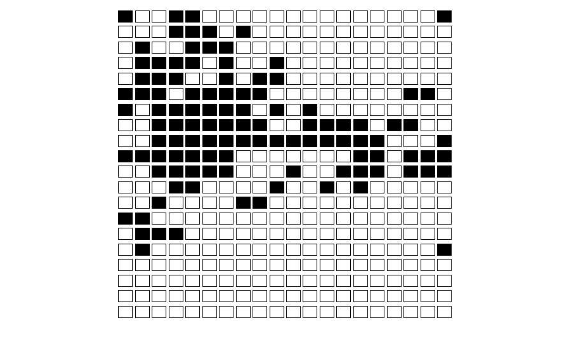

(Stepping Stone Model) Our final example is another example that has been used | |||

in the | |||

study of genetics. It is called the ''stepping stone'' model.<ref group="Notes" >S. | |||

Sawyer, “Results for The Stepping Stone Model for Migration in Population | |||

Genetics,” ''Annals of Probability,'' vol. 4 (1979), | |||

pp. 699--728.</ref> In this | |||

model we have an <math>n</math>-by-<math>n</math> array of squares, and each square is initially any | |||

one of <math>k</math> different colors. For each step, a square is chosen at random. | |||

This | |||

square then chooses one of its eight neighbors at random and assumes the color | |||

of that neighbor. To avoid boundary problems, we assume that if a square <math>S</math> | |||

is on the | |||

left-hand boundary, say, but not at a corner, it is adjacent to the square <math>T</math> | |||

on the | |||

right-hand boundary in the same row as <math>S</math>, and <math>S</math> is also adjacent to the | |||

squares | |||

just above and below <math>T</math>. A similar assumption is made about squares on the | |||

upper and | |||

lower boundaries. The top left-hand corner square is adjacent to three obvious neighbors, namely the squares below it, to its right, and diagonally below and to the right. It has five other neighbors, which are as follows: the other three corner squares, the square below the upper right-hand corner, and the square to the right of the bottom left-hand corner. The other three corners also have, in a similar way, eight neighbors. (These adjacencies are much easier to understand if one | |||

imagines | |||

making the array into a cylinder by gluing the top and bottom edge together, | |||

and then | |||

making the cylinder into a doughnut by gluing the two circular boundaries | |||

together.) | |||

With these adjacencies, each square in the array is adjacent to exactly eight | |||

other | |||

squares. | |||

A state in this Markov chain is a description of the color of | |||

each square. For this Markov chain the number of states is <math>k^{n^2}</math>, which | |||

for even a small array of squares is enormous. This is an example of a Markov | |||

chain that is easy to simulate but difficult to analyze in terms of its | |||

transition matrix. The program ''' SteppingStone''' simulates | |||

this chain. We have started with a random initial configuration of two colors | |||

with <math>n = 20</math> and show the result after the process has run for some time in | |||

[[#fig 11.2|Figure]]. | |||

This is an example of an ''absorbing'' Markov chain. This type of chain will be studied in [[guide:87cf36f969|Absorbing Markov Chains]]. One of the theorems proved in that section, applied to the present example, implies that with probability 1, the stones will eventually all be the same color. By watching | |||

the program run, you can see that territories are established and a battle develops to see which color survives. At any time the probability that a particular color will win out is equal to the proportion of the array of this color. You are asked to prove this in [[exercise:8e459a4a58|Exercise]]. | |||

<div id="fig 11.1" class="d-flex justify-content-center"> | |||

[[File:guide_e6d15_PSfig11-1.png | 400px | thumb | Initial state of the stepping stone model.]] | |||

</div> | |||

<div id="fig 11.2" class="d-flex justify-content-center"> | |||

[[File:guide_e6d15_PSfig11-2.png | 400px | thumb |State of the stepping stone model after 10,000 steps. ]] | |||

</div> | |||

==General references== | |||

{{cite web |url=https://math.dartmouth.edu/~prob/prob/prob.pdf |title=Grinstead and Snell’s Introduction to Probability |last=Doyle |first=Peter G.|date=2006 |access-date=June 6, 2024}} | |||

==Notes== | |||

{{Reflist|group=Notes}} | |||

Latest revision as of 15:42, 17 June 2024

Most of our study of probability has dealt with independent trials processes. These processes are the basis of classical probability theory and much of statistics. We have discussed two of the principal theorems for these processes: the Law of Large Numbers and the Central Limit Theorem.

We have seen that when a sequence of chance experiments forms an independent trials process, the possible outcomes for each experiment are the same and occur with the same probability. Further, knowledge of the outcomes of the previous experiments does not influence our predictions for the outcomes of the next experiment. The distribution for the outcomes of a single experiment is sufficient to construct a tree and a tree measure for a sequence of [math]n[/math] experiments, and we can answer any probability question about these experiments by using this tree measure.

Modern probability theory studies chance processes for which the knowledge of previous outcomes influences predictions for future experiments. In principle, when we observe a sequence of chance experiments, all of the past outcomes could influence our predictions for the next experiment. For example, this should be the case in predicting a student's grades on a sequence of exams in a course. But to allow this much generality would make it very difficult to prove general results.

In 1907, A. A. Markov began the study of an important new type of chance process. In this process, the outcome of a given experiment can affect the outcome of the next experiment. This type of process is called a Markov chain.

Specifying a Markov Chain

We describe a Markov chain as follows: We have a set of states, [math]S = \{s_1,s_2,\ldots,s_r\}[/math]. The process starts in one of these states and moves successively from one state to another. Each move is called a step. If the chain is currently in state [math]s_i[/math], then it moves to state [math]s_j[/math] at the next step with a probability denoted by [math]p_{ij}[/math], and this probability does not depend upon which states the chain was in before the current state.

The probabilities [math]p_{ij}[/math] are called transition

probabilities. The process can remain in the state

it is in,

and this occurs with probability [math]p_{ii}[/math]. An initial probability

distribution, defined

on [math]S[/math], specifies the starting state. Usually this is done by specifying a

particular

state as the starting state.

R. A. Howard[Notes 1] provides us with a

picturesque

description of a Markov chain as a frog jumping on a set of lily pads.

The frog starts on one of the pads and then jumps from lily pad to lily

pad with the appropriate transition probabilities.

Example According to Kemeny, Snell, and Thompson,[Notes 2] the Land of Oz is blessed by many things, but not by good weather. They never have two nice days in a row. If they have a nice day, they are just as likely to have snow as rain the next day. If they have snow or rain, they have an even chance of having the same the next day. If there is change from snow or rain, only half of the time is this a change to a nice day. With this information we form a Markov chain as follows. We take as states the kinds of weather R, N, and S. From the above information we determine the transition probabilities. These are most conveniently represented in a square array as

| [math]\mbox{R}[/math][math]\mbox{N}[/math] [math]\mbox{S}[/math] | |

| [math]\begin{array}{c c c c} \mbox{R} \\ \mbox{N}\\ \mbox{S} \end{array}[/math] | [math]\begin{pmatrix} 1/2 & 1/4 & 1/4 \\ 1/2 & 0 & 1/2 \\ 1/4 & 1/4 & 1/2 \end{pmatrix}[/math] |

Transition Matrix

The entries in the first row of the matrix [math]\mat {P}[/math] in Example represent the probabilities for the various kinds of weather following a rainy day. Similarly, the entries in the second and third rows represent the probabilities for the various kinds of weather following nice and snowy days, respectively. Such a square array is called the matrix of transition probabilities, or the transition matrix.

We consider the question of determining the probability that, given the chain is in state [math]i[/math] today, it will be in state [math]j[/math] two days from now. We denote this probability by [math]p_{ij}^{(2)}[/math]. In Example, we see that if it is rainy today then the event that it is snowy two days from now is the disjoint union of the following three events: 1) it is rainy tomorrow and snowy two days from now, 2) it is nice tomorrow and snowy two days from now, and 3) it is snowy tomorrow and snowy two days from now. The probability of the first of these events is the product of the conditional probability that it is rainy tomorrow, given that it is rainy today, and the conditional probability that it is snowy two days from now, given that it is rainy tomorrow. Using the transition matrix [math]\mat{P}[/math], we can write this product as [math]p_{11}p_{13}[/math]. The other two events also have probabilities that can be written as products of entries of [math]\mat{P}[/math]. Thus, we have

This equation should remind the reader of a dot product of two vectors; we are dotting the first row of [math] \mat {P}[/math] with the third column of [math] \mat {P}[/math]. This is just what is done in obtaining the [math]1,3[/math]-entry of the product of [math] \mat {P}[/math] with itself. In general, if a Markov chain has [math]r[/math] states, then

The following general theorem is easy to prove by using the above observation and induction.

Let [math] \mat {P}[/math] be the transition matrix of a Markov chain. The [math]ij[/math]th entry [math]p_{ij}^{(n)}[/math] of the matrix [math] \mat {P}^n[/math] gives the probability that the Markov chain, starting in state [math]s_i[/math], will be in state [math]s_j[/math] after [math]n[/math] steps.

Show ProofThe proof of this theorem is left as an exercise (Exercise).

Example Consider again the weather in the Land of Oz. We know that the powers of the transition matrix give us interesting information about the process as it evolves. We shall be particularly interested in the state of the chain after a large number of steps. The program MatrixPowers computes the powers of [math]\mat{P}[/math].

We have run the program MatrixPowers for the Land of Oz example

to compute the successive powers of [math]\mat{P}[/math] from 1 to 6. The results are

shown

in Table. We note that after six days our weather predictions

are,

to three-decimal-place accuracy, independent of today's weather. The

probabilities for the three

types of weather, R, N, and S, are .4, .2, and .4 no matter where the chain

started. This is an

example of a type of Markov chain called a regular Markov chain. For

this type of chain,

it is true that long-range predictions are independent of the starting state.

Not all chains are

regular, but this is an important class of chains that we shall study in detail

later.

| [math]\mbox{Rain}[/math][math]\mbox{Nice}[/math] [math]\mbox{Snow}[/math] | ||

| [math]\mat{P}^1 =[/math] | [math]\begin{array}{c c c c} \mbox{Rain} \\ \mbox{Nice}\\ \mbox{Snow} \end{array}[/math] | [math]\begin{pmatrix} .500 & .250 & .250 \\ .500 & .000 & .500 \\ .250 & .250 & .500 \\ \end{pmatrix}[/math] |

| [math]\mbox{Rain}[/math][math]\mbox{Nice}[/math] [math]\mbox{Snow}[/math] | ||

| [math]\mat{P}^2=[/math] | [math]\begin{array}{c c c c} \mbox{Rain} \\ \mbox{Nice}\\ \mbox{Snow} \end{array}[/math] | [math]\begin{pmatrix} .438 & .188 & .375 \\ .375 & .250 & .375 \\ .375 & .188 & .438 \end{pmatrix}[/math] |

| [math]\mbox{Rain}[/math][math]\mbox{Nice}[/math] [math]\mbox{Snow}[/math] | ||

| [math]\mat{P}^3=[/math] | [math]\begin{array}{c c c c} \mbox{Rain} \\ \mbox{Nice}\\ \mbox{Snow} \end{array}[/math] | [math]\begin{pmatrix} .406 & .203 & .391 \\ .406 & .188 & .406 \\ .391 & .203 & .406 \end{pmatrix}[/math] |

| [math]\mbox{Rain}[/math][math]\mbox{Nice}[/math] [math]\mbox{Snow}[/math] | ||

| [math]\mat{P}^4=[/math] | [math]\begin{array}{c c c c} \mbox{Rain} \\ \mbox{Nice}\\ \mbox{Snow} \end{array}[/math] | [math]\begin{pmatrix} .402 & .199 & .398 \\ .398 & .203 & .398 \\ .398 & .199 & .402 \\ \end{pmatrix}[/math] |

We now consider the long-term behavior of a Markov chain when it starts in a state chosen by a probability distribution on the set of states, which we will call a probability vector. A probability vector with [math]r[/math] components is a row vector whose entries are non-negative and sum to 1. If [math]\mat {u}[/math] is a probability vector which represents the initial state of a Markov chain, then we think of the [math]i[/math]th component of [math]\mat {u}[/math] as representing the probability that the chain starts in state [math]s_i[/math].

With this interpretation of random starting states, it is easy to prove the following theorem.

Let [math]\mat{P}[/math] be the transition matrix of a Markov chain, and let [math]\mat {u}[/math] be the probability vector which represents the starting distribution. Then the probability that the chain is in state [math]s_i[/math] after [math]n[/math] steps is the [math]i[/math]th entry in the vector

The proof of this theorem is left as an exercise (Exercise).

We note that if we want to examine the behavior of the chain under the assumption that it starts in a certain state [math]s_i[/math], we simply choose [math]\mat {u}[/math] to be the probability vector with [math]i[/math]th entry equal to 1 and all other entries equal to 0.

Example (Example) let the initial probability vector [math]\mat {u}[/math] equal [math](1/3, 1/3, 1/3)[/math]. Then we can calculate the distribution of the states after three days using Theorem and our previous calculation of [math]{\mat {P}^3}[/math]. We obtain

Examples

The following examples of Markov chains will be used throughout the chapter for exercises.

Example The President of the United States tells person A his or her intention to run or not to run in the next election. Then A relays the news to B, who in turn relays the message to C, and so forth, always to some new person. We assume that there is a probability [math]a[/math] that a person will change the answer from yes to no when transmitting it to the next person and a probability [math]b[/math] that he or she will change it from no to yes. We choose as states the message, either yes or no. The transition matrix is then

| [math]\mbox{yes}[/math][math]\mbox{no}[/math] | ||

| [math]\mat{P} = \,[/math] | [math]\begin{array}{c c c c} \mbox{yes} \\ \mbox{no} \end{array}[/math] | [math]\begin{pmatrix} 1 - a & a \\ b & 1 - b \\ \end{pmatrix}[/math] |

The initial state represents the President's choice.

Example Each time a certain horse runs in a three-horse race, he has probability 1/2 of winning, 1/4 of coming in second, and 1/4 of coming in third, independent of the outcome of any previous race. We have an independent trials process, but it can also be considered from the point of view of Markov chain theory. The transition matrix is

| [math]\mbox{W}[/math][math]\mbox{P}[/math] [math]\mbox{S}[/math] | ||

| [math]\mat{P} =[/math] | [math]\begin{array}{c c c c} \mbox{W} \\ \mbox{P}\\ \mbox{S}\\ \end{array}[/math] | [math]\begin{pmatrix} .5 & .25 & .25 \\ .5 & .25 & .25 \\ .5 & .25 & .25 \\ \end{pmatrix}[/math] |

Example

In the Dark Ages, Harvard, Dartmouth, and Yale admitted only male students.

Assume that, at that time, 80 percent of the sons of Harvard men went to Harvard and the rest went to Yale, 40 percent of the sons of Yale men went to Yale, and the rest split evenly between Harvard and Dartmouth; and of the sons of

Dartmouth men, 70 percent went to Dartmouth, 20 percent to Harvard, and

10 percent to Yale. We form a Markov chain with transition matrix

| [math]\mbox{H}[/math][math]\mbox{Y}[/math] [math]\mbox{D}[/math] | ||

| [math]\mat{P} =[/math] | [math]\begin{array}{c c c c} \mbox{H} \\ \mbox{Y}\\ \mbox{D}\\ \end{array}[/math] | [math]\begin{pmatrix} .8 & .2 & 0 \\ .3 & .4 & .3 \\ .2 & .1 & .7 \end{pmatrix}[/math] |

Example

Modify Example by assuming that the son of a Harvard man always went to Harvard. The transition matrix is now

| [math]\mbox{H}[/math][math]\mbox{Y}[/math] [math]\mbox{D}[/math] | ||

| [math]\mat{P} =[/math] | [math]\begin{array}{c c c c} \mbox{H} \\ \mbox{Y}\\ \mbox{D}\\ \end{array}[/math] | [math]\begin{pmatrix} 1 & 0 & 0 \\ .3 & .4 & .3 \\ .2 & .1 & .7 \end{pmatrix}[/math] |

Example

(Ehrenfest Model) The following is a special case of a model, called the

Ehrenfest

model,[Notes 3]

that has been used to explain diffusion of gases. The general model will be

discussed in detail in Mean First Passage Time for Ergodic Chains. We have two urns that, between

them, contain four balls. At each step, one of the four balls is chosen at random and

moved from the urn that it is in into the other urn. We choose, as states, the

number of balls in the first urn. The transition matrix is then

| [math]0[/math][math]1[/math] [math]2[/math] [math]3[/math] [math]4[/math] | ||

| [math]\mat{P} =\,\,[/math] | [math]\begin{array}{c c c c} 0 \\ 1\\ 2\\ 3\\ 4 \end{array}[/math] | [math]\begin{pmatrix} 0 & 1 & 0 & 0 & 0 \\ 1/4 & 0 & 3/4 & 0 & 0 \\ 0 & 1/2 & 0 & 1/2 & 0 \\ 0 & 0 & 3/4 & 0 & 1/4 \\ 0 & 0 & 0 & 1 & 0 \\ \end{pmatrix}[/math] |

Example

(Gene Model) The simplest type of inheritance of traits in animals occurs when

a trait is

governed by a pair of genes, each of which may be of two types, say G and g.

An individual may have a GG combination or Gg (which is genetically the same as

gG) or gg. Very often the GG and Gg types are indistinguishable in appearance,

and then we say that the G gene dominates the g gene. An individual is called

dominant if he or she has GG genes, recessive if he or she has

gg, and hybrid with a Gg mixture.

In the mating of two animals, the offspring inherits one gene of the pair from

each parent, and the basic assumption of genetics is that these genes are

selected at random, independently of each other. This assumption determines

the probability of occurrence of each type of offspring. The offspring of two

purely dominant parents must be dominant, of two recessive parents must be

recessive, and of one dominant and one recessive parent must be hybrid.

In the mating of a dominant and a hybrid animal, each offspring must get a G gene from the former and has an equal chance of getting G or g from the latter. Hence there is an equal probability for getting a dominant or a hybrid offspring. Again, in the mating of a recessive and a hybrid, there is an even chance for getting either a recessive or a hybrid. In the mating of two hybrids, the offspring has an equal chance of getting G or g from each parent. Hence the probabilities are 1/4 for GG, 1/2 for Gg, and 1/4 for gg.

Consider a process of continued matings. We start with an individual of known genetic character and mate it with a hybrid. We assume that there is at least one offspring. An offspring is chosen at random and is mated with a hybrid and this process repeated through a number of generations. The genetic type of the chosen offspring in successive generations can be represented by a Markov chain. The states are dominant, hybrid, and recessive, and indicated by GG, Gg, and gg respectively.

The transition probabilities are

| [math]\mbox{GG}[/math][math]\mbox{Gg}[/math] [math]\mbox{gg}[/math] | ||

| [math]\mat{P} =[/math] | [math]\begin{array}{c c c c} \mbox{GG} \\ \mbox{Gg}\\ \mbox{gg}\\ \end{array}[/math] | [math]\begin{pmatrix} .5 & .5 & 0 \\ .25 & .5 & .25 \\ 0 & .5 & .5 \end{pmatrix}[/math] |

Example

Modify Example as follows: Instead of mating the oldest

offspring with a hybrid, we mate it with a dominant individual. The transition

matrix

is

| [math]\mbox{GG}[/math][math]\mbox{Gg}[/math] [math]\mbox{gg}[/math] | ||

| [math]\mat{P} =[/math] | [math]\begin{array}{c c c c} \mbox{GG} \\ \mbox{Gg}\\ \mbox{gg}\\ \end{array}[/math] | [math]\begin{pmatrix} 1 \,& 0\, & 0 \\ .5 \,& .5\, & 0 \\ 0 \,& 1\, & 0\\ \end{pmatrix}[/math] |

Example

We start with two animals of opposite sex, mate them, select two of their offspring of opposite sex, and mate those, and so forth. To simplify the

example, we will assume that the trait under consideration is independent of sex.

Here a state is determined by a pair of animals. Hence, the states of our process will be: [math]s_1 = (\mbox{GG},\mbox{GG})[/math], [math]s_2 = (\mbox{GG},\mbox{Gg})[/math], [math]s_3 = (\mbox{GG},\mbox{gg})[/math], [math]s_4 = (\mbox{Gg},\mbox{Gg})[/math], [math]s_5 = (\mbox{Gg},\mbox{gg})[/math], and [math]s_6 = (\mbox{gg},\mbox{gg})[/math].

We illustrate the calculation of transition probabilities in terms of the

state [math]s_2[/math]. When the process is in this state, one parent has GG genes, the

other Gg. Hence, the probability of a dominant offspring is 1/2. Then the

probability of transition to [math]s_1[/math] (selection of two dominants) is 1/4,

transition to [math]s_2[/math] is 1/2, and to [math]s_4[/math] is 1/4. The other states are treated

the same way. The transition matrix of this chain is:

| [math]\mbox{GG,GG}[/math][math]\mbox{GG,Gg}[/math] [math] \mbox{GG,gg}[/math] [math]\mbox{Gg,Gg}[/math] [math]\mbox{Gg,gg}[/math] [math]\mbox{gg,gg}[/math] | ||

| [math]\mat{P}^1 =[/math] | [math]\begin{array}{c c c c} \mbox{GG,GG} \\ \mbox{GG,Gg}\\ \mbox{GG,gg}\\ \mbox{Gg,Gg}\\ \mbox{Gg,gg}\\ \mbox{gg,gg} \end{array}[/math] | [math]\begin{pmatrix} 1.000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000\\ .250 &\,\,\,\,\, .500 &\,\,\,\,\, .000 &\,\,\,\,\, .250 &\,\,\,\,\, .000 &\,\,\,\,\, .000\\ .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, 1.000 &\,\,\,\,\, .000 &\,\,\,\,\, .000\\ .062 &\,\,\,\,\, .250 &\,\,\,\,\, .125 &\,\,\,\,\, .250 &\,\,\,\,\, .250 &\,\,\,\,\, .062\\ .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .250 &\,\,\,\,\, .500 &\,\,\,\,\, .250\\ .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, .000 &\,\,\,\,\, 1.000 \end{pmatrix}[/math] |

Example

(Stepping Stone Model) Our final example is another example that has been used

in the

study of genetics. It is called the stepping stone model.[Notes 4] In this

model we have an [math]n[/math]-by-[math]n[/math] array of squares, and each square is initially any

one of [math]k[/math] different colors. For each step, a square is chosen at random.

This

square then chooses one of its eight neighbors at random and assumes the color

of that neighbor. To avoid boundary problems, we assume that if a square [math]S[/math]

is on the

left-hand boundary, say, but not at a corner, it is adjacent to the square [math]T[/math]

on the

right-hand boundary in the same row as [math]S[/math], and [math]S[/math] is also adjacent to the

squares

just above and below [math]T[/math]. A similar assumption is made about squares on the

upper and

lower boundaries. The top left-hand corner square is adjacent to three obvious neighbors, namely the squares below it, to its right, and diagonally below and to the right. It has five other neighbors, which are as follows: the other three corner squares, the square below the upper right-hand corner, and the square to the right of the bottom left-hand corner. The other three corners also have, in a similar way, eight neighbors. (These adjacencies are much easier to understand if one

imagines

making the array into a cylinder by gluing the top and bottom edge together,

and then

making the cylinder into a doughnut by gluing the two circular boundaries

together.)

With these adjacencies, each square in the array is adjacent to exactly eight

other

squares.

A state in this Markov chain is a description of the color of

each square. For this Markov chain the number of states is [math]k^{n^2}[/math], which

for even a small array of squares is enormous. This is an example of a Markov

chain that is easy to simulate but difficult to analyze in terms of its

transition matrix. The program SteppingStone simulates

this chain. We have started with a random initial configuration of two colors

with [math]n = 20[/math] and show the result after the process has run for some time in

Figure.

This is an example of an absorbing Markov chain. This type of chain will be studied in Absorbing Markov Chains. One of the theorems proved in that section, applied to the present example, implies that with probability 1, the stones will eventually all be the same color. By watching the program run, you can see that territories are established and a battle develops to see which color survives. At any time the probability that a particular color will win out is equal to the proportion of the array of this color. You are asked to prove this in Exercise.

General references

Doyle, Peter G. (2006). "Grinstead and Snell's Introduction to Probability" (PDF). Retrieved June 6, 2024.

Notes

- R. A. Howard, Dynamic Probabilistic Systems, vol. 1 (New York: John Wiley and Sons, 1971).

- J. G. Kemeny, J. L. Snell, G. L. Thompson, Introduction to Finite Mathematics, 3rd ed. (Englewood Cliffs, NJ: Prentice-Hall, 1974).

- P. and T. Ehrenfest, “Über zwei bekannte Einwände gegen das Boltzmannsche H-Theorem,” Physikalishce Zeitschrift, vol. 8 (1907), pp. 311-314.

- S. Sawyer, “Results for The Stepping Stone Model for Migration in Population Genetics,” Annals of Probability, vol. 4 (1979), pp. 699--728.