
The availability of data from electronic markets and the recent developments in RL have together led to a rapidly growing body of work applying RL algorithms to decision-making problems in electronic markets. Examples include optimal execution, portfolio optimization, option pricing and hedging, market making, order routing, and robo-advising.

In this section, we start with a brief overview of electronic markets and some discussion of market microstructure in [[#sec:electronic_market |Section]]. We then introduce several applications of RL in finance. In particular, optimal execution for a single asset is introduced in [[#sec:optimal_execution |Section]] and portfolio optimization problems across multiple assets are discussed in [[#sec:portfolio_optimization |Section]]. These are followed by sections on option pricing, robo-advising, and smart order routing. In each, we introduce the underlying problem and basic model before looking at recent RL approaches used to tackle it.

Several open-source projects provide full pipelines for implementing different RL algorithms in financial applications <ref name="liu2021finrl">X.-Y. Liu, H. Yang, J. Gao, and C. D. Wang, ''FinRL: Deep reinforcement learning framework to automate trading in quantitative finance'', in Proceedings of the Second ACM International Conference on AI in Finance, 2021, pp. 1–9.</ref><ref name="liu2022finrl">X.-Y. Liu, Z. Xia, J. Rui, J. Gao, H. Yang, M. Zhu, C. D. Wang, Z. Wang, and J. Guo, ''FinRL-Meta: Market environments and benchmarks for data-driven financial reinforcement learning'', arXiv preprint arXiv:2211.03107, (2022).</ref>.

===<span id="sec:electronic_market"></span>Preliminary: Electronic Markets and Market Microstructure===

Many recent decision-making problems in finance are centered around electronic markets. We give a brief overview of this type of market and discuss two popular examples: central limit order books and electronic over-the-counter markets. For a more in-depth discussion of electronic markets and market microstructure, we refer the reader to the books <ref name="cartea2015algorithmic">Á. Cartea, S. Jaimungal, and J. Penalva, ''Algorithmic and high-frequency trading'', Cambridge University Press, 2015.</ref> and <ref name="lehalle2018market">C.-A. Lehalle and S. Laruelle, ''Market microstructure in practice'', World Scientific, 2018.</ref>.

'''Electronic Markets.''' Electronic markets have emerged as popular venues for the trading of a wide variety of financial assets. Stock exchanges in many countries, including Canada, Germany, Israel, and the United Kingdom, have adopted electronic platforms to trade equities, as has Euronext, the market combining several former European stock exchanges. In the United States, electronic communications networks (ECNs) such as Island, Instinet, and Archipelago (now ArcaEx) use an electronic order book structure to trade as much as 45% [...] trading of currencies. Eurex, the electronic Swiss-German exchange, is now the world's largest futures market, while options have been traded in electronic markets since the opening of the International Securities Exchange in 2000. Many such electronic markets are organized as electronic Limit Order Books (LOBs). In this structure, there is no designated liquidity provider such as a specialist or a dealer. Instead, liquidity arises endogenously from the orders submitted by traders. Traders who submit orders to buy or sell the asset at a particular price are said to “make”

| Line 54: | Line 54: | ||

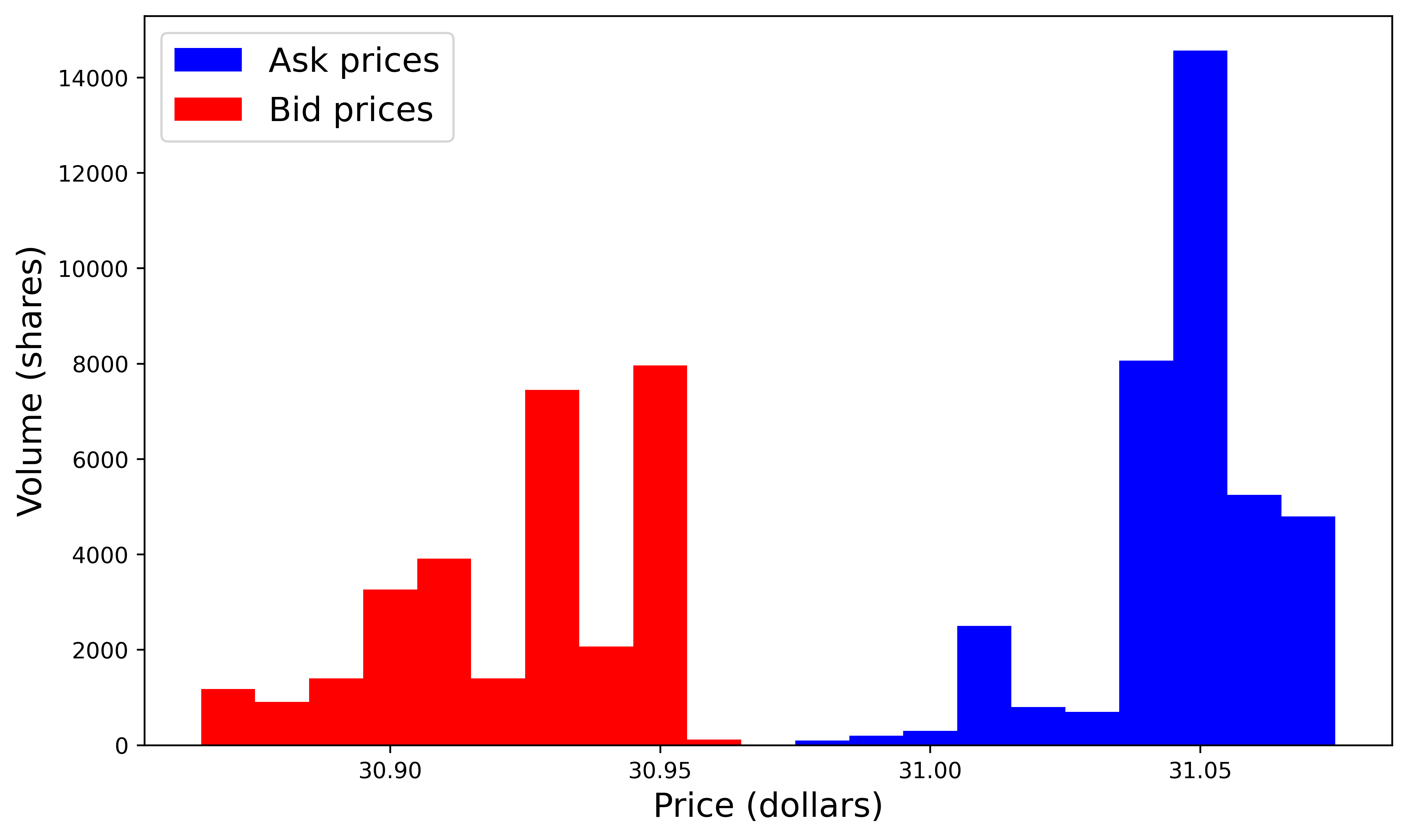

[[File:guide_b5002_lob_MSFT.png | 400px | thumb | A snapshot of the LOB of MSFT (Microsoft) stock at 9:30:08.026 am on 21 June 2012 with ten levels of bid/ask prices. ]]

</div>

A matching engine is used to match incoming buy and sell orders. This typically follows the price-time priority rule <ref name="preis2011price">T. Preis, ''Price-time priority and pro rata matching in an order book model of financial markets'', in Econophysics of Order-driven Markets, Springer, 2011, pp. 65–72.</ref>, whereby orders are first ranked according to their price. Multiple orders with the same price are then ranked according to the time they were entered. If both price and time are the same for incoming orders, the larger order is executed first. The matching engine uses the LOB to store pending orders that could not be executed upon arrival.
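To make the price-time priority rule concrete, the following Python sketch implements a toy matching engine with two heaps. It is a minimal illustration under simplifying assumptions (a single asset, limit orders only, no cancellations), and the names <code>Order</code>, <code>PriceTimeBook</code> and <code>submit</code> are hypothetical. Time priority is taken from an arrival counter, so two orders never share a timestamp and the size-based tie-breaking rule is not exercised here.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Order:
    order_id: int
    side: str        # "buy" or "sell"
    price: float     # limit price
    quantity: int


class PriceTimeBook:
    """Toy single-asset LOB matching limit orders by price-time priority."""

    def __init__(self) -> None:
        self._bids: list = []              # entries (-price, arrival, order): best bid on top
        self._asks: list = []              # entries (price, arrival, order): best ask on top
        self._arrival = itertools.count()  # arrival counter gives time priority

    def submit(self, order: Order) -> List[Tuple[int, int, float, int]]:
        """Match an incoming order against the opposite side and rest any remainder.
        Returns fills as (incoming_id, resting_id, price, quantity)."""
        fills = []
        if order.side == "buy":
            opposite, crosses = self._asks, lambda p: p <= order.price
        else:
            opposite, crosses = self._bids, lambda p: p >= order.price
        while order.quantity > 0 and opposite:
            _, _, resting = opposite[0]     # best price first, then earliest arrival
            if not crosses(resting.price):
                break
            traded = min(order.quantity, resting.quantity)
            fills.append((order.order_id, resting.order_id, resting.price, traded))
            order.quantity -= traded
            resting.quantity -= traded
            if resting.quantity == 0:
                heapq.heappop(opposite)
        if order.quantity > 0:              # store the unexecuted part in the book
            if order.side == "buy":
                heapq.heappush(self._bids, (-order.price, next(self._arrival), order))
            else:
                heapq.heappush(self._asks, (order.price, next(self._arrival), order))
        return fills
```

For example, after resting sell orders of 5 and 3 shares at 10.0 (in that order) and 2 shares at 9.9, a buy order for 8 shares at 10.0 first takes the 2 shares at 9.9, then 5 shares from the earlier order at 10.0, and finally 1 share from the later one; trades execute at the resting order's price.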


The client progressively receives the answers to the RFQ and can deal at any time with the dealer who has proposed the best price, or decide not to trade. Each dealer knows whether or not a deal was done, either with her/him or with another dealer (without knowing the identity of this dealer). If a transaction occurred, the best dealer usually knows the cover price (the second-best bid price in the RFQ), if there is one. We refer the reader to <ref name="fermanian2015agents">J.-D. Fermanian, O. Guéant, and A. Rachez, ''Agents' Behavior on Multi-Dealer-to-Client Bond Trading Platforms'', CREST, Center for Research in Economics and Statistics, 2015.</ref> for a more in-depth discussion of MD2C bond trading platforms.
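The outcome of an RFQ, from the point of view of the information it reveals, can be sketched as follows for a client who wants to sell (so that dealers quote bid prices). The function name and input format are hypothetical: dealers who do not answer are represented by <code>None</code>, ties in price are assumed to go to the dealer listed first, and the client's option not to trade is left outside the sketch.

```python
from typing import Dict, Optional, Tuple


def resolve_rfq(bids: Dict[str, Optional[float]]) -> Tuple[Optional[str], Optional[float], Optional[float]]:
    """Best dealer, best bid and cover price for an RFQ in which the client sells.

    `bids` maps each dealer to the price they quoted, or None if they did not answer.
    The cover price is the second-best bid, or None if fewer than two dealers quoted.
    """
    quotes = [(dealer, price) for dealer, price in bids.items() if price is not None]
    # sorted() is stable, so among equal prices the dealer listed first stays first
    quotes = sorted(quotes, key=lambda dq: dq[1], reverse=True)
    if not quotes:
        return None, None, None
    best_dealer, best_bid = quotes[0]
    cover = quotes[1][1] if len(quotes) > 1 else None
    return best_dealer, best_bid, cover
```

For instance, with bids of 99.1, 99.4 and 99.2 from dealers A, B and D and no answer from C, dealer B wins at 99.4 and learns the cover price 99.2.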

'''Market Participants.'''

Optimal execution is a fundamental problem in financial modeling. The simplest version is the case of a trader who wishes to buy or sell a given amount of a single asset within a given time period. The trader seeks strategies that maximize their return from, or alternatively minimize the cost of, executing the transaction.

'''The Almgren–Chriss Model.''' A classical framework for optimal execution is due to Almgren and Chriss <ref name="AC2001">R. Almgren and N. Chriss, ''Optimal execution of portfolio transactions'', Journal of Risk, 3 (2001), pp. 5–40.</ref>. In this setup a trader is required to sell an amount <math>q_0</math> of an asset, with price <math>S_0</math> at time 0, over the time period <math>[0,T]</math>, with trading decisions made at discrete time points <math>t=1,\ldots,T</math>. The final inventory <math>q_T</math> is required to be zero. The goal is therefore to determine the liquidation strategy <math>u_1,u_2,\ldots,u_{T}</math>, where <math>u_t</math> (<math>t=1,2,\ldots,T</math>) denotes the amount of the asset to sell at time <math>t</math>. Selling the asset is assumed to have two types of price impact: a temporary impact, which refers to any temporary price movement due to the supply-demand imbalance caused by the selling, and a permanent impact, which is a long-term effect on the ‘equilibrium’ price due to the trading that persists at least until the end of the trading period.

\end{equation}
</math>

The above Almgren–Chriss framework for liquidating a single asset can be extended to the case of multiple assets <ref name="AC2001"/>{{rp|at=Appendix A}}. We also note that the general solution to the Almgren–Chriss model had been constructed previously in <ref name="grinold2000active">R. C. Grinold and R. N. Kahn, ''Active portfolio management'', (2000).</ref>{{rp|at=Chapter 16}}.
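The closed-form solution can be evaluated numerically. The following sketch rests on stated assumptions: unit time steps, linear impacts (permanent <math>\gamma u_t</math> and temporary <math>\eta u_t</math>, with the fixed-cost term of the original model omitted) and a mean-variance objective with risk-aversion parameter <math>\lambda</math> (<code>risk_aversion</code>). Under these assumptions the inventory follows <math>q_t=q_0\sinh(\kappa(T-t))/\sinh(\kappa T)</math>, where <math>2(\cosh\kappa-1)=\lambda\sigma^2/\tilde\eta</math> and <math>\tilde\eta=\eta-\gamma/2</math>; the notation may differ from the equation above. A second, hypothetical helper evaluates the revenue of any schedule under the same impact model, so that the closed-form schedule can be compared with alternatives.

```python
import numpy as np


def almgren_chriss_schedule(q0, T, sigma, eta, gamma, risk_aversion):
    """Discrete-time Almgren-Chriss liquidation schedule with unit time steps
    and linear impacts: permanent gamma * u, temporary eta * u.
    Returns inventories q_0..q_T and sales u_1..u_T."""
    eta_tilde = eta - 0.5 * gamma                      # eta * (1 - gamma * tau / (2 * eta)), tau = 1
    if eta_tilde <= 0:
        raise ValueError("requires eta > gamma / 2")
    t = np.arange(T + 1)
    kappa_tilde_sq = risk_aversion * sigma**2 / eta_tilde
    kappa = np.arccosh(1.0 + 0.5 * kappa_tilde_sq)     # solves 2 * (cosh(kappa) - 1) = kappa_tilde^2
    if kappa < 1e-12:
        q = q0 * (T - t) / T                           # risk-neutral limit: straight line (TWAP)
    else:
        q = q0 * np.sinh(kappa * (T - t)) / np.sinh(kappa * T)
    return q, -np.diff(q)                              # u_t = q_{t-1} - q_t


def liquidation_revenue(u, s0, sigma, eta, gamma, rng=None):
    """Revenue from selling u_1..u_T under the same linear-impact model:
    execution price S_{t-1} - eta * u_t, then S_t = S_{t-1} + sigma * xi_t - gamma * u_t."""
    rng = np.random.default_rng() if rng is None else rng
    price, revenue = s0, 0.0
    for u_t in u:
        revenue += u_t * (price - eta * u_t)           # temporary impact lowers the execution price
        price += sigma * rng.standard_normal() - gamma * u_t  # permanent impact shifts the price
    return revenue
```

With <code>risk_aversion=0</code> the schedule reduces to equal sales (TWAP), while a positive risk aversion front-loads the sales. Because the schedule depends only on the model parameters, it is fixed before trading starts, which is the limitation discussed in the next paragraph.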

This simple version of the Almgren–Chriss framework has a closed-form solution, but it relies heavily on the assumed price dynamics and on the linear form of the permanent and temporary price impacts. Misspecification of the dynamics and market impacts may lead to undesirable strategies and potential losses. In addition, the solution in \eqref{eq:amgren-chriss} is a pre-planned strategy that does not depend on real-time market conditions, so it may miss certain opportunities when the market moves. This motivates RL approaches, which are more flexible and can incorporate market conditions when making decisions.

'''Evaluation Criteria and Benchmark Algorithms.''' Before discussing the RL approach, we introduce several criteria widely used in the literature to evaluate the performance of execution algorithms, such as the Profit and Loss (PnL), the Implementation Shortfall, and the Sharpe ratio. The PnL is the ''final'' profit or loss induced by a given execution algorithm over the whole time period, aggregated over the transactions at all time points.

The Implementation Shortfall <ref name="perold1988implementation">A. F. Perold, ''The implementation shortfall: Paper versus reality'', Journal of Portfolio Management, 14 (1988), p. 4.</ref> of an execution algorithm is defined as the difference between the PnL of the algorithm and the PnL received by trading the entire amount of the asset instantly. The Sharpe ratio <ref name="sharpe1966">W. F. Sharpe, ''Mutual fund performance'', The Journal of Business, 39 (1966), pp. 119–138.</ref> is defined as the ratio of the expected return to the standard deviation of the return; it thus measures return per unit of risk. Two popular variants of the Sharpe ratio are the differential Sharpe ratio <ref name="moody1998dSharpe">J. Moody, L. Wu, Y. Liao, and M. Saffell, ''Performance functions and reinforcement learning for trading systems and portfolios'', Journal of Forecasting, 17 (1998), pp. 441–470.</ref> and the Sortino ratio <ref name="sortino1994">F. A. Sortino and L. N. Price, ''Performance measurement in a downside risk framework'', The Journal of Investing, 3 (1994), pp. 59–64.</ref>.
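The criteria above can be computed from a trade record and a return series as in the following sketch, whose function names are illustrative. It follows the definitions in the text: the Sharpe ratio is computed without a risk-free rate, the Sortino ratio divides the mean excess return over a target (assumed zero by default) by the downside deviation, and the differential Sharpe ratio uses exponential moving estimates <math>A_t</math> and <math>B_t</math> of the first and second moments of returns, with an adaptation rate <code>eta</code> chosen arbitrarily here.

```python
import numpy as np


def sell_side_pnl(quantities, prices):
    """Cash received from selling quantities[t] shares at prices[t]."""
    return float(np.dot(quantities, prices))


def implementation_shortfall(algo_pnl, instant_pnl):
    """PnL of the algorithm minus the PnL of trading everything instantly."""
    return algo_pnl - instant_pnl


def sharpe_ratio(returns):
    """Mean return divided by the sample standard deviation (no risk-free rate)."""
    r = np.asarray(returns, dtype=float)
    return r.mean() / r.std(ddof=1)


def sortino_ratio(returns, target=0.0):
    """Mean excess return over `target` divided by the downside deviation."""
    r = np.asarray(returns, dtype=float)
    downside = np.minimum(r - target, 0.0)
    return (r.mean() - target) / np.sqrt(np.mean(downside ** 2))


def differential_sharpe(returns, eta=0.01):
    """Online differential Sharpe ratio D_t from exponential moving estimates
    A (mean) and B (second moment) of the returns, both started at zero."""
    a = b = 0.0
    out = []
    for r in returns:
        da, db = r - a, r * r - b
        denom = max(b - a * a, 0.0) ** 1.5
        out.append((b * da - 0.5 * a * db) / denom if denom > 0 else 0.0)
        a, b = a + eta * da, b + eta * db          # update the moving moments
    return np.array(out)
```

In an RL setting, the differential Sharpe ratio is attractive as a per-step reward because it can be computed incrementally, whereas the Sharpe and Sortino ratios as written here need the whole return series.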

In addition, some classical pre-specified strategies are used as benchmarks to evaluate the performance of a given RL-based execution strategy. Popular choices include execution strategies based on the Time-Weighted Average Price (TWAP) and the Volume-Weighted Average Price (VWAP), as well as the Submit and Leave (SnL) policy, in which a trader places a sell order for all shares at a fixed limit price and goes to the market with any shares still unexecuted at time <math>T</math>.
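These benchmarks are simple enough to write down directly. The sketch below assumes integer share quantities, a known (for example historical) intraday volume profile for VWAP, and a deliberately crude fill model for SnL in which the whole limit order executes as soon as the observed price reaches the limit; realistic backtests would rely on LOB data and queue position instead. All function names are hypothetical.

```python
import numpy as np


def twap_schedule(q0, T):
    """Split q0 shares into T near-equal integer slices (remainder goes to the first slices)."""
    base, rem = divmod(q0, T)
    return np.array([base + (1 if t < rem else 0) for t in range(T)])


def vwap_schedule(q0, volume_profile):
    """Split q0 shares in proportion to an assumed intraday volume profile."""
    w = np.asarray(volume_profile, dtype=float)
    cum_target = np.round(np.cumsum(w) / w.sum() * q0).astype(int)  # shares sold by each step
    return np.diff(np.concatenate(([0], cum_target)))


def submit_and_leave(q0, limit_price, prices):
    """Cash from an SnL sell policy under a crude fill model (assumption): the whole
    order fills at limit_price once the observed price reaches it; otherwise the
    q0 shares are sold at the final observed price with a market order."""
    for p in prices:
        if p >= limit_price:
            return q0 * limit_price
    return q0 * prices[-1]
```

Rounding the cumulative VWAP target, rather than each slice, guarantees that the slices add up to exactly <math>q_0</math>.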

'''RL Approach.''' We first provide a brief overview of the existing literature on RL for optimal execution. The most popular types of RL methods used in optimal execution problems are <math>Q</math>-learning algorithms and (double) DQN <ref name="HW2014">D. Hendricks and D. Wilcox, ''A reinforcement learning extension to the Almgren-Chriss framework for optimal trade execution'', in 2014 IEEE Conference on Computational Intelligence for Financial Engineering & Economics (CIFEr), IEEE, 2014, pp. 457–464.</ref><ref name="NLJ2018">B. Ning, F. H. T. Ling, and S. Jaimungal, ''Double deep Q-learning for optimal execution'', arXiv preprint arXiv:1812.06600, (2018).</ref><ref name="ZZR2020">Z. Zhang, S. Zohren, and S. Roberts, ''Deep reinforcement learning for trading'', The Journal of Financial Data Science, 2 (2020), pp. 25–40.</ref><ref name="JK2019">G. Jeong and H. Y. Kim, ''Improving financial trading decisions using deep Q-learning: Predicting the number of shares, action strategies, and transfer learning'', Expert Systems with Applications, 117 (2019), pp. 125–138.</ref><ref name="DGK2019">''Deep execution-value and policy based reinforcement learning for trading and beating market benchmarks'', Available at SSRN 3374766, (2019).</ref><ref name="SHYO2014">Y. Shen, R. Huang, C. Yan, and K. Obermayer, ''Risk-averse reinforcement learning for algorithmic trading'', in 2014 IEEE Conference on Computational Intelligence for Financial Engineering & Economics (CIFEr), IEEE, 2014, pp. 391–398.</ref><ref name="NFK2006">Y. Nevmyvaka, Y. Feng, and M. Kearns, ''Reinforcement learning for optimized trade execution'', in Proceedings of the 23rd International Conference on Machine Learning, 2006, pp. 673–680.</ref><ref name="cartea2021survey">Á. Cartea, S. Jaimungal, and L. Sánchez-Betancourt, ''Deep reinforcement learning for algorithmic trading'', Available at SSRN, (2021).</ref>. Policy-based algorithms are also popular in this field, including (deep) policy gradient methods <ref name="hambly2020policy">B. Hambly, R. Xu, and H. Yang, ''Policy gradient methods for the noisy linear quadratic regulator over a finite horizon'', SIAM Journal on Control and Optimization, 59 (2021), pp. 3359–3391.</ref><ref name="ZZR2020"/>, A2C <ref name="ZZR2020"/>, PPO <ref name="DGK2019"/><ref name="LB2020">S. Lin and P. A. Beling, ''An end-to-end optimal trade execution framework based on proximal policy optimization'', in IJCAI, 2020, pp. 4548–4554.</ref>, and DDPG <ref name="Ye2020">Z. Ye, W. Deng, S. Zhou, Y. Xu, and J. Guan, ''Optimal trade execution based on deep deterministic policy gradient'', in Database Systems for Advanced Applications, Springer International Publishing, 2020, pp. 638–654.</ref>. The benchmark strategies studied in these papers include the Almgren–Chriss solution <ref name="HW2014"/><ref name="hambly2020policy"/>, the TWAP strategy <ref name="NLJ2018"/><ref name="DGK2019"/><ref name="LB2020"/>, the VWAP strategy <ref name="LB2020"/>, and the SnL policy <ref name="NFK2006"/><ref name="Ye2020"/>. In some models the trader is allowed to buy or sell the asset at each time point <ref name="JK2019"/><ref name="ZZR2020"/><ref name="WWMD2019">H. Wei, Y. Wang, L. Mangu, and K. Decker, ''Model-based reinforcement learning for predictions and control for limit order books'', arXiv preprint arXiv:1910.03743, (2019).</ref><ref name="Deng2016">Y. Deng, F. Bao, Y. Kong, Z. Ren, and Q. Dai, ''Deep direct reinforcement learning for financial signal representation and trading'', IEEE Transactions on Neural Networks and Learning Systems, 28 (2017), pp. 653–664.</ref>, whereas in many other models only one trading direction is allowed <ref name="NFK2006"/><ref name="HW2014"/><ref name="hambly2020policy"/><ref name="NLJ2018"/><ref name="DGK2019"/><ref name="SHYO2014"/><ref name="Ye2020"/><ref name="LB2020"/>. The state variables typically consist of the time stamp, market attributes such as the (mid-)price of the asset and/or the spread, the inventory, and past returns. The control variables are typically the amount of the asset to trade (using market orders) and/or the relative price level (using limit orders) at each time point. Examples of reward functions include cash inflow or outflow (depending on whether we sell or buy) <ref name="SHYO2014"/><ref name="NFK2006"/>, implementation shortfall <ref name="HW2014"/>, profit <ref name="Deng2016"/>, Sharpe ratio <ref name="Deng2016"/>, return <ref name="JK2019"/>, and PnL <ref name="WWMD2019"/>. Popular choices of performance measure include Implementation Shortfall <ref name="HW2014"/><ref name="hambly2020policy"/>, PnL (with a penalty term for transaction costs) <ref name="NLJ2018"/><ref name="WWMD2019"/><ref name="Deng2016"/>, trading cost <ref name="NFK2006"/><ref name="SHYO2014"/>, profit <ref name="Deng2016"/><ref name="JK2019"/>, Sharpe ratio <ref name="Deng2016"/><ref name="ZZR2020"/>, Sortino ratio <ref name="ZZR2020"/>, and return <ref name="ZZR2020"/>.
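To illustrate the typical formulation described above (a state built from time and inventory, a control given by the quantity sold with market orders, and a reward given by the cash inflow), the following sketch trains a tabular <math>Q</math>-learning agent on a toy liquidation problem. The environment is entirely hypothetical: prices are measured relative to the arrival price, impacts are linear with assumed coefficients <code>eta</code> and <code>gamma</code>, and all remaining inventory is sold at the last step so that liquidation is guaranteed. It is a didactic sketch, not a reimplementation of any of the cited methods.

```python
import numpy as np


def train_q_liquidation(q0=8, T=4, eta=1.0, gamma=0.5, sigma=0.0,
                        episodes=10_000, alpha=0.1, epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy liquidation problem (hypothetical environment).

    State (t, q): time step and remaining lots. Action a in {0, ..., q}: lots sold
    with a market order. Reward a * (P - eta * a), where P is the mid-price relative
    to the arrival price, updated as P += sigma * noise - gamma * a.
    All remaining lots are sold at the last step, so liquidation is guaranteed.
    """
    rng = np.random.default_rng(seed)
    Q = np.zeros((T, q0 + 1, q0 + 1))        # Q[t, q, a]; entries with a > q are unused

    def state_value(t, q):
        if t == T - 1:
            return Q[t, q, q]                 # only feasible action: sell everything left
        return Q[t, q, : q + 1].max()

    for _ in range(episodes):
        q, price = q0, 0.0
        for t in range(T):
            if t == T - 1:
                a = q
            elif rng.random() < epsilon:      # epsilon-greedy exploration
                a = int(rng.integers(0, q + 1))
            else:
                a = int(np.argmax(Q[t, q, : q + 1]))
            reward = a * (price - eta * a)    # cash from the sale, net of temporary impact
            price += sigma * rng.standard_normal() - gamma * a
            target = reward if t == T - 1 else reward + state_value(t + 1, q - a)
            Q[t, q, a] += alpha * (target - Q[t, q, a])
            q -= a
    return Q


def greedy_rollout(Q, q0=8, eta=1.0, gamma=0.5):
    """Noise-free rollout of the greedy policy; returns (sales per step, total reward)."""
    T = Q.shape[0]
    q, price, total, sales = q0, 0.0, 0.0, []
    for t in range(T):
        a = q if t == T - 1 else int(np.argmax(Q[t, q, : q + 1]))
        total += a * (price - eta * a)
        price -= gamma * a
        q -= a
        sales.append(a)
    return sales, total
```

Under these assumptions the total cost of a schedule is <math>(\eta-\gamma/2)\sum_t a_t^2+\gamma q_0^2/2</math>, so with the default parameters the best integer schedule is the equal split (2, 2, 2, 2) with total reward −28, while selling everything in a single step yields −64; the greedy policy extracted from the table can be compared against these two numbers.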

We now discuss the RL algorithms and experimental settings of the above papers in more detail.

For value-based algorithms, <ref name="NFK2006"/> provided the first large-scale empirical analysis of an RL method applied to optimal execution problems. They focused on a modified <math>Q</math>-learning algorithm to select price levels for limit order trading, which led to significant reductions in trading costs compared with simpler forms of optimization such as SnL policies. <ref name="SHYO2014"/> proposed a risk-averse RL algorithm for optimal liquidation, which can be viewed as a generalization of <ref name="NFK2006"/>. Over the period of the 2010 flash crash, this algorithm achieved substantially lower trading costs than the risk-neutral RL algorithm in <ref name="NFK2006"/>. <ref name="HW2014"/> combined the Almgren–Chriss solution with the <math>Q</math>-learning algorithm and showed that the combination improves the Implementation Shortfall of the Almgren–Chriss solution by up to 10.3% on average, using LOB data with five price levels. <ref name="NLJ2018"/> proposed a modified double DQN algorithm for optimal execution and showed that it outperforms the TWAP strategy on seven out of nine stocks, using PnL as the performance measure. They added a single one-second time step <math>\Delta T</math> at the end of the horizon to guarantee that all shares are liquidated over <math>T+\Delta T</math>. <ref name="JK2019"/> designed a trading system based on DQN that determines both the trading direction and the number of shares to trade. Their approach was shown to increase total profits by at least a factor of four on four different index stocks, compared with a benchmark model that trades a fixed number of shares each time. They also used ''transfer learning'', in which knowledge acquired in one setting is reused when learning in a related or similar one, to avoid overfitting.
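The double DQN variants mentioned above share one key idea: the bootstrap target decouples action selection from action evaluation. The sketch below computes standard double-DQN targets for a batch, given next-state action values from an online and a target network (here plain arrays; the function name is hypothetical). It shows the generic target only, not the specific modifications introduced in the cited papers.

```python
import numpy as np


def double_dqn_targets(rewards, next_q_online, next_q_target, dones, discount=0.99):
    """Double DQN regression targets for a batch of transitions.

    The online network chooses the next action and the target network evaluates it:
        y = r + discount * Q_target(s', argmax_a Q_online(s', a)),  y = r if terminal.
    Shapes: rewards (B,), next_q_online and next_q_target (B, n_actions), dones (B,).
    """
    rewards = np.asarray(rewards, dtype=float)
    next_q_target = np.asarray(next_q_target, dtype=float)
    best_next = np.argmax(np.asarray(next_q_online), axis=1)       # selection: online net
    evaluated = next_q_target[np.arange(len(rewards)), best_next]  # evaluation: target net
    return rewards + discount * (1.0 - np.asarray(dones, dtype=float)) * evaluated
```

A vanilla DQN target would instead take the maximum of <code>next_q_target</code> over actions, which tends to overestimate action values; using the online network only for the argmax is what reduces this bias.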

For policy-based algorithms, <ref name="Deng2016"/> combined deep learning with RL to determine whether to sell, hold, or buy at each time point. In the first step of their model, neural networks are used to summarize the market features, and in the second step the RL component makes trading decisions. The proposed method was shown to outperform several other deep learning and deep RL models in terms of PnL, total profits, and Sharpe ratio. They recommended the Sharpe ratio rather than total profits as the reward function in practice. <ref name="Ye2020"/> used the DDPG algorithm for optimal execution over a short time horizon (two minutes) and designed a network to extract features from the market data. Experiments on real LOB data with 10 price levels show that the proposed approach significantly outperforms existing methods, including the SnL policy (as a baseline), the <math>Q</math>-learning algorithm, and the method in <ref name="HW2014"/>. <ref name="LB2020"/> proposed an adaptive framework based on PPO with neural networks, including LSTM and fully connected networks, and showed that the framework outperforms baseline models including TWAP and VWAP, as well as several deep RL models, on most of the 14 US equities considered. <ref name="hambly2020policy"/> applied the (vanilla) policy gradient method to LOB data of five stocks in different sectors and showed that it improves the Implementation Shortfall of the Almgren–Chriss solution by around 20%. <ref name="leal2020learning">L. Leal, M. Laurière, and C.-A. Lehalle, ''Learning a functional control for high-frequency finance'', arXiv preprint arXiv:2006.09611, (2020).</ref> used neural networks to learn the mapping between the risk-aversion parameter and the optimal control, with potential market impacts incorporated.
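Vanilla policy gradient methods such as those above rest on the REINFORCE estimator of the policy gradient. The sketch below computes this estimate from one episode for a linear softmax policy over three actions (for example sell, hold and buy, encoded as indices 0, 1 and 2), weighting each log-probability gradient by the discounted reward-to-go and using no baseline. The linear parameterization and the feature vectors are assumptions for illustration; the cited works use neural networks and richer state representations.

```python
import numpy as np


def softmax(z):
    z = z - z.max()                            # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def reinforce_gradient(theta, features, actions, rewards, discount=1.0):
    """REINFORCE estimate of the policy gradient from one episode for a linear
    softmax policy pi(a | x) = softmax(theta @ x)[a].

    theta: (n_actions, n_features), e.g. rows for sell / hold / buy.
    features: (T, n_features) state features; actions: (T,) action indices;
    rewards: (T,) rewards. Each log-probability gradient is weighted by the
    discounted reward-to-go from that step (no baseline).
    """
    grad = np.zeros_like(theta, dtype=float)
    reward_to_go = 0.0
    for t in reversed(range(len(rewards))):
        reward_to_go = rewards[t] + discount * reward_to_go
        x = np.asarray(features[t], dtype=float)
        probs = softmax(theta @ x)
        dlogp = -np.outer(probs, x)            # -pi(b | x) * x for every action row b
        dlogp[actions[t]] += x                 # +x for the action actually taken
        grad += reward_to_go * dlogp
    return grad
```

A gradient-ascent step would then update <code>theta</code> by a learning rate times this estimate, averaged over many simulated or historical episodes.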

For a comparison between value-based and policy-based algorithms, <ref name="DGK2019"/> explored double DQN and PPO in different market environments: when the TWAP benchmark is optimal, PPO is shown to converge to TWAP whereas double DQN may not; when TWAP is not optimal, both algorithms outperform this benchmark. <ref name="ZZR2020"/> showed that DQN, policy gradient, and A2C outperform several baseline models, including classical time-series momentum strategies, on test data of 50 liquid futures contracts. Both continuous and discrete action spaces were considered in their work. They observed that DQN achieves the best performance, followed by A2C.
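The difference between DQN and double DQN lies in the bootstrap target. The sketch below shows the generic double DQN target from the deep RL literature, not the specific implementation used in the works cited above: the online network selects the greedy next action and the target network evaluates it, which reduces the overestimation bias of the standard DQN target. The arrays of Q-values stand in for network outputs and are hypothetical.

```python
import numpy as np


def dqn_targets(rewards, next_q_target, dones, gamma=0.99):
    """Standard DQN target: r + gamma * max_a Q_target(s', a)."""
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)
    next_q_target = np.asarray(next_q_target, dtype=float)
    return rewards + gamma * (1.0 - dones) * next_q_target.max(axis=1)


def double_dqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.99):
    """Double DQN target: r + gamma * Q_target(s', argmax_a Q_online(s', a))."""
    rewards = np.asarray(rewards, dtype=float)
    dones = np.asarray(dones, dtype=float)
    next_q_online = np.asarray(next_q_online, dtype=float)
    next_q_target = np.asarray(next_q_target, dtype=float)
    greedy = next_q_online.argmax(axis=1)  # action chosen by the online network
    evaluated = next_q_target[np.arange(len(rewards)), greedy]  # valued by the target network
    return rewards + gamma * (1.0 - dones) * evaluated


# Usage: one non-terminal transition with two actions (e.g. two child-order sizes).
q_online = [[1.0, 2.0]]
q_target = [[3.0, 0.5]]
print(dqn_targets([0.0], q_target, [0.0], gamma=0.9))
print(double_dqn_targets([0.0], q_online, q_target, [0.0], gamma=0.9))
```

Here the standard target bootstraps from the largest target-network value (2.7), while double DQN uses the target network's value of the action preferred by the online network (0.45).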

In addition, model-based RL algorithms have been used for optimal execution. <ref name="WWMD2019"/> built a profitable electronic trading agent that places buy and sell orders using model-based RL and outperforms two benchmark strategies in terms of PnL on LOB data. They used a recurrent neural network to learn the state transition probability. Multi-agent RL has also been used to address the optimal execution problem; see, for example, <ref name="BL2019">W. Bao and X.-Y. Liu, ''Multi-agent deep reinforcement learning for liquidation strategy analysis'', arXiv preprint arXiv:1906.11046, (2019).</ref><ref name="KFMW2020">M. Karpe, J. Fang, Z. Ma, and C. Wang, ''Multi-agent reinforcement learning in a realistic limit order book market simulation'', in Proceedings of the First ACM International Conference on AI in Finance, ICAIF '20, 2020.</ref>.

===<span id="sec:portfolio_optimization"></span>Portfolio Optimization===

In portfolio optimization problems, a trader needs to select and trade the best portfolio of assets in order to maximize some objective function, which typically includes the expected return and some measure of the risk. The benefit of investing in such portfolios is that diversification can achieve a higher return per unit of risk than investing in a single asset (see, e.g., <ref name="zivot2017introduction">E. Zivot, ''Introduction to Computational Finance and Financial Econometrics'', Chapman & Hall/CRC, 2017.</ref>).
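The diversification effect can be checked with a two-asset example. The Python sketch below uses hypothetical parameters (each asset with an expected return of 8% and a volatility of 20%) and compares the return per unit of risk, that is, expected return divided by standard deviation, of a single asset with that of an equal-weight portfolio for several correlation values.

```python
import numpy as np


def portfolio_mean_and_vol(weights, mu, cov):
    """Expected return w^T mu and standard deviation sqrt(w^T Sigma w)."""
    w = np.asarray(weights, dtype=float)
    return float(w @ mu), float(np.sqrt(w @ cov @ w))


def two_asset_cov(sigma1, sigma2, rho):
    """Covariance matrix of two assets with volatilities sigma1, sigma2 and correlation rho."""
    return np.array([[sigma1**2, rho * sigma1 * sigma2],
                     [rho * sigma1 * sigma2, sigma2**2]])


mu = np.array([0.08, 0.08])   # hypothetical expected returns
single_ratio = 0.08 / 0.20    # return per unit of risk of either asset alone
ratios = {}
for rho in (0.0, 0.5, 1.0):
    mean, vol = portfolio_mean_and_vol([0.5, 0.5], mu, two_asset_cov(0.2, 0.2, rho))
    ratios[rho] = mean / vol
    print(f"rho={rho}: portfolio {ratios[rho]:.3f} vs single asset {single_ratio:.3f}")
```

The equal-weight portfolio keeps the same expected return while its volatility falls as the correlation decreases, so the benefit shrinks as the correlation approaches 1 and disappears at correlation 1.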

'''Mean-Variance Portfolio Optimization.'''

The first significant mathematical model for portfolio optimization is the ''Markowitz'' model <ref name="Markowitz1952">H.M. Markowitz, ''Portfolio selection'', Journal of Finance, 7 (1952), pp. 77–91.</ref>, also called the ''mean-variance'' model, in which an investor seeks a portfolio that maximizes the expected total return for a given level of risk, measured by variance. This single-period optimization problem was later generalized to multi-period portfolio optimization problems in <ref name="mossin1968optimal">J. Mossin, ''Optimal multiperiod portfolio policies'', The Journal of Business, 41 (1968), pp. 215–229.</ref><ref name="hakansson1971multi">N.H. Hakansson, ''Multi-period mean-variance analysis: Toward a general theory of portfolio choice'', The Journal of Finance, 26 (1971), pp. 857–884.</ref><ref name="samuelson1975lifetime">P.A. Samuelson, ''Lifetime portfolio selection by dynamic stochastic programming'', Stochastic Optimization Models in Finance, (1975), pp. 517–524.</ref><ref name="merton1974">R.C. Merton and P.A. Samuelson, ''Fallacy of the log-normal approximation to optimal portfolio decision-making over many periods'', Journal of Financial Economics, 1 (1974), pp. 67–94.</ref><ref name="steinbach1999markowitz">M. Steinbach, ''Markowitz revisited: Mean-variance models in financial portfolio analysis'', SIAM Review, 43 (2001), pp. 31–85.</ref><ref name="li2000multiperiodMV">D. Li and W.-L. Ng, ''Optimal dynamic portfolio selection: Multiperiod mean-variance formulation'', Mathematical Finance, 10 (2000), pp. 387–406.</ref>.
In this mean-variance framework, the risk of a portfolio is quantified by the variance of the wealth, and the optimal investment strategy is sought to maximize the expected final wealth penalized by a variance term. The mean-variance framework is of particular interest: it captures both the portfolio return and the risk, but it also suffers from the ''time-inconsistency'' problem <ref name="strotz1955myopia">R.H. Strotz, ''Myopia and inconsistency in dynamic utility maximization'', The Review of Economic Studies, 23 (1955), pp. 165–180.</ref><ref name="vigna2016time">E. Vigna, ''On time consistency for mean-variance portfolio selection'', Collegio Carlo Alberto Notebook, 476 (2016).</ref><ref name="xiao2020corre">H. Xiao, Z. Zhou, T. Ren, Y. Bai, and W. Liu, ''Time-consistent strategies for multi-period mean-variance portfolio optimization with the serially correlated returns'', Communications in Statistics-Theory and Methods, 49 (2020), pp. 2831–2868.</ref>. That is, a strategy that is optimal when selected at time <math>t</math> may no longer be optimal at a later time <math>s > t</math>, so the Bellman equation does not hold. A breakthrough came in <ref name="li2000multiperiodMV"/>, which was the first to derive an analytical solution to the discrete-time multi-period mean-variance problem. The authors applied an ''embedding'' approach, which transforms the mean-variance problem into a linear-quadratic (LQ) problem that can be solved with classical methods. The same approach was then used to solve the continuous-time mean-variance problem <ref name="zhou2000continuous">X.Y. Zhou and D. Li, ''Continuous-time mean-variance portfolio selection: A stochastic LQ framework'', Applied Mathematics and Optimization, 42 (2000), pp. 19–33.</ref>.
In addition to the embedding scheme, other methods, including the consistent planning approach <ref name="basak2010dynamic">S. Basak and G. Chabakauri, ''Dynamic mean-variance asset allocation'', The Review of Financial Studies, 23 (2010), pp. 2970–3016.</ref><ref name="bjork2010general">T. Björk and A. Murgoci, ''A general theory of Markovian time inconsistent stochastic control problems'', Available at SSRN 1694759, (2010).</ref> and the dynamically optimal strategy <ref name="pedersen2017optimal">J.L. Pedersen and G. Peskir, ''Optimal mean-variance portfolio selection'', Mathematics and Financial Economics, 11 (2017), pp. 137–160.</ref>, have also been applied to the time-inconsistency problem arising in the mean-variance formulation of portfolio optimization.
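As an illustration of the single-period Markowitz problem, the sketch below solves the common risk-aversion form: maximize <math>\pmb{w}^\top\pmb{\mu} - \frac{\lambda}{2}\pmb{w}^\top\pmb{\Sigma}\pmb{w}</math> subject to the budget constraint <math>\mathbf{1}^\top\pmb{w}=1</math>, with short selling allowed. This penalized form is our choice for the example rather than the "maximize return at a given variance" statement above; each value <math>\lambda > 0</math> selects one portfolio on the efficient frontier. The inputs are hypothetical.

```python
import numpy as np


def mean_variance_weights(mu, cov, risk_aversion):
    """Solve max_w  w @ mu - (risk_aversion / 2) * w @ cov @ w  s.t.  sum(w) = 1.

    Short positions are allowed, so the first-order conditions of the Lagrangian,
        mu - risk_aversion * cov @ w = gamma * 1,
    give w = cov^{-1} (mu - gamma * 1) / risk_aversion, where the multiplier
    gamma is chosen so that the weights sum to one.
    """
    mu = np.asarray(mu, dtype=float)
    cov = np.asarray(cov, dtype=float)
    ones = np.ones_like(mu)
    inv_mu = np.linalg.solve(cov, mu)      # cov^{-1} mu
    inv_ones = np.linalg.solve(cov, ones)  # cov^{-1} 1
    gamma = (ones @ inv_mu - risk_aversion) / (ones @ inv_ones)
    return (inv_mu - gamma * inv_ones) / risk_aversion


# Usage with hypothetical inputs: three uncorrelated assets.
mu = np.array([0.05, 0.08, 0.12])
cov = np.diag([0.01, 0.04, 0.09])
for lam in (1.0, 3.0, 10.0):
    w = mean_variance_weights(mu, cov, lam)
    print(lam, np.round(w, 3), "mean", round(float(w @ mu), 4), "var", round(float(w @ cov @ w), 4))
```

Larger values of <code>risk_aversion</code> move the solution toward the minimum-variance portfolio; smaller values tilt it toward higher-return assets, possibly with short positions.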

Here we introduce the multi-period mean-variance portfolio optimization problem as given in <ref name="li2000multiperiodMV"/>. Suppose there are <math>n</math> risky assets in the market and an investor enters the market at time 0 with initial wealth <math>x_0</math>. The goal of the investor is to reallocate their wealth among the <math>n</math> assets at each time point <math>t=0,1,\ldots,T-1</math> to achieve the optimal trade-off between the return and the risk of the investment. The random rates of return of the assets at time <math>t</math> are denoted by <math>\pmb{e}_t =(e_t^1,\ldots,e_t^n)^\top</math>, where <math>e_t^i</math> (<math>i=1,\ldots,n</math>) is the rate of return of the <math>i</math>-th asset at time <math>t</math>. The vectors <math>\pmb{e}_t</math>, <math>t=0,1,\ldots,T-1</math>, are assumed to be statistically independent (this independence assumption can be relaxed; see, e.g., <ref name="xiao2020corre"/>) with known mean <math>\pmb{\mu}_t=(\mu_t^1,\ldots,\mu_t^n)^\top\in\mathbb{R}^{n}</math> and known standard deviations <math>\sigma_{t}^i</math> for <math>i=1,\ldots,n</math> and <math>t=0,\ldots,T-1</math>. The covariance matrix is denoted by <math>\pmb{\Sigma}_t\in\mathbb{R}^{n\times n}</math>, where <math>[\pmb{\Sigma}_{t}]_{ii}=(\sigma_{t}^i)^2</math> and <math>[\pmb{\Sigma}_t]_{ij}=\rho_{t}^{ij}\sigma_{t}^i\sigma_{t}^j</math> for <math>i,j=1,\ldots,n</math> and <math>i\neq j</math>, where <math>\rho_{t}^{ij}</math> is the correlation between assets <math>i</math> and <math>j</math> at time <math>t</math>.
We write <math>x_t</math> for the wealth of the investor at time <math>t</math> and <math>u_t^i</math> (<math>i=1,\ldots,n-1</math>) for the amount invested in the <math>i</math>-th asset at time <math>t</math>. Thus the amount invested in the <math>n</math>-th asset is <math>x_t-\sum_{i=1}^{n-1} u_t^i</math>. An investment strategy is denoted by <math>\pmb{u}_t= (u_t^1,u_t^2,\ldots,u_t^{n-1})^\top</math> for <math>t=0,1,\ldots,T-1</math>, and the goal is to find a strategy that maximizes the portfolio return while minimizing the risk of the investment, that is,


</math>

where <math>\alpha_t</math> and <math>\beta_t</math> are explicit functions of <math>\pmb{\mu}_t</math> and <math>\pmb{\Sigma}_t</math>, which are omitted here and can be found in <ref name="li2000multiperiodMV"/>.
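The following Python sketch makes the multi-period setup concrete. It builds <math>\pmb{\Sigma}_t</math> from the standard deviations <math>\sigma_t^i</math> and correlations <math>\rho_t^{ij}</math> as defined above, and simulates the wealth recursion <math>x_{t+1} = \sum_{i=1}^{n-1} e_t^i u_t^i + e_t^n\big(x_t - \sum_{i=1}^{n-1} u_t^i\big)</math>, which follows from the definitions if each <math>e_t^i</math> is read as a gross return (wealth multiplier). Several ingredients are assumptions made only for illustration: returns are drawn from a multivariate normal distribution, the strategy invests fixed fractions of current wealth (it is not the optimal policy of <ref name="li2000multiperiodMV"/>), and the score <math>\mathbb{E}[x_T] - \omega\,\mathrm{Var}(x_T)</math> with a hypothetical weight <math>\omega</math> stands in for a mean-variance objective.

```python
import numpy as np


def covariance_from_vol_corr(sigma, rho):
    """[Sigma]_ii = sigma_i^2 and [Sigma]_ij = rho_ij * sigma_i * sigma_j (rho_ii = 1)."""
    sigma = np.asarray(sigma, dtype=float)
    return np.outer(sigma, sigma) * np.asarray(rho, dtype=float)


def simulate_terminal_wealth(x0, mu, cov, fractions, n_paths=100_000, seed=0):
    """Monte Carlo samples of x_T under a fixed-fraction strategy.

    mu:  (T, n) mean gross returns E[e_t];  cov: (T, n, n) covariances Sigma_t.
    fractions: (n-1,) share of current wealth put in assets 1..n-1, i.e.
    u_t = fractions * x_t; asset n receives the remainder x_t - sum(u_t).
    Assumption: e_t is multivariate normal and independent across t.
    """
    rng = np.random.default_rng(seed)
    mu = np.asarray(mu, dtype=float)
    f = np.asarray(fractions, dtype=float)
    x = np.full(n_paths, float(x0))
    for t in range(mu.shape[0]):
        e = rng.multivariate_normal(mu[t], cov[t], size=n_paths)  # (n_paths, n)
        u = np.outer(x, f)                                          # (n_paths, n-1)
        x = (u * e[:, :-1]).sum(axis=1) + (x - u.sum(axis=1)) * e[:, -1]
    return x


# Usage with hypothetical parameters: T = 3 periods, n = 2 assets.
T = 3
mu = np.tile([1.05, 1.02], (T, 1))
cov_t = covariance_from_vol_corr([0.20, 0.05], [[1.0, 0.3], [0.3, 1.0]])
cov = np.stack([cov_t] * T)
x_T = simulate_terminal_wealth(1.0, mu, cov, fractions=[0.6])
omega = 0.5  # hypothetical risk-aversion weight
print("E[x_T] ~", x_T.mean(), "Var(x_T) ~", x_T.var(), "score ~", x_T.mean() - omega * x_T.var())
```

Because the returns are independent across periods and the strategy is proportional to wealth, the simulated mean should be close to <math>(0.6 \cdot 1.05 + 0.4 \cdot 1.02)^3</math>; changing <code>fractions</code> trades expected terminal wealth against its variance.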

The above framework has been extended in different ways; for example, a risk-free asset can be included in the portfolio, and one can maximize the cumulative form of \eqref{eqn:MV_obj} rather than focusing only on the final wealth <math>x_T</math>. For more details about these variants and their solutions, see <ref name="xiao2020corre"/>. In addition to the mean-variance framework, other major paradigms in portfolio optimization are the Kelly Criterion and Risk Parity. We refer to <ref name="Sato2019Survey">Y. Sato, ''Model-free reinforcement learning for financial portfolios: A brief survey'', arXiv preprint arXiv:1904.04973, (2019).</ref> for a review of these optimal control frameworks and of popular model-free RL algorithms for portfolio optimization.

Note that the classical stochastic control approach to portfolio optimization across multiple assets requires both a realistic representation of the temporal dynamics of individual assets and an adequate representation of their co-movements. This is extremely difficult when the assets belong to different classes (for example, stocks, options, futures, interest rates and their derivatives). In contrast, the model-free RL approach does not rely on a specification of the joint dynamics across assets.

'''RL Approach.''' Both value-based methods, such as <math>Q</math>-learning <ref name="du2016algorithm">X. Du, J. Zhai, and K. Lv, ''Algorithm trading using Q-learning and recurrent reinforcement learning'', Positions, 1 (2016), p. 1.</ref><ref name="pendharkar2018trading">P.C. Pendharkar and P. Cusatis, ''Trading financial indices with reinforcement learning agents'', Expert Systems with Applications, 103 (2018), pp. 1–13.</ref>, SARSA <ref name="pendharkar2018trading"/>, and DQN <ref name="PSC2020">H. Park, M.K. Sim, and D.G. Choi, ''An intelligent financial portfolio trading strategy using deep Q-learning'', Expert Systems with Applications, 158 (2020), p. 113573.</ref>, and policy-based algorithms, such as DPG and DDPG <ref name="xiong2018practical">Z. Xiong, X.-Y. Liu, S. Zhong, H. Yang, and A. Walid, ''Practical deep reinforcement learning approach for stock trading'', arXiv preprint arXiv:1811.07522, (2018).</ref><ref name="JXL2017">Z. Jiang, D. Xu, and J. Liang, ''A deep reinforcement learning framework for the financial portfolio management problem'', arXiv preprint arXiv:1706.10059, (2017).</ref><ref name="YLKSD2019">P. Yu, J.S. Lee, I. Kulyatin, Z. Shi, and S. Dasgupta, ''Model-based deep reinforcement learning for dynamic portfolio optimization'', arXiv preprint arXiv:1901.08740, (2019).</ref><ref name="liang2018adversarial">Z. Liang, H. Chen, J. Zhu, K. Jiang, and Y. Li, ''Adversarial deep reinforcement learning in portfolio management'', arXiv preprint arXiv:1808.09940, (2018).</ref><ref name="aboussalah2020value">A.M. Aboussalah, ''What is the value of the cross-sectional approach to deep reinforcement learning?'', Available at SSRN, (2020).</ref><ref name="cong2021alphaportfolio">L.W. Cong, K. Tang, J. Wang, and Y. Zhang, ''AlphaPortfolio: Direct construction through deep reinforcement learning and interpretable AI'', SSRN Electronic Journal, (2021), doi:10.2139/ssrn.3554486.</ref>, have been applied to portfolio optimization problems. The state variables are often composed of time, asset prices, past asset returns, current holdings of assets, and the remaining balance. The control variables are typically the amount or proportion of wealth invested in each component of the portfolio. Examples of reward signals include portfolio return <ref name="JXL2017"/><ref name="pendharkar2018trading"/><ref name="YLKSD2019"/>, the (differential) Sharpe ratio <ref name="du2016algorithm"/><ref name="pendharkar2018trading"/>, and profit <ref name="du2016algorithm"/>.
The benchmark strategies include the Constantly Rebalanced Portfolio (CRP) <ref name="YLKSD2019"/><ref name="JXL2017"/>, in which the portfolio is rebalanced at each period to the initial proportions of wealth across the assets, and the buy-and-hold (do-nothing) strategy <ref name="PSC2020"/><ref name="aboussalah2020value"/>, which takes no action and holds the initial portfolio until the end. The performance measures studied in these papers include the Sharpe ratio <ref name="YLKSD2019"/><ref name="WZ2019">H. Wang and X.Y. Zhou, ''Continuous-time mean–variance portfolio selection: A reinforcement learning framework'', Mathematical Finance, 30 (2020), pp. 1273–1308.</ref><ref name="xiong2018practical"/><ref name="JXL2017"/><ref name="liang2018adversarial"/><ref name="PSC2020"/><ref name="wang2019">H. Wang, ''Large scale continuous-time mean-variance portfolio allocation via reinforcement learning'', Available at SSRN 3428125, (2019).</ref>, the Sortino ratio <ref name="YLKSD2019"/>, portfolio returns <ref name="wang2019"/><ref name="xiong2018practical"/><ref name="liang2018adversarial"/><ref name="PSC2020"/><ref name="YLKSD2019"/><ref name="aboussalah2020value"/>, portfolio values <ref name="JXL2017"/><ref name="xiong2018practical"/><ref name="pendharkar2018trading"/>, and cumulative profits <ref name="du2016algorithm"/>. Some models incorporate transaction costs <ref name="JXL2017"/><ref name="liang2018adversarial"/><ref name="du2016algorithm"/><ref name="PSC2020"/><ref name="YLKSD2019"/><ref name="aboussalah2020value"/> and investments in the risk-free asset <ref name="YLKSD2019"/><ref name="WZ2019"/><ref name="wang2019"/><ref name="du2016algorithm"/><ref name="JXL2017"/>.
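The two benchmark strategies and two of the performance measures can be sketched in a few lines of Python. The sketch assumes a matrix of price relatives <code>y[t, i]</code> (the price of asset <code>i</code> at the end of period <code>t</code> divided by its price at the start), ignores transaction costs, and computes per-period (not annualized) Sharpe and Sortino ratios with a zero benchmark rate by default. Conventions for the Sortino downside deviation vary, and the one used here is an assumption; the function names are hypothetical.

```python
import numpy as np


def crp_wealth(y, weights, w0=1.0):
    """Constantly Rebalanced Portfolio: restore the initial weights every period."""
    growth = np.asarray(y, dtype=float) @ np.asarray(weights, dtype=float)
    return w0 * np.cumprod(growth)


def buy_and_hold_wealth(y, weights, w0=1.0):
    """Buy-and-hold: invest with the initial weights once and never trade again."""
    asset_growth = np.cumprod(np.asarray(y, dtype=float), axis=0)
    return w0 * asset_growth @ np.asarray(weights, dtype=float)


def sharpe_ratio(returns, risk_free=0.0):
    """Per-period Sharpe ratio: mean excess return over its sample standard deviation."""
    excess = np.asarray(returns, dtype=float) - risk_free
    return excess.mean() / excess.std(ddof=1)


def sortino_ratio(returns, target=0.0):
    """Per-period Sortino ratio using the downside deviation below `target`.

    Undefined (division by zero) if no return falls below the target.
    """
    excess = np.asarray(returns, dtype=float) - target
    downside_dev = np.sqrt(np.mean(np.minimum(excess, 0.0) ** 2))
    return excess.mean() / downside_dev


# Usage: a volatile asset that doubles and then halves, plus cash (price relative 1).
y = np.array([[2.0, 1.0], [0.5, 1.0]])
print(crp_wealth(y, [0.5, 0.5]))
print(buy_and_hold_wealth(y, [0.5, 0.5]))
```

In this example the CRP ends with wealth 1.125 while buy-and-hold returns to 1.0: rebalancing sells part of the risky asset after it rises and buys more after it falls.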

For value-based algorithms, <ref name="du2016algorithm"/> considered portfolio optimization with one risky asset and one risk-free asset. They compared the performance of the <math>Q</math>-learning algorithm and a Recurrent RL (RRL) algorithm under three different value functions: the Sharpe ratio, the differential Sharpe ratio, and profit. The RRL algorithm is a policy-based method that uses the last action as an input. They concluded that the <math>Q</math>-learning algorithm is more sensitive to the choice of value function and performs less stably than the RRL algorithm. They also recommended the (differential) Sharpe ratio rather than profit as the reward function. <ref name="pendharkar2018trading"/> studied a two-asset personal retirement portfolio optimization problem, in which traders who manage retirement funds are restricted from trading frequently and can access only limited information. They tested three algorithms: SARSA and <math>Q</math>-learning methods with discrete state and action spaces that maximize either the portfolio return or the differential Sharpe ratio, and a TD learning method with a discrete state space and a continuous action space that maximizes the portfolio return. The TD method learns the portfolio returns using a linear regression model and was shown to outperform the other two methods in terms of portfolio values. <ref name="PSC2020"/> proposed a portfolio trading strategy based on DQN which chooses to hold, buy, or sell a pre-specified quantity of the asset at each time point.
In their experiments with two different three-asset portfolios, their trading strategy outperformed four benchmark strategies, including the do-nothing strategy and a random strategy (which takes a random action in the feasible space), in terms of performance measures including the cumulative return and the Sharpe ratio. <ref name="dixon2020g">M. Dixon and I. Halperin, ''G-learner and GIRL: Goal based wealth management with reinforcement learning'', arXiv preprint arXiv:2002.10990, (2020).</ref> applied a G-learning-based algorithm to wealth management problems; G-learning is a probabilistic extension of <math>Q</math>-learning that scales to high-dimensional portfolios while allowing a flexible choice of utility functions. The authors also extended the G-learning algorithm to the setting of Inverse Reinforcement Learning (IRL), where the rewards collected by the agent are not observed and must instead be inferred.
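The differential Sharpe ratio used as a reward in several of these works can be computed online. The sketch below follows the standard definition of Moody and Saffell: exponential moving estimates <math>A_t</math> and <math>B_t</math> of the first and second moments of the returns <math>R_t</math> are updated with an adaptation rate <math>\eta</math>, and <math>D_t</math> is the first-order sensitivity of the moving-average Sharpe ratio to <math>\eta</math>. The initial values and the value of <math>\eta</math> are hypothetical choices, and the cited papers may differ in such details.

```python
import numpy as np


def differential_sharpe_ratios(returns, eta=0.01, a0=0.0, b0=1e-4):
    """Differential Sharpe ratio D_t for each return R_t in `returns`.

    A_t = A_{t-1} + eta * dA_t,  dA_t = R_t - A_{t-1}
    B_t = B_{t-1} + eta * dB_t,  dB_t = R_t**2 - B_{t-1}
    D_t = (B_{t-1} * dA_t - 0.5 * A_{t-1} * dB_t) / (B_{t-1} - A_{t-1}**2) ** 1.5
    Requires b0 > a0**2 so that the variance estimate starts positive.
    """
    a, b = float(a0), float(b0)
    out = []
    for r in returns:
        da, db = r - a, r * r - b
        out.append((b * da - 0.5 * a * db) / (b - a * a) ** 1.5)
        a, b = a + eta * da, b + eta * db  # update the moving moment estimates
    return np.array(out)


# Usage: per-step rewards for a short, hypothetical sequence of portfolio returns.
print(differential_sharpe_ratios([0.01, -0.005, 0.02, 0.0]))
```

Each <math>D_t</math> depends only on the current return and the running estimates, which is what makes it usable as an incremental reward inside an RL loop.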

For policy-based algorithms, <ref name="JXL2017"/> proposed a framework combining neural networks with DPG. They used a so-called Ensemble of Identical Independent Evaluators (EIIE) topology to predict the potential growth of the assets in the immediate future using historical data, including the highest, lowest, and closing prices of the portfolio components. Experiments on real cryptocurrency market data showed that their framework achieves a higher Sharpe ratio and higher cumulative portfolio values than three benchmarks, including CRP and several published RL models. <ref name="xiong2018practical"/> explored the DDPG algorithm for the portfolio selection of 30 stocks, where at each time point the agent can choose to buy, sell, or hold each stock. Using historical daily prices of the 30 stocks, the DDPG algorithm was shown to outperform two classical strategies, including the min-variance portfolio allocation method <ref name="yang2018practical">H. Yang, X.-Y. Liu, and Q. Wu, ''A practical machine learning approach for dynamic stock recommendation'', in 2018 17th IEEE International Conference On Trust, Security And Privacy In Computing And Communications/12th IEEE International Conference On Big Data Science And Engineering (TrustCom/BigDataSE), IEEE, 2018, pp. 1693–1697.</ref>, in terms of several performance measures, including final portfolio value, annualized return, and Sharpe ratio. <ref name="liang2018adversarial"/> considered DDPG, PPO, and a policy gradient method with an adversarial learning scheme that learns the execution strategy from noisy market data. They applied these algorithms to portfolio optimization over five stocks using market data; the policy gradient method with the adversarial learning scheme achieved a higher daily return and Sharpe ratio than the benchmark CRP strategy. They also showed that the DDPG and PPO algorithms fail to find the optimal policy even on the training set. <ref name="YLKSD2019"/> proposed a model using DDPG that includes prediction of the assets' future price movements based on historical prices and synthetic market data generation using a ''Generative Adversarial Network'' (GAN) <ref name="goodfellow2014GAN">I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, ''Generative adversarial nets'', Advances in Neural Information Processing Systems, 27 (2014).</ref>. The model outperforms the benchmark CRP and the model of <ref name="JXL2017"/> in terms of several performance measures, including the Sharpe ratio and Sortino ratio. <ref name="cong2021alphaportfolio"/> embedded alpha portfolio strategies into a deep policy-based method and designed a framework that is easier to interpret.
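Several of the comparisons above are stated in terms of cumulative portfolio value, Sharpe ratio, and Sortino ratio relative to the constant rebalanced portfolio (CRP). The following is a minimal sketch of how these quantities might be computed from per-period price relatives (next-period price divided by current price). It assumes no transaction costs, a zero risk-free rate or target by default, per-period (not annualized) ratios, and one common definition of downside deviation; the function names are hypothetical and not taken from any of the cited works.

```python
import math
import statistics


def crp_backtest(price_relatives, weights):
    """Constant rebalanced portfolio: rebalance to fixed `weights` every period.

    price_relatives[t][i] is the price of asset i at t+1 divided by its price at t.
    Returns the list of per-period simple portfolio returns (no transaction costs).
    """
    assert abs(sum(weights) - 1.0) < 1e-9, "weights must sum to one"
    returns = []
    for relatives in price_relatives:
        # One-period growth factor of a portfolio held at the fixed weights.
        growth = sum(w * x for w, x in zip(weights, relatives))
        returns.append(growth - 1.0)
    return returns


def cumulative_value(returns, initial=1.0):
    """Compound the per-period returns into a terminal portfolio value."""
    value = initial
    for r in returns:
        value *= 1.0 + r
    return value


def sharpe_ratio(returns, risk_free=0.0):
    """Mean excess return divided by its sample standard deviation."""
    excess = [r - risk_free for r in returns]
    return statistics.mean(excess) / statistics.stdev(excess)


def sortino_ratio(returns, target=0.0):
    """Mean excess return divided by the downside deviation.

    Downside deviation here is the root mean square of the negative part of the
    excess returns; it is zero (division error) if no return falls below target.
    """
    excess = [r - target for r in returns]
    downside = math.sqrt(sum(min(e, 0.0) ** 2 for e in excess) / len(excess))
    return statistics.mean(excess) / downside


# Usage: two assets over four periods, equally weighted CRP.
relatives = [[1.02, 0.99], [0.98, 1.03], [1.05, 1.00], [0.97, 1.01]]
rets = crp_backtest(relatives, [0.5, 0.5])
final_value = cumulative_value(rets)
sharpe = sharpe_ratio(rets)
sortino = sortino_ratio(rets)
```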

Using Actor-Critic methods, <ref name="aboussalah2020value"/> combined the mean-variance framework (the actor determines the policy using the mean-variance framework) and the Kelly criterion framework (the critic evaluates the policy by its growth rate). They studied eight policy-based algorithms, including DPG, DDPG, and PPO, among which DPG was shown to achieve the best performance.
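To make the two criteria concrete, the sketch below scores a fixed allocation in two ways: by a mean-variance objective <math>\mathbb{E}[R_p]-\tfrac{\gamma}{2}\mathrm{Var}[R_p]</math>, in the role of the actor, and by the Kelly growth rate <math>\mathbb{E}[\log(1+R_p)]</math>, in the role of the critic. It is only an illustration under stated assumptions (equally likely return scenarios, a hypothetical parameter <code>risk_aversion</code> playing the role of <math>\gamma</math>, and illustrative function names), not the algorithm of the cited work.

```python
import math


def portfolio_returns(weights, scenarios):
    """Simple portfolio return in each scenario; scenarios[k][i] is asset i's return."""
    return [sum(w * x for w, x in zip(weights, scenario)) for scenario in scenarios]


def mean_variance_score(weights, scenarios, risk_aversion):
    """E[R_p] - (risk_aversion / 2) * Var[R_p] over equally likely scenarios."""
    rets = portfolio_returns(weights, scenarios)
    mean = sum(rets) / len(rets)
    var = sum((x - mean) ** 2 for x in rets) / len(rets)
    return mean - 0.5 * risk_aversion * var


def kelly_growth_rate(weights, scenarios):
    """Expected log growth E[log(1 + R_p)]; requires 1 + R_p > 0 in every scenario."""
    rets = portfolio_returns(weights, scenarios)
    return sum(math.log1p(x) for x in rets) / len(rets)


# Usage: one risky asset (+10% or -5%, equally likely) and cash earning 0%.
scenarios = [[0.10, 0.0], [-0.05, 0.0]]
grid = [i / 10 for i in range(61)]  # fraction held in the risky asset, 0.0 to 6.0
best_mv = max(grid, key=lambda w: mean_variance_score([w, 1 - w], scenarios, 2.0))
best_kelly = max(grid, key=lambda w: kelly_growth_rate([w, 1 - w], scenarios))
```

On this toy example the grid maximizer is 2.2 for the mean-variance score with risk aversion 2 and 5.0 (a leveraged position) for the Kelly growth rate, so the two criteria can disagree considerably.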

In addition to the above discrete-time models, <ref name="WZ2019"/> studied the entropy-regularized continuous-time mean-variance framework with one risky asset and one risk-free asset. They proved that the optimal policy is Gaussian with decaying variance and proposed an Exploratory Mean-Variance (EMV) algorithm, which consists of three procedures: policy evaluation, policy improvement, and a self-correcting scheme for learning the Lagrange multiplier. In their simulations, the EMV algorithm outperformed two benchmarks, namely the analytical solution with model parameters estimated by the classical maximum likelihood method <ref name="campbell2012econometrics">J. Y. Campbell, A. W. Lo, and A. C. MacKinlay, ''The econometrics of financial markets'', Princeton University Press, 1997.</ref>{{rp|at=Section 9.3.2}} and a DDPG algorithm, in terms of the annualized sample mean and sample variance of the terminal wealth and the Sharpe ratio. The continuous-time framework of <ref name="WZ2019"/> was then generalized in <ref name="wang2019"/> to the large-scale portfolio selection setting with <math>d</math> risky assets and one risk-free asset, where the optimal policy is shown to be multivariate Gaussian with decaying variance. They tested the generalized EMV algorithm on price data from stocks in the S&P 500 with <math>d\geq 20</math>, and it outperformed several algorithms, including DDPG.
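The structure of the EMV algorithm can be illustrated with a simplified sketch. We assume discounted wealth <math>x</math> with dynamics <math>dx_t=\sigma u_t(\rho\,dt+dW_t)</math>, where <math>u_t</math> is the dollar amount in the risky asset and <math>\rho=(\mu-r)/\sigma</math>, and a Gaussian policy with mean <math>-(\rho/\sigma)(x-w)</math> and variance <math>\frac{\lambda}{2\sigma^2}e^{\rho^2(T-t)}</math>, which is the form reported in <ref name="WZ2019"/> as we understand it and should be checked against that paper. Only the self-correcting update of the Lagrange multiplier <math>w</math> towards a target terminal mean <math>z</math> is shown; policy evaluation and policy improvement are omitted, and all function names and parameter values are hypothetical.

```python
import math
import random


def policy_mean_var(t, x, w, rho, sigma, lam, T):
    """Assumed Gaussian exploratory policy for the dollar amount u in the risky asset.

    The mean -(rho/sigma)(x - w) is linear in wealth x; the variance
    lam / (2 sigma^2) * exp(rho^2 (T - t)) decays as t approaches T.
    """
    mean = -(rho / sigma) * (x - w)
    var = lam / (2.0 * sigma ** 2) * math.exp(rho ** 2 * (T - t))
    return mean, var


def terminal_wealth(w, x0, rho, sigma, lam, T, n_steps, rng):
    """Euler scheme for discounted wealth dx = sigma * u * (rho dt + dW)."""
    dt = T / n_steps
    x = x0
    for k in range(n_steps):
        mean, var = policy_mean_var(k * dt, x, w, rho, sigma, lam, T)
        u = rng.gauss(mean, math.sqrt(var))  # explore: sample the action
        x += sigma * u * (rho * dt + math.sqrt(dt) * rng.gauss(0.0, 1.0))
    return x


def learn_multiplier(z, x0, rho, sigma, lam, T, n_steps=50, n_episodes=500,
                     n_iters=20, lr=1.0, seed=0):
    """Self-correcting update w <- w - lr * (sample mean of x_T - z)."""
    rng = random.Random(seed)
    w = x0  # initial guess for the Lagrange multiplier
    for _ in range(n_iters):
        batch = [terminal_wealth(w, x0, rho, sigma, lam, T, n_steps, rng)
                 for _ in range(n_episodes)]
        w -= lr * (sum(batch) / n_episodes - z)
    return w


def multiplier_fixed_point(z, x0, rho, T):
    """Value of w at which E[x_T] = z under the mean dynamics of this policy."""
    a = math.exp(rho ** 2 * T)
    return (z * a - x0) / (a - 1.0)
```

Under this policy the mean wealth satisfies <math>\mathbb{E}[x_T]=w+(x_0-w)e^{-\rho^2T}</math>, so the update contracts towards the value returned by <code>multiplier_fixed_point</code>; the exploration variance affects only the noise in the estimate of <math>\mathbb{E}[x_T]</math>.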

===Option Pricing and Hedging===

Understanding how to price and hedge financial derivatives is a cornerstone of modern mathematical and computational finance due to its importance in the finance industry. A financial derivative is a contract that derives its value from the performance of an underlying entity. For example, a call or put ''option'' is a contract that gives the holder the right, but not the obligation, to buy or sell, respectively, an underlying asset or instrument at a specified strike price on or before a specified date called the expiration date. Common option types include European options, which can only be exercised at expiry, and American options, which can be exercised at any time before the option expires.
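The difference between the two exercise styles can be seen numerically. The sketch below uses a Cox-Ross-Rubinstein binomial tree, a standard numerical scheme that is not otherwise discussed in this section, with a constant interest rate <math>r</math> and volatility <math>\sigma</math> assumed; at every node the American value is the larger of the continuation value and the immediate exercise value, while the European value is always the continuation value. Function names are illustrative.

```python
import math


def call_payoff(s, k):
    """Payoff of a call with strike k when the underlying is at s."""
    return max(s - k, 0.0)


def put_payoff(s, k):
    """Payoff of a put with strike k when the underlying is at s."""
    return max(k - s, 0.0)


def binomial_price(s0, k, r, sigma, T, n_steps, payoff, american=False):
    """Cox-Ross-Rubinstein binomial tree price of a vanilla option."""
    dt = T / n_steps
    up = math.exp(sigma * math.sqrt(dt))
    down = 1.0 / up
    disc = math.exp(-r * dt)
    q = (math.exp(r * dt) - down) / (up - down)  # risk-neutral up probability
    # Option values at expiry, indexed by the number of up moves j.
    values = [payoff(s0 * up ** j * down ** (n_steps - j), k) for j in range(n_steps + 1)]
    # Backward induction; values[j] is overwritten only after it has been read.
    for step in range(n_steps - 1, -1, -1):
        for j in range(step + 1):
            cont = disc * (q * values[j + 1] + (1 - q) * values[j])
            if american:
                # The holder may exercise at this node instead of continuing.
                cont = max(cont, payoff(s0 * up ** j * down ** (step - j), k))
            values[j] = cont
    return values[0]
```

For a non-dividend-paying stock the American and European call values coincide in this tree, whereas the American put is worth strictly more than the European put when <math>r>0</math>.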

'''The Black-Scholes Model.''' One of the most important mathematical models for option pricing is the Black-Scholes or Black-Scholes-Merton (BSM) model <ref name="BS1973">F. Black and M. Scholes, ''The pricing of options and corporate liabilities'', Journal of Political Economy, 81 (1973), pp. 637–654.</ref><ref name="merton1973">R. C. Merton, ''Theory of rational option pricing'', The Bell Journal of Economics and Management Science, (1973), pp. 141–183.</ref>, in which we aim to find the price of a European option <math>V(S_t,t)</math> (<math>0\leq t\leq T</math>) with underlying stock price <math>S_t</math>, expiration time <math>T</math>, and payoff at expiry <math>P(S_T)</math>. The underlying stock price <math>S_t</math> is assumed to be non-dividend paying and to follow a geometric Brownian motion

<math display="block">
dS_t=\mu S_t\,dt+\sigma S_t\,dW_t,
</math>

where <math>\mu</math> and <math>\sigma</math> are the drift and volatility of the underlying asset and <math>W_t</math> is a standard Brownian motion. With a constant risk-free interest rate <math>r</math> and no transaction costs, the option price <math>V(s,t)</math> satisfies the Black-Scholes equation

<math display="block">
\frac{\partial V}{\partial t}+\frac{1}{2}\sigma^2 s^2\frac{\partial^2 V}{\partial s^2}+rs\frac{\partial V}{\partial s}-rV=0,\qquad V(s,T)=P(s).
</math>

For a European call option with strike price <math>K</math>, i.e. <math>P(s)=\max(s-K,0)</math>, the solution is

<math display="block">
V(s,t)=s\,\Phi(d_1)-Ke^{-r(T-t)}\Phi(d_2),
</math>

where <math>\Phi</math> is the standard normal cumulative distribution function and

<math display="block">
d_1=\frac{1}{\sigma\sqrt{T-t}}\left(\ln\left(\frac{s}{K}\right)+\left(r+\frac{\sigma^2}{2}\right)(T-t)\right),\qquad d_2=d_1-\sigma\sqrt{T-t}.
</math>
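For a European call with strike <math>K</math>, the BSM price is <math>V(s,t)=s\,\Phi(d_1)-Ke^{-r(T-t)}\Phi(d_2)</math>, where <math>\Phi</math> is the standard normal cumulative distribution function and <math>d_1,d_2</math> are as defined above; the corresponding delta is <math>\partial V/\partial s=\Phi(d_1)</math>. A minimal standard-library sketch, with argument names of our own choosing and <code>tau</code> <math>=T-t>0</math> assumed:

```python
import math
from statistics import NormalDist

_N = NormalDist()  # standard normal distribution, used for its CDF


def bs_d1_d2(s, k, r, sigma, tau):
    """d_1 and d_2 of the Black-Scholes formula for time to expiry tau > 0."""
    d1 = (math.log(s / k) + (r + 0.5 * sigma ** 2) * tau) / (sigma * math.sqrt(tau))
    return d1, d1 - sigma * math.sqrt(tau)


def bs_call_price(s, k, r, sigma, tau):
    """Black-Scholes price of a European call on a non-dividend-paying stock."""
    d1, d2 = bs_d1_d2(s, k, r, sigma, tau)
    return s * _N.cdf(d1) - k * math.exp(-r * tau) * _N.cdf(d2)


def bs_call_delta(s, k, r, sigma, tau):
    """Sensitivity of the call price to the stock price, Phi(d_1)."""
    d1, _ = bs_d1_d2(s, k, r, sigma, tau)
    return _N.cdf(d1)
```

With <math>s=K=100</math>, <math>r=0.05</math>, <math>\sigma=0.2</math> and <math>T-t=1</math>, this gives a price of about 10.45 and a delta of about 0.637.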

We refer to the survey <ref name="broadie2004anniversary">M. Broadie and J. B. Detemple, ''Anniversary article: Option pricing: Valuation models and applications'', Management Science, 50 (2004), pp. 1145–1177.</ref> for details about extensions of the BSM model, other classical option pricing models, and numerical methods such as the Monte Carlo method.
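As an example of the Monte Carlo method, a European option can be priced as the discounted expectation of its payoff under the risk-neutral measure, where the drift <math>\mu</math> of the geometric Brownian motion is replaced by <math>r</math>. The sketch below assumes exact sampling of <math>S_T=S_0\exp((r-\sigma^2/2)T+\sigma\sqrt{T}Z)</math> with <math>Z</math> standard normal, prices a call, and also reports a standard error; the function name and defaults are illustrative.

```python
import math
import random


def mc_call_price(s0, k, r, sigma, T, n_paths=100_000, seed=0):
    """Monte Carlo price of a European call and the standard error of the estimate."""
    rng = random.Random(seed)
    drift = (r - 0.5 * sigma ** 2) * T
    vol = sigma * math.sqrt(T)
    total = 0.0
    total_sq = 0.0
    for _ in range(n_paths):
        # Exact sample of the terminal price under the risk-neutral measure.
        s_T = s0 * math.exp(drift + vol * rng.gauss(0.0, 1.0))
        payoff = max(s_T - k, 0.0)
        total += payoff
        total_sq += payoff ** 2
    mean = total / n_paths
    std_err = math.sqrt((total_sq / n_paths - mean ** 2) / n_paths)
    disc = math.exp(-r * T)  # discount the expected payoff back to time 0
    return disc * mean, disc * std_err
```

With <math>S_0=K=100</math>, <math>r=0.05</math>, <math>\sigma=0.2</math> and <math>T=1</math>, the estimate should lie within a few standard errors of the BSM value, approximately 10.45.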

In a complete market, one can ''hedge'' a given derivative contract by buying and selling the underlying asset in the right way to eliminate risk. In the Black-Scholes analysis, ''delta'' hedging is used: the risk of a long call option position is hedged by shorting <math>\Delta_t</math> units of the underlying asset, where <math>\Delta_t:=\frac{\partial V}{\partial S}(S_t,t)</math> is the sensitivity of the option price with respect to the asset price. It is also possible to use financial derivatives to ''hedge'' against the volatility of given positions in the underlying assets. In practice, however, portfolios can only be rebalanced at discrete time points, and frequent transactions may incur high costs. Therefore, an optimal hedging strategy depends on the trade-off between the hedging error and the transaction costs, in a similar spirit to the mean-variance portfolio optimization framework introduced in [[#sec:portfolio_optimization |Section]]. Much effort has gone into incorporating transaction costs using classical approaches such as dynamic programming; see, for example, <ref name="leland1985option">H. E. Leland, ''Option pricing and replication with transactions costs'', The Journal of Finance, 40 (1985), pp. 1283–1301.</ref><ref name="figlewski1989options">S. Figlewski, ''Options arbitrage in imperfect markets'', The Journal of Finance, 44 (1989), pp. 1289–1311.</ref><ref name="henrotte1993transaction">P. Henrotte, ''Transaction costs and duplication strategies'', Graduate School of Business, Stanford University, (1993).</ref>. We also refer to <ref name="giurca2021">A. Giurca and S. Borovkova, ''Delta hedging of derivatives using deep reinforcement learning'', Available at SSRN 3847272, (2021).</ref> for details about delta hedging under different model assumptions.
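The trade-off between hedging error and transaction costs can be explored with a small simulation. This is a sketch under stated assumptions: the holder of one European call delta hedges at <math>N</math> equally spaced dates using the BSM delta, the stock follows geometric Brownian motion with a real-world drift <code>mu</code>, and each trade pays a hypothetical proportional cost of <code>cost_rate</code> times the number of shares traded times the price. The function names and parameter values are illustrative.

```python
import math
import random
from statistics import NormalDist

_N = NormalDist()


def bs_call(s, k, r, sigma, tau):
    """Black-Scholes call price and delta for time to expiry tau > 0."""
    sq = sigma * math.sqrt(tau)
    d1 = (math.log(s / k) + (r + 0.5 * sigma ** 2) * tau) / sq
    price = s * _N.cdf(d1) - k * math.exp(-r * tau) * _N.cdf(d1 - sq)
    return price, _N.cdf(d1)


def hedge_pnl(s0, k, r, sigma, mu, T, n_rebalance, cost_rate, rng):
    """Terminal P&L of a long call that is delta hedged at n_rebalance dates."""
    dt = T / n_rebalance
    s = s0
    price, delta = bs_call(s, k, r, sigma, T)
    shares = -delta                          # short delta units of the underlying
    cash = -price - shares * s               # pay the premium, receive short-sale proceeds
    cash -= cost_rate * abs(shares) * s      # cost of the initial trade
    for i in range(1, n_rebalance + 1):
        cash *= math.exp(r * dt)             # interest on the cash account
        s *= math.exp((mu - 0.5 * sigma ** 2) * dt
                      + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0))
        if i < n_rebalance:                  # rebalance before expiry only
            _, delta = bs_call(s, k, r, sigma, T - i * dt)
            trade = -delta - shares
            cash -= trade * s + cost_rate * abs(trade) * s
            shares = -delta
    # At expiry: collect the payoff and buy back the short position.
    cash += max(s - k, 0.0) + shares * s - cost_rate * abs(shares) * s
    return cash


def hedge_error_stats(n_rebalance, cost_rate, n_paths=2000, seed=0, s0=100.0,
                      k=100.0, r=0.05, sigma=0.2, mu=0.1, T=1.0):
    """Sample mean and standard deviation of the hedged P&L over n_paths paths."""
    rng = random.Random(seed)
    pnls = [hedge_pnl(s0, k, r, sigma, mu, T, n_rebalance, cost_rate, rng)
            for _ in range(n_paths)]
    mean = sum(pnls) / n_paths
    std = math.sqrt(sum((p - mean) ** 2 for p in pnls) / (n_paths - 1))
    return mean, std
```

In runs of this kind, more frequent rebalancing typically shrinks the standard deviation of the terminal P&L while the cumulative transaction cost grows, which is the trade-off described above.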

However, the above discussion treats option pricing and hedging in a model-based way, since strong assumptions are made on the asset price dynamics and the form of transaction costs. In practice, some assumptions of the BSM model are unrealistic: 1) transaction costs due to commissions, market impact, and non-zero bid-ask spreads exist in real markets; 2) volatility is not constant; 3) short-term returns typically have heavy-tailed distributions <ref name="cont2001empirical">R. Cont, ''Empirical properties of asset returns: stylized facts and statistical issues'', Quantitative Finance, 1 (2001), p. 223.</ref><ref name="chakraborti2011econophysics">A. Chakraborti, I. M. Toke, M. Patriarca, and F. Abergel, ''Econophysics review: I. empirical facts'', Quantitative Finance, 11 (2011), pp. 991–1012.</ref>. Consequently, the resulting prices and hedges may suffer from model mis-specification when the real asset dynamics are not exactly as assumed and the transaction costs are difficult to model. We will therefore focus on model-free RL approaches that can address some of these issues.