guide:E3fb0c985a: Difference between revisions

No edit summary |

No edit summary |

||

| Line 1: | Line 1: | ||

<div class="d-none"><math> | |||

\newcommand{\LS}{\mathtt{line\_search}} | |||

\newcommand{\mI}{\mathrm{I}} | |||

\newcommand{\mB}{\mathrm{B}} | |||

\newcommand{\conv}{\mathrm{conv}} | |||

\newcommand{\inte}{\mathrm{int}} | |||

\newcommand{\tg}{\tilde{g}} | |||

\newcommand{\tx}{\tilde{x}} | |||

\newcommand{\ty}{\tilde{y}} | |||

\newcommand{\tz}{\tilde{z}} | |||

\newcommand{\id}{\mathrm{id}} | |||

\newcommand{\K}{\mathrm{KL}} | |||

\newcommand{\kl}{\mathrm{kl}} | |||

\newcommand{\bp}{\boldsymbol{p}} | |||

\newcommand{\tr}{\mathrm{Tr}} | |||

\newcommand{\E}{\mathbb{E}} | |||

\newcommand{\N}{\mathbb{N}} | |||

\newcommand{\R}{\mathbb{R}} | |||

\newcommand{\Q}{\mathbb{Q}} | |||

\newcommand{\KL}{\mathrm{KL}} | |||

\newcommand{\LG}{\overline{\log}(K)} | |||

\newcommand{\ocP}{\overline{\mathcal{P}}} | |||

\newcommand{\cZ}{\mathcal{Z}} | |||

\newcommand{\cA}{\mathcal{A}} | |||

\newcommand{\cR}{\mathcal{R}} | |||

\newcommand{\cB}{\mathcal{B}} | |||

\newcommand{\cN}{\mathcal{N}} | |||

\newcommand{\cM}{\mathcal{M}} | |||

\newcommand{\cF}{\mathcal{F}} | |||

\newcommand{\cL}{\mathcal{L}} | |||

\newcommand{\cX}{\mathcal{X}} | |||

\newcommand{\cI}{\mathcal{I}} | |||

\newcommand{\cJ}{\mathcal{J}} | |||

\newcommand{\cY}{\mathcal{Y}} | |||

\newcommand{\cH}{\mathcal{H}} | |||

\newcommand{\cP}{\mathcal{P}} | |||

\newcommand{\cT}{\mathcal{T}} | |||

\newcommand{\cC}{\mathcal{C}} | |||

\newcommand{\cS}{\mathcal{S}} | |||

\newcommand{\cE}{\mathcal{E}} | |||

\newcommand{\cK}{\mathcal{K}} | |||

\newcommand{\cD}{\mathcal{D}} | |||

\newcommand{\oD}{\overline{\mathcal{D}}} | |||

\newcommand{\oR}{\overline{R}} | |||

\newcommand{\wh}{\widehat} | |||

\newcommand{\argmax}{\mathop{\mathrm{argmax}}} | |||

\newcommand{\argmin}{\mathop{\mathrm{argmin}}} | |||

\newcommand{\todo}{{\bf TO DO } } | |||

\newcommand{\Ber}{\mathop{\mathrm{Ber}}} | |||

\newcommand{\beq}{\begin{equation}} | |||

\newcommand{\eeq}{\end{equation}} | |||

\newcommand{\beqa}{\begin{eqnarray}} | |||

\newcommand{\eeqa}{\end{eqnarray}} | |||

\newcommand{\beqan}{\begin{eqnarray*}} | |||

\newcommand{\eeqan}{\end{eqnarray*}} | |||

\newcommand{\qed}{\hfill\BlackBox} | |||

\newcommand{\charfct}{\ds1} % | |||

\newcommand{\Fcal}{\mathcal{F}} | |||

\newcommand{\Xcal}{\mathcal{X}} | |||

\newcommand{\Hcal}{\mathcal{H}} | |||

\newcommand{\Gcal}{\mathcal{G}} | |||

\newcommand{\Nat}{\mathbb{N}} | |||

\newcommand{\mathds}{\mathbb}</math></div> | |||

\label{mirror} | |||

In the previous chapter we showed that dimension-free oracle complexity is possible when the objective function <math>f</math> and the constraint set <math>\cX</math> are well-behaved in the Euclidean norm; e.g. if for all points <math>x \in \cX</math> and all subgradients <math>g \in \partial f(x)</math>, one has that <math>\|x\|_2</math> and <math>\|g\|_2</math> are independent of the ambient dimension <math>n</math>. If this assumption is not met then the gradient descent techniques of [[guide:49d0e37682#dimfree |Chapter]] may lose their dimension-free convergence rates. For instance consider a differentiable convex function <math>f</math> defined on the Euclidean ball <math>\mB_{2,n}</math> and such that <math>\|\nabla f(x)\|_{\infty} \leq 1, \forall x \in \mB_{2,n}</math>. This implies that <math>\|\nabla f(x)\|_{2} \leq \sqrt{n}</math>, and thus projected gradient descent will converge to the minimum of <math>f</math> on <math>\mB_{2,n}</math> at a rate <math>\sqrt{n / t}</math>. In this chapter we describe the method of <ref name="NY83"></ref>, known as mirror descent, which allows to find the minimum of such functions <math>f</math> over the <math>\ell_1</math>-ball (instead of the Euclidean ball) at the much faster rate <math>\sqrt{\log(n) / t}</math>. This is only one example of the potential of mirror descent. This chapter is devoted to the description of mirror descent and some of its alternatives. The presentation is inspired from <ref name="BT03"></ref>, [Chapter 11, <ref name="CL06"></ref>], <ref name="Rak09"></ref><ref name=" Haz11"></ref><ref name=" Bub11"></ref>. | |||

In order to describe the intuition behind the method let us abstract the situation for a moment and forget that we are doing optimization in finite dimension. We already observed that projected gradient descent works in an arbitrary Hilbert space <math>\mathcal{H}</math>. Suppose now that we are interested in the more general situation of optimization in some Banach space <math>\mathcal{B}</math>. In other words the norm that we use to measure the various quantity of interest does not derive from an inner product (think of <math>\mathcal{B} = \ell_1</math> for example). In that case the gradient descent strategy does not even make sense: indeed the gradients (more formally the Fr\'echet derivative) <math>\nabla f(x)</math> are elements of the dual space <math>\mathcal{B}^*</math> and thus one cannot perform the computation <math>x - \eta \nabla f(x)</math> (it simply does not make sense). We did not have this problem for optimization in a Hilbert space <math>\mathcal{H}</math> since by Riesz representation theorem <math>\mathcal{H}^*</math> is isometric to <math>\mathcal{H}</math>. The great insight of Nemirovski and Yudin is that one can still do a gradient descent by first mapping the point <math>x \in \mathcal{B}</math> into the dual space <math>\mathcal{B}^*</math>, then performing the gradient update in the dual space, and finally mapping back the resulting point to the primal space <math>\mathcal{B}</math>. Of course the new point in the primal space might lie outside of the constraint set <math>\mathcal{X} \subset \mathcal{B}</math> and thus we need a way to project back the point on the constraint set <math>\mathcal{X}</math>. Both the primal/dual mapping and the projection are based on the concept of a {\em mirror map} which is the key element of the scheme. Mirror maps are defined in Section [[#sec:mm |Mirror maps]], and the above scheme is formally described in Section [[#sec:MD |Mirror descent]]. | |||

In the rest of this chapter we fix an arbitrary norm <math>\|\cdot\|</math> on <math>\R^n</math>, and a compact convex set <math>\cX \subset \R^n</math>. The dual norm <math>\|\cdot\|_*</math> is defined as <math>\|g\|_* = \sup_{x \in \mathbb{R}^n : \|x\| \leq 1} g^{\top} x</math>. We say that a convex function <math>f : \cX \rightarrow \R</math> is (i) <math>L</math>-Lipschitz w.r.t. <math>\|\cdot\|</math> if <math>\forall x \in \cX, g \in \partial f(x), \|g\|_* \leq L</math>, (ii) <math>\beta</math>-smooth w.r.t. <math>\|\cdot\|</math> if <math>\|\nabla f(x) - \nabla f(y) \|_* \leq \beta \|x-y\|, \forall x, y \in \cX</math>, and (iii) <math>\alpha</math>-strongly convex w.r.t. <math>\|\cdot\|</math> if | |||

<math display="block"> | |||

f(x) - f(y) \leq g^{\top} (x - y) - \frac{\alpha}{2} \|x - y \|^2 , \forall x, y \in \cX, g \in \partial f(x). | |||

</math> | |||

We also define the Bregman divergence associated to <math>f</math> as | |||

<math display="block"> | |||

D_{f}(x,y) = f(x) - f(y) - \nabla f(y)^{\top} (x - y) . | |||

</math> | |||

The following identity will be useful several times: | |||

<span id{{=}}"eq:useful1"/> | |||

<math display="block"> | |||

\begin{equation} \label{eq:useful1} | |||

(\nabla f(x) - \nabla f(y))^{\top}(x-z) = D_{f}(x,y) + D_{f}(z,x) - D_{f}(z,y) . | |||

\end{equation} | |||

</math> | |||

==<span id="sec:mm"></span>Mirror maps== | |||

Let <math>\cD \subset \R^n</math> be a convex open set such that <math>\mathcal{X}</math> is included in its closure, that is <math>\mathcal{X} \subset \overline{\mathcal{D}}</math>, and <math>\mathcal{X} \cap \mathcal{D} \neq \emptyset</math>. We say that <math>\Phi : \cD \rightarrow \R</math> is a mirror map if it safisfies the following properties<ref group="Notes" >Assumption (ii) can be relaxed in some cases, see for example {{ref|name=ABL14}}.</ref>: | |||

<ul style{{=}}"list-style-type:lower-roman"><li> <math>\Phi</math> is strictly convex and differentiable. | |||

</li> | |||

<li> The gradient of <math>\Phi</math> takes all possible values, that is <math>\nabla \Phi(\cD) = \R^n</math>. | |||

</li> | |||

<li> The gradient of <math>\Phi</math> diverges on the boundary of <math>\cD</math>, that is | |||

<math display="block"> | |||

\lim_{x \rightarrow \partial \mathcal{D}} \|\nabla \Phi(x)\| = + \infty . | |||

</math> | |||

</li> | |||

</ul> | |||

In mirror descent the gradient of the mirror map <math>\Phi</math> is used to map points from the ‘`primal" to the ``dual" (note that all points lie in <math>\R^n</math> so the notions of primal and dual spaces only have an intuitive meaning). Precisely a point <math>x \in \mathcal{X} \cap \mathcal{D}</math> is mapped to <math>\nabla \Phi(x)</math>, from which one takes a gradient step to get to <math>\nabla \Phi(x) - \eta \nabla f(x)</math>. Property (ii) then allows us to write the resulting point as <math>\nabla \Phi(y) = \nabla \Phi(x) - \eta \nabla f(x)</math> for some <math>y \in \cD</math>. The primal point <math>y</math> may lie outside of the set of constraints <math>\cX</math>, in which case one has to project back onto <math>\cX</math>. In mirror descent this projection is done via the Bregman divergence associated to <math>\Phi</math>. Precisely one defines | |||

<math display="block"> | |||

\Pi_{\cX}^{\Phi} (y) = \argmin_{x \in \mathcal{X} \cap \mathcal{D}} D_{\Phi}(x,y) . | |||

</math> | |||

Property (i) and (iii) ensures the existence and uniqueness of this projection (in particular since <math>x \mapsto D_{\Phi}(x,y)</math> is locally increasing on the boundary of <math>\mathcal{D}</math>). The following lemma shows that the Bregman divergence essentially behaves as the Euclidean norm squared in terms of projections (recall [[guide:49d0e37682#lem:todonow |Lemma]]). | |||

{{proofcard|Lemma|lem:todonow2|Let <math>x \in \cX \cap \cD</math> and <math>y \in \cD</math>, then | |||

<math display="block"> | |||

(\nabla \Phi(\Pi_{\cX}^{\Phi}(y)) - \nabla \Phi(y))^{\top} (\Pi^{\Phi}_{\cX}(y) - x) \leq 0 , | |||

</math> | |||

which also implies | |||

<math display="block"> | |||

D_{\Phi}(x, \Pi^{\Phi}_{\cX}(y)) + D_{\Phi}(\Pi^{\Phi}_{\cX}(y), y) \leq D_{\Phi}(x,y) . | |||

</math> | |||

|The proof is an immediate corollary of [[guide:72a2ed5454#prop:firstorder |Proposition]] together with the fact that <math>\nabla_x D_{\Phi}(x,y) = \nabla \Phi(x) - \nabla \Phi(y)</math>.}} | |||

==<span id="sec:MD"></span>Mirror descent== | |||

We can now describe the mirror descent strategy based on a mirror map <math>\Phi</math>. Let <math>x_1 \in \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \Phi(x)</math>. Then for <math>t \geq 1</math>, let <math>y_{t+1} \in \mathcal{D}</math> such that | |||

<span id{{=}}"eq:MD1"/> | |||

<math display="block"> | |||

\begin{equation} \label{eq:MD1} | |||

\nabla \Phi(y_{t+1}) = \nabla \Phi(x_{t}) - \eta g_t, \ \text{where} \ g_t \in \partial f(x_t) , | |||

\end{equation} | |||

</math> | |||

and | |||

<span id{{=}}"eq:MD2"/> | |||

<math display="block"> | |||

\begin{equation} \label{eq:MD2} | |||

x_{t+1} \in \Pi_{\cX}^{\Phi} (y_{t+1}) . | |||

\end{equation} | |||

</math> | |||

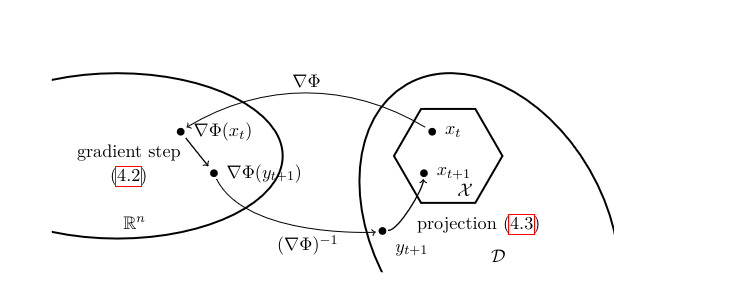

See [[#fig:MD|Figure]] for an illustration of this procedure. | |||

<div id="fig:MD" class="d-flex justify-content-center"> | |||

[[File:tikz34dcb3ef.png | 400px | thumb | Illustration of mirror descent. ]] | |||

</div> | |||

{{proofcard|Theorem|th:MD|Let <math>\Phi</math> be a mirror map <math>\rho</math>-strongly convex on <math>\mathcal{X} \cap \mathcal{D}</math> w.r.t. <math>\|\cdot\|</math>. | |||

Let <math>R^2 = \sup_{x \in \mathcal{X} \cap \mathcal{D}} \Phi(x) - \Phi(x_1)</math>, and <math>f</math> be convex and <math>L</math>-Lipschitz w.r.t. <math>\|\cdot\|</math>. Then mirror descent with <math>\eta = \frac{R}{L} \sqrt{\frac{2 \rho}{t}}</math> satisfies | |||

<math display="block"> | |||

f\bigg(\frac{1}{t} \sum_{s=1}^t x_s \bigg) - f(x^*) \leq RL \sqrt{\frac{2}{\rho t}} . | |||

</math> | |||

|Let <math>x \in \mathcal{X} \cap \mathcal{D}</math>. The claimed bound will be obtained by taking a limit <math>x \rightarrow x^*</math>. Now by convexity of <math>f</math>, the definition of mirror descent, equation \eqref{eq:useful1}, and [[#lem:todonow2 |Lemma]], one has | |||

<math display="block"> | |||

\begin{align*} | |||

& f(x_s) - f(x) \\ | |||

& \leq g_s^{\top} (x_s - x) \\ | |||

& = \frac{1}{\eta} (\nabla \Phi(x_s) - \nabla \Phi(y_{s+1}))^{\top} (x_s - x) \\ | |||

& = \frac{1}{\eta} \bigg( D_{\Phi}(x, x_s) + D_{\Phi}(x_s, y_{s+1}) - D_{\Phi}(x, y_{s+1}) \bigg) \\ | |||

& \leq \frac{1}{\eta} \bigg( D_{\Phi}(x, x_s) + D_{\Phi}(x_s, y_{s+1}) - D_{\Phi}(x, x_{s+1}) - D_{\Phi}(x_{s+1}, y_{s+1}) \bigg) . | |||

\end{align*} | |||

</math> | |||

The term <math>D_{\Phi}(x, x_s) - D_{\Phi}(x, x_{s+1})</math> will lead to a telescopic sum when summing over <math>s=1</math> to <math>s=t</math>, and it remains to bound the other term as follows using <math>\rho</math>-strong convexity of the mirror map and <math>a z - b z^2 \leq \frac{a^2}{4 b}, \forall z \in \R</math>: | |||

<math display="block"> | |||

\begin{align*} | |||

& D_{\Phi}(x_s, y_{s+1}) - D_{\Phi}(x_{s+1}, y_{s+1}) \\ | |||

& = \Phi(x_s) - \Phi(x_{s+1}) - \nabla \Phi(y_{s+1})^{\top} (x_{s} - x_{s+1}) \\ | |||

& \leq (\nabla \Phi(x_s) - \nabla \Phi(y_{s+1}))^{\top} (x_{s} - x_{s+1}) - \frac{\rho}{2} \|x_s - x_{s+1}\|^2 \\ | |||

& = \eta g_s^{\top} (x_{s} - x_{s+1}) - \frac{\rho}{2} \|x_s - x_{s+1}\|^2 \\ | |||

& \leq \eta L \|x_{s} - x_{s+1}\| - \frac{\rho}{2} \|x_s - x_{s+1}\|^2 \\ | |||

& \leq \frac{(\eta L)^2}{2 \rho}. | |||

\end{align*} | |||

</math> | |||

We proved | |||

<math display="block"> | |||

\sum_{s=1}^t \bigg(f(x_s) - f(x)\bigg) \leq \frac{D_{\Phi}(x,x_1)}{\eta} + \eta \frac{L^2 t}{2 \rho}, | |||

</math> | |||

which concludes the proof up to trivial computation.}} | |||

We observe that one can rewrite mirror descent as follows: | |||

<span id{{=}}"eq:MD3"/><span id{{=}}"eq:MDproxview"/> | |||

<math display="block"> | |||

\begin{eqnarray} | |||

x_{t+1} & = & \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \ D_{\Phi}(x,y_{t+1}) \notag \\ | |||

& = & \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \ \Phi(x) - \nabla \Phi(y_{t+1})^{\top} x \label{eq:MD3} \\ | |||

& = & \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \ \Phi(x) - (\nabla \Phi(x_{t}) - \eta g_t)^{\top} x \notag \\ | |||

& = & \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \ \eta g_t^{\top} x + D_{\Phi}(x,x_t) . \label{eq:MDproxview} | |||

\end{eqnarray} | |||

</math> | |||

This last expression is often taken as the definition of mirror descent (see <ref name="BT03"></ref>). It gives a proximal point of view on mirror descent: the method is trying to minimize the local linearization of the function while not moving too far away from the previous point, with distances measured via the Bregman divergence of the mirror map. | |||

==<span id="sec:mdsetups"></span>Standard setups for mirror descent== | |||

'''``Ball setup".''' The simplest version of mirror descent is obtained by taking <math>\Phi(x) = \frac{1}{2} \|x\|^2_2</math> on <math>\mathcal{D} = \mathbb{R}^n</math>. The function <math>\Phi</math> is a mirror map strongly convex w.r.t. <math>\|\cdot\|_2</math>, and furthermore the associated Bregman divergence is given by <math>D_{\Phi}(x,y) = \frac{1}{2} \|x - y\|^2_2</math>. Thus in that case mirror descent is exactly equivalent to projected subgradient descent, and the rate of convergence obtained in [[#th:MD |Theorem]] recovers our earlier result on projected subgradient descent. | |||

\newline | |||

'''``Simplex setup".''' A more interesting choice of a mirror map is given by the negative entropy | |||

<math display="block"> | |||

\Phi(x) = \sum_{i=1}^n x(i) \log x(i), | |||

</math> | |||

on <math>\mathcal{D} = \mathbb{R}_{++}^n</math>. In that case the gradient update <math>\nabla \Phi(y_{t+1}) = \nabla \Phi(x_t) - \eta \nabla f(x_t)</math> can be written equivalently as | |||

<math display="block"> | |||

y_{t+1}(i) = x_{t}(i) \exp\big(- \eta [\nabla f(x_t) ](i) \big) , \ i=1, \hdots, n. | |||

</math> | |||

The Bregman divergence of this mirror map is given by <math>D_{\Phi}(x,y) = \sum_{i=1}^n x(i) \log \frac{x(i)}{y(i)}</math> (also known as the Kullback-Leibler divergence). It is easy to verify that the projection with respect to this Bregman divergence on the simplex <math>\Delta_n = \{x \in \mathbb{R}_+^n : \sum_{i=1}^n x(i) = 1\}</math> amounts to a simple renormalization <math>y \mapsto y / \|y\|_1</math>. Furthermore it is also easy to verify that <math>\Phi</math> is <math>1</math>-strongly convex w.r.t. <math>\|\cdot\|_1</math> on <math>\Delta_n</math> (this result is known as Pinsker’s inequality). Note also that for <math>\mathcal{X} = \Delta_n</math> one has <math>x_1 = (1/n, \hdots, 1/n)</math> and <math>R^2 = \log n</math>. | |||

The above observations imply that when minimizing on the simplex <math>\Delta_n</math> a function <math>f</math> with subgradients bounded in <math>\ell_{\infty}</math>-norm, mirror descent with the negative entropy achieves a rate of convergence of order <math>\sqrt{\frac{\log n}{t}}</math>. On the other hand the regular subgradient descent achieves only a rate of order <math>\sqrt{\frac{n}{t}}</math> in this case! | |||

\newline | |||

'''‘`Spectrahedron setup".''' We consider here functions defined on matrices, and we are interested in minimizing a function <math>f</math> on the {\em spectrahedron} <math>\mathcal{S}_n</math> defined as: | |||

<math display="block"> | |||

\mathcal{S}_n = \left\{X \in \mathbb{S}_+^n : \mathrm{Tr}(X) = 1 \right\} . | |||

</math> | |||

In this setting we consider the mirror map on <math>\mathcal{D} = \mathbb{S}_{++}^n</math> given by the negative von Neumann entropy: | |||

<math display="block"> | |||

\Phi(X) = \sum_{i=1}^n \lambda_i(X) \log \lambda_i(X) , | |||

</math> | |||

where <math>\lambda_1(X), \hdots, \lambda_n(X)</math> are the eigenvalues of <math>X</math>. It can be shown that the gradient update <math>\nabla \Phi(Y_{t+1}) = \nabla \Phi(X_t) - \eta \nabla f(X_t)</math> can be written equivalently as | |||

<math display="block"> | |||

Y_{t+1} = \exp\big(\log X_t - \eta \nabla f(X_t) \big) , | |||

</math> | |||

where the matrix exponential and matrix logarithm are defined as usual. Furthermore the projection on <math>\mathcal{S}_n</math> is a simple trace renormalization. | |||

With highly non-trivial computation one can show that <math>\Phi</math> is <math>\frac{1}{2}</math>-strongly convex with respect to the Schatten <math>1</math>-norm defined as | |||

<math display="block"> | |||

\|X\|_1 = \sum_{i=1}^n \lambda_i(X). | |||

</math> | |||

It is easy to see that for <math>\mathcal{X} = \mathcal{S}_n</math> one has <math>x_1 = \frac{1}{n} \mI_n</math> and <math>R^2 = \log n</math>. In other words the rate of convergence for optimization on the spectrahedron is the same than on the simplex! | |||

==Lazy mirror descent, aka Nesterov’s dual averaging== | |||

In this section we consider a slightly more efficient version of mirror descent for which we can prove that [[#th:MD |Theorem]] still holds true. This alternative algorithm can be advantageous in some situations (such as distributed settings), but the basic mirror descent scheme remains important for extensions considered later in this text (saddle points, stochastic oracles, ...). | |||

In lazy mirror descent, also commonly known as Nesterov's dual averaging or simply dual averaging, one replaces \eqref{eq:MD1} by | |||

<math display="block"> | |||

\nabla \Phi(y_{t+1}) = \nabla \Phi(y_{t}) - \eta g_t , | |||

</math> | |||

and also <math>y_1</math> is such that <math>\nabla \Phi(y_1) = 0</math>. In other words instead of going back and forth between the primal and the dual, dual averaging simply averages the gradients in the dual, and if asked for a point in the primal it simply maps the current dual point to the primal using the same methodology as mirror descent. In particular using \eqref{eq:MD3} one immediately sees that dual averaging is defined by: | |||

<span id{{=}}"eq:DA0"/> | |||

<math display="block"> | |||

\begin{equation} \label{eq:DA0} | |||

x_t = \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \ \eta \sum_{s=1}^{t-1} g_s^{\top} x + \Phi(x) . | |||

\end{equation} | |||

</math> | |||

{{proofcard|Theorem|theorem-1|Let <math>\Phi</math> be a mirror map <math>\rho</math>-strongly convex on <math>\mathcal{X} \cap \mathcal{D}</math> w.r.t. <math>\|\cdot\|</math>. | |||

Let <math>R^2 = \sup_{x \in \mathcal{X} \cap \mathcal{D}} \Phi(x) - \Phi(x_1)</math>, and <math>f</math> be convex and <math>L</math>-Lipschitz w.r.t. <math>\|\cdot\|</math>. Then dual averaging with <math>\eta = \frac{R}{L} \sqrt{\frac{\rho}{2 t}}</math> satisfies | |||

<math display="block"> | |||

f\bigg(\frac{1}{t} \sum_{s=1}^t x_s \bigg) - f(x^*) \leq 2 RL \sqrt{\frac{2}{\rho t}} . | |||

</math> | |||

|We define <math>\psi_t(x) = \eta \sum_{s=1}^{t} g_s^{\top} x + \Phi(x)</math>, so that <math>x_t \in \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \psi_{t-1}(x)</math>. Since <math>\Phi</math> is <math>\rho</math>-strongly convex one clearly has that <math>\psi_t</math> is <math>\rho</math>-strongly convex, and thus | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

\psi_t(x_{t+1}) - \psi_t(x_t) & \leq & \nabla \psi_t(x_{t+1})^{\top}(x_{t+1} - x_{t}) - \frac{\rho}{2} \|x_{t+1} - x_t\|^2 \\ | |||

& \leq & - \frac{\rho}{2} \|x_{t+1} - x_t\|^2 , | |||

\end{eqnarray*} | |||

</math> | |||

where the second inequality comes from the first order optimality condition for <math>x_{t+1}</math> (see [[guide:72a2ed5454#prop:firstorder |Proposition]]). Next observe that | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

\psi_t(x_{t+1}) - \psi_t(x_t) & = & \psi_{t-1}(x_{t+1}) - \psi_{t-1}(x_t) + \eta g_t^{\top} (x_{t+1} - x_t) \\ | |||

& \geq & \eta g_t^{\top} (x_{t+1} - x_t) . | |||

\end{eqnarray*} | |||

</math> | |||

Putting together the two above displays and using Cauchy-Schwarz (with the assumption <math>\|g_t\|_* \leq L</math>) one obtains | |||

<math display="block"> | |||

\frac{\rho}{2} \|x_{t+1} - x_t\|^2 \leq \eta g_t^{\top} (x_t - x_{t+1}) \leq \eta L \|x_t - x_{t+1} \|. | |||

</math> | |||

In particular this shows that <math>\|x_{t+1} - x_t\| \leq \frac{2 \eta L}{\rho}</math> and thus with the above display | |||

<span id{{=}}"eq:DA1"/> | |||

<math display="block"> | |||

\begin{equation} \label{eq:DA1} | |||

g_t^{\top} (x_t - x_{t+1}) \leq \frac{2 \eta L^2}{\rho} . | |||

\end{equation} | |||

</math> | |||

Now we claim that for any <math>x \in \cX \cap \cD</math>, | |||

<span id{{=}}"eq:DA2"/> | |||

<math display="block"> | |||

\begin{equation} \label{eq:DA2} | |||

\sum_{s=1}^t g_s^{\top} (x_s - x) \leq \sum_{s=1}^t g_s^{\top} (x_s - x_{s+1}) + \frac{\Phi(x) - \Phi(x_1)}{\eta} , | |||

\end{equation} | |||

</math> | |||

which would clearly conclude the proof thanks to \eqref{eq:DA1} and straightforward computations. Equation \eqref{eq:DA2} is equivalent to | |||

<math display="block"> | |||

\sum_{s=1}^t g_s^{\top} x_{s+1} + \frac{\Phi(x_1)}{\eta} \leq \sum_{s=1}^t g_s^{\top} x + \frac{\Phi(x)}{\eta} , | |||

</math> | |||

and we now prove the latter equation by induction. At <math>t=0</math> it is true since <math>x_1 \in \argmin_{x \in \cX \cap \cD} \Phi(x)</math>. The following inequalities prove the inductive step, where we use the induction hypothesis at <math>x=x_{t+1}</math> for the first inequality, and the definition of <math>x_{t+1}</math> for the second inequality: | |||

<math display="block"> | |||

\sum_{s=1}^{t} g_s^{\top} x_{s+1} + \frac{\Phi(x_1)}{\eta} \leq g_{t}^{\top}x_{t+1} + \sum_{s=1}^{t-1} g_s^{\top} x_{t+1} + \frac{\Phi(x_{t+1})}{\eta} \leq \sum_{s=1}^{t} g_s^{\top} x + \frac{\Phi(x)}{\eta} . | |||

</math>}} | |||

==Mirror prox== | |||

It can be shown that mirror descent accelerates for smooth functions to the rate <math>1/t</math>. We will prove this result in [[guide:474e0143f8#rand |Chapter]] (see [[guide:474e0143f8#th:SMDsmooth |Theorem]]). We describe here a variant of mirror descent which also attains the rate <math>1/t</math> for smooth functions. This method is called mirror prox and it was introduced in <ref name="Nem04"></ref>. | |||

The true power of mirror prox will reveal itself later in the text when we deal with smooth representations of non-smooth functions as well as stochastic oracles<ref group="Notes" >Basically mirror prox allows for a smooth vector field point of view (see Section [[#sec:vectorfield |The vector field point of view on MD, DA, and MP]]), while mirror descent does not.</ref>. | |||

Mirror prox is described by the following equations: | |||

<math display="block"> | |||

\begin{align*} | |||

& \nabla \Phi(y_{t+1}') = \nabla \Phi(x_{t}) - \eta \nabla f(x_t), \\ \\ | |||

& y_{t+1} \in \argmin_{x \in \mathcal{X} \cap \mathcal{D}} D_{\Phi}(x,y_{t+1}') , \\ \\ | |||

& \nabla \Phi(x_{t+1}') = \nabla \Phi(x_{t}) - \eta \nabla f(y_{t+1}), \\ \\ | |||

& x_{t+1} \in \argmin_{x \in \mathcal{X} \cap \mathcal{D}} D_{\Phi}(x,x_{t+1}') . | |||

\end{align*} | |||

</math> | |||

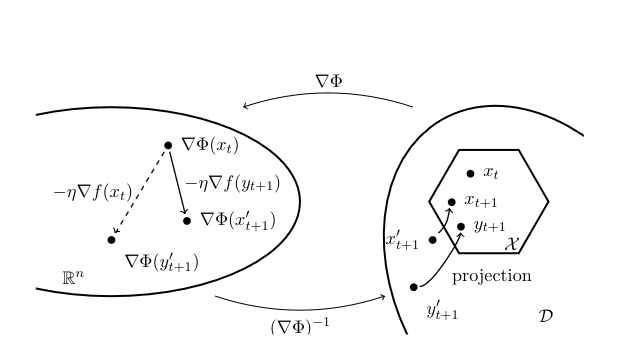

In words the algorithm first makes a step of mirror descent to go from <math>x_t</math> to <math>y_{t+1}</math>, and then it makes a similar step to obtain <math>x_{t+1}</math>, starting again from <math>x_t</math> but this time using the gradient of <math>f</math> evaluated at <math>y_{t+1}</math> (instead of <math>x_t</math>), see [[#fig:mp|Figure]] for an illustration. The following result justifies the procedure. | |||

<div id="fig:mp" class="d-flex justify-content-center"> | |||

[[File:tikz9b7ebc2e.png | 400px | thumb | Illustration of mirror prox. ]] | |||

</div> | |||

{{proofcard|Theorem|theorem-2|Let <math>\Phi</math> be a mirror map <math>\rho</math>-strongly convex on <math>\mathcal{X} \cap \mathcal{D}</math> w.r.t. <math>\|\cdot\|</math>. Let <math>R^2 = \sup_{x \in \mathcal{X} \cap \mathcal{D}} \Phi(x) - \Phi(x_1)</math>, and <math>f</math> be convex and <math>\beta</math>-smooth w.r.t. <math>\|\cdot\|</math>. Then mirror prox with <math>\eta = \frac{\rho}{\beta}</math> satisfies | |||

<math display="block"> | |||

f\bigg(\frac{1}{t} \sum_{s=1}^t y_{s+1} \bigg) - f(x^*) \leq \frac{\beta R^2}{\rho t} . | |||

</math> | |||

|Let <math>x \in \mathcal{X} \cap \mathcal{D}</math>. We write | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

f(y_{t+1}) - f(x) & \leq & \nabla f(y_{t+1})^{\top} (y_{t+1} - x) \\ | |||

& = & \nabla f(y_{t+1})^{\top} (x_{t+1} - x) + \nabla f(x_t)^{\top} (y_{t+1} - x_{t+1}) \\ | |||

& & + (\nabla f(y_{t+1}) - \nabla f(x_t))^{\top} (y_{t+1} - x_{t+1}) . | |||

\end{eqnarray*} | |||

</math> | |||

We will now bound separately these three terms. For the first one, using the definition of the method, [[#lem:todonow2 |Lemma]], and equation \eqref{eq:useful1}, one gets | |||

<math display="block"> | |||

\begin{align*} | |||

& \eta \nabla f(y_{t+1})^{\top} (x_{t+1} - x) \\ | |||

& = ( \nabla \Phi(x_t) - \nabla \Phi(x_{t+1}'))^{\top} (x_{t+1} - x) \\ | |||

& \leq ( \nabla \Phi(x_t) - \nabla \Phi(x_{t+1}))^{\top} (x_{t+1} - x) \\ | |||

& = D_{\Phi}(x,x_t) - D_{\Phi}(x, x_{t+1}) - D_{\Phi}(x_{t+1}, x_t) . | |||

\end{align*} | |||

</math> | |||

For the second term using the same properties than above and the strong-convexity of the mirror map one obtains | |||

<span id{{=}}"eq:pourplustard1"/> | |||

<math display="block"> | |||

\begin{align} | |||

& \eta \nabla f(x_t)^{\top} (y_{t+1} - x_{t+1}) \notag\\ | |||

& = ( \nabla \Phi(x_t) - \nabla \Phi(y_{t+1}'))^{\top} (y_{t+1} - x_{t+1}) \notag\\ | |||

& \leq ( \nabla \Phi(x_t) - \nabla \Phi(y_{t+1}))^{\top} (y_{t+1} - x_{t+1}) \notag\\ | |||

& = D_{\Phi}(x_{t+1},x_t) - D_{\Phi}(x_{t+1}, y_{t+1}) - D_{\Phi}(y_{t+1}, x_t) \label{eq:pourplustard1}\\ | |||

& \leq D_{\Phi}(x_{t+1},x_t) - \frac{\rho}{2} \|x_{t+1} - y_{t+1} \|^2 - \frac{\rho}{2} \|y_{t+1} - x_t\|^2 . \notag | |||

\end{align} | |||

</math> | |||

Finally for the last term, using Cauchy-Schwarz, <math>\beta</math>-smoothness, and <math>2 ab \leq a^2 + b^2</math> one gets | |||

<math display="block"> | |||

\begin{align*} | |||

& (\nabla f(y_{t+1}) - \nabla f(x_t))^{\top} (y_{t+1} - x_{t+1}) \\ | |||

& \leq \|\nabla f(y_{t+1}) - \nabla f(x_t)\|_* \cdot \|y_{t+1} - x_{t+1} \| \\ | |||

& \leq \beta \|y_{t+1} - x_t\| \cdot \|y_{t+1} - x_{t+1} \| \\ | |||

& \leq \frac{\beta}{2} \|y_{t+1} - x_t\|^2 + \frac{\beta}{2} \|y_{t+1} - x_{t+1} \|^2 . | |||

\end{align*} | |||

</math> | |||

Thus summing up these three terms and using that <math>\eta = \frac{\rho}{\beta}</math> one gets | |||

<math display="block"> | |||

f(y_{t+1}) - f(x) \leq \frac{D_{\Phi}(x,x_t) - D_{\Phi}(x,x_{t+1})}{\eta} . | |||

</math> | |||

The proof is concluded with straightforward computations.}} | |||

==<span id="sec:vectorfield"></span>The vector field point of view on MD, DA, and MP== | |||

In this section we consider a mirror map <math>\Phi</math> that satisfies the assumptions from [[#th:MD |Theorem]]. | |||

By inspecting the proof of [[#th:MD |Theorem]] one can see that for arbitrary vectors <math>g_1, \hdots, g_t \in \R^n</math> the mirror descent strategy described by \eqref{eq:MD1} or \eqref{eq:MD2} (or alternatively by \eqref{eq:MDproxview}) satisfies for any <math>x \in \cX \cap \cD</math>, | |||

<span id{{=}}"eq:vfMD"/> | |||

<math display="block"> | |||

\begin{equation} \label{eq:vfMD} | |||

\sum_{s=1}^t g_s^{\top} (x_s - x) \leq \frac{R^2}{\eta} + \frac{\eta}{2 \rho} \sum_{s=1}^t \|g_s\|_*^2 . | |||

\end{equation} | |||

</math> | |||

The observation that the sequence of vectors <math>(g_s)</math> does not have to come from the subgradients of a {\em fixed} function <math>f</math> is the starting point for the theory of online learning, see <ref name="Bub11"></ref> for more details. In this monograph we will use this observation to generalize mirror descent to saddle point calculations as well as stochastic settings. We note that we could also use dual averaging (defined by \eqref{eq:DA0}) which satisfies | |||

<math display="block"> | |||

\sum_{s=1}^t g_s^{\top} (x_s - x) \leq \frac{R^2}{\eta} + \frac{2 \eta}{\rho} \sum_{s=1}^t \|g_s\|_*^2 . | |||

</math> | |||

In order to generalize mirror prox we simply replace the gradient <math>\nabla f</math> by an arbitrary vector field <math>g: \cX \rightarrow \R^n</math> which yields the following equations: | |||

<math display="block"> | |||

\begin{align*} | |||

& \nabla \Phi(y_{t+1}') = \nabla \Phi(x_{t}) - \eta g(x_t), \\ | |||

& y_{t+1} \in \argmin_{x \in \mathcal{X} \cap \mathcal{D}} D_{\Phi}(x,y_{t+1}') , \\ | |||

& \nabla \Phi(x_{t+1}') = \nabla \Phi(x_{t}) - \eta g(y_{t+1}), \\ | |||

& x_{t+1} \in \argmin_{x \in \mathcal{X} \cap \mathcal{D}} D_{\Phi}(x,x_{t+1}') . | |||

\end{align*} | |||

</math> | |||

Under the assumption that the vector field is <math>\beta</math>-Lipschitz w.r.t. <math>\|\cdot\|</math>, i.e., <math>\|g(x) - g(y)\|_* \leq \beta \|x-y\|</math> one obtains with <math>\eta = \frac{\rho}{\beta}</math> | |||

<span id{{=}}"eq:vfMP"/> | |||

<math display="block"> | |||

\begin{equation} \label{eq:vfMP} | |||

\sum_{s=1}^t g(y_{s+1})^{\top}(y_{s+1} - x) \leq \frac{\beta R^2}{\rho}. | |||

\end{equation} | |||

</math> | |||

==General references== | |||

{{cite arXiv|last1=Bubeck|first1=Sébastien|year=2015|title=Convex Optimization: Algorithms and Complexity|eprint=1405.4980|class=math.OC}} | |||

==References== | |||

{{reflist}} | |||

==Notes== | |||

{{Reflist|group=Notes}} | |||

Revision as of 18:47, 3 June 2024

\label{mirror} In the previous chapter we showed that dimension-free oracle complexity is possible when the objective function [math]f[/math] and the constraint set [math]\cX[/math] are well-behaved in the Euclidean norm; e.g. if for all points [math]x \in \cX[/math] and all subgradients [math]g \in \partial f(x)[/math], one has that [math]\|x\|_2[/math] and [math]\|g\|_2[/math] are independent of the ambient dimension [math]n[/math]. If this assumption is not met then the gradient descent techniques of Chapter may lose their dimension-free convergence rates. For instance consider a differentiable convex function [math]f[/math] defined on the Euclidean ball [math]\mB_{2,n}[/math] and such that [math]\|\nabla f(x)\|_{\infty} \leq 1, \forall x \in \mB_{2,n}[/math]. This implies that [math]\|\nabla f(x)\|_{2} \leq \sqrt{n}[/math], and thus projected gradient descent will converge to the minimum of [math]f[/math] on [math]\mB_{2,n}[/math] at a rate [math]\sqrt{n / t}[/math]. In this chapter we describe the method of [1], known as mirror descent, which allows to find the minimum of such functions [math]f[/math] over the [math]\ell_1[/math]-ball (instead of the Euclidean ball) at the much faster rate [math]\sqrt{\log(n) / t}[/math]. This is only one example of the potential of mirror descent. This chapter is devoted to the description of mirror descent and some of its alternatives. The presentation is inspired from [2], [Chapter 11, [3]], [4][5][6].

In order to describe the intuition behind the method let us abstract the situation for a moment and forget that we are doing optimization in finite dimension. We already observed that projected gradient descent works in an arbitrary Hilbert space [math]\mathcal{H}[/math]. Suppose now that we are interested in the more general situation of optimization in some Banach space [math]\mathcal{B}[/math]. In other words the norm that we use to measure the various quantity of interest does not derive from an inner product (think of [math]\mathcal{B} = \ell_1[/math] for example). In that case the gradient descent strategy does not even make sense: indeed the gradients (more formally the Fr\'echet derivative) [math]\nabla f(x)[/math] are elements of the dual space [math]\mathcal{B}^*[/math] and thus one cannot perform the computation [math]x - \eta \nabla f(x)[/math] (it simply does not make sense). We did not have this problem for optimization in a Hilbert space [math]\mathcal{H}[/math] since by Riesz representation theorem [math]\mathcal{H}^*[/math] is isometric to [math]\mathcal{H}[/math]. The great insight of Nemirovski and Yudin is that one can still do a gradient descent by first mapping the point [math]x \in \mathcal{B}[/math] into the dual space [math]\mathcal{B}^*[/math], then performing the gradient update in the dual space, and finally mapping back the resulting point to the primal space [math]\mathcal{B}[/math]. Of course the new point in the primal space might lie outside of the constraint set [math]\mathcal{X} \subset \mathcal{B}[/math] and thus we need a way to project back the point on the constraint set [math]\mathcal{X}[/math]. Both the primal/dual mapping and the projection are based on the concept of a {\em mirror map} which is the key element of the scheme. Mirror maps are defined in Section Mirror maps, and the above scheme is formally described in Section Mirror descent. In the rest of this chapter we fix an arbitrary norm [math]\|\cdot\|[/math] on [math]\R^n[/math], and a compact convex set [math]\cX \subset \R^n[/math]. The dual norm [math]\|\cdot\|_*[/math] is defined as [math]\|g\|_* = \sup_{x \in \mathbb{R}^n : \|x\| \leq 1} g^{\top} x[/math]. We say that a convex function [math]f : \cX \rightarrow \R[/math] is (i) [math]L[/math]-Lipschitz w.r.t. [math]\|\cdot\|[/math] if [math]\forall x \in \cX, g \in \partial f(x), \|g\|_* \leq L[/math], (ii) [math]\beta[/math]-smooth w.r.t. [math]\|\cdot\|[/math] if [math]\|\nabla f(x) - \nabla f(y) \|_* \leq \beta \|x-y\|, \forall x, y \in \cX[/math], and (iii) [math]\alpha[/math]-strongly convex w.r.t. [math]\|\cdot\|[/math] if

We also define the Bregman divergence associated to [math]f[/math] as

The following identity will be useful several times:

Mirror maps

Let [math]\cD \subset \R^n[/math] be a convex open set such that [math]\mathcal{X}[/math] is included in its closure, that is [math]\mathcal{X} \subset \overline{\mathcal{D}}[/math], and [math]\mathcal{X} \cap \mathcal{D} \neq \emptyset[/math]. We say that [math]\Phi : \cD \rightarrow \R[/math] is a mirror map if it safisfies the following properties[Notes 1]:

- [math]\Phi[/math] is strictly convex and differentiable.

- The gradient of [math]\Phi[/math] takes all possible values, that is [math]\nabla \Phi(\cD) = \R^n[/math].

- The gradient of [math]\Phi[/math] diverges on the boundary of [math]\cD[/math], that is

[[math]] \lim_{x \rightarrow \partial \mathcal{D}} \|\nabla \Phi(x)\| = + \infty . [[/math]]

In mirror descent the gradient of the mirror map [math]\Phi[/math] is used to map points from the ‘`primal" to the ``dual" (note that all points lie in [math]\R^n[/math] so the notions of primal and dual spaces only have an intuitive meaning). Precisely a point [math]x \in \mathcal{X} \cap \mathcal{D}[/math] is mapped to [math]\nabla \Phi(x)[/math], from which one takes a gradient step to get to [math]\nabla \Phi(x) - \eta \nabla f(x)[/math]. Property (ii) then allows us to write the resulting point as [math]\nabla \Phi(y) = \nabla \Phi(x) - \eta \nabla f(x)[/math] for some [math]y \in \cD[/math]. The primal point [math]y[/math] may lie outside of the set of constraints [math]\cX[/math], in which case one has to project back onto [math]\cX[/math]. In mirror descent this projection is done via the Bregman divergence associated to [math]\Phi[/math]. Precisely one defines

Property (i) and (iii) ensures the existence and uniqueness of this projection (in particular since [math]x \mapsto D_{\Phi}(x,y)[/math] is locally increasing on the boundary of [math]\mathcal{D}[/math]). The following lemma shows that the Bregman divergence essentially behaves as the Euclidean norm squared in terms of projections (recall Lemma).

Let [math]x \in \cX \cap \cD[/math] and [math]y \in \cD[/math], then

The proof is an immediate corollary of Proposition together with the fact that [math]\nabla_x D_{\Phi}(x,y) = \nabla \Phi(x) - \nabla \Phi(y)[/math].

Mirror descent

We can now describe the mirror descent strategy based on a mirror map [math]\Phi[/math]. Let [math]x_1 \in \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \Phi(x)[/math]. Then for [math]t \geq 1[/math], let [math]y_{t+1} \in \mathcal{D}[/math] such that

and

See Figure for an illustration of this procedure.

Let [math]\Phi[/math] be a mirror map [math]\rho[/math]-strongly convex on [math]\mathcal{X} \cap \mathcal{D}[/math] w.r.t. [math]\|\cdot\|[/math]. Let [math]R^2 = \sup_{x \in \mathcal{X} \cap \mathcal{D}} \Phi(x) - \Phi(x_1)[/math], and [math]f[/math] be convex and [math]L[/math]-Lipschitz w.r.t. [math]\|\cdot\|[/math]. Then mirror descent with [math]\eta = \frac{R}{L} \sqrt{\frac{2 \rho}{t}}[/math] satisfies

Let [math]x \in \mathcal{X} \cap \mathcal{D}[/math]. The claimed bound will be obtained by taking a limit [math]x \rightarrow x^*[/math]. Now by convexity of [math]f[/math], the definition of mirror descent, equation \eqref{eq:useful1}, and Lemma, one has

We observe that one can rewrite mirror descent as follows:

This last expression is often taken as the definition of mirror descent (see [2]). It gives a proximal point of view on mirror descent: the method is trying to minimize the local linearization of the function while not moving too far away from the previous point, with distances measured via the Bregman divergence of the mirror map.

Standard setups for mirror descent

``Ball setup". The simplest version of mirror descent is obtained by taking [math]\Phi(x) = \frac{1}{2} \|x\|^2_2[/math] on [math]\mathcal{D} = \mathbb{R}^n[/math]. The function [math]\Phi[/math] is a mirror map strongly convex w.r.t. [math]\|\cdot\|_2[/math], and furthermore the associated Bregman divergence is given by [math]D_{\Phi}(x,y) = \frac{1}{2} \|x - y\|^2_2[/math]. Thus in that case mirror descent is exactly equivalent to projected subgradient descent, and the rate of convergence obtained in Theorem recovers our earlier result on projected subgradient descent. \newline

``Simplex setup". A more interesting choice of a mirror map is given by the negative entropy

on [math]\mathcal{D} = \mathbb{R}_{++}^n[/math]. In that case the gradient update [math]\nabla \Phi(y_{t+1}) = \nabla \Phi(x_t) - \eta \nabla f(x_t)[/math] can be written equivalently as

The Bregman divergence of this mirror map is given by [math]D_{\Phi}(x,y) = \sum_{i=1}^n x(i) \log \frac{x(i)}{y(i)}[/math] (also known as the Kullback-Leibler divergence). It is easy to verify that the projection with respect to this Bregman divergence on the simplex [math]\Delta_n = \{x \in \mathbb{R}_+^n : \sum_{i=1}^n x(i) = 1\}[/math] amounts to a simple renormalization [math]y \mapsto y / \|y\|_1[/math]. Furthermore it is also easy to verify that [math]\Phi[/math] is [math]1[/math]-strongly convex w.r.t. [math]\|\cdot\|_1[/math] on [math]\Delta_n[/math] (this result is known as Pinsker’s inequality). Note also that for [math]\mathcal{X} = \Delta_n[/math] one has [math]x_1 = (1/n, \hdots, 1/n)[/math] and [math]R^2 = \log n[/math]. The above observations imply that when minimizing on the simplex [math]\Delta_n[/math] a function [math]f[/math] with subgradients bounded in [math]\ell_{\infty}[/math]-norm, mirror descent with the negative entropy achieves a rate of convergence of order [math]\sqrt{\frac{\log n}{t}}[/math]. On the other hand the regular subgradient descent achieves only a rate of order [math]\sqrt{\frac{n}{t}}[/math] in this case! \newline

‘`Spectrahedron setup". We consider here functions defined on matrices, and we are interested in minimizing a function [math]f[/math] on the {\em spectrahedron} [math]\mathcal{S}_n[/math] defined as:

In this setting we consider the mirror map on [math]\mathcal{D} = \mathbb{S}_{++}^n[/math] given by the negative von Neumann entropy:

where [math]\lambda_1(X), \hdots, \lambda_n(X)[/math] are the eigenvalues of [math]X[/math]. It can be shown that the gradient update [math]\nabla \Phi(Y_{t+1}) = \nabla \Phi(X_t) - \eta \nabla f(X_t)[/math] can be written equivalently as

where the matrix exponential and matrix logarithm are defined as usual. Furthermore the projection on [math]\mathcal{S}_n[/math] is a simple trace renormalization. With highly non-trivial computation one can show that [math]\Phi[/math] is [math]\frac{1}{2}[/math]-strongly convex with respect to the Schatten [math]1[/math]-norm defined as

It is easy to see that for [math]\mathcal{X} = \mathcal{S}_n[/math] one has [math]x_1 = \frac{1}{n} \mI_n[/math] and [math]R^2 = \log n[/math]. In other words the rate of convergence for optimization on the spectrahedron is the same than on the simplex!

Lazy mirror descent, aka Nesterov’s dual averaging

In this section we consider a slightly more efficient version of mirror descent for which we can prove that Theorem still holds true. This alternative algorithm can be advantageous in some situations (such as distributed settings), but the basic mirror descent scheme remains important for extensions considered later in this text (saddle points, stochastic oracles, ...). In lazy mirror descent, also commonly known as Nesterov's dual averaging or simply dual averaging, one replaces \eqref{eq:MD1} by

and also [math]y_1[/math] is such that [math]\nabla \Phi(y_1) = 0[/math]. In other words instead of going back and forth between the primal and the dual, dual averaging simply averages the gradients in the dual, and if asked for a point in the primal it simply maps the current dual point to the primal using the same methodology as mirror descent. In particular using \eqref{eq:MD3} one immediately sees that dual averaging is defined by:

Let [math]\Phi[/math] be a mirror map [math]\rho[/math]-strongly convex on [math]\mathcal{X} \cap \mathcal{D}[/math] w.r.t. [math]\|\cdot\|[/math]. Let [math]R^2 = \sup_{x \in \mathcal{X} \cap \mathcal{D}} \Phi(x) - \Phi(x_1)[/math], and [math]f[/math] be convex and [math]L[/math]-Lipschitz w.r.t. [math]\|\cdot\|[/math]. Then dual averaging with [math]\eta = \frac{R}{L} \sqrt{\frac{\rho}{2 t}}[/math] satisfies

We define [math]\psi_t(x) = \eta \sum_{s=1}^{t} g_s^{\top} x + \Phi(x)[/math], so that [math]x_t \in \argmin_{x \in \mathcal{X} \cap \mathcal{D}} \psi_{t-1}(x)[/math]. Since [math]\Phi[/math] is [math]\rho[/math]-strongly convex one clearly has that [math]\psi_t[/math] is [math]\rho[/math]-strongly convex, and thus

Mirror prox

It can be shown that mirror descent accelerates for smooth functions to the rate [math]1/t[/math]. We will prove this result in Chapter (see Theorem). We describe here a variant of mirror descent which also attains the rate [math]1/t[/math] for smooth functions. This method is called mirror prox and it was introduced in [7]. The true power of mirror prox will reveal itself later in the text when we deal with smooth representations of non-smooth functions as well as stochastic oracles[Notes 2]. Mirror prox is described by the following equations:

In words the algorithm first makes a step of mirror descent to go from [math]x_t[/math] to [math]y_{t+1}[/math], and then it makes a similar step to obtain [math]x_{t+1}[/math], starting again from [math]x_t[/math] but this time using the gradient of [math]f[/math] evaluated at [math]y_{t+1}[/math] (instead of [math]x_t[/math]), see Figure for an illustration. The following result justifies the procedure.

Let [math]\Phi[/math] be a mirror map [math]\rho[/math]-strongly convex on [math]\mathcal{X} \cap \mathcal{D}[/math] w.r.t. [math]\|\cdot\|[/math]. Let [math]R^2 = \sup_{x \in \mathcal{X} \cap \mathcal{D}} \Phi(x) - \Phi(x_1)[/math], and [math]f[/math] be convex and [math]\beta[/math]-smooth w.r.t. [math]\|\cdot\|[/math]. Then mirror prox with [math]\eta = \frac{\rho}{\beta}[/math] satisfies

Let [math]x \in \mathcal{X} \cap \mathcal{D}[/math]. We write

The vector field point of view on MD, DA, and MP

In this section we consider a mirror map [math]\Phi[/math] that satisfies the assumptions from Theorem. By inspecting the proof of Theorem one can see that for arbitrary vectors [math]g_1, \hdots, g_t \in \R^n[/math] the mirror descent strategy described by \eqref{eq:MD1} or \eqref{eq:MD2} (or alternatively by \eqref{eq:MDproxview}) satisfies for any [math]x \in \cX \cap \cD[/math],

The observation that the sequence of vectors [math](g_s)[/math] does not have to come from the subgradients of a {\em fixed} function [math]f[/math] is the starting point for the theory of online learning, see [6] for more details. In this monograph we will use this observation to generalize mirror descent to saddle point calculations as well as stochastic settings. We note that we could also use dual averaging (defined by \eqref{eq:DA0}) which satisfies

In order to generalize mirror prox we simply replace the gradient [math]\nabla f[/math] by an arbitrary vector field [math]g: \cX \rightarrow \R^n[/math] which yields the following equations:

Under the assumption that the vector field is [math]\beta[/math]-Lipschitz w.r.t. [math]\|\cdot\|[/math], i.e., [math]\|g(x) - g(y)\|_* \leq \beta \|x-y\|[/math] one obtains with [math]\eta = \frac{\rho}{\beta}[/math]

General references

Bubeck, Sébastien (2015). "Convex Optimization: Algorithms and Complexity". arXiv:1405.4980 [math.OC].

References

- Cite error: Invalid

<ref>tag; no text was provided for refs namedNY83 - 2.0 2.1 Cite error: Invalid

<ref>tag; no text was provided for refs namedBT03 - Cite error: Invalid

<ref>tag; no text was provided for refs namedCL06 - Cite error: Invalid

<ref>tag; no text was provided for refs namedRak09 - Cite error: Invalid

<ref>tag; no text was provided for refs namedHaz11 - 6.0 6.1 Cite error: Invalid

<ref>tag; no text was provided for refs namedBub11 - Cite error: Invalid

<ref>tag; no text was provided for refs namedNem04

Notes

- Assumption (ii) can be relaxed in some cases, see for example [1].

- Basically mirror prox allows for a smooth vector field point of view (see Section The vector field point of view on MD, DA, and MP), while mirror descent does not.