guide:6d878fef61: Difference between revisions


<div class="d-none"><math> | |||

\newcommand{\indicator}[1]{\mathbbm{1}_{\left[ {#1} \right] }} | |||

\newcommand{\Real}{\hbox{Re}} | |||

\newcommand{\HRule}{\rule{\linewidth}{0.5mm}} | |||

\newcommand{\mathds}{\mathbb}</math></div> | |||


Continuous-time financial models often use Brownian motion to model the trajectory of asset prices. One can typically open the finance section of a newspaper and see a time series plot of an asset's price history, and the daily movements of the asset may well resemble a random walk or a path taken by a Brownian motion. Such a connection between asset prices and Brownian motion was central to the formulas of <ref name="blackScholes1973">Black, F. and Scholes, M. (1973). The pricing of options and corporate liabilities. ''Journal of Political Economy'', 81(3):637--54.</ref> and <ref name="merton1973">Merton, R. (1973). On the pricing of corporate debt: the risk structure of interest rates. Technical report.</ref>, and has since led to a variety of models for pricing and hedging. The study of Brownian motion can be intense, but the main ideas are a simple definition and the application of Itô's lemma. For further reading, see <ref name="bjork">Björk, T. (2000). ''Arbitrage Theory in Continuous Time''. Oxford University Press, 2nd edition.</ref><ref name="oksendal">Øksendal, B. (2003). ''Stochastic differential equations: an introduction with applications''. Springer.</ref>.

==Definition and Properties of Brownian Motion== | |||

On the time interval <math>[0,T]</math>, Brownian motion is a continuous stochastic process <math>(W_t)_{t\leq T}</math> such that | |||

<ul><li> <math>W_0 = 0</math>, | |||

</li> | |||

<li> Independent Increments: for <math>0\leq s' < t'\leq s < t\leq T</math>, <math>W_t-W_s</math> is independent of <math>W_{t'}-W_{s'}</math>, | |||

</li> | |||

<li> Conditionally Gaussian: <math>W_t-W_s\sim\mathcal N(0,t-s)</math>, i.e. is normal with mean zero and variance <math>t-s</math>. | |||

</li> | |||

</ul> | |||

There is a vast study of Brownian motion. We will instead use Brownian motion rather simply; the only other fact that is somewhat important is that Brownian motion is nowhere differentiable, that is | |||

<math display="block">\mathbb P\left(\frac{d}{dt}W_t\hbox{ is undefined for almost-everywhere }t\in[0,T]\right) = 1\ ,</math> | |||

although sometimes people write <math>\dot W_t</math> to denote a white noise process. It should also be pointed out that <math>W_t</math> is a martingale, | |||

<math display="block">\mathbb E[W_t|(W_\tau)_{\tau\leq s}] = W_s\qquad\forall s\leq t\ .</math> | |||

<div id="fig:brownianTraj" class="d-flex justify-content-center"> | |||

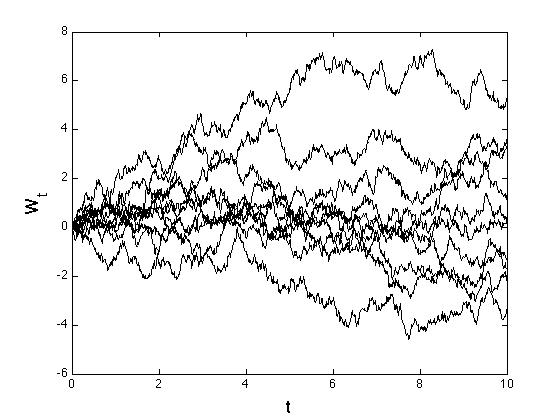

[[File:guide_2ee3d_BrownianTrajectories.jpg | 400px | thumb | A sample of 10 independent Brownian motions. ]] | |||

</div> | |||

'''Simulation.''' There are any number of ways to simulate Brownian motion, but to understand why Brownian motion can be treated like a ‘random walk’, consider the process, | |||

<math display="block">W_{t_n}^N = W_{t_{n-1}}^N+\Bigg\{\begin{array}{cc} | |||

\sqrt{T/N}&\hbox{with probability }1/2\\ | |||

- \sqrt{T/N}&\hbox{with probability }1/2 | |||

\end{array}</math> | |||

with <math>W_{t_0}^N = 0</math> and <math>t_n = n\frac TN</math> for <math>n=0,1,2,\dots,N</math>. By construction <math>W_{t_n}^N</math> has independent increments, and its increments have the same conditional mean and variance as those of Brownian motion, but they are not conditionally Gaussian. Nevertheless, as <math>N\rightarrow \infty</math> the probability law of <math>W_{t_n}^N</math> converges to the probability law of Brownian motion, so this simple random walk is a reasonable way to simulate Brownian motion if you take <math>N</math> large. In practice one usually has a random number generator that can produce Gaussian increments directly, and so it is probably better to simulate Brownian motion that way. A sample of 10 independent simulated Brownian motions is shown in [[#fig:brownianTraj|Figure]].
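The two simulation approaches described above can be compared with a short sketch. This is only an illustration under stated assumptions: the function names <code>random_walk_path</code>, <code>gaussian_path</code> and <code>sample_variance</code>, the seed, and the values of <math>T</math>, <math>N</math> and the number of paths are all choices made for this example and do not come from the text.

```python
import math
import random

def random_walk_path(T, N, rng):
    """Coin-flip walk: each step is +sqrt(T/N) or -sqrt(T/N) with probability 1/2."""
    step = math.sqrt(T / N)
    path = [0.0]
    for _ in range(N):
        path.append(path[-1] + (step if rng.random() < 0.5 else -step))
    return path

def gaussian_path(T, N, rng):
    """Grid simulation with independent N(0, T/N) increments."""
    sd = math.sqrt(T / N)
    path = [0.0]
    for _ in range(N):
        path.append(path[-1] + rng.gauss(0.0, sd))
    return path

def sample_variance(values):
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / (len(values) - 1)

rng = random.Random(0)          # fixed seed so the run is reproducible
T, N, n_paths = 1.0, 200, 2000  # illustrative values only
walk_end = [random_walk_path(T, N, rng)[-1] for _ in range(n_paths)]
gauss_end = [gaussian_path(T, N, rng)[-1] for _ in range(n_paths)]

# Both terminal values should have variance close to T.
print(sample_variance(walk_end), sample_variance(gauss_end))
```

Both estimators target the same variance <math>T</math>; the difference between the two constructions is in the distribution of the individual increments, not in their first two moments.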

==The Itô Integral==

Introductory calculus courses teach differentiation first and integration second, which makes sense because differentiation is generally a methodical procedure (e.g., you use the chain rule) whereas finding an anti-derivative requires all kinds of change-of-variables, trig-substitutions, and guess-work. The It\^o calculus is derived and taught in the reverse order: first we need to understand the definition of an It\^o (stochastic) integral, and then we can understand what it means for a stochastic process to have a differential. | |||

The construction of the Itô integral begins with a Riemann sum in which the integrand is evaluated at the left endpoint of each subinterval. For some function <math>f:[0,T]\rightarrow \mathbb R</math> (possibly random), non-anticipative of <math>W</math>, the Itô integral is defined as

<span id{{=}}"eq:itoInt"/> | |||

<math display="block"> | |||

\begin{equation} | |||

\label{eq:itoInt} | |||

\int_0^Tf(t)dW_t =\lim_{N\rightarrow \infty}\sum_{n=0}^{N-1} f(t_n)(W_{t_{n+1}}-W_{t_n})\ ,

\end{equation} | |||

</math> | |||

where <math>t_n = n\frac TN</math>, with the limit holding in the strong sense. If <math>\mathbb E\int_0^Tf^2(t)dt < \infty</math>, then through an application of Fubini's theorem, it can be shown that equation \eqref{eq:itoInt} is a '''martingale,''' | |||

<math display="block">\mathbb E\left[\int_0^Tf(t)dW_t \Big|(W_\tau)_{\tau\leq s}\right] = \int_0^sf(t)dW_t\qquad\forall s\leq T\ .</math> | |||

Another important property of the stochastic integral is the It\^o Isometry, | |||

{{proofcard|Proposition|proposition-1|'''(It\^o Isometry).''' For any functions <math>f,g</math> (possibly random), non-anticipative of <math>W</math>, with <math>\mathbb E\int_0^Tf^2(t)dt < \infty</math> and <math>\mathbb E\int_0^Tg^2(t)dt < \infty</math>, then | |||

<math display="block">\mathbb E\left(\int_0^Tf(t)dW_t\right)\left(\int_0^Tg(t)dW_t\right) =\mathbb E\int_0^Tf(t)g(t)dt\ .</math>|}} | |||

Some facts about the It\^o integral: | |||

<ul style{{=}}"list-style-type:lower-roman"><li>One can look at Equation \eqref{eq:itoInt} and think about the stochastic integral as a sum of independent normal random variables with mean zero and variance <math>T/N</math>, | |||

<math display="block">\sum_{n=0}^{N-1} f(t_n)(W_{t_{n+1}}-W_{t_n})\sim \mathcal N\left( 0 ~,\frac TN\sum f^2(t_n)\right)\ .</math> | |||

Therefore, one might suspect that <math>\int_0^Tf(t)dW_t</math> is normal distributed.</li> | |||

<li>In fact, the Itô integral is normally distributed when <math>f</math> is a non-stochastic function, and its variance is given by the Itô isometry,

<math display="block">\int_0^Tf(t)dW_t\sim \mathcal N\left(0~,\int_0^Tf^2(t)dt\right)\ .</math></li> | |||

<li>The It\^o integral is also defined for functions of another random variable. For instance, <math>f:\mathbb R\rightarrow \mathbb R</math> and another random variable <math>X_t</math>, the It\^o integral is, | |||

<math display="block">\int_0^Tf(X_t)dW_t =\lim_{N\rightarrow\infty}\sum_{n=0}^{N-1}f(X_{t_n})(W_{t_{n+1}}-W_{t_n})\ .</math> | |||

The It\^o isometry for this integral is, | |||

<math display="block">\mathbb E\left(\int_0^Tf(X_t)dW_t\right)^2 = \int_0^T\mathbb Ef^2(X_t)dt\ ,</math> | |||

provided that <math>X</math> is non-anticipative of <math>W</math>.</li> | |||

</ul> | |||
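The facts above can be checked numerically for a deterministic integrand. The sketch below is a minimal illustration, assuming <math>f(t)=t</math> on <math>[0,1]</math> (a choice made only for this example), for which the Itô isometry gives variance <math>\int_0^1t^2dt = 1/3</math>. The helper name <code>ito_sum</code>, the seed and the sample sizes are likewise assumptions.

```python
import math
import random

def ito_sum(f, T, N, rng):
    """Left-endpoint sum  sum_n f(t_n) (W_{t_{n+1}} - W_{t_n})  for one simulated path."""
    dt = T / N
    sd = math.sqrt(dt)
    total = 0.0
    for n in range(N):
        dW = rng.gauss(0.0, sd)   # increment over [t_n, t_{n+1}]
        total += f(n * dt) * dW   # integrand evaluated at the left endpoint t_n
    return total

rng = random.Random(1)
T, N, n_paths = 1.0, 100, 4000    # illustrative values only
samples = [ito_sum(lambda t: t, T, N, rng) for _ in range(n_paths)]

mean = sum(samples) / n_paths
second_moment = sum(s * s for s in samples) / n_paths

# The mean should be near 0 and the second moment near T^3 / 3.
print(mean, second_moment, T ** 3 / 3)
```

With a finite <math>N</math> the sum has variance <math>\frac TN\sum f^2(t_n)</math>, which is slightly below <math>1/3</math> here, so a small discretization bias is expected on top of the Monte Carlo noise.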

==Stochastic Differential Equations & Itô's Lemma==

With the stochastic integral defined, we can now start talking about differentials. In applications the stochastic differential is how we think about random processes that evolve over time, for instance the return on a portfolio or the evolution of a bond yield. The idea is not that various physical phenomena are Brownian motion, but that they are ''driven'' by a Brownian motion.

Instead of a differential equation, the integrands in It\^o integrals satisfy stochastic differential equations (SDEs). For instance, | |||

<math display="block"> | |||

\begin{align*} | |||

&dS_t = \mu S_tdt+\sigma S_tdW_t\qquad\qquad\hbox{geometric Brownian motion,}\\ | |||

&dY_t = \kappa(\theta-Y_t)dt+\gamma dW_t\qquad\hbox{an Ornstein-Uhlenbeck process,}\\ | |||

&dX_t=a(X_t)dt+b(X_t)dW_t\qquad\hbox{a general SDE.} | |||

\end{align*} | |||

</math> | |||

Essentially, the formulation of an SDE tells us the It\^o integral representation. For instance, | |||

<math display="block">dX_t=a(X_t)dt+b(X_t)dW_t\qquad\Leftrightarrow\qquad X_t = X_0+\int_0^ta(X_s)ds+\int_0^tb(X_s)dW_s\ .</math> | |||

Hence, the solution of any SDE that has no <math>dt</math>-term is a martingale, provided the integrand satisfies the square-integrability condition stated above for the Itô integral. For instance, if <math>dS_t = rS_tdt+\sigma S_tdW_t</math>, then <math>F_t=e^{-rt}S_t</math> satisfies the SDE <math>dF_t = \sigma F_tdW_t</math> and is therefore a martingale.
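The integral representation suggests a simple discretization: replace both integrals by left-endpoint sums on a time grid (commonly called the Euler-Maruyama scheme, a name not used in the text). The sketch below is an illustration only; the function name <code>euler_maruyama</code> and all parameter values are assumptions. It applies the scheme to <math>dF_t=\sigma F_tdW_t</math> and checks that the sample mean of <math>F_T</math> stays near <math>F_0</math>, as expected for a martingale.

```python
import math
import random

def euler_maruyama(a, b, x0, T, N, rng):
    """Approximate X_T for dX = a(X)dt + b(X)dW by left-endpoint sums on N steps."""
    dt = T / N
    sd = math.sqrt(dt)
    x = x0
    for _ in range(N):
        dW = rng.gauss(0.0, sd)
        x += a(x) * dt + b(x) * dW   # drift integral plus Ito sum, both at the left endpoint
    return x

rng = random.Random(3)
sigma, F0, T, N, n_paths = 0.2, 1.0, 1.0, 50, 3000   # illustrative values only

# dF = sigma * F dW has no dt-term, so E[F_T] should equal F_0.
F_T = [euler_maruyama(lambda x: 0.0, lambda x: sigma * x, F0, T, N, rng)
       for _ in range(n_paths)]
mean_F_T = sum(F_T) / n_paths
print(mean_F_T)
```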

On any given day, mathematicians, physicists, and practitioners may or may not recall the conditions on functions <math>a</math> and <math>b</math> that provide a sound mathematical framework, but the safest thing to do is to work with coefficients that are known to provide existence and uniqueness of solutions to the SDE (see page 68 of <ref name="oksendal">{\O}ksendal, B. (2003).''Stochastic differential equations: an introduction with applications''.Springer.</ref>). | |||

{{proofcard|Theorem|thm:sdeEU|'''(Conditions for Existence and Uniqueness of Solutions to SDEs).''' For <math>0 < T < \infty</math>, let <math>t\in[0,T]</math> and consider the SDE | |||

<math display="block">dX_t = a(t,X_t)dt+b(t,X_t)dW_t\ ,</math> | |||

with initial condition <math>X_ 0 =x</math> (x constant). Sufficient conditions for existence and uniqueness of square-integrable solutions to this SDE (i.e. solutions such that <math>\mathbb E\int_0^T|X_t|^2dt < \infty</math>) are '''linear growth''' | |||

<math display="block">|a(t,x)|+|b(t,x)|\leq C(1+|x|)\qquad\forall x\in \mathbb R\hbox{ and }\forall t\in [0,T]</math> | |||

for some finite constant <math>C > 0</math>, and '''Lipschitz continuity''' | |||

<math display="block">|a(t,x)-a(t,y)|+|b(t,x)-b(t,y)|\leq D|x-y|\qquad\forall x,y\in\mathbb R\hbox{ and }\forall t\in [0,T]</math> | |||

where <math>0 < D < \infty</math> is the Lipschitz constant.|}} | |||

From the statement of [[#thm:sdeEU |Theorem]] it should be clear that these are not necessary conditions. For instance, the widely used '''square-root process''' | |||

<math display="block">dX_t = \kappa(\bar X-X_t)dt+\gamma\sqrt{X_t}dW_t</math> | |||

does not satisfy Lipschitz continuity for <math>x</math> near zero (the square root does satisfy the linear growth bound), but there does exist a unique solution if <math>\gamma^2\leq 2\bar X\kappa</math>. In general, existence of solutions for SDEs not covered by [[#thm:sdeEU |Theorem]] needs to be evaluated on a case-by-case basis. The rule of thumb is to stay within the bounds of the theorem, and only work with SDEs outside if you are certain that the solution exists (and is unique).

'''Example''' | |||

The canonical example to demonstrate non-uniqueness for non-Lipschitz coefficients is the Tanaka equation, | |||

<math display="block">dX_t = sgn(X_t)dW_t\ ,</math> | |||

with <math>X_0=0</math>, where <math>sgn(x) </math> is the sign function; <math>sgn(x) = 1</math> if <math>x > 0</math>, <math>sgn(x) = -1</math> if <math>x < 0</math> and <math>sgn(x) = 0</math> if <math>x=0</math>. Consider another Brownian motion <math>\hat W_t</math> and define | |||

<math display="block">\widetilde W_t = \int_0^tsgn(\hat W_s)d\hat W_s\ ,</math> | |||

where it can be checked that <math>\widetilde W_t</math> is also a Brownian motion. We can also write | |||

<math display="block">d\hat W_t = sgn(\hat W_t)d\widetilde W_t\ ,</math> | |||

which shows that <math>X_t=\hat W_t</math> is a solution to the Tanaka equation. However, this is referred to as a '''weak solution''', meaning that the driving Brownian motion was recovered after the solution <math>X</math> was given; a '''strong solution''' is a solution obtained when first the Brownian motion is given. Notice this weak solution is non-unique: take <math>Y_t=-X_t</math> and look at the differential, | |||

<math display="block">dY_t = -dX_t = -sgn(X_t)d\widetilde W_t=sgn(Y_t)d\widetilde W_t\ .</math> | |||

Notice that <math>X_t\equiv 0</math> is also a solution. | |||

Given a stochastic differential equation, It\^o's lemma tells us the differential of any function on that process. It\^o's lemma can be thought of as the stochastic analogue to differentiation, and is a fundamental tool in stochastic differential equations: | |||

{{proofcard|Lemma|lemma-1|'''(It\^o's Lemma).''' Consider the process <math>X_t</math> with SDE <math>dX_t = a(X_t)dt+b(X_t)dW_t</math>. For a function <math>f(t,x)</math> with at least one derivative in <math>t</math> and at least two derivatives in <math>x</math>, we have | |||

<span id{{=}}"eq:itoLemma"/> | |||

<math display="block"> | |||

\begin{equation} | |||

\label{eq:itoLemma} | |||

df(t,X_t) = \left(\frac{\partial}{\partial t}+a(X_t)\frac{\partial}{\partial x}+\frac{b^2(X_t)}{2}\frac{\partial^2}{\partial x^2}\right)f(t,X_t)dt+b(X_t)\frac{\partial}{\partial x}f(t,X_t)dW_t\ . | |||

\end{equation} | |||

</math>|}} | |||

'''Details on the It\^o Lemma.''' The proof of \eqref{eq:itoLemma} is fairly involved and has several details to check, but ultimately, It\^o's lemma is a Taylor expansion to the 2nd order term, e.g. | |||

<math display="block">f(W_t)\simeq f(W_{t_0})+f'(W_{t_0})(W_t-W_{t_0})+\frac 12f''(W_{t_0})(W_t-W_{t_0})^2</math> | |||

for <math>0 < t-t_0\ll 1</math>, (i.e. <math>t</math> just slightly greater than <math>t_0</math>). To get a sense of why higher order terms drop out, take <math>t_0=0</math> and <math>t_n = nt/N</math> for some large <math>N</math>, and use the Taylor expansion: | |||

<math display="block"> | |||

\begin{align*} | |||

f(W_t)-f(W_0) &= \sum_{n=0}^{N-1} f'(W_{t_n})(W_{t_{n+1}}-W_{t_n})+ \frac12\sum_{n=0}^{N-1} f''(W_{t_n})(W_{t_{n+1}}-W_{t_n})^2\\ | |||

&+ \frac16\sum_{n=0}^{N-1} f'''(W_{t_n})(W_{t_{n+1}}-W_{t_n})^3+ \frac{1}{24}\sum_{n=0}^{N-1} f''''(\xi_n)(W_{t_{n+1}}-W_{t_n})^4\ , | |||

\end{align*} | |||

</math> | |||

where <math>\xi_n</math> is some (random) intermediate point to make the expansion exact. Now we use independent increments and the fact that | |||

<math display="block">\mathbb E(W_{t_{n+1}}-W_{t_n})^k=\Bigg\{\begin{array}{cc} | |||

\left(\frac tN\right)^{k/2}(k-1)!!&\hbox{if <math>k</math> even}\\ | |||

0&\hbox{if <math>k</math> odd}\ , | |||

\end{array}</math> | |||

from which it can be seen that the <math>f'</math> and <math>f''</math> terms are significant,

<math display="block"> | |||

\begin{align*} | |||

\mathbb E\left(\sum_{n=0}^{N-1} f'(W_{t_n})(W_{t_{n+1}}-W_{t_n})\right)^2&= \frac tN\sum_{n=0}^{N-1} \mathbb E|f'(W_{t_n})|^2 = \mathcal O\left(1\right)\ ,\\ | |||

\mathbb E\sum_{n=0}^{N-1} f''(W_{t_n})(W_{t_{n+1}}-W_{t_n})^2& =\frac tN\sum_{n=0}^{N-1} \mathbb Ef''(W_{t_n})=\mathcal O\left(1\right)\ , | |||

\end{align*} | |||

</math> | |||

and assuming there is some bound <math>M < \infty</math> such that <math>|f'''|\leq M</math> and <math>|f''''|\leq M</math>, we see that the higher order terms are arbitrarily small, | |||

<math display="block"> | |||

\begin{align*} | |||

\mathbb E\left|\sum_{n=0}^{N-1} f'''(W_{t_n})(W_{t_{n+1}}-W_{t_n})^3\right|&\leq M\sum_{n=0}^{N-1} \mathbb E\left|W_{t_{n+1}}-W_{t_n}\right|^3\\ | |||

&\leq M\sum_{n=0}^{N-1}\sqrt{\mathbb E\left|W_{t_{n+1}}-W_{t_n}\right|^6}=\mathcal O\left(\frac{1}{N^{1/2}}\right)\ , \\ | |||

\mathbb E\left|\sum_{n=0}^{N-1} f''''(\xi_n)(W_{t_{n+1}}-W_{t_n})^4\right|&\leq M\sum_{n=0}^{N-1} \mathbb E(W_{t_{n+1}}-W_{t_n})^4=\mathcal O\left(\frac{1}{N}\right)\ , | |||

\end{align*} | |||

</math> | |||

where we've used the Cauchy-Schwarz inequality, <math>\mathbb E|Z|^3\leq \sqrt{\mathbb E|Z|^6}</math> for a random variable <math>Z</math>.
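The orders of magnitude derived above can be seen on simulated increments. The sketch below is an illustration under assumptions (the helper name <code>increment_power_sums</code>, the seed and the grid sizes are made up for this example): it sums the 2nd, 3rd and 4th powers of the Brownian increments on <math>[0,t]</math> with <math>t=1</math>. The sum of squares should stay close to <math>t</math>, while the sums of cubes and fourth powers should shrink as <math>N</math> grows.

```python
import math
import random

def increment_power_sums(t, N, rng):
    """Return {k: sum_n (W_{t_{n+1}} - W_{t_n})^k} for k = 2, 3, 4 on an N-step grid of [0, t]."""
    sd = math.sqrt(t / N)
    sums = {2: 0.0, 3: 0.0, 4: 0.0}
    for _ in range(N):
        dW = rng.gauss(0.0, sd)
        for k in sums:
            sums[k] += dW ** k
    return sums

rng = random.Random(2)
for N in (100, 10_000, 100_000):   # illustrative grid sizes
    s = increment_power_sums(1.0, N, rng)
    print(N, s[2], s[3], s[4])
```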

The following are examples that should help to familiarize with the It\^o lemma and solutions to SDEs: | |||

'''Example''' | |||

<math>dX_t = \kappa(\theta-X_t)dt+\gamma dW_t</math> has solution | |||

<math display="block"> | |||

X_t=\theta+(X_0-\theta)e^{-\kappa t}+\gamma \int_0^te^{-\kappa(t-s)}dW_s\ . | |||

</math> | |||

This solution uses an integrating factor<ref group="Notes" >For an ordinary differential equation <math>\frac{d}{dt}X_t+\kappa X_t = a_t</math>, the integrating factor is <math>e^{\kappa t}</math> and the solution is <math>X_t = X_0e^{-\kappa t}+\int_0^ta_se^{-\kappa(t-s)}ds</math>. An equivalent concept applies for stochastic differential equations.</ref> of <math>e^{\kappa t}</math>, | |||

<math display="block"> | |||

dX_t+\kappa X_tdt = \kappa\theta dt+\gamma dW_t\ . | |||

</math> | |||
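As a sketch of how the integrating factor is used (the steps below are filled in here and are not spelled out in the text): since <math>e^{\kappa t}</math> is deterministic, Itô's lemma applied to <math>f(t,x)=e^{\kappa t}x</math> has no second-order term, so

<math display="block">
\begin{align*}
d\left(e^{\kappa t}X_t\right) &= \kappa e^{\kappa t}X_tdt+e^{\kappa t}dX_t = e^{\kappa t}\left(\kappa\theta dt+\gamma dW_t\right)\ ,\\
e^{\kappa t}X_t &= X_0+\theta\left(e^{\kappa t}-1\right)+\gamma\int_0^te^{\kappa s}dW_s\ ,\\
X_t &= \theta+(X_0-\theta)e^{-\kappa t}+\gamma \int_0^te^{-\kappa(t-s)}dW_s\ .
\end{align*}
</math>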

'''Example'''

Applying Itô's lemma to <math>X_t = W_t^2</math> gives

<math display="block">dX_t = dt+2W_tdW_t\ .</math>

'''Example''' | |||

<math>\frac{dS_t}{S_t} = \mu dt+\sigma dW_t</math> has solution | |||

<math display="block"> | |||

S_t = S_0e^{\left(\mu-\frac{1}{2}\sigma^2\right)t+\sigma W_t} | |||

</math> | |||

which is always positive. Again, verify with It\^o's lemma. Also try It\^o's lemma on <math>\log(S_t)</math>. | |||
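For the suggested exercise on <math>\log(S_t)</math>, a sketch of the computation is as follows, using Itô's lemma with <math>a(x)=\mu x</math>, <math>b(x)=\sigma x</math> and <math>f(x)=\log x</math>, so that <math>f'(x)=1/x</math> and <math>f''(x)=-1/x^2</math>:

<math display="block">
\begin{align*}
d\log(S_t) &= \left(\mu S_t\cdot\frac{1}{S_t}-\frac{\sigma^2S_t^2}{2}\cdot\frac{1}{S_t^2}\right)dt+\sigma S_t\cdot\frac{1}{S_t}dW_t = \left(\mu-\frac 12\sigma^2\right)dt+\sigma dW_t\ ,\\
\log(S_t) &= \log(S_0)+\left(\mu-\frac 12\sigma^2\right)t+\sigma W_t\ ,
\end{align*}
</math>

and exponentiating recovers the solution stated above.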

'''Example''' | |||

Suppose <math>dY_t =(\sigma^2 Y_t^3 -aY_t)dt+\sigma Y_t^2dW_t</math>. Apply Itô's lemma to <math>X_t = -1/Y_t</math>, i.e. with <math>f(y)=-1/y</math>, <math>f'(y)=1/y^2</math> and <math>f''(y)=-2/y^3</math>, to get a simpler SDE for <math>X_t</math>,

<math display="block">dX_t = \left(\frac{\sigma^2Y_t^3-aY_t}{Y_t^2}-\frac{\sigma^2Y_t^4}{Y_t^3}\right)dt+\sigma dW_t = -\frac{a}{Y_t}dt+\sigma dW_t = aX_tdt+\sigma dW_t\ .</math>

Notice that <math>Y_t</math>'s SDE doesn't satisfy the criterion of [[#thm:sdeEU |Theorem]], but through the change of variables we see that <math>Y_t</math> is really a function of <math>X_t</math> that is covered by the theorem.

==Multivariate Itô Lemma==

Let <math>W_t = (W_t^1,W_t^2,\dots,W_t^n)</math> be an <math>n</math>-dimensional Brownian motion such that <math>\frac 1t\mathbb EW_t^iW_t^j = \indicator{i=j}</math>. Now suppose that we also have a system of SDEs, <math>X_t = (X_t^1,X_t^2,\dots,X_t^m)</math> (with <math>m</math> possibly not equal to <math>n</math>) such that

<math display="block">dX_t^i =\alpha_i(X_t)dt+\sum_{j=1}^nb_{ij}(X_t)dW_t^j\qquad\hbox{for each <math>i\leq m</math>}</math>

where <math>\alpha_i:\mathbb R^m\rightarrow \mathbb R</math> are the drift coefficients, and the diffusion coefficients <math>b_{ij}:\mathbb R^m\rightarrow \mathbb R</math> are such that <math>b_{ij}=b_{ji}</math> and | |||

<math display="block">\sum_{i,j=1}^m\sum_{\ell=1}^nb_{i\ell}(x)b_{j\ell}(x)v_iv_j > 0\qquad\forall v\in \mathbb R^m\hbox{ and }\forall x\in\mathbb R^m, </math> | |||

i.e. for each <math>x\in\mathbb R^m</math> the covariance matrix is positive definite. For some differentiable function, there is a multivariate version of the It\^o lemma. | |||

{{proofcard|Lemma|lemma-2|'''(The Multivariate It\^o Lemma).''' Let <math>f:\mathbb R^+\times\mathbb R^m\rightarrow \mathbb R</math> with at least one derivative in the first argument and at least two derivatives in the remaining <math>m</math> arguments. The differential of <math>f(t,X_t)</math> is | |||

<math display="block">df(t,X_t) = \left(\frac{\partial}{\partial t}+\sum_{i=1}^m\alpha_i(X_t)\frac{\partial}{\partial x_i}+\frac 12\sum_{i,j=1}^m\mathbf b_{ij}(X_t)\frac{\partial^2}{\partial x_i\partial x_j}\right)f(t,X_t)dt</math> | |||

<span id{{=}}"eq:multiVarIto"/> | |||

<math display="block"> | |||

\begin{equation} | |||

\label{eq:multiVarIto} | |||

+\sum_{i=1}^m\sum_{\ell=1}^nb_{i\ell}(X_t)\frac{\partial}{\partial x_i}f(t,X_t)dW_t^\ell\ | |||

\end{equation} | |||

</math> | |||

where <math>\mathbf b_{ij}(x) = \sum_{\ell=1}^nb_{i\ell}(x)b_{j\ell}(x)</math>.|}} | |||

Equation \eqref{eq:multiVarIto} is essentially a 2nd-order Taylor expansion, like the univariate case of equation \eqref{eq:itoLemma}. Of course, [[#thm:sdeEU |Theorem]] still applies to the system of SDEs (make sure <math>\alpha_i</math> and <math>b_{ij}</math> have linear growth and are Lipschitz continuous for each <math>i</math> and <math>j</math>). In the multidimensional case it is also important whether or not <math>n\geq m</math>, and if so it is important that there is some constant <math>c</math> such that <math>\inf_x\mathbf b(x) > c > 0</math>. If there is no such constant <math>c > 0</math>, then we are possibly dealing with a system that is ''degenerate'' and there could be (mathematically) technical problems.

'''Correlated Brownian Motion.''' Sometimes the multivariate case is formulated with a correlation structure among the <math>W_t^i</math>'s, in which case the Itô lemma of equation \eqref{eq:multiVarIto} will have extra correlation terms. Suppose there is a correlation matrix,

<math display="block">\rho = \begin{pmatrix}1&\rho_{12}&\rho_{13}&\dots&\rho_{1n}\\ | |||

\rho_{21}&1&\rho_{23}&\dots&\rho_{2n}\\ | |||

\rho_{31}&\rho_{32}&1&\dots&\rho_{3n}\\ | |||

\vdots&&&\ddots&\\ | |||

\rho_{n1}&\rho_{n2}&\rho_{n3}&\dots&1 | |||

\end{pmatrix}</math> | |||

where <math>\rho_{ij}=\rho_{ji}</math> and such that <math>\frac 1t\mathbb EW_t^iW_t^j=\rho_{ij}</math> for all <math>i,j\leq n</math>. Then equation \eqref{eq:multiVarIto} becomes | |||

<math display="block">df(t,X_t) = \left(\frac{\partial}{\partial t}+\sum_{i=1}^m\alpha_i(X_t)\frac{\partial}{\partial x_i}+\frac 12\sum_{i,j=1}^m\sum_{\ell,k=1}^n\rho_{\ell k}b_{i\ell}(X_t)b_{jk}(X_t)\frac{\partial^2}{\partial x_i\partial x_j}\right)f(t,X_t)dt</math>

<math display="block">

+\sum_{i=1}^m\sum_{\ell=1}^nb_{i\ell}(X_t)\frac{\partial}{\partial x_i}f(t,X_t)dW_t^\ell\ .</math>

'''Example''' | |||

<math display="block"> | |||

\begin{align*} | |||

&dX_t=a(X_t)dt+b(X_t)dW_t^1\\

&dY_t = \alpha(Y_t)dt+\sigma(Y_t)dW_t^2 | |||

\end{align*} | |||

</math> | |||

and with <math>\frac 1t\mathbb EW_t^1W_t^2 = \rho</math>. Then, | |||

<math display="block"> | |||

\begin{align*} | |||

df(t,X_t,Y_t)&= \frac{\partial}{\partial t}f(t,X_t,Y_t)dt\\ | |||

&\\ | |||

&+\underbrace{\left(\frac{b^2(X_t)}{2}\frac{\partial^2}{\partial x^2}+a(X_t)\frac{\partial}{\partial x}\right)f(t,X_t,Y_t)dt+b(X_t)\frac{\partial}{\partial x}f(t,X_t,Y_t)dW_t^1}_{\hbox{<math>X_t</math> terms}}\\ | |||

&+\underbrace{\left(\frac{\sigma^2(Y_t)}{2}\frac{\partial^2}{\partial y^2}+\alpha(Y_t)\frac{\partial}{\partial y}\right)f(t,X_t,Y_t)dt+\sigma(Y_t)\frac{\partial}{\partial y}f(t,X_t,Y_t)dW_t^2}_{\hbox{<math>Y_t</math> terms}}\\ | |||

&+\underbrace{\rho\sigma(Y_t)b(X_t)\frac{\partial^2}{\partial x\partial y}f(t,X_t,Y_t)dt}_{\hbox{cross-term.}}

\end{align*} | |||

</math> | |||
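A pair of Brownian motions with <math>\frac 1t\mathbb EW_t^1W_t^2 = \rho</math>, as in the example above, can be built from two independent ones. The sketch below uses the standard construction <math>dW_t^2 = \rho\, dZ_t^1+\sqrt{1-\rho^2}\,dZ_t^2</math> with <math>W^1=Z^1</math>; this construction, the function name <code>correlated_endpoints</code> and all parameter values are assumptions for illustration and are not taken from the text.

```python
import math
import random

def correlated_endpoints(rho, T, N, rng):
    """Return (W^1_T, W^2_T) with per-step correlation rho, built from independent increments."""
    sd = math.sqrt(T / N)
    c = math.sqrt(1.0 - rho * rho)
    w1 = w2 = 0.0
    for _ in range(N):
        z1 = rng.gauss(0.0, sd)
        z2 = rng.gauss(0.0, sd)
        w1 += z1
        w2 += rho * z1 + c * z2   # variance T/N, covariance rho * T/N with z1
    return w1, w2

rng = random.Random(4)
rho, T, N, n_paths = 0.6, 1.0, 20, 4000   # illustrative values only
pairs = [correlated_endpoints(rho, T, N, rng) for _ in range(n_paths)]

# Empirical version of (1/T) E[W^1_T W^2_T], which should be near rho.
estimate = sum(w1 * w2 for w1, w2 in pairs) / (n_paths * T)
print(estimate)
```

For more than two Brownian motions the same idea is usually carried out with a matrix square root (for example a Cholesky factor) of the correlation matrix.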

==The Feynman-Kac Formula== | |||

If one could identify the fundamental link between asset pricing and stochastic differential equations, it would be the Feynman-Kac formula. The Feynman-Kac formula says the following:

{{proofcard|Proposition|prop:feynmanKac|'''(The Feynman-Kac Formula).''' Let the function <math>f(x)</math> be bounded, let <math>\psi(x)</math> be twice differentiable with compact support<ref group="Notes" >Compact support of a function means there is a compact subset <math>K</math> such that <math>\psi(x) = 0</math> if <math>x\notin K</math>. For a real function of a scalar variable, this means there is a bound <math>M < \infty</math> such that <math>\psi(x)=0</math> if <math>|x| > M</math>.</ref> in <math>K\subset \mathbb R</math>, let the function <math>q(x)</math> be bounded below for all <math>x\in\mathbb R</math>, and let <math>X_t</math> be given by the SDE

<span id{{=}}"eq:FCsde"/> | |||

<math display="block"> | |||

\begin{equation} | |||

\label{eq:FCsde} | |||

dX_t = a(X_t)dt+b(X_t)dW_t\ . | |||

\end{equation} | |||

</math> | |||

<ul style{{=}}"list-style-type:lower-roman"><li>For <math>t\in[0,T]</math>, the Feynman-Kac formula is | |||

<span id{{=}}"eq:FCformula"/> | |||

<math display="block"> | |||

\begin{equation} | |||

\label{eq:FCformula} | |||

v(t,x) = \mathbb E\left[ \int_t^Tf(X_s)e^{-\int_t^sq(X_u)du}ds+e^{-\int_t^Tq(X_s)ds}\psi(X_T)\Big|X_t=x\right] | |||

\end{equation} | |||

</math> | |||

and is a solution to the following partial differential equation (PDE): | |||

<span id{{=}}"eq:FCpde"/><span id{{=}}"eq:FCpdeTC"/> | |||

<math display="block"> | |||

\begin{eqnarray} | |||

\label{eq:FCpde} | |||

\frac{\partial}{\partial t}v(t,x) +\left( \frac{b^2(x)}{2}\frac{\partial^2}{\partial x^2}+a(x)\frac{\partial}{\partial x}\right)v(t,x) - q(x)v(t,x)+f(x) &=&0\\

\label{eq:FCpdeTC} | |||

v(T,x)&=&\psi(x)\ . | |||

\end{eqnarray} | |||

</math></li> | |||

<li>If <math>\omega(t,x)</math> is a bounded solution to equations \eqref{eq:FCpde} and \eqref{eq:FCpdeTC} for <math>x\in K</math>, then <math>\omega(t,x) = v(t,x)</math>.</li> | |||

</ul>|}} | |||

The Feynman-Kac formula will be instrumental in pricing European derivatives in the coming sections. For now it is important to take note of how the SDE in \eqref{eq:FCsde} relates to the formula \eqref{eq:FCformula} and to the PDE of \eqref{eq:FCpde} and \eqref{eq:FCpdeTC}. It is also important to conceptualize how the Feynman-Kac formula might be extended to the multivariate case. In particular, for scalar solutions of \eqref{eq:FCpde} and \eqref{eq:FCpdeTC} that are expectations of a multivariate process <math>X_t\in\mathbb R^m</math>, the key thing to realize is that the <math>x</math>-derivatives in \eqref{eq:FCpde} are the same as the <math>dt</math>-terms in the Itô lemma. Hence, the multivariate Feynman-Kac formula can be deduced from the multivariate Itô lemma.
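The link between the expectation and the PDE can be illustrated on a case with a closed form. The sketch below makes several assumptions that are not in the text: <math>a\equiv 0</math>, <math>b\equiv 1</math> (so <math>X</math> is a Brownian motion), <math>f\equiv 0</math>, a constant <math>q(x)=r</math>, and <math>\psi(x)=x^2</math>. This <math>\psi</math> does not have compact support, so the example sits outside the hypotheses of the proposition and is meant only as intuition. In this case <math>v(t,x)=e^{-r(T-t)}\left(x^2+T-t\right)</math>. All function names are hypothetical.

```python
import math
import random

def v_closed_form(t, x, T, r):
    """Candidate solution for a = 0, b = 1, f = 0, q = r, psi(x) = x^2."""
    return math.exp(-r * (T - t)) * (x * x + (T - t))

def v_monte_carlo(t, x, T, r, n_samples, rng):
    """Estimate E[exp(-r (T - t)) psi(X_T) | X_t = x] by direct sampling of X_T."""
    sd = math.sqrt(T - t)
    total = 0.0
    for _ in range(n_samples):
        x_T = x + rng.gauss(0.0, sd)   # X_T given X_t = x when X is Brownian motion
        total += x_T * x_T             # psi(X_T)
    return math.exp(-r * (T - t)) * total / n_samples

def pde_residual(t, x, T, r, h=1e-3):
    """Central-difference residual of v_t + (1/2) v_xx - r v for the closed form."""
    v = lambda s, y: v_closed_form(s, y, T, r)
    v_t = (v(t + h, x) - v(t - h, x)) / (2 * h)
    v_xx = (v(t, x + h) - 2 * v(t, x) + v(t, x - h)) / (h * h)
    return v_t + 0.5 * v_xx - r * v(t, x)

rng = random.Random(6)
T, r = 1.0, 0.05                       # illustrative values only
mc = v_monte_carlo(0.0, 1.0, T, r, 20000, rng)
exact = v_closed_form(0.0, 1.0, T, r)
print(mc, exact, pde_residual(0.25, 1.0, T, r))
```

The Monte Carlo value approximates the expectation in \eqref{eq:FCformula}, while the residual checks that the same function satisfies \eqref{eq:FCpde} up to finite-difference error.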

==Girsanov Theorem== | |||

Another important link between asset pricing and stochastic differential equations is the Girsanov theorem, which provides a means for defining the equivalent martingale measure. | |||

For <math>T < \infty</math>, consider a Brownian motion <math>(W_t)_{t\leq T}</math>, and consider another process <math>\theta_t</math> that does not anticipate future outcomes of <math>W</math> (i.e. given the filtration <math>\mathcal F_t^W</math> generated by the history of <math>W</math> up to time <math>t</math>, <math>\theta_t</math> is adapted to <math>\mathcal F_t^W</math>). The first ingredient of the Girsanov theorem is the Doléans-Dade exponential:

<span id{{=}}"eq:doleanExp"/> | |||

<math display="block"> | |||

\begin{equation} | |||

\label{eq:doleanExp} | |||

Z_t \doteq \exp\left(-\frac 12\int_0^t\theta_s^2ds+\int_0^t\theta_sdW_s\right) | |||

\end{equation} | |||

</math> | |||

A sufficient condition for the application of Girsanov theorem is '''the Novikov condition,''' | |||

<math display="block">\mathbb E\exp\left(\frac 12\int_0^T\theta_s^2ds\right) < \infty\ .</math> | |||

Given the Novikov condition, the process <math>Z_t</math> is a martingale on <math>[0,T]</math> and a new probability measure is defined using the density | |||

<span id{{=}}"eq:Qmeasure"/> | |||

<math display="block"> | |||

\begin{equation} | |||

\label{eq:Qmeasure} | |||

\frac{d\mathbb Q}{d\mathbb P}\Big|_t=Z_t\qquad\forall t\leq T\ ; | |||

\end{equation} | |||

</math> | |||

in general <math>Z</math> may not be a true martingale but only a ''local martingale'' (see [[guide:85577edf09#app:martingalesStoppingTimes |Appendix]] and <ref name="harrisonPliska">Harrison, M. and Pliska, S. (1981).Martingales and stochastic integrals in the theory of continuous trading.''Stochastic Processes and their Applications'', (3):215--260.</ref>). The Girsanov Theorem is stated as follows: | |||

{{proofcard|Theorem|thm:girsanov|'''(Girsanov Theorem).''' If <math>Z_t</math> is a true martingale on <math>[0,T]</math> then the process <math>\widetilde W_t = W_t-\int_0^t\theta_sds</math> is Brownian motion under the measure <math>\mathbb Q</math> on <math>[0,T]</math>.|}} | |||

{{alert-info | The Novikov condition is only sufficient and not necessary. There are other sufficient conditions such as the Kazamaki condition, <math>\sup_{\tau\in\mathcal T}\mathbb Ee^{\frac12\int_0^{T\wedge\tau}\theta_sdW_s} < \infty</math> where <math>\mathcal T</math> is the set of finite stopping times. }} | |||

{{alert-info | It is important to have <math>T < \infty</math>. In fact, the Theorem does not hold for infinite time. }} | |||

'''Example'''

Consider the geometric Brownian motion

<math display="block">\frac{dS_t}{S_t}=\mu dt+\sigma dW_t\ ,</math>

and let <math>r\geq 0</math> be the risk-free rate of interest. For the exponential martingale | |||

<math display="block">Z_t =\exp\left(-\frac t2\left(\frac{r-\mu}{\sigma}\right)^2+\frac{r-\mu}{\sigma}W_t\right)\ ,</math> | |||

the process <math> W_t^Q \doteq \frac{\mu-r}{\sigma}t+W_t</math> is <math>\mathbb Q</math>-Brownian motion, and the price process satisfies, | |||

<math display="block">\frac{dS_t}{S_t}=rdt+\sigma dW_t^Q\ .</math> | |||

Hence | |||

<math display="block">S_t = S_0\exp\left(\left(r-\frac 12\sigma^2\right)t+\sigma W_t^Q\right)</math> | |||

and <math>S_te^{-rt}</math> is a <math>\mathbb Q</math>-martingale. | |||
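The change of measure in this example can be checked by simulation under <math>\mathbb P</math>: weighting by <math>Z_T</math> turns a <math>\mathbb P</math>-expectation into a <math>\mathbb Q</math>-expectation, so <math>\mathbb E^{\mathbb P}[Z_T]</math> should be close to 1 and <math>\mathbb E^{\mathbb P}[Z_Te^{-rT}S_T]</math> should be close to <math>S_0</math>. The sketch below is only an illustration; the numerical values of <math>\mu</math>, <math>r</math>, <math>\sigma</math>, <math>S_0</math>, <math>T</math>, the seed and the sample size are assumptions.

```python
import math
import random

mu, r, sigma, S0, T = 0.10, 0.03, 0.20, 100.0, 1.0   # illustrative values only
theta = (r - mu) / sigma
rng = random.Random(5)
n_samples = 20000

sum_Z = 0.0
sum_ZS = 0.0
for _ in range(n_samples):
    W_T = rng.gauss(0.0, math.sqrt(T))                              # W_T sampled under P
    Z_T = math.exp(-0.5 * theta ** 2 * T + theta * W_T)             # density dQ/dP at time T
    S_T = S0 * math.exp((mu - 0.5 * sigma ** 2) * T + sigma * W_T)  # price under P
    sum_Z += Z_T
    sum_ZS += Z_T * math.exp(-r * T) * S_T

mean_Z = sum_Z / n_samples                       # should be near 1
discounted_price_under_Q = sum_ZS / n_samples    # should be near S0
print(mean_Z, discounted_price_under_Q)
```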

==General references== | |||

{{cite arXiv|last1=Papanicolaou|first1=Andrew|year=2015|title=Introduction to Stochastic Differential Equations (SDEs) for Finance|eprint=1504.05309|class=q-fin.MF}} | |||

==References== | |||

{{reflist}} | |||

==Notes== | |||

{{Reflist|group=Notes}} | |||

Revision as of 00:30, 4 June 2024

\label{chapt:brownianMotion} Continuous time financial models will often use Brownian motion to model the trajectory of asset prices. One can typically open the finance section of a newspaper and see a time series plot of an asset's price history, and it might be possible that the daily movements of the asset resemble a random walk or a path taken by a Brownian motion. Such a connection between asset prices and Brownian motion was central to the formulas of [1] and [2], and have since led to a variety of models for pricing and hedging. The study of Brownian motion can be intense, but the main ideas are a simple definition and the application of It\^o's lemma. For further reading, see [3][4].

Definition and Properties of Brownian Motion

On the time interval [math][0,T][/math], Brownian motion is a continuous stochastic process [math](W_t)_{t\leq T}[/math] such that

- [math]W_0 = 0[/math],

- Independent Increments: for [math]0\leq s' \lt t'\leq s \lt t\leq T[/math], [math]W_t-W_s[/math] is independent of [math]W_{t'}-W_{s'}[/math],

- Conditionally Gaussian: [math]W_t-W_s\sim\mathcal N(0,t-s)[/math], i.e. is normal with mean zero and variance [math]t-s[/math].

There is a vast study of Brownian motion. We will instead use Brownian motion rather simply; the only other fact that is somewhat important is that Brownian motion is nowhere differentiable, that is

although sometimes people write [math]\dot W_t[/math] to denote a white noise process. It should also be pointed out that [math]W_t[/math] is a martingale,

Simulation. There are any number of ways to simulate Brownian motion, but to understand why Brownian motion can be treated like a ‘random walk’, consider the process,

with [math]W_{t_0} = 0[/math] and [math]t_n = n\frac TN[/math] for [math]n=0,1,2\dots,N[/math]. Obviously [math]W_{t_n}^N[/math] has independent increments, and conditionally has the same mean and variance as Brownian motion. However it's not conditional Gaussian. However, as [math]N\rightarrow \infty[/math] the probability law of [math]W_{t_n}^N[/math] converges to the probability law of Brownian motion, so this simple random walk is actually a good way to simulate Brownian motion if you take [math]N[/math] large. However, one usually has a random number generator that can produce [math]W_{t_n}^N[/math] that also has conditionally Gaussian increments, and so it probably better to simulate Brownian motion this way. A sample of 10 independent Brownian Motions simulations are shown in Figure.

The It\^o Integral

Introductory calculus courses teach differentiation first and integration second, which makes sense because differentiation is generally a methodical procedure (e.g., you use the chain rule) whereas finding an anti-derivative requires all kinds of change-of-variables, trig-substitutions, and guess-work. The Itô calculus is derived and taught in the reverse order: first we need to understand the definition of an Itô (stochastic) integral, and then we can understand what it means for a stochastic process to have a differential. The construction of the Itô integral begins with a backward Riemann sum, in which the integrand is evaluated at the left endpoint of each subinterval. For some function [math]f:[0,T]\rightarrow \mathbb R[/math] (possibly random), non-anticipative of [math]W[/math], the Itô integral is defined as

[[math]]
\begin{equation}
\label{eq:itoInt}
\int_0^Tf(t)dW_t = \lim_{N\rightarrow\infty}\sum_{n=0}^{N-1}f(t_n)(W_{t_{n+1}}-W_{t_n})\ ,
\end{equation}
[[/math]]

where [math]t_n = n\frac TN[/math], with the limit holding in the strong sense. If [math]\mathbb E\int_0^Tf^2(t)dt \lt \infty[/math], then through an application of Fubini's theorem, it can be shown that equation \eqref{eq:itoInt} is a martingale,

[[math]]\mathbb E\left[\int_0^Tf(t)dW_t\Big|(W_\tau)_{\tau\leq s}\right] = \int_0^sf(t)dW_t\qquad\hbox{for all }s\leq T\ .[[/math]]

Another important property of the stochastic integral is the Itô Isometry,

(Itô Isometry). For any functions [math]f,g[/math] (possibly random), non-anticipative of [math]W[/math], with [math]\mathbb E\int_0^Tf^2(t)dt \lt \infty[/math] and [math]\mathbb E\int_0^Tg^2(t)dt \lt \infty[/math], we have

[[math]]\mathbb E\left[\left(\int_0^Tf(t)dW_t\right)\left(\int_0^Tg(t)dW_t\right)\right] = \mathbb E\int_0^Tf(t)g(t)dt\ .[[/math]]

Some facts about the Itô integral:

- One can look at Equation \eqref{eq:itoInt} and, for non-random [math]f[/math], think about the stochastic integral as a weighted sum of independent normal random variables, each with mean zero and variance [math]T/N[/math],

[[math]]\sum_{n=0}^{N-1} f(t_n)(W_{t_{n+1}}-W_{t_n})\sim \mathcal N\left( 0 ~,\frac TN\sum_{n=0}^{N-1} f^2(t_n)\right)\ .[[/math]]Therefore, one might suspect that [math]\int_0^Tf(t)dW_t[/math] is normally distributed.

- In fact, the Itô integral is normally distributed when [math]f[/math] is a non-stochastic function, and its variance is given by the Itô isometry,

[[math]]\int_0^Tf(t)dW_t\sim \mathcal N\left(0~,\int_0^Tf^2(t)dt\right)\ .[[/math]]

- The Itô integral is also defined for functions of another stochastic process. For instance, given [math]f:\mathbb R\rightarrow \mathbb R[/math] and another process [math]X_t[/math], the Itô integral is

[[math]]\int_0^Tf(X_t)dW_t =\lim_{N\rightarrow\infty}\sum_{n=0}^{N-1}f(X_{t_n})(W_{t_{n+1}}-W_{t_n})\ .[[/math]]The Itô isometry for this integral is[[math]]\mathbb E\left(\int_0^Tf(X_t)dW_t\right)^2 = \int_0^T\mathbb Ef^2(X_t)dt\ ,[[/math]]provided that [math]X[/math] is non-anticipative of [math]W[/math].
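The facts listed above can be checked numerically with a small Monte Carlo sketch. The helper name `ito_integral_samples` and the test integrand [math]f(t)=t[/math] are illustrative assumptions, not part of the text. The code forms the left-endpoint sum [math]\sum_n f(t_n)(W_{t_{n+1}}-W_{t_n})[/math] for many independent paths and compares the sample mean and variance with [math]0[/math] and [math]\int_0^Tt^2dt = T^3/3[/math].

```python
import numpy as np

def ito_integral_samples(f, T=1.0, N=200, n_paths=20000, seed=0):
    """Samples of sum_n f(t_n) (W_{t_{n+1}} - W_{t_n}) for a deterministic, vectorized f."""
    rng = np.random.default_rng(seed)
    dt = T / N
    t_left = np.arange(N) * dt                               # left endpoints t_0, ..., t_{N-1}
    dW = rng.normal(0.0, np.sqrt(dt), size=(n_paths, N))     # Brownian increments
    return dW @ f(t_left)                                    # one left-endpoint sum per path

samples = ito_integral_samples(lambda t: t)
print("sample mean:", samples.mean())
print("sample variance:", samples.var(), "vs T^3/3 =", 1.0 / 3.0)
```

The agreement is only approximate: there is Monte Carlo error from the finite number of paths and a small discretization bias from using finite [math]N[/math].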

Stochastic Differential Equations & Itô's Lemma

With the stochastic integral defined, we can now start talking about differentials. In applications the stochastic differential is how we think about random processes that evolve over time, for instance the return on a portfolio or the evolution of a bond yield. The idea is not that various physical phenomena are Brownian motion, but that they are driven by a Brownian motion. Instead of a differential equation, the integrands in Itô integrals satisfy stochastic differential equations (SDEs). For instance,

[[math]]dX_t = a(t,X_t)dt+b(t,X_t)dW_t\ .[[/math]]

Essentially, the formulation of an SDE tells us the Itô integral representation. For instance,

[[math]]X_t = X_0+\int_0^ta(s,X_s)ds+\int_0^tb(s,X_s)dW_s\ .[[/math]]

Hence, the solution of any SDE that has no [math]dt[/math]-terms is a martingale, provided the integrand satisfies the integrability condition of the previous section. For instance, if [math]dS_t = rS_tdt+\sigma S_tdW_t[/math], then [math]F_t=e^{-rt}S_t[/math] satisfies the SDE [math]dF_t = \sigma F_tdW_t[/math] and is therefore a martingale. On any given day, mathematicians, physicists, and practitioners may or may not recall the conditions on functions [math]a[/math] and [math]b[/math] that provide a sound mathematical framework, but the safest thing to do is to work with coefficients that are known to provide existence and uniqueness of solutions to the SDE (see page 68 of [4]).
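The integral representation also suggests a simple way to approximate a solution on a time grid: replace each integral by its left-endpoint sum. The sketch below uses this idea (commonly called the Euler-Maruyama scheme, which is not discussed in the text) for time-homogeneous coefficients. The function name `euler_maruyama` and the parameter values are illustrative assumptions. As a sanity check it estimates [math]\mathbb E[e^{-rT}S_T][/math] for [math]dS_t = rS_tdt+\sigma S_tdW_t[/math], which should be close to [math]S_0[/math] because the discounted process is a martingale.

```python
import numpy as np

def euler_maruyama(a, b, x0, T=1.0, N=200, n_paths=10000, seed=0):
    """Approximate X_T for dX = a(X) dt + b(X) dW using left-endpoint increments."""
    rng = np.random.default_rng(seed)
    dt = T / N
    x = np.full(n_paths, float(x0))
    for _ in range(N):
        dW = rng.normal(0.0, np.sqrt(dt), size=n_paths)
        x = x + a(x) * dt + b(x) * dW      # coefficients evaluated before the increment
    return x

# Illustrative parameter values (assumptions, not from the text)
r, sigma, S0, T = 0.05, 0.2, 100.0, 1.0
S_T = euler_maruyama(lambda s: r * s, lambda s: sigma * s, S0, T=T)
print("discounted mean:", np.exp(-r * T) * S_T.mean(), "vs S0 =", S0)
```

Evaluating the coefficients before drawing the increment mirrors the non-anticipative, left-endpoint construction of the Itô integral.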

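(Conditions for Existence and Uniqueness of Solutions to SDEs). For [math]0 \lt T \lt \infty[/math], let [math]t\in[0,T][/math] and consider the SDE

[[math]]dX_t = a(t,X_t)dt+b(t,X_t)dW_t\ ,[[/math]]

with initial condition [math]X_0=x[/math] ([math]x[/math] constant). Sufficient conditions for existence and uniqueness of square-integrable solutions to this SDE (i.e. solutions such that [math]\mathbb E\int_0^T|X_t|^2dt \lt \infty[/math]) are linear growth,

[[math]]|a(t,x)|+|b(t,x)|\leq C(1+|x|)\qquad\forall x\in\mathbb R\hbox{ and }\forall t\in[0,T]\ ,[[/math]]

for some finite constant [math]C \gt 0[/math], and Lipschitz continuity,

[[math]]|a(t,x)-a(t,y)|+|b(t,x)-b(t,y)|\leq D|x-y|\qquad\forall x,y\in\mathbb R\hbox{ and }\forall t\in[0,T]\ ,[[/math]]

where [math]0 \lt D \lt \infty[/math] is the Lipschitz constant.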

From the statement of the theorem it should be clear that these are sufficient but not necessary conditions. For instance, the widely used square-root process

[[math]]dX_t = \kappa(\bar X-X_t)dt+\gamma\sqrt{X_t}dW_t[[/math]]

does not satisfy Lipschitz continuity for [math]x[/math] near zero (the slope of [math]\sqrt x[/math] is unbounded there), but there does exist a unique solution if [math]\gamma^2\leq 2\bar X\kappa[/math]. In general, existence of solutions for SDEs not covered by the theorem needs to be evaluated on a case-by-case basis. The rule of thumb is to stay within the bounds of the theorem, and only work with SDEs outside of it if you are certain that the solution exists (and is unique).

Example. The canonical example to demonstrate non-uniqueness for non-Lipschitz coefficients is the Tanaka equation,

[[math]]dX_t = sgn(X_t)dW_t\ ,[[/math]]

with [math]X_0=0[/math], where [math]sgn(x) [/math] is the sign function: [math]sgn(x) = 1[/math] if [math]x \gt 0[/math], [math]sgn(x) = -1[/math] if [math]x \lt 0[/math] and [math]sgn(x) = 0[/math] if [math]x=0[/math]. Consider another Brownian motion [math]\hat W_t[/math] and define

[[math]]\widetilde W_t = \int_0^tsgn(\hat W_s)d\hat W_s\ ,[[/math]]

where it can be checked that [math]\widetilde W_t[/math] is also a Brownian motion. Since [math]sgn^2(\hat W_t)=1[/math] for almost every [math]t[/math], we can also write

[[math]]d\hat W_t = sgn(\hat W_t)d\widetilde W_t\ ,[[/math]]

which shows that [math]X_t=\hat W_t[/math] is a solution to the Tanaka equation. However, this is referred to as a weak solution, meaning that the driving Brownian motion was recovered after the solution [math]X[/math] was given; a strong solution is a solution obtained when the Brownian motion is given first. Notice this weak solution is non-unique: take [math]Y_t=-X_t[/math] and look at the differential,

[[math]]dY_t = -dX_t = -sgn(X_t)dW_t = sgn(Y_t)dW_t\ .[[/math]]

Notice that [math]X_t\equiv 0[/math] is also a solution.

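Given a stochastic differential equation, Itô's lemma tells us the differential of any sufficiently smooth function of that process. Itô's lemma can be thought of as the stochastic analogue of the chain rule, and is a fundamental tool in stochastic differential equations:

(Itô's Lemma). Consider the process [math]X_t[/math] with SDE [math]dX_t = a(X_t)dt+b(X_t)dW_t[/math]. For a function [math]f(t,x)[/math] with at least one derivative in [math]t[/math] and at least two derivatives in [math]x[/math], we have

[[math]]
\begin{equation}
\label{eq:itoLemma}
df(t,X_t) = \left(\frac{\partial}{\partial t}+\frac{b^2(X_t)}{2}\frac{\partial^2}{\partial x^2}+a(X_t)\frac{\partial}{\partial x}\right)f(t,X_t)dt+b(X_t)\frac{\partial}{\partial x}f(t,X_t)dW_t\ .
\end{equation}
[[/math]]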

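Details on the Itô Lemma. The proof of \eqref{eq:itoLemma} is fairly involved and has several details to check, but ultimately, Itô's lemma is a Taylor expansion to the 2nd-order term, e.g.

[[math]]f(W_t)\simeq f(W_{t_0})+f'(W_{t_0})(W_t-W_{t_0})+\frac 12f''(W_{t_0})(W_t-W_{t_0})^2[[/math]]

for [math]0 \lt t-t_0\ll 1[/math] (i.e. [math]t[/math] just slightly greater than [math]t_0[/math]). To get a sense of why higher order terms drop out, take [math]t_0=0[/math] and [math]t_n = nt/N[/math] for some large [math]N[/math], write [math]\Delta W_n = W_{t_{n+1}}-W_{t_n}[/math], and use the Taylor expansion:

[[math]]
\begin{align*}
f(W_t)-f(W_0) =& \sum_{n=0}^{N-1}f'(W_{t_n})\Delta W_n+\frac 12\sum_{n=0}^{N-1}f''(W_{t_n})(\Delta W_n)^2\\
&+\frac 16\sum_{n=0}^{N-1}f'''(W_{t_n})(\Delta W_n)^3+\frac 1{24}\sum_{n=0}^{N-1}f''''(\xi_n)(\Delta W_n)^4\ ,
\end{align*}
[[/math]]

where [math]\xi_n[/math] is some (random) intermediate point to make the expansion exact. Now we use independent increments and the fact that

[[math]]\mathbb E(W_{t_{n+1}}-W_{t_n})^k = \left\{\begin{array}{ll}\left(\frac tN\right)^{k/2}(k-1)!!&\hbox{if $k$ even}\\ 0&\hbox{if $k$ odd}\ ,\end{array}\right.[[/math]]

from which it can be seen that the [math]f'[/math] and [math]f''[/math] terms are significant,

[[math]]
\begin{align*}
\mathbb E\left(\sum_{n=0}^{N-1}f'(W_{t_n})\Delta W_n\right)^2 &= \frac tN\sum_{n=0}^{N-1}\mathbb E|f'(W_{t_n})|^2 = \mathcal O(1)\ ,\\
\mathbb E\sum_{n=0}^{N-1}f''(W_{t_n})(\Delta W_n)^2 &= \frac tN\sum_{n=0}^{N-1}\mathbb Ef''(W_{t_n}) = \mathcal O(1)\ ,
\end{align*}
[[/math]]

and assuming there is some bound [math]M \lt \infty[/math] such that [math]|f'''|\leq M[/math] and [math]|f''''|\leq M[/math], we see that the higher order terms are arbitrarily small,

[[math]]
\begin{align*}
\mathbb E\left|\sum_{n=0}^{N-1}f'''(W_{t_n})(\Delta W_n)^3\right| &\leq M\sum_{n=0}^{N-1}\mathbb E|\Delta W_n|^3\leq M\sum_{n=0}^{N-1}\sqrt{\mathbb E(\Delta W_n)^6} = \sqrt{15}M\frac{t^{3/2}}{N^{1/2}}\ ,\\
\mathbb E\left|\sum_{n=0}^{N-1}f''''(\xi_n)(\Delta W_n)^4\right| &\leq M\sum_{n=0}^{N-1}\mathbb E(\Delta W_n)^4 = 3M\frac{t^2}{N}\ ,
\end{align*}
[[/math]]

where we've used the Cauchy-Schwarz inequality, [math]\mathbb E|Z|^3\leq \sqrt{\mathbb E|Z|^6}[/math] for some random variable [math]Z[/math].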
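The scaling behind this argument can be seen numerically. The sketch below (the function name `increment_power_sums` is a hypothetical helper) draws [math]N[/math] Gaussian increments with variance [math]t/N[/math] and sums their absolute values raised to the powers 2, 3 and 4. With bounded derivatives, these sums control the 2nd-, 3rd- and 4th-order Taylor terms: the quadratic sum stays close to [math]t[/math], while the cubic and quartic sums shrink as [math]N[/math] grows.

```python
import numpy as np

def increment_power_sums(t=1.0, N=10000, seed=0):
    """Return {k: sum_n |W_{t_{n+1}} - W_{t_n}|^k} for k = 2, 3, 4 on a grid of N steps."""
    rng = np.random.default_rng(seed)
    dW = rng.normal(0.0, np.sqrt(t / N), size=N)
    return {k: float(np.sum(np.abs(dW) ** k)) for k in (2, 3, 4)}

for N in (100, 10000, 1000000):
    print(N, increment_power_sums(N=N))
```

The quadratic sum is the reason the [math]\frac 12f''[/math] term survives in Itô's lemma as a [math]dt[/math]-term.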

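The following are examples that should help the reader become familiar with the Itô lemma and solutions to SDEs:

Example. The SDE [math]dX_t = \kappa(\theta-X_t)dt+\gamma dW_t[/math] has solution

[[math]]X_t = \theta+(X_0-\theta)e^{-\kappa t}+\gamma\int_0^te^{-\kappa(t-s)}dW_s\ .[[/math]]

This solution uses an integrating factor[Notes 1] of [math]e^{\kappa t}[/math],

[[math]]d\left(e^{\kappa t}X_t\right) = e^{\kappa t}\left(dX_t+\kappa X_tdt\right) = \kappa\theta e^{\kappa t}dt+\gamma e^{\kappa t}dW_t\ .[[/math]]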
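Because the stochastic integral in this solution has a deterministic integrand, it is Gaussian with variance given by the Itô isometry, so the process can be sampled exactly on a grid. The sketch below relies on that observation. The function name `ou_exact` and the parameter values are illustrative assumptions. Over a step of length [math]\Delta[/math] the transition is [math]X_{t+\Delta} = \theta+(X_t-\theta)e^{-\kappa\Delta}+\gamma\sqrt{(1-e^{-2\kappa\Delta})/(2\kappa)}\,\xi[/math] with [math]\xi[/math] standard normal.

```python
import numpy as np

def ou_exact(x0, kappa, theta, gamma, T=1.0, N=50, n_paths=20000, seed=0):
    """Sample X_T for dX = kappa (theta - X) dt + gamma dW using the exact Gaussian transition."""
    rng = np.random.default_rng(seed)
    dt = T / N
    decay = np.exp(-kappa * dt)
    # Std. dev. of gamma * int_0^dt exp(-kappa (dt - s)) dW_s, from the Ito isometry
    step_std = gamma * np.sqrt((1.0 - decay**2) / (2.0 * kappa))
    x = np.full(n_paths, float(x0))
    for _ in range(N):
        x = theta + (x - theta) * decay + step_std * rng.normal(size=n_paths)
    return x

# Illustrative parameter values (assumptions, not from the text)
x0, kappa, theta, gamma, T = 2.0, 1.5, 0.5, 0.3, 1.0
X_T = ou_exact(x0, kappa, theta, gamma, T=T)
print("mean:", X_T.mean(), "vs", theta + (x0 - theta) * np.exp(-kappa * T))
print("var: ", X_T.var(), "vs", gamma**2 * (1 - np.exp(-2 * kappa * T)) / (2 * kappa))
```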

Example

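Example. The SDE [math]\frac{dS_t}{S_t} = \mu dt+\sigma dW_t[/math] has solution

[[math]]S_t = S_0e^{\left(\mu-\frac 12\sigma^2\right)t+\sigma W_t}\ ,[[/math]]

which is always positive when [math]S_0 \gt 0[/math]. Again, verify with Itô's lemma. Also try Itô's lemma on [math]\log(S_t)[/math].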
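As a worked check of the last suggestion, here is a sketch of Itô's lemma applied to [math]f(s)=\log(s)[/math], assuming [math]S_t \gt 0[/math] and using [math]f'(s)=1/s[/math] and [math]f''(s)=-1/s^2[/math]:

[[math]]
\begin{align*}
d\log(S_t) &= \frac{1}{S_t}dS_t-\frac{1}{2S_t^2}\sigma^2S_t^2dt\\
&= \left(\mu-\frac 12\sigma^2\right)dt+\sigma dW_t\ .
\end{align*}
[[/math]]

Integrating from [math]0[/math] to [math]t[/math] gives [math]\log(S_t) = \log(S_0)+\left(\mu-\frac 12\sigma^2\right)t+\sigma W_t[/math], and exponentiating both sides gives the exponential form of [math]S_t[/math]. The [math]-\frac 12\sigma^2[/math] correction is exactly the second-order term that ordinary calculus would miss.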

Example. Suppose [math]dY_t =(\sigma^2 Y_t^3 -aY_t)dt+\sigma Y_t^2dW_t[/math]. Apply Itô's lemma to [math]X_t = -1/Y_t[/math] to get a simpler SDE for [math]X_t[/math],

[[math]]dX_t = aX_tdt+\sigma dW_t\ .[[/math]]

Notice that [math]Y_t[/math]'s SDE doesn't satisfy the criteria of the theorem on existence and uniqueness, but through the change of variables we see that [math]Y_t[/math] is really a function of [math]X_t[/math], whose SDE has linear coefficients and is covered by the theorem.
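The computation behind this change of variables can be sketched as follows, with [math]f(y) = -1/y[/math], [math]f'(y) = 1/y^2[/math] and [math]f''(y) = -2/y^3[/math], and assuming [math]Y_t\neq 0[/math]:

[[math]]
\begin{align*}
d\left(-\frac 1{Y_t}\right) &= \frac{1}{Y_t^2}dY_t-\frac{1}{Y_t^3}\sigma^2Y_t^4dt\\
&= \left(\sigma^2Y_t-\frac{a}{Y_t}\right)dt+\sigma dW_t-\sigma^2Y_tdt\\
&= -\frac{a}{Y_t}dt+\sigma dW_t\ .
\end{align*}
[[/math]]

Since [math]-1/Y_t = X_t[/math], the drift is [math]aX_t[/math]: the cubic drift and quadratic diffusion coefficient of [math]Y_t[/math] cancel against the second-order Itô term, leaving a linear SDE.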

Multivariate Itô Lemma

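Let [math]W_t = (W_t^1,W_t^2,\dots,W_t^n)[/math] be an [math]n[/math]-dimensional Brownian motion such that [math]\frac 1t\mathbb EW_t^iW_t^j = \indicator{i=j}[/math]. Now suppose that we also have a system of SDEs, [math]X_t = (X_t^1,X_t^2,\dots,X_t^m)[/math] (with [math]m[/math] possibly not equal to [math]n[/math]) such that

[[math]]dX_t^i = a_i(X_t)dt+\sum_{j=1}^nb_{ij}(X_t)dW_t^j\qquad\hbox{for each $i\leq m$}\ ,[[/math]]

where [math]a_i:\mathbb R^m\rightarrow \mathbb R[/math] are the drift coefficients, and the diffusion coefficients [math]b_{ij}:\mathbb R^m\rightarrow \mathbb R[/math] are such that [math]b_{ij}=b_{ji}[/math] and

[[math]]\sum_{i,j=1}^m\sum_{\ell=1}^nb_{i\ell}(x)b_{j\ell}(x)v_iv_j \gt 0\qquad\forall v\in\mathbb R^m\setminus\{0\}\hbox{ and }\forall x\in\mathbb R^m\ ,[[/math]]

i.e. for each [math]x\in\mathbb R^m[/math] the covariance matrix is positive definite. For a sufficiently differentiable function of [math]X_t[/math], there is a multivariate version of the Itô lemma.

(The Multivariate Itô Lemma). Let [math]f:\mathbb R^+\times\mathbb R^m\rightarrow \mathbb R[/math] with at least one derivative in the first argument and at least two derivatives in the remaining [math]m[/math] arguments. The differential of [math]f(t,X_t)[/math] is

[[math]]
\begin{equation}
\label{eq:multiVarIto}
df(t,X_t) = \left(\frac{\partial}{\partial t}+\sum_{i=1}^ma_i(X_t)\frac{\partial}{\partial x_i}+\frac 12\sum_{i,j=1}^m\sum_{\ell=1}^nb_{i\ell}(X_t)b_{j\ell}(X_t)\frac{\partial^2}{\partial x_i\partial x_j}\right)f(t,X_t)dt+\sum_{i=1}^m\sum_{\ell=1}^nb_{i\ell}(X_t)\frac{\partial}{\partial x_i}f(t,X_t)dW_t^\ell\ .
\end{equation}
[[/math]]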

Equation \eqref{eq:multiVarIto} is essentially a 2nd-order Taylor expansion like the univariate case of equation \eqref{eq:itoLemma}. Of course, the theorem on existence and uniqueness still applies to the system of SDEs (make sure [math]a_i[/math] and [math]b_{ij}[/math] have linear growth and are Lipschitz continuous for each [math]i[/math] and [math]j[/math]), and in the multidimensional case it is also important whether or not [math]n\geq m[/math], and if so it is important that there is some constant [math]c[/math] such that [math]\inf_x\mathbf b(x) \gt c \gt 0[/math]. If there is no such constant [math]c \gt 0[/math], then we are possibly dealing with a system that is degenerate and there could be (mathematically) technical problems.

Correlated Brownian Motion. Sometimes the multivariate case is formulated with a correlation structure among the [math]W_t^i[/math]'s, in which case the Itô lemma of equation \eqref{eq:multiVarIto} will have extra correlation terms. Suppose there is a correlation matrix,

[[math]]\rho = \begin{pmatrix}1&\rho_{12}&\rho_{13}&\dots&\rho_{1n}\\ \rho_{21}&1&\rho_{23}&\dots&\rho_{2n}\\ \vdots&&\ddots&&\vdots\\ \rho_{n1}&\rho_{n2}&\rho_{n3}&\dots&1\end{pmatrix}\ ,[[/math]]

where [math]\rho_{ij}=\rho_{ji}[/math] and such that [math]\frac 1t\mathbb EW_t^iW_t^j=\rho_{ij}[/math] for all [math]i,j\leq n[/math]. Then equation \eqref{eq:multiVarIto} becomes

[[math]]
df(t,X_t) = \left(\frac{\partial}{\partial t}+\sum_{i=1}^ma_i(X_t)\frac{\partial}{\partial x_i}+\frac 12\sum_{i,j=1}^m\sum_{\ell,k=1}^n\rho_{\ell k}b_{i\ell}(X_t)b_{jk}(X_t)\frac{\partial^2}{\partial x_i\partial x_j}\right)f(t,X_t)dt+\sum_{i=1}^m\sum_{\ell=1}^nb_{i\ell}(X_t)\frac{\partial}{\partial x_i}f(t,X_t)dW_t^\ell\ .
[[/math]]

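Example. Consider the two processes

[[math]]
\begin{align*}
dX_t &= a(X_t)dt+b(X_t)dW_t^1\\
dY_t &= \alpha(Y_t)dt+\sigma(Y_t)dW_t^2
\end{align*}
[[/math]]

and with [math]\frac 1t\mathbb EW_t^1W_t^2 = \rho[/math]. Then,

[[math]]
\begin{align*}
df(t,X_t,Y_t) =& \frac{\partial}{\partial t}f(t,X_t,Y_t)dt\\
&+\underbrace{\left(\frac{b^2(X_t)}{2}\frac{\partial^2}{\partial x^2}+a(X_t)\frac{\partial}{\partial x}\right)f(t,X_t,Y_t)dt+b(X_t)\frac{\partial}{\partial x}f(t,X_t,Y_t)dW_t^1}_{\hbox{$X_t$ terms}}\\
&+\underbrace{\left(\frac{\sigma^2(Y_t)}{2}\frac{\partial^2}{\partial y^2}+\alpha(Y_t)\frac{\partial}{\partial y}\right)f(t,X_t,Y_t)dt+\sigma(Y_t)\frac{\partial}{\partial y}f(t,X_t,Y_t)dW_t^2}_{\hbox{$Y_t$ terms}}\\
&+\underbrace{\rho\sigma(Y_t)b(X_t)\frac{\partial^2}{\partial x\partial y}f(t,X_t,Y_t)dt}_{\hbox{cross-term.}}
\end{align*}
[[/math]]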
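To simulate correlated Brownian motions such as [math]W^1[/math] and [math]W^2[/math] in the example above, one common approach (an assumption here, as the text does not prescribe a method) is to multiply independent Gaussian increments by a Cholesky factor of the correlation matrix. The function name `correlated_increments` and the value [math]\rho=0.6[/math] are illustrative.

```python
import numpy as np

def correlated_increments(corr, dt, n_steps, seed=0):
    """Return an (n_steps, n) array of Brownian increments with correlation matrix corr."""
    rng = np.random.default_rng(seed)
    L = np.linalg.cholesky(np.asarray(corr, dtype=float))   # corr = L @ L.T
    z = rng.normal(size=(n_steps, L.shape[0]))              # independent standard normals
    return np.sqrt(dt) * z @ L.T                            # each row has covariance dt * corr

rho = 0.6
dW = correlated_increments([[1.0, rho], [rho, 1.0]], dt=0.01, n_steps=200000)
print("sample correlation:", np.corrcoef(dW.T)[0, 1])
W = np.cumsum(dW, axis=0)   # the two correlated paths on the grid
```

The Cholesky factorization requires the correlation matrix to be positive definite, in line with the non-degeneracy condition discussed above.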

The Feynman-Kac Formula

If one could identify the fundamental link between asset pricing and stochastic differential equations, it would be the Feynman-Kac formula. The Feynman-Kac formula says the following:

(The Feynman-Kac Formula). Let the function [math]f(x)[/math] be bounded, let [math]\psi(x)[/math] be twice differentiable with compact support[Notes 2] in [math]K\subset \mathbb R[/math], let the function [math]q(x)[/math] be bounded below for all [math]x\in\mathbb R[/math], and let [math]X_t[/math] be given by the SDE

[[math]]
\begin{equation}
\label{eq:FCsde}
dX_t = a(X_t)dt+b(X_t)dW_t\ .
\end{equation}
[[/math]]

- For [math]t\in[0,T][/math], the Feynman-Kac formula is

[[math]] \begin{equation} \label{eq:FCformula} v(t,x) = \mathbb E\left[ \int_t^Tf(X_s)e^{-\int_t^sq(X_u)du}ds+e^{-\int_t^Tq(X_s)ds}\psi(X_T)\Big|X_t=x\right] \end{equation} [[/math]]and [math]v[/math] is a solution to the following partial differential equation (PDE):[[math]] \begin{eqnarray} \label{eq:FCpde} \frac{\partial}{\partial t}v(t,x) +\left( \frac{b^2(x)}{2}\frac{\partial^2}{\partial x^2}+a(x)\frac{\partial}{\partial x}\right)v(t,x) - q(x)v(t,x)+f(x) &=&0\\ \label{eq:FCpdeTC} v(T,x)&=&\psi(x)\ . \end{eqnarray} [[/math]]

- If [math]\omega(t,x)[/math] is a bounded solution to equations \eqref{eq:FCpde} and \eqref{eq:FCpdeTC} for [math]x\in K[/math], then [math]\omega(t,x) = v(t,x)[/math].

The Feynman-Kac formula will be instrumental in pricing European derivatives in the coming sections. For now it is important to take note of how the SDE in \eqref{eq:FCsde} relates to the formula \eqref{eq:FCformula} and to the PDE of \eqref{eq:FCpde} and \eqref{eq:FCpdeTC}. It is also important to conceptualize how the Feynman-Kac formula might be extended to the multivariate case. In particular, for scalar solutions of \eqref{eq:FCpde} and \eqref{eq:FCpdeTC} that are expectations of a multivariate process [math]X_t\in\mathbb R^m[/math], the key thing to realize is that the [math]x[/math]-derivatives in \eqref{eq:FCpde} are the same as the [math]dt[/math]-terms in the Itô lemma. Hence, the multivariate Feynman-Kac formula can be deduced from the multivariate Itô lemma.
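The link between the expectation and the PDE can be illustrated with a deliberately simple Monte Carlo sketch. All choices here are assumptions made for illustration: [math]a\equiv 0[/math], [math]b\equiv 1[/math] (so [math]X[/math] is a Brownian motion started at [math]x[/math]), [math]f\equiv 0[/math], constant [math]q[/math], and [math]\psi(x)=x^2[/math]. This [math]\psi[/math] does not have compact support, so the example steps outside the hypotheses of the theorem as stated, but the expectation is still finite and the candidate [math]v(t,x) = e^{-q(T-t)}\left(x^2+T-t\right)[/math] can be checked by hand to satisfy [math]\frac{\partial}{\partial t}v+\frac 12\frac{\partial^2}{\partial x^2}v-qv=0[/math] with [math]v(T,x)=x^2[/math].

```python
import numpy as np

def feynman_kac_mc(t, x, T=1.0, q=0.1, n_paths=200000, seed=0):
    """Monte Carlo estimate of E[exp(-q (T - t)) * X_T^2 | X_t = x] when dX = dW."""
    rng = np.random.default_rng(seed)
    X_T = x + np.sqrt(T - t) * rng.normal(size=n_paths)   # X_T given X_t = x
    return float(np.exp(-q * (T - t)) * np.mean(X_T**2))

def closed_form(t, x, T=1.0, q=0.1):
    """Candidate PDE solution v(t, x) = exp(-q (T - t)) * (x^2 + T - t)."""
    return float(np.exp(-q * (T - t)) * (x**2 + (T - t)))

print(feynman_kac_mc(0.25, 0.7), closed_form(0.25, 0.7))
```

The two numbers should agree up to Monte Carlo error, which is the content of the formula: the conditional expectation along paths of the SDE solves the PDE with the matching terminal condition.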

Girsanov Theorem

Another important link between asset pricing and stochastic differential equations is the Girsanov theorem, which provides a means for defining the equivalent martingale measure.

For [math]T \lt \infty[/math], consider a Brownian motion [math](W_t)_{t\leq T}[/math], and consider another process [math]\theta_t[/math] that does not anticipate future outcomes of [math]W[/math] (i.e. given the filtration [math]\mathcal F_t^W[/math] generated by the history of [math]W[/math] up to time [math]t[/math], [math]\theta_t[/math] is adapted to [math]\mathcal F_t^W[/math]). The first feature of the Girsanov theorem is the Doléans-Dade exponential:

[[math]]Z_t \doteq \exp\left(-\frac 12\int_0^t\theta_s^2ds+\int_0^t\theta_sdW_s\right)\ .[[/math]]

A sufficient condition for the application of the Girsanov theorem is the Novikov condition,

[[math]]\mathbb E\exp\left(\frac 12\int_0^T\theta_s^2ds\right) \lt \infty\ .[[/math]]

Given the Novikov condition, the process [math]Z_t[/math] is a martingale on [math][0,T][/math] and a new probability measure is defined using the density

[[math]]\frac{d\mathbb Q}{d\mathbb P}\Big|_t = Z_t\qquad\forall t\leq T\ ;[[/math]]

in general [math]Z[/math] may not be a true martingale but only a local martingale (see Appendix and [5]). The Girsanov Theorem is stated as follows:

(Girsanov Theorem). If [math]Z_t[/math] is a true martingale on [math][0,T][/math] then the process [math]\widetilde W_t = W_t-\int_0^t\theta_sds[/math] is Brownian motion under the measure [math]\mathbb Q[/math] on [math][0,T][/math].

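Example. Consider the price process

[[math]]\frac{dS_t}{S_t} = \mu dt+\sigma dW_t\ ,[[/math]]

and let [math]r\geq 0[/math] be the risk-free rate of interest. For the exponential martingale

[[math]]Z_t = \exp\left(-\frac t2\left(\frac{r-\mu}{\sigma}\right)^2+\frac{r-\mu}{\sigma}W_t\right)\ ,[[/math]]

the process [math] W_t^Q \doteq \frac{\mu-r}{\sigma}t+W_t[/math] is [math]\mathbb Q[/math]-Brownian motion, and the price process satisfies

[[math]]\frac{dS_t}{S_t} = rdt+\sigma dW_t^Q\ .[[/math]]

Hence

[[math]]S_t = S_0\exp\left(\left(r-\frac 12\sigma^2\right)t+\sigma W_t^Q\right)[[/math]]

and [math]S_te^{-rt}[/math] is a [math]\mathbb Q[/math]-martingale.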
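The change of measure in this example can be checked by simulation under the original measure [math]\mathbb P[/math]: weighting by the density [math]Z_T[/math] turns a [math]\mathbb P[/math]-expectation into a [math]\mathbb Q[/math]-expectation, so [math]\mathbb E^{\mathbb P}[Z_T]=1[/math] and [math]\mathbb E^{\mathbb P}[Z_Te^{-rT}S_T]=S_0[/math]. The sketch below assumes constant [math]\mu[/math], [math]r[/math], [math]\sigma[/math] with illustrative values; the function name `girsanov_check` is hypothetical.

```python
import numpy as np

def girsanov_check(S0=100.0, mu=0.12, r=0.03, sigma=0.2, T=1.0, n_paths=400000, seed=0):
    """Return (E_P[Z_T], E_P[Z_T * exp(-r T) * S_T]) estimated by Monte Carlo."""
    rng = np.random.default_rng(seed)
    W_T = np.sqrt(T) * rng.normal(size=n_paths)                  # Brownian motion under P
    S_T = S0 * np.exp((mu - 0.5 * sigma**2) * T + sigma * W_T)   # price under P
    theta = (r - mu) / sigma                                     # constant Girsanov kernel
    Z_T = np.exp(-0.5 * theta**2 * T + theta * W_T)              # density dQ/dP at time T
    return float(Z_T.mean()), float(np.mean(Z_T * np.exp(-r * T) * S_T))

z_mean, discounted_price = girsanov_check()
print("E_P[Z_T] =", z_mean, " E_Q[exp(-rT) S_T] =", discounted_price)
```

The drift [math]\mu[/math] drops out of the weighted expectation, which is the numerical counterpart of [math]S_te^{-rt}[/math] being a [math]\mathbb Q[/math]-martingale.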

General references

Papanicolaou, Andrew (2015). "Introduction to Stochastic Differential Equations (SDEs) for Finance". arXiv:1504.05309 [q-fin.MF].

References

- Black, F. and Scholes, M. (1973). The pricing of options and corporate liabilities. Journal of Political Economy, 81(3):637-54.

- Merton, R. (1973). On the pricing of corporate debt: the risk structure of interest rates. Technical report.

- Björk, T. (2000). Arbitrage Theory in Continuous Time. Oxford University Press, 2nd edition.

- Øksendal, B. (2003). Stochastic differential equations: an introduction with applications. Springer.

- Harrison, M. and Pliska, S. (1981). Martingales and stochastic integrals in the theory of continuous trading. Stochastic Processes and their Applications, (3):215-260.

Notes

- For an ordinary differential equation [math]\frac{d}{dt}X_t+\kappa X_t = a_t[/math], the integrating factor is [math]e^{\kappa t}[/math] and the solution is [math]X_t = X_0e^{-\kappa t}+\int_0^ta_se^{-\kappa(t-s)}ds[/math]. An equivalent concept applies for stochastic differential equations.

- Compact support of a function means there is a compact subset [math]K[/math] such that [math]\psi(x) = 0[/math] if [math]x\notin K[/math]. For a real function of a scalar variable, this means there is a bound [math]M \lt \infty[/math] such that [math]\psi(x)=0[/math] if [math]|x| \gt M[/math].