guide:1a7f020d42: Difference between revisions

No edit summary |

mNo edit summary |

||

| Line 1: | Line 1: | ||

<div class="d-none"> | |||

<math> | |||

\( | |||

\require{mathtools} | |||

\def\truelabel{{y}} | |||

\def\clusteridx{{i}} | |||

\def\clustermean{{\boldsymbol \mu}} | |||

\def\nrcluster{{k}} | |||

\def\graph{{\mathcal{G}}} | |||

\def\emperror{{\hat{L}}} | |||

\def\error{{E}} | |||

\def\cluster{{c}} | |||

\def\dataset{{\mathcal{D}}} | |||

\def\featuredim{{n}} | |||

\def\clustercov{{\mathbf{\Sigma}}} | |||

\def\weight{{w}} | |||

\def\weights{{\boldsymbol w}} | |||

\def\nodes{{\mathcal{V}}} | |||

\def\edges{{\mathcal{E}}} | |||

\def\defeq{{\,\coloneqq \,}} | |||

\def\labelvec{{\mathbf{\truelabel}}} | |||

\def\nodeidx{{i}} | |||

\def\itercntr{{r}} | |||

\def\vx{{\mathbf{x}}} | |||

\DeclareMathOperator*{\argmax}{arg\,max} | |||

\DeclareMathOperator*{\argmin}{arg\,min} | |||

\def\truelabel{{y}} | |||

\def\featurevec{{\mathbf{x}}} | |||

\def\sampleidx{{i}} | |||

\def\samplesize{{m}} | |||

\newcommand\prob[1]{p(#1)} | |||

\def\feature{{x}} | |||

\) | |||

</math> | |||

</div> | |||

So far we focused on ML methods that use the <span acronym-label="dbscan" acronym-form="singular+short">ERM</span> principle and lean a hypothesis by minimizing the discrepancy between its predictions and the true labels on a training set. These methods are referred to as supervised methods as they require labeled <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s for which the true label values have been determined by some human (who serves as a “supervisor”). This and the following chapter discus ML methods which do not require to know the label of any <span acronym-label="datapoint" acronym-form="singular+short">data point</span>. These methods are often referred to as “unsupervised” since they do not require a “supervisor” to provide the label values for any <span acronym-label="datapoint" acronym-form="singular+short">data point</span>. | |||

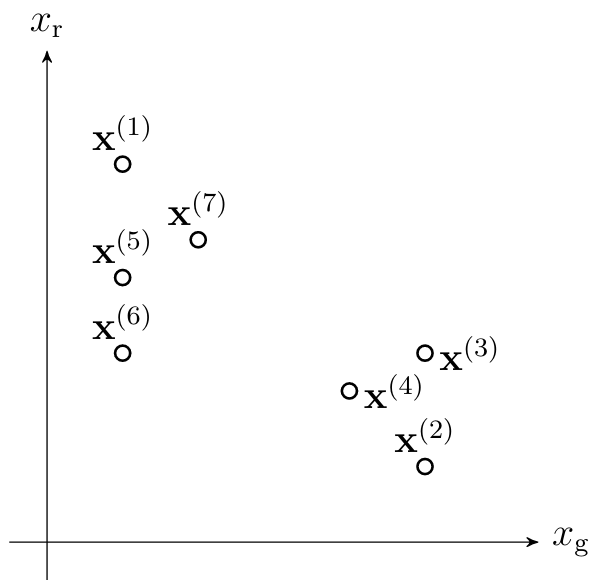

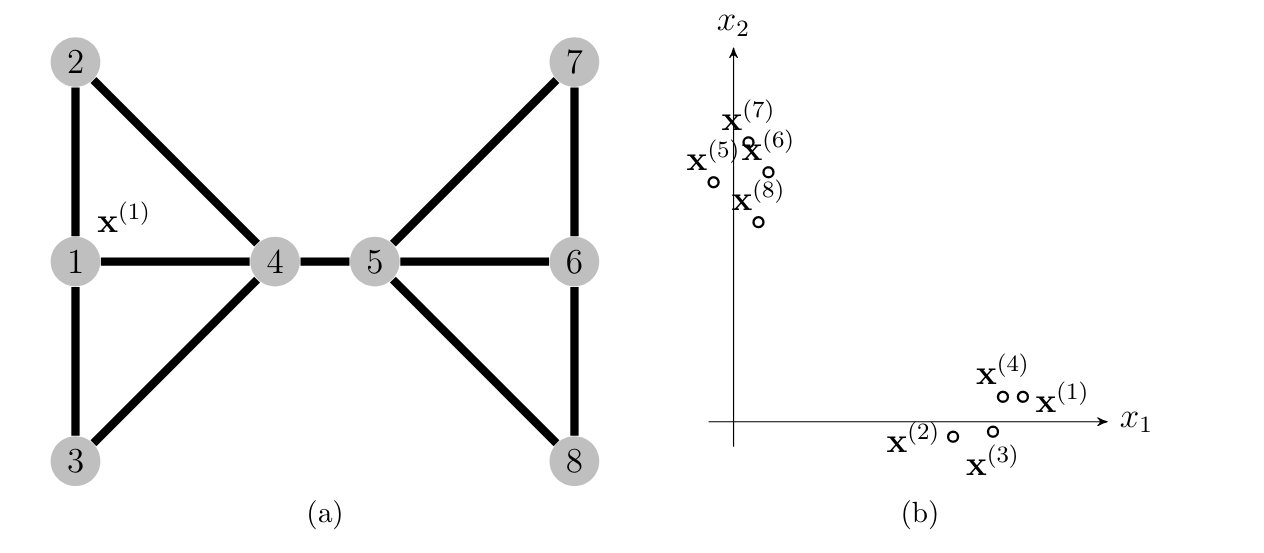

One important family of unsupervised ML methods aim at clustering a given set of <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s such as those depicted in Figure [[#fig_scatterplot_clustering|[fig_scatterplot_clustering]]]. The basic idea of clustering is to decompose a set of <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s into few subsets or <span acronym-label="cluster" acronym-form="singular+short">cluster</span>s that consist of similar <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s. For the <span acronym-label="dataset" acronym-form="singular+short">dataset</span> in Figure [[#fig_scatterplot_clustering|[fig_scatterplot_clustering]]] it seems reasonable to define two clusters, one <span acronym-label="cluster" acronym-form="singular+short">cluster</span> <math display="inline">\big\{ \featurevec^{(1)}, \featurevec^{(5)}, \featurevec^{(6)}, \featurevec^{(7)} \big\}</math> and a second <span acronym-label="cluster" acronym-form="singular+short">cluster</span> <math display="inline">\big\{ \featurevec^{(2)}, \featurevec^{(3)}, \featurevec^{(4)}, \featurevec^{(8)} \big\}</math> . | |||

<div class="d-flex justify-content-center"> | |||

<span id="fig_scatterplot_clustering"></span> | |||

[[File:fig_scatterplot_clustering.png |500px | thumb | Each circle represents an image which is characterized by its average redness <math>\feature_{\rm r}</math> and average greenness <math>\feature_{\rm g}</math>. The <math>\sampleidx</math>-th image is depicted by a circle located at the point <math>\featurevec^{(\sampleidx)} = \big( \feature_{\rm r}^{(\sampleidx)}, \feature_{\rm g}^{(\sampleidx)}\big)^{T} \in \mathbb{R}^{2}</math>. It seems that the images can be grouped into two clusters.]] | |||

</div> | |||

Formally, clustering methods learn a hypothesis that assign each <span acronym-label="datapoint" acronym-form="singular+short">data point</span> either to precisely one cluster (see Section [[#sec_hard_clustering|Hard Clustering with kmeans]]) or several clusters with different degrees of belonging (see Section [[#sec_soft_clustering|Soft Clustering with Gaussian Mixture Models]] ). Different clustering methods use different measures for the similarity between <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s. For <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s characterized by (numeric) Euclidean feature vectors, the similarity between <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s can be naturally defined in terms of the Euclidean distance between feature vectors. Section [[#sec_connect_clustering|Connectivity-based Clustering]] discusses <span acronym-label="clustering" acronym-form="singular+short">clustering</span> methods that use notions of similarity that are not based on a <span acronym-label="euclidspace" acronym-form="singular+short">Euclidean space</span>. | |||

There is a strong conceptual link between <span acronym-label="clustering" acronym-form="singular+short">clustering</span> methods and the <span acronym-label="classification" acronym-form="singular+short">classification</span> methods discussed in Chapter [[#ch_some_examples|[ch_some_examples]]]. Both type of methods learn a hypothesis that reads in the features of a <span acronym-label="datapoint" acronym-form="singular+short">data point</span> and delivers a prediction for some quantity of interest. In classification methods, this quantity of interest is some generic label of a <span acronym-label="datapoint" acronym-form="singular+short">data point</span>. For <span acronym-label="clustering" acronym-form="singular+short">clustering</span> methods, this quantity of interest for a <span acronym-label="datapoint" acronym-form="singular+short">data point</span> is the <span acronym-label="cluster" acronym-form="singular+short">cluster</span> assignment (for <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span>) of the <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span> (for <span acronym-label="softclustering" acronym-form="singular+short">soft clustering</span>). A main difference between <span acronym-label="clustering" acronym-form="singular+short">clustering</span> and <span acronym-label="classification" acronym-form="singular+short">classification</span> is that clustering methods do not require the true label (cluster assignment or <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span>) of a single <span acronym-label="datapoint" acronym-form="singular+short">data point</span>. | |||

Classification methods learn a good hypothesis via minimizing their average loss incurred on a training set of labeled <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s. In contrast, <span acronym-label="clustering" acronym-form="singular+short">clustering</span> methods do not have access to a single labeled <span acronym-label="datapoint" acronym-form="singular+short">data point</span>. To find the correct labels (cluster assignments) clustering methods rely solely on the intrinsic geometry of the <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s. We will see that <span acronym-label="clustering" acronym-form="singular+short">clustering</span> methods use this intrinsic geometry to define an <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span> incurred by a candidate hypothesis. Like <span acronym-label="classification" acronym-form="singular+short">classification</span> methods, also clustering methods use an instance of the <span acronym-label="dbscan" acronym-form="singular+short">ERM</span> principle (see Chapter [[#ch_Optimization|[ch_Optimization]]]) to find a good hypothesis (clustering). | |||

This chapter discusses two main flavours of <span acronym-label="clustering" acronym-form="singular+short">clustering</span> methods: | |||

* <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span> (see Section [[#sec_hard_clustering|Hard Clustering with kmeans]]) | |||

* and <span acronym-label="softclustering" acronym-form="singular+short">soft clustering</span> methods (see Section [[#sec_soft_clustering|Soft Clustering with Gaussian Mixture Models]] ). | |||

Hard <span acronym-label="clustering" acronym-form="singular+short">clustering</span> methods learn a hypothesis <math display="inline">h</math> that reads in the feature vector <math display="inline">\featurevec</math> of a <span acronym-label="datapoint" acronym-form="singular+short">data point</span> and delivers a predicted <span acronym-label="cluster" acronym-form="singular+short">cluster</span> assignment <math display="inline">\hat{\truelabel}=h(\featurevec) \in \{1,\ldots,\nrcluster\}</math>. Thus, assigns each <span acronym-label="datapoint" acronym-form="singular+short">data point</span> to one single <span acronym-label="cluster" acronym-form="singular+short">cluster</span>. Section [[#sec_hard_clustering|Hard Clustering with kmeans]] will discuss one of the most widely-used <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span> algorithms which is known as <span acronym-label="kmeans" acronym-form="singular+short">k-means</span>. | |||

In contrast to <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span> methods, <span acronym-label="softclustering" acronym-form="singular+short">soft clustering</span> methods assign each <span acronym-label="datapoint" acronym-form="singular+short">data point</span> to several <span acronym-label="cluster" acronym-form="singular+short">cluster</span>s with varying <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span>. These methods learn a hypothesis that delivers a vector <math display="inline">\hat{\labelvec}=\big(\hat{\truelabel}_{1},\ldots,\hat{\truelabel}_{\nrcluster}\big)^{T}</math> with entry <math display="inline">\hat{\truelabel}_{\clusteridx} \in [0,1]</math> being the predicted degree by which the <span acronym-label="datapoint" acronym-form="singular+short">data point</span> belongs to the <math display="inline">\clusteridx</math>-th cluster. Hard clustering is an extreme case of <span acronym-label="softclustering" acronym-form="singular+short">soft clustering</span> where we enforce each <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span> to take only values in <math display="inline">\{0,1\}</math>. Moreover, <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span> requires that for each <span acronym-label="datapoint" acronym-form="singular+short">data point</span> only of the corresponding <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span> (one for each cluster) is non-zero. | |||

The main focus of this chapter is on methods that require <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s being represented by numeric feature vectors (see Sections [[#sec_hard_clustering|Hard Clustering with kmeans]] and [[#sec_soft_clustering|Soft Clustering with Gaussian Mixture Models]] ). These methods define the similarity between <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s using the Euclidean distance between their feature vectors. Some applications generate <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s for which it is not obvious how to obtain numeric feature vectors such that their Euclidean distances reflect the similarity between <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s. It is then desirable to use a more flexible notion of similarity which does not require to determine (useful) numeric feature vectors of <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s. | |||

Maybe the most fundamental concept to represent similarities between <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s is a similarity graph. The nodes of the similarity graph are the individual <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s of a dataset. Similar <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s are connected by edges (links) that might be assigned some weight that quantities the amount of similarity. Section [[#sec_connect_clustering|Connectivity-based Clustering]] discusses clustering methods that use a graph to represent similarities between <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s. | |||

== <span id="sec_hard_clustering"></span>Hard Clustering with <span acronym-label="kmeans" acronym-form="singular+short">k-means</span> == | |||

Consider a dataset <math display="inline">\dataset</math> which consists of <math display="inline">\samplesize</math> <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s that are indexed by <math display="inline">\sampleidx=1,\ldots,\samplesize</math>. The <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s are characterized via their numeric feature vectors <math display="inline">\featurevec^{(\sampleidx)} \in \mathbb{R}^{\featuredim}</math>, for <math display="inline">\sampleidx=1,\ldots,\samplesize</math>. It will be convenient for the following discussion if we identify a <span acronym-label="datapoint" acronym-form="singular+short">data point</span> with its feature vector. In particular, we refer by <math display="inline">\featurevec^{(\sampleidx)}</math> to the <math display="inline">\sampleidx</math>-th <span acronym-label="datapoint" acronym-form="singular+short">data point</span>. <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span> methods decompose (or cluster) the dataset into a given number <math display="inline">\nrcluster</math> of different clusters <math display="inline">\cluster^{(1)},\ldots,\cluster^{(\nrcluster)}</math>. These methods assign each <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> to one and only one cluster <math display="inline">\cluster^{(\clusteridx)}</math> with the cluster index <math display="inline">\clusteridx \in \{1,\ldots,\nrcluster\}</math>. | |||

Let us define for each <span acronym-label="datapoint" acronym-form="singular+short">data point</span> its label <math display="inline">\truelabel^{(\sampleidx)} \in \{1,\ldots,\nrcluster\}</math> as the index of the cluster to which the <math display="inline">\sampleidx</math>th <span acronym-label="datapoint" acronym-form="singular+short">data point</span> actually belongs to. The <math display="inline">\clusteridx</math>-th cluster consists of all <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s with <math display="inline">\truelabel^{(\sampleidx)}=\clusteridx</math>, <math display="block"> \cluster^{(\clusteridx)} \defeq \big\{ \sampleidx \in \{1,\ldots,\samplesize\} : \truelabel^{(\sampleidx)} = \clusteridx \big\}.</math> We can interpret <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span> methods as ML methods that compute predictions <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math> for the (“correct”) cluster assignments <math display="inline">\truelabel^{(\sampleidx)}</math>. The predicted cluster assignments result in the predicted clusters <math display="block">\begin{equation}\label{equ_def_individual_cluster} \widehat{\cluster}^{(\clusteridx)} \defeq \big\{ \sampleidx \in \{1,\ldots,\samplesize\} : \hat{\truelabel}^{(\sampleidx)} = \clusteridx \big\}\mbox{, for } \clusteridx =1,\ldots,\nrcluster.\end{equation}</math> We now discuss a <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span> method which is known as <span acronym-label="kmeans" acronym-form="singular+short">k-means</span>. This method does not require the knowledge of the label or (true) cluster assignment <math display="inline">\truelabel^{(\sampleidx)}</math> for any <span acronym-label="datapoint" acronym-form="singular+short">data point</span> in <math display="inline">\dataset</math>. This method computes predicted cluster assignments <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math> based solely from the intrinsic geometry of the feature vectors <math display="inline">\featurevec^{(\sampleidx)} \in \mathbb{R}^{\featuredim}</math> for all <math display="inline">\sampleidx=1,\ldots,\samplesize</math>. Since it does not require any labeled <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s, <span acronym-label="kmeans" acronym-form="singular+short">k-means</span> is often referred to as being an unsupervised method. However, note that <span acronym-label="kmeans" acronym-form="singular+short">k-means</span> requires the number <math display="inline">\nrcluster</math> of clusters to be given as an input (or hyper-) parameter. | |||

The <span acronym-label="kmeans" acronym-form="singular+short">k-means</span> method represents the <math display="inline">\clusteridx</math>-th cluster <math display="inline">\widehat{\cluster}^{(\clusteridx)}</math> by a representative feature vector <math display="inline">\clustermean^{(\clusteridx)} \in \mathbb{R}^{\featuredim}</math>. It seems reasonable to assign <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s in <math display="inline">\dataset</math> to clusters <math display="inline">\widehat{\cluster}^{(\clusteridx)}</math> such that they are well concentrated around the cluster representatives <math display="inline">\clustermean^{(\clusteridx)}</math>. We make this informal requirement precise by defining the clustering error <math display="block"> | |||

\begin{equation} | |||

\label{equ_def_emp_risk_kmeans} | |||

\emperror \big( \{\clustermean^{(\clusteridx)}\}_{\clusteridx=1}^{\nrcluster},\{\hat{y}^{(\sampleidx)}\}_{\sampleidx=1}^{\samplesize} \mid \dataset \big) | |||

=(1/\samplesize) \sum_{\sampleidx=1}^{\samplesize} {\left\|\vx^{(\sampleidx)}-\clustermean^{(\hat{y}^{(\sampleidx)})}\right\|^2}. | |||

\end{equation} | |||

</math> | |||

Note that the clustering error <math display="inline">\emperror</math> \eqref{equ_def_emp_risk_kmeans} depends on both, the cluster assignments <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math>, which define the cluster [[#equ_def_individual_cluster|[equ_def_individual_cluster]]], and the cluster representatives <math display="inline">\clustermean^{(\clusteridx)}</math>, for <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>. | |||

Finding the optimal cluster means <math display="inline">\{\clustermean^{(\clusteridx)}\}_{\clusteridx=1}^{\nrcluster}</math> and cluster assignments <math display="inline">\{\hat{\truelabel}^{(\sampleidx)}\}_{\sampleidx=1}^{\samplesize}</math> that minimize the clustering error \eqref{equ_def_emp_risk_kmeans} is computationally challenging. The difficulty stems from the fact that the clustering error is a non-convex function of the cluster means and assignments. While jointly optimizing the cluster means and assignments is hard, separately optimizing either the cluster means for given assignments or vice-versa is easy. In what follows, we present simple closed-form solutions for these sub-problems. The <math display="inline">k</math>-means method simply combines these solutions in an alternating fashion. | |||

It can be shown that for given predictions (cluster assignments) <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math>, the clustering error \eqref{equ_def_emp_risk_kmeans} is minimized by setting the cluster representatives equal to the <span>'''cluster means'''</span> <math display="block">\begin{equation}\label{eq_def_mean_optimal} | |||

\clustermean^{(\clusteridx)}\defeq \big(1/|\widehat{\cluster}^{(\clusteridx)}| \big) \sum_{\hat{\truelabel}^{(\sampleidx)} = \clusteridx} \featurevec^{(\sampleidx)}.\end{equation}</math> To evaluate \eqref{eq_def_mean_optimal} we need to know the predicted cluster assignments <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math>. The crux is that the optimal predictions <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math>, in the sense of minimizing clustering error \eqref{equ_def_emp_risk_kmeans}, depend themselves on the choice for the cluster representatives <math display="inline">\clustermean^{(\clusteridx)}</math>. In particular, for given cluster representative <math display="inline">\clustermean^{(\clusteridx)}</math> with <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>, the clustering error is minimized by the cluster assignments <math display="block">\begin{equation}\label{equ_def_clustera_assgt-nearst_mean} | |||

\hat{\truelabel}^{(\sampleidx)} \in \argmin_{\clusteridx \in \{1,\ldots,\nrcluster\}} \big\| \featurevec^{(\sampleidx)} - \clustermean^{(\clusteridx)} \big\|.\end{equation}</math> Here, we denote by <math display="inline">\argmin\limits_{\clusteridx' \in \{1,\ldots,\nrcluster\}} \| \featurevec^{(\sampleidx)} - \clustermean^{(\clusteridx')} \|</math> the set of all cluster indices <math display="inline">\clusteridx \in \{1,\ldots,\nrcluster\}</math> such that <math display="inline">\| \featurevec^{(\sampleidx)} - \clustermean^{(\clusteridx)} \| = \min_{\clusteridx' \in \{1,\ldots,\nrcluster\}} \| \featurevec^{(\sampleidx)} - \clustermean^{(\clusteridx')} \|</math>. | |||

Note that \eqref{equ_def_clustera_assgt-nearst_mean} assigns the <math display="inline">\sampleidx</math>th datapoint to those cluster <math display="inline">\cluster^{(\clusteridx)}</math> whose cluster mean <math display="inline">\clustermean^{(\clusteridx)}</math> is nearest (in Euclidean distance) to <math display="inline">\featurevec^{(\sampleidx)}</math>. Thus, if we knew the optimal cluster representatives, we could predict the cluster assignments using \eqref{equ_def_clustera_assgt-nearst_mean}. However, we do not know the optimal cluster representatives unless we have found good predictions for the cluster assignments <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math> (see \eqref{eq_def_mean_optimal}). | |||

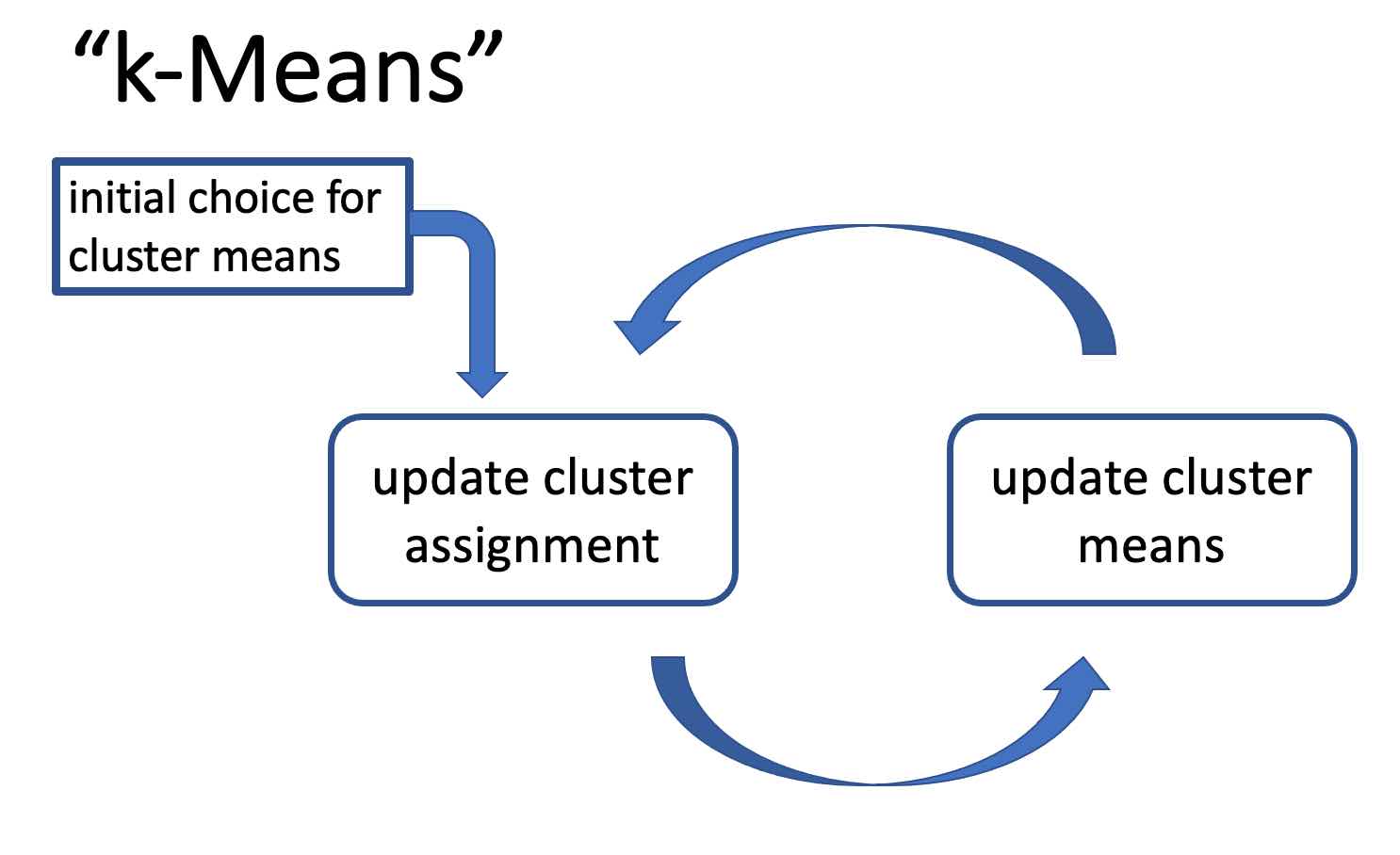

To recap: We have characterized the optimal choice \eqref{eq_def_mean_optimal} for the cluster representatives for given cluster assignments and the optimal choice \eqref{equ_def_clustera_assgt-nearst_mean} for the cluster assignments for given cluster representatives. It seems natural, starting from some initial guess for the cluster representatives, to alternate between the cluster assignment update \eqref{equ_def_clustera_assgt-nearst_mean} and the update \eqref{eq_def_mean_optimal} for the cluster means. This alternating optimization strategy is illustrated in Figure [[#fig_flow_kmeans|[fig_flow_kmeans]]] and summarized in Algorithm [[#alg:kmeans|k-means]]. Note that Algorithm [[#alg:kmeans|k-means]], which is maybe the most basic variant of <math display="inline">k</math>-means, simply alternates between the two updates \eqref{eq_def_mean_optimal} and \eqref{equ_def_clustera_assgt-nearst_mean} until some stopping criterion is satisfied. | |||

<div class="d-flex justify-content-center"> | |||

<span id="fig_flow_kmeans"></span> | |||

[[File:AlgorithmFlowKmeans.jpg |500px | thumb |The workflow of <math>k</math>-means. Starting from an initial guess or estimate | |||

for the cluster means, the cluster assignments and cluster means are updated (improved) in an alternating fashion. ]] | |||

</div> | |||

Algorithm [[#alg:kmeans|k-means]] requires the specification of the number <math display="inline">\nrcluster</math> of clusters and initial choices for the cluster means <math display="inline">\clustermean^{(\clusteridx)}</math>, for <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>. Those quantities are hyper-parameters that must be tuned to the specific geometry of the given dataset <math display="inline">\dataset</math>. This tuning can be based on probabilistic models for the dataset and its cluster structure (see Section [[#equ_prob_models_data|[equ_prob_models_data]]] and ). Alternatively, if Algorithm [[#alg:kmeans|k-means]] is used as pre-processing within an overall supervised ML method (see Chapter [[#ch_some_examples|[ch_some_examples]]]), the validation error (see Section [[#sec_modsel|[sec_modsel]]]) of the overall method might guide the choice of the number <math display="inline">\nrcluster</math> of clusters. | |||

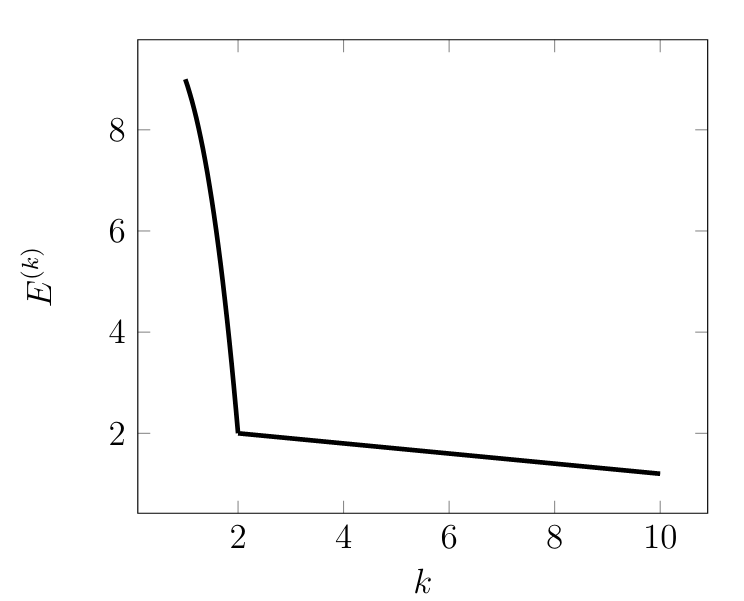

<span>'''Choosing Number of Clusters.'''</span> The choice for the number <math display="inline">\nrcluster</math> of clusters typically depends on the role of the clustering method within an overall ML application. If the clustering method serves as a pre-processing for a supervised ML problem, we could try out different values of the number <math display="inline">\nrcluster</math> and determine, for each choice <math display="inline">\nrcluster</math>, the corresponding validation error. We then pick the value of <math display="inline">\nrcluster</math> which results in the smallest validation error. If the clustering method is mainly used as a tool for data visualization, we might prefer a small number of clusters. The choice for the number <math display="inline">\nrcluster</math> of clusters can also be guided by the so-called “elbow-method”. Here, we run the <math display="inline">k</math>-means Algorithm [[#alg:kmeans|k-means]] for several different choices of <math display="inline">\nrcluster</math>. For each value of <math display="inline">\nrcluster</math>, Algorithm [[#alg:kmeans|k-means]] delivers a clustering with clustering error <math display="block">\error^{(\nrcluster)} = \emperror \big(\{\clustermean^{(\clusteridx)}\}_{\clusteridx=1}^{\nrcluster},\{\hat{\truelabel}^{(\sampleidx)}\}_{\sampleidx=1}^{\samplesize} \mid \dataset \big).</math> | |||

<proc label="K-means Algorithm" id="alg:kmeans"> | |||

'''Input''': dataset <math display="inline">\dataset=\{ \mathbf{x}^{(i)}\}_{i=1}^{n}</math>; number <math display="inline">\nrcluster</math> of clusters; initial cluster means <math display="inline">\clustermean^{(\clusteridx)}</math> for <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>. <span id="equ_step_cluster_asst_update" label="equ_step_cluster_asst_update"></span> | |||

#repeat | |||

#for each datapoint <math display="inline">\mathbf{x}^{(i)}</math>, <math display="inline">i\!=\!1,\ldots,n</math>, do <math display="block">\begin{equation}\label{equ_cluster_assign_update} \hat{y}^{(i)} \defeq \argmin\limits_{\clusteridx' \in \{1,\ldots,\nrcluster\}} \| \mathbf{x}^{(i)} - \clustermean^{(\clusteridx')} \| \quad \mbox{ (update cluster assignments)}\end{equation}</math> <span id="equ_step_cluster_update" label="equ_step_cluster_update"></span> | |||

#for each cluster <math display="inline">\clusteridx\!=\!1,\ldots,\nrcluster</math> do <math display="block">\begin{equation}\label{equ_cluster_mean_update} | |||

\clustermean^{(\clusteridx)} \defeq \frac{1}{|\{i: \hat{y}^{(i)}= \clusteridx\}|} \sum_{i: \hat{y}^{(i)} = \clusteridx} \mathbf{x}^{(i)} \quad \mbox{ (update cluster means) }\end{equation}</math> <span id="equ_def_stop_criterion_kmeans" label="equ_def_stop_criterion_kmeans"></span> | |||

#'''until''' stopping criterion is met | |||

#compute final clustering error <math display="inline">\error^{(\nrcluster)} \defeq (1/n) \sum_{i=1}^{n} {\left\|\mathbf{x}^{(i)}-\clustermean^{(\hat{y}^{(i)})}\right\|^2}</math> | |||

'''Output:''' cluster means <math display="inline">\clustermean^{(\clusteridx)}</math>, for <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>, cluster assignments <math display="inline">\hat{y}^{(i)} \in \{1,\ldots,\nrcluster\}</math>, for <math display="inline">i=1,\ldots,n</math>, final clustering error <math display="inline">\error^{(\nrcluster)}</math> | |||

</proc> | |||

We then plot the minimum empirical error <math display="inline">\error^{(\nrcluster)}</math> as a function of the number <math display="inline">\nrcluster</math> of clusters. Figure [[#fig_ellbow|[fig_ellbow]]] depicts an example for such a plot which typically starts with a steep decrease for increasing <math display="inline">\nrcluster</math> and then flattening out for larger values of <math display="inline">\nrcluster</math>. Note that for <math display="inline">\nrcluster \geq \samplesize</math> we can achieve zero clustering error since each datapoint <math display="inline">\featurevec^{(\sampleidx)}</math> can be assigned to a separate cluster <math display="inline">\cluster^{(\clusteridx)}</math> whose mean coincides with that datapoint, <math display="inline">\featurevec^{(\sampleidx)} = \clustermean^{(\clusteridx)}</math>. | |||

<div class="d-flex justify-content-center"> | |||

<span id="fig_ellbow"></span> | |||

[[File:fig_ellbow.png |500px | thumb | The clustering error <math>\error^{(\nrcluster)}</math> achieved by kmeans for increasing number <math>\nrcluster</math> of clusters. ]] | |||

</div> | |||

<span>'''Cluster-Means Initialization.'''</span> We briefly mention some popular strategies for choosing the initial cluster means in Algorithm [[#alg:kmeans|k-means]]. One option is to initialize the cluster means with realizations of <span acronym-label="iid" acronym-form="singular+short">iid</span> random vectors whose probability distribution is matched to the dataset <math display="inline">\dataset = \{ \vx^{(\sampleidx)} \}_{\sampleidx=1}^{\samplesize}</math> (see Section [[#sec_max_iikelihood|[sec_max_iikelihood]]]). For example, we could use a multivariate normal distribution <math display="inline">\mathcal{N}(\vx;\widehat{\clustermean}, \widehat{\clustercov})</math> with the sample mean <math display="inline">\widehat{\clustermean} = (1/\samplesize) \sum_{\sampleidx=1}^{\samplesize} \featurevec^{(\sampleidx)}</math> and the sample covariance <math display="inline">\widehat{\clustercov} = (1/\samplesize) \sum_{\sampleidx=1}^{\samplesize} (\featurevec^{(\sampleidx)}\!-\!\widehat{\clustermean}) (\featurevec^{(\sampleidx)}\!-\!\widehat{\clustermean})^{T}</math>. Alternatively, we could choose the initial cluster means <math display="inline">\clustermean^{(\clusteridx)}</math> by selecting <math display="inline">\nrcluster</math> different <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s <math display="inline">\featurevec^{(\sampleidx)}</math> from <math display="inline">\dataset</math>. This selection process might combine random choices with an optimization of the distances between cluster means . Finally, the cluster means might also be chosen by evenly partitioning the principal component of the dataset (see Chapter [[#ch_FeatureLearning|[ch_FeatureLearning]]]). | |||

<span>'''Interpretation as <span acronym-label="dbscan" acronym-form="singular+short">ERM</span>.'''</span> For a practical implementation of Algorithm [[#alg:kmeans|k-means]] we need to decide when to stop updating the cluster means and assignments (see \eqref{equ_cluster_assign_update} and \eqref{equ_cluster_mean_update}). To this end it is useful to interpret Algorithm [[#alg:kmeans|k-means]] as a method for iteratively minimizing the clustering error \eqref{equ_def_emp_risk_kmeans}. As can be verified easily, the updates \eqref{equ_cluster_assign_update} and \eqref{equ_cluster_mean_update} always modify (update) the cluster means or assignments in such a way that the clustering error \eqref{equ_def_emp_risk_kmeans} is never increased. Thus, each new iteration of Algorithm [[#alg:kmeans|k-means]] results in cluster means and assignments with a smaller (or the same) clustering error compared to the cluster means and assignments obtained after the previous iteration. Algorithm [[#alg:kmeans|k-means]] implements a form of <span acronym-label="dbscan" acronym-form="singular+short">ERM</span> (see Chapter [[#ch_Optimization|[ch_Optimization]]]) using the clustering error \eqref{equ_def_emp_risk_kmeans} as the <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span> incurred by the predicted cluster assignments <math display="inline">\hat{y}^{(\sampleidx)}</math>. Note that after completing a full iteration of Algorithm [[#alg:kmeans|k-means]], the cluster means <math display="inline">\big\{ \clustermean^{(\clusteridx)} \big\}_{\clusteridx=1}^{\nrcluster}</math> are fully determined by the cluster assignments <math display="inline">\big\{ \hat{y}^{(\sampleidx)} \big\}_{\sampleidx=1}^{\samplesize}</math> via \eqref{equ_cluster_mean_update}. It seems natural to terminate Algorithm [[#alg:kmeans|k-means]] if the decrease in the clustering error achieved by the most recent iteration is below a prescribed (small) threshold. | |||

<span>'''Clustering and Classification.'''</span> There is a strong conceptual link between Algorithm [[#alg:kmeans|k-means]] and classification methods (see e.g. Section [[#sec_nearest_neighbour_methods|[sec_nearest_neighbour_methods]]]). Both methods essentially learn a hypothesis <math display="inline">h(\featurevec)</math> that maps the feature vector <math display="inline">\featurevec</math> to a predicted label <math display="inline">\hat{\truelabel}=h(\featurevec)</math> from a finite set. The practical meaning of the label values is different for Algorithm [[#alg:kmeans|k-means]] and classification methods. For classification methods, the meaning of the label values is essentially defined by the training set (of labeled <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s) used for <span acronym-label="dbscan" acronym-form="singular+short">ERM</span> [[#equ_def_ERM_funs|[equ_def_ERM_funs]]]. On the other hand, clustering methods use the predicted label <math display="inline">\hat{\truelabel}=h(\featurevec)</math> as a cluster index. | |||

Another main difference between Algorithm [[#alg:kmeans|k-means]] and most classification methods is the choice for the <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span> used to evaluate the quality or usefulness of a given hypothesis <math display="inline">h(\cdot)</math>. Classification methods typically use an average loss over labeled <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s in a training set as <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span>. In contrast, Algorithm [[#alg:kmeans|k-means]] uses the clustering error \eqref{equ_def_emp_risk_kmeans} as a form of <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span>. Consider a hypothesis that resembles the cluster assignments <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math> obtained after completing an iteration in Algorithm [[#alg:kmeans|k-means]], <math display="inline">\hat{\truelabel}^{(\sampleidx)} = h\big( \featurevec^{(\sampleidx)} \big)</math>. Then we can rewrite the resulting clustering error achieved after this iteration as <math display="block">\begin{equation}\label{equ_emp_risk_clustering} \emperror\big( h | \dataset \big) = (1/\samplesize) \sum_{\sampleidx=1}^{\samplesize} \left\|\featurevec^{(\sampleidx)}- \frac{\sum_{\sampleidx' \in \dataset^{(\sampleidx)}} \featurevec^{(\sampleidx')}}{\big| \dataset^{(\sampleidx)} \big|} \right\|^2. \mbox{ with } \dataset^{(\sampleidx)} \defeq \big\{ \sampleidx' : h\big(\featurevec^{(\sampleidx)} \big) = h\big(\featurevec^{(\sampleidx')} \big) \big\}.\end{equation}</math> Note that the <math display="inline">\sampleidx</math>-th summand in \eqref{equ_emp_risk_clustering} depends on the entire <span acronym-label="dataset" acronym-form="singular+short">dataset</span> <math display="inline">\dataset</math> and not only on (the features of) the <math display="inline">\sampleidx</math>-th <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math>. | |||

<span>'''Some Practicalities.'''</span> For a practical implementation of Algorithm [[#alg:kmeans|k-means]] we need to fix three issues. | |||

{| class="table" | |||

|- | |||

! Issue !! Fix | |||

|- | |||

| Tie-breaking || We need to specify what to do if several different cluster indices <math display="inline">\clusteridx\!\in\!\{1,\ldots,\nrcluster\}</math> achieve the minimum value in the cluster assignment update \eqref{equ_cluster_assign_update} during step [[#equ_step_cluster_asst_update|[equ_step_cluster_asst_update]]]. | |||

|- | |||

| Empty-cluster || The cluster assignment update \eqref{equ_cluster_assign_update} in step [[#equ_step_cluster_update|[equ_step_cluster_update]]] of Algorithm [[#alg:kmeans|k-means]] might result in a cluster <math display="inline">\clusteridx</math> with no datapoints associated with it, <math display="inline">|\{ i: \hat{y}^{(i)} = \clusteridx \}|=0</math>. For such a cluster <math display="inline">\clusteridx</math>, the update \eqref{equ_cluster_mean_update} is not well-defined. | |||

|- | |||

| Stopping-criterion || We need to specify a criterion used in step [[#equ_def_stop_criterion_kmeans|[equ_def_stop_criterion_kmeans]]] of Algorithm [[#alg:kmeans|k-means]] to decide when to stop iterating. | |||

|} | |||

Algorithm [[#alg:kmeansimple|k-means II]] is obtained from Algorithm [[#alg:kmeans|k-means]] by fixing those three issues . Step [[#equ_step_cluster_assg_kmeans2|[equ_step_cluster_assg_kmeans2]]] of Algorithm [[#alg:kmeansimple|k-means II]] solves the first issue mentioned above (“tie breaking”), arising when there are several cluster clusters whose means have minimum distance to a <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math>, by assigning <math display="inline">\vx^{(\sampleidx)}</math> to the cluster with smallest cluster index (see \eqref{equ_cluster_assign_update2}). | |||

<proc label="K-means II" id="alg:kmeansimple"> | |||

'''Input:''' dataset <math display="inline">\dataset=\{ \featurevec^{(\sampleidx)}\}_{\sampleidx=1}^{\samplesize}</math>; number <math display="inline">\nrcluster</math> of <span acronym-label="cluster" acronym-form="singular+short">cluster</span>s; tolerance <math display="inline">\varepsilon \geq 0</math>; initial <span acronym-label="cluster" acronym-form="singular+short">cluster</span> means <math display="inline">\big\{ \clustermean^{(\clusteridx)} \big\}_{\clusteridx=1}^{\nrcluster}</math> | |||

#'''Initialize.''' set iteration counter <math display="inline">\itercntr \defeq 0</math>; <math display="inline">\error_{0}\defeq 0</math> <span id="equ_step_cluster_assg_kmeans2" label="equ_step_cluster_assg_kmeans2"></span> | |||

#repeat | |||

#for all datapoints <math display="inline">\sampleidx\!=\!1,\ldots,\samplesize</math>, <math display="block">\begin{equation}\label{equ_cluster_assign_update2} \hat{\truelabel}^{(\sampleidx)} \defeq \min \{ \argmin\limits_{\clusteridx' \in \{1,\ldots,\nrcluster\}} \| \featurevec^{(\sampleidx)} - \clustermean^{(\clusteridx')} \| \} \quad \mbox{ (update cluster assignments)}\end{equation}</math> <span id="equ_def_cluster_indicators" label="equ_def_cluster_indicators"></span> | |||

#for all clusters <math display="inline">\clusteridx\!=\!1,\ldots,\nrcluster</math>, update the activity indicator <math display="block">b^{(\clusteridx)} \defeq \begin{cases} 1 & \mbox{ if } |\{\sampleidx: \hat{y}^{(\sampleidx)}= \clusteridx\}| > 0 \\ 0 & \mbox{ else.} \end{cases}</math> for all <math display="inline">\clusteridx\!=\!1,\ldots,\nrcluster</math> with <math display="inline">b^{(\clusteridx)}=1</math>, <math display="block">\begin{equation}\label{equ_cluster_mean_update2} | |||

\clustermean^{(\clusteridx)} \defeq \frac{1}{|\{ \sampleidx: \hat{\truelabel}^{(\sampleidx)}= \clusteridx\}|} \sum_{\{ \sampleidx: \hat{\truelabel}^{(\sampleidx)} = \clusteridx\}} \featurevec^{(\sampleidx)} \quad \mbox{ | |||

(update cluster means) } \end{equation}</math> | |||

#<math display="inline">\itercntr \defeq \itercntr+1</math> (increment iteration counter) | |||

#<span id="equ_def_clustering_error_kmeans2" label="equ_def_clustering_error_kmeans2"></span> <math display="inline">\error_{\itercntr} \defeq \emperror \big( \{\clustermean^{(\clusteridx)}\}_{\clusteridx=1}^{\nrcluster},\{\hat{\truelabel}^{(\sampleidx)}\}_{\sampleidx=1}^{\samplesize} \mid \dataset \big)</math> (evaluate clustering error \eqref{equ_def_emp_risk_kmeans}) | |||

#<span id="step_stop_criterion_kmeans2" label="step_stop_criterion_kmeans2"></span> <math display="inline">\itercntr > 1</math> and <math display="inline">\error_{\itercntr\!-\!1} - \error_{\itercntr} \leq \varepsilon</math> (check for sufficient decrease in clustering error) | |||

#<span id="equ_def_step_final_clustering_kmeans2" label="equ_def_step_final_clustering_kmeans2"></span><math display="inline">\error^{(\nrcluster)} \defeq (1/\samplesize) \sum_{\sampleidx=1}^{\samplesize} {\left\|\featurevec^{(\sampleidx)}-\clustermean^{(\hat{\truelabel}^{(\sampleidx)})}\right\|^2}</math> (compute final clustering error) | |||

'''Output:''' cluster assignments <math display="inline">\hat{\truelabel}^{(\sampleidx)}\!\in\!\{1,\ldots,\nrcluster\}</math>, cluster means <math display="inline">\clustermean^{(\clusteridx)}</math>, clustering error <math display="inline">\error^{(\nrcluster)}</math>. | |||

</proc> | |||

Step [[#equ_def_cluster_indicators|[equ_def_cluster_indicators]]] of Algorithm [[#alg:kmeansimple|k-means II]] resolves the “empty cluster” issue by computing the variables <math display="inline">b^{(\clusteridx)} \in \{0,1\}</math> for <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>. The variable <math display="inline">b^{(\clusteridx)}</math> indicates if the cluster with index <math display="inline">\clusteridx</math> is active (<math display="inline">b^{(\clusteridx)}= 1</math>) or the cluster <math display="inline">\clusteridx</math> is inactive (<math display="inline">b^{(\clusteridx)}=0</math>). The cluster <math display="inline">\clusteridx</math> is defined to be inactive if there are no <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s assigned to it during the preceding cluster assignment step \eqref{equ_cluster_assign_update2}. The cluster activity indicators <math display="inline">b^{(\clusteridx)}</math> allows to restrict the cluster mean updates \eqref{equ_cluster_mean_update2} only to the clusters <math display="inline">\clusteridx</math> with at least one <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math>. To obtain a stopping criterion, step [[#equ_def_clustering_error_kmeans2|[equ_def_clustering_error_kmeans2]]] Algorithm [[#alg:kmeansimple|k-means II]] monitors the clustering error <math display="inline">\error_{\itercntr}</math> incurred by the cluster means and assignments obtained after <math display="inline">\itercntr</math> iterations. Algorithm [[#alg:kmeansimple|k-means II]] continues updating cluster assignments \eqref{equ_cluster_assign_update2} and cluster means \eqref{equ_cluster_mean_update2} as long as the decrease is above a given threshold <math display="inline">\varepsilon \geq0</math>. | |||

For Algorithm [[#alg:kmeansimple|k-means II]] to be useful we must ensure that the stopping criterion is met within a finite number of iterations. In other words, we must ensure that the clustering error decrease can be made arbitrarily small within a sufficiently large (but finite) number of iterations. To this end, it is useful to represent Algorithm [[#alg:kmeansimple|k-means II]] as a fixed-point iteration <math display="block">\begin{equation}\label{equ_fixed_point_clustering} \{ \hat{\truelabel}^{(\sampleidx)} \}_{\sampleidx=1}^{\samplesize} \mapsto \mathcal{P} \{ \hat{\truelabel}^{(\sampleidx)} \}_{\samplesize=1}^{\samplesize}.\end{equation}</math> The operator <math display="inline">\mathcal{P}</math>, which depends on the dataset <math display="inline">\dataset</math>, reads in a list of cluster assignments and delivers an improved list of cluster assignments aiming at reducing the associated clustering error \eqref{equ_def_emp_risk_kmeans}. Each iteration of Algorithm [[#alg:kmeansimple|k-means II]] updates the cluster assignments <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math> by applying the operator <math display="inline">\mathcal{P}</math>. Representing Algorithm [[#alg:kmeansimple|k-means II]] as a fixed-point iteration \eqref{equ_fixed_point_clustering} allows for an elegant proof of the convergence of Algorithm [[#alg:kmeansimple|k-means II]] within a finite number of iterations (even for <math display="inline">\varepsilon = 0</math>) . | |||

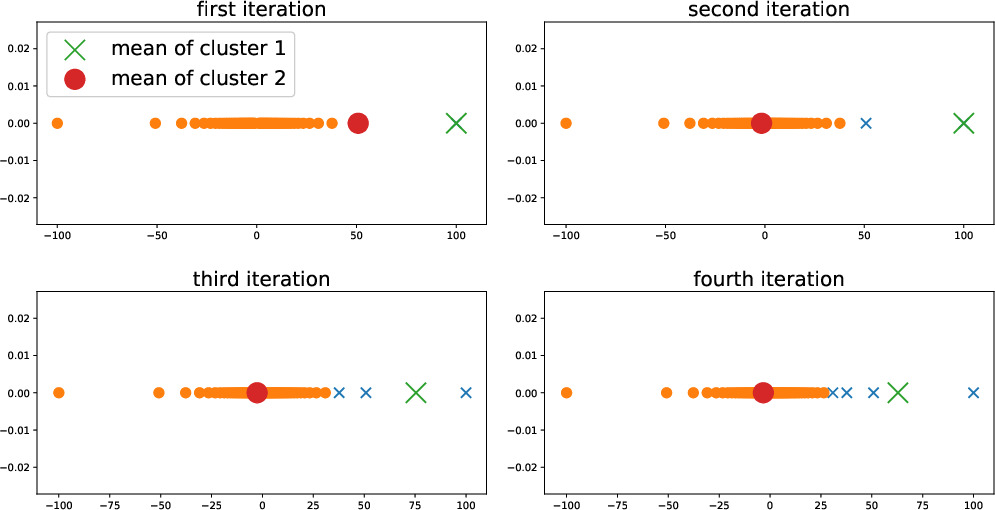

Figure [[#fig:first_iter_kmeans|1.1]] depicts the evolution of the cluster assignments and cluster means during the iterations Algorithm [[#alg:kmeansimple|k-means II]]. Each subplot corresponds to one iteration of Algorithm [[#alg:kmeansimple|k-means II]] and depicts the cluster means before that iteration and the clustering assignments (via the marker symbols) after the corresponding iteration. In particular, the upper left subplot depicts the cluster means before the first iteration (which are the initial cluster means) and the cluster assignments obtained after the first iteration of Algorithm [[#alg:kmeansimple|k-means II]]. | |||

<div class="d-flex justify-content-center"> | |||

<span id="fig:first_iter_kmeans"></span> | |||

[[File: EvolKmeans.jpg | 600px | thumb | The evolution of cluster means \eqref{equ_cluster_mean_update} and cluster assignments \eqref{equ_cluster_assign_update} (depicted as large dot and large cross) during the first four iterations of <span acronym-label="kmeans" acronym-form="singular+short">k-means</span> Algorithm [[#alg:kmeansimple|k-means II]].]] | |||

</div> | |||

Consider running Algorithm [[#alg:kmeansimple|k-means II]] with tolerance <math display="inline">\varepsilon=0</math> (see step [[#step_stop_criterion_kmeans2|[step_stop_criterion_kmeans2]]]) such that the iterations are continued until there is no decrease in the clustering error <math display="inline">\error^{(\itercntr)}</math> (see step [[#equ_def_clustering_error_kmeans2|[equ_def_clustering_error_kmeans2]]] of Algorithm [[#alg:kmeansimple|k-means II]]). As discussed above, Algorithm [[#alg:kmeansimple|k-means II]] will terminate after a finite number of iterations. Moreover, for <math display="inline">\varepsilon=0</math>, the delivered cluster assignments <math display="inline">\big\{ \hat{\truelabel}^{(\sampleidx)} \big\}_{\sampleidx=1}^{\samplesize}</math> are fully determined by the delivered clustered means <math display="inline">\big\{ \clustermean^{(\clusteridx)} \big\}_{\clusteridx=1}^{\nrcluster}</math>, <math display="block">\begin{equation}\label{equ_condition_clusterassmean_kmeans2} \hat{\truelabel}^{(\sampleidx)} = \min \{ \argmin\limits_{\clusteridx' \in \{1,\ldots,\nrcluster\}} \| \featurevec^{(\sampleidx)} - \clustermean^{(\clusteridx')} \| \}.\end{equation}</math> Indeed, if \eqref{equ_condition_clusterassmean_kmeans2} does not hold one can show the final iteration <math display="inline">r</math> would still decrease the clustering error and the stopping criterion in step [[#step_stop_criterion_kmeans2|[step_stop_criterion_kmeans2]]] would not be met. | |||

If cluster assignments and cluster means satisfy the condition \eqref{equ_condition_clusterassmean_kmeans2}, we can rewrite the clustering error \eqref{equ_def_emp_risk_kmeans} as a function of the cluster means solely, <math display="block">\begin{equation}\label{equ_def_clustering_error_means} | |||

\emperror\big( \big\{ \clustermean^{(\clusteridx)} \big\}_{\clusteridx=1}^{\nrcluster} | \dataset \big) \defeq (1/\samplesize) \sum_{\sampleidx=1}^{\samplesize} | |||

\min\limits_{\clusteridx' \in \{1,\ldots,\nrcluster\}} \| \featurevec^{(\sampleidx)} - \clustermean^{(\clusteridx')} \|^{2} .\end{equation}</math> Even for cluster assignments and cluster means that do not satisfy \eqref{equ_condition_clusterassmean_kmeans2}, we can still use \eqref{equ_def_clustering_error_means} to lower bound the clustering error \eqref{equ_def_emp_risk_kmeans}, <math display="block">\emperror\big( \big\{ \clustermean^{(\clusteridx)} \big\}_{\clusteridx=1}^{\nrcluster} | \dataset \big)\leq \emperror \big( \{\clustermean^{(\clusteridx)}\}_{\clusteridx=1}^{\nrcluster},\{\hat{\truelabel}^{(\sampleidx)}\}_{\sampleidx=1}^{\samplesize} \mid \dataset \big)</math>. | |||

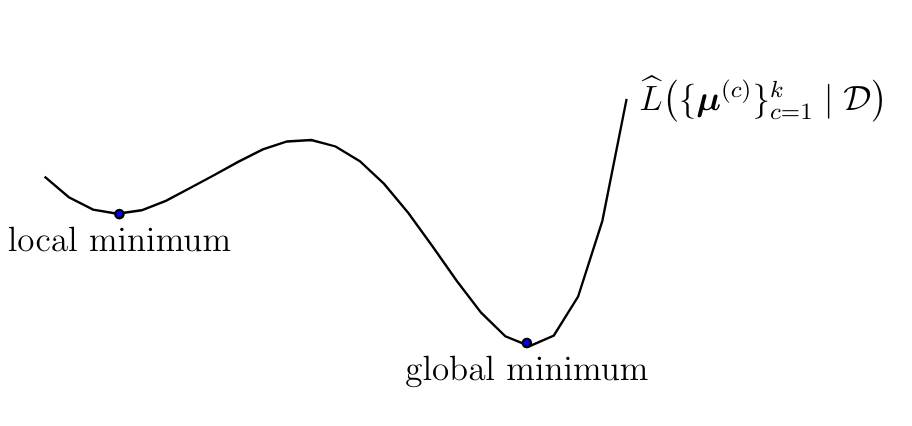

Algorithm [[#alg:kmeansimple|k-means II]] iteratively improves the cluster means in order to minimize \eqref{equ_def_clustering_error_means}. Ideally, we would like Algorithm [[#alg:kmeansimple|k-means II]] to deliver cluster means that achieve the global minimum of \eqref{equ_def_clustering_error_means} (see Figure [[#fig_emp_risk_k_means|[fig_emp_risk_k_means]]]). However, for some combination of dataset <math display="inline">\dataset</math> and initial cluster means, Algorithm [[#alg:kmeansimple|k-means II]] delivers cluster means that form only a local optimum of <math display="inline">\emperror\big( \big\{ \clustermean^{(\clusteridx)} \big\}_{\clusteridx=1}^{\nrcluster} | \dataset \big)</math> which is strictly worse (larger) than its global optimum (see Figure [[#fig_emp_risk_k_means|[fig_emp_risk_k_means]]]). | |||

The tendency of Algorithm [[#alg:kmeansimple|k-means II]] to get trapped around a local minimum of \eqref{equ_def_clustering_error_means} depends on the initial choice for cluster means. It is therefore useful to repeat Algorithm [[#alg:kmeansimple|k-means II]] several times, with each repetition using a different initial choice for the cluster means. We then pick the cluster assignments <math display="inline">\{ \hat{\truelabel}^{(\sampleidx)} \}_{\sampleidx=1}^{\samplesize}</math> obtained for the repetition that resulted in the smallest clustering error <math display="inline">\error^{(\nrcluster)}</math> (see step [[#equ_def_step_final_clustering_kmeans2|[equ_def_step_final_clustering_kmeans2]]]). | |||

<div class="d-flex justify-content-center"> | |||

<span id = "fig_emp_risk_k_means"></span> | |||

[[File:fig_emp_risk_k_means.png | 500px | thumb | The clustering error \eqref{equ_def_clustering_error_means} is a non-convex function of the cluster means <math>\{\clustermean^{(\clusteridx)}\}_{\clusteridx=1}^{\nrcluster}</math>. Algorithm [[#alg:kmeansimple | k-means II]] iteratively updates cluster means to minimize the clustering error but might get trapped around one of its local minimum.]] | |||

</div> | |||

== <span id="sec_soft_clustering"></span> Soft Clustering with Gaussian Mixture Models == | |||

Consider a dataset <math display="inline">\dataset=\{\featurevec^{(1)},\ldots,\featurevec^{(\samplesize)}\}</math> that we wish to group into a given number of <math display="inline">\nrcluster</math> different clusters. The <span acronym-label="hardclustering" acronym-form="singular+short">hard clustering</span> methods discussed in Section [[#sec_hard_clustering|Hard Clustering with kmeans]] deliver cluster assignments <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math>, for <math display="inline">\sampleidx=1,\ldots,\samplesize</math>. The cluster assignment <math display="inline">\hat{\truelabel}^{(\sampleidx)}</math> is the index of the cluster to which the <math display="inline">\sampleidx</math>th <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> is assigned to. These cluster assignments <math display="inline">\hat{\truelabel}</math> provide rather coarse-grained information. Two <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s <math display="inline">\featurevec^{(\sampleidx)}, \featurevec^{(\sampleidx')}</math> might be assigned to the same cluster <math display="inline">\clusteridx</math> although their distances to the cluster mean <math display="inline">\clustermean^{(\clusteridx)}</math> might differ significantly. Intuitively, these two <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s have a different <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span> to the cluster <math display="inline">\clusteridx</math>. | |||

For some clustering applications it is desirable to quantify the degree by which a <span acronym-label="datapoint" acronym-form="singular+short">data point</span> belongs to a cluster. Soft clustering methods use a continues range, such as the closed interval <math display="inline">[0,1]</math>, of possible values for the <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span>. In contrast, hard clustering methods use only two possible values for the <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span> to a specific cluster, either “full belonging” or no “belonging at all”. While hard clustering methods assign a given <span acronym-label="datapoint" acronym-form="singular+short">data point</span> to precisely one cluster, soft clustering methods typically assign a <span acronym-label="datapoint" acronym-form="singular+short">data point</span> to several different clusters with non-zero <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span>. | |||

This chapter discusses soft clustering methods that compute, for each <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> in the dataset <math display="inline">\dataset</math>, a vector <math display="inline">\widehat{\labelvec}^{(\sampleidx)}=\big(\hat{\truelabel}_{1}^{(\sampleidx)},\ldots,\hat{\truelabel}_{\nrcluster}^{(\sampleidx)}\big)^{T}</math>. We can interpret the entry <math display="inline">\hat{\truelabel}_{\clusteridx}^{(\sampleidx)} \in [0,1]</math> as the degree by which the <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> belongs to the cluster <math display="inline">\cluster^{(\clusteridx)}</math>. For <math display="inline">\hat{\truelabel}_{\clusteridx}^{(\sampleidx)} \approx 1</math>, we are quite confident in the <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> belonging to cluster <math display="inline">\cluster^{(\clusteridx)}</math>. In contrast, for <math display="inline">\hat{\truelabel}_{\clusteridx}^{(\sampleidx)} \approx 0</math>, we are quite confident that the <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> is outside the cluster <math display="inline">\cluster^{(\clusteridx)}</math>. | |||

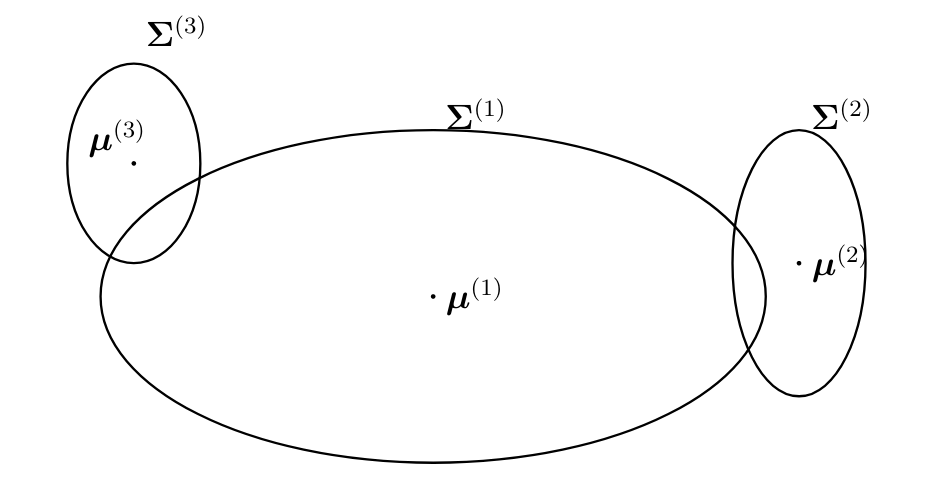

A widely used soft clustering method uses a probabilistic model for the <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s <math display="inline">\dataset = \{ \featurevec^{(\sampleidx)} \}_{\sampleidx=1}^{\samplesize}</math>. Within this model, each cluster <math display="inline">\cluster^{(\clusteridx)}</math>, for <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>, is represented by a multivariate normal distribution <math display="block">\begin{equation}\label{equ_def_mvn_distribution} \mathcal{N} \big(\featurevec ; \clustermean^{(\clusteridx)}, \clustercov^{(\clusteridx)} \big) \!=\! \frac{1}{\sqrt{{\rm det } \{ 2 \pi \clustercov \} }} \exp\big( - (1/2) \big(\featurevec\!-\!\clustermean^{(\clusteridx)} \big)^{T} \big( \clustercov^{(\clusteridx)} \big)^{-1} \big(\featurevec\!-\!\clustermean^{(\clusteridx)}\big) \big).\end{equation}</math> The probability distribution \eqref{equ_def_mvn_distribution} is parametrized by a cluster-specific mean vector <math display="inline">\clustermean^{(\clusteridx)}</math> and an (invertible) cluster-specific covariance matrix <math display="inline">\clustercov^{(\clusteridx)}</math>.<ref>Note that the expression \eqref{equ_def_mvn_distribution} is only valid for an invertible (non-singular) covariance matrix <math display="inline">\clustercov</math>.</ref> Let us interpret a specific <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> as a realization drawn from the probability distribution \eqref{equ_def_mvn_distribution} of a specific cluster <math display="inline">\clusteridx^{(\sampleidx)}</math>, <math display="block">\begin{equation}\label{equ_cond_dist_GMM} \featurevec^{(\sampleidx)} \sim \mathcal{N} \big(\featurevec ; \clustermean^{(\clusteridx)}, \clustercov^{(\clusteridx)} \big) \mbox{ with cluster index } \clusteridx=\clusteridx^{(\sampleidx)}.\end{equation}</math> We can think of <math display="inline">\clusteridx^{(\sampleidx)}</math> as the true index of the cluster to which the <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> belongs to. The variable <math display="inline">\clusteridx^{(\sampleidx)}</math> selects the cluster distributions \eqref{equ_def_mvn_distribution} from which the feature vector <math display="inline">\featurevec^{(\sampleidx)}</math> has been generated (drawn). We will therefore refer to the variable <math display="inline">\clusteridx^{(\sampleidx)}</math> as the (true) cluster assignment for the <math display="inline">\sampleidx</math>th <span acronym-label="datapoint" acronym-form="singular+short">data point</span>. Similar to the feature vectors <math display="inline">\featurevec^{(\sampleidx)}</math> we also interpret the cluster assignments <math display="inline">\clusteridx^{(\sampleidx)}</math>, for <math display="inline">\sampleidx=1,\ldots,\samplesize</math> as realizations of <span acronym-label="iid" acronym-form="singular+short">iid</span> <span acronym-label="rv" acronym-form="singular+short">rv</span>s. | |||

In contrast to the feature vectors <math display="inline">\featurevec^{(\sampleidx)}</math>, we do not observe (know) the true cluster indices <math display="inline">\clusteridx^{(\sampleidx)}</math>. After all, the goal of soft clustering is to estimate the cluster indices <math display="inline">\clusteridx^{(\sampleidx)}</math>. We obtain a <span acronym-label="softclustering" acronym-form="singular+short">soft clustering</span> method by estimating the cluster indices <math display="inline">\clusteridx^{(\sampleidx)}</math> based solely on the <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s in <math display="inline">\dataset</math>. To compute these estimates we assume that the (true) cluster indices <math display="inline">\clusteridx^{(\sampleidx)}</math> are realizations of <span acronym-label="iid" acronym-form="singular+short">iid</span> <span acronym-label="rv" acronym-form="singular+short">rv</span>s with the common probability distribution (or probability mass function) <math display="block">\begin{equation}\label{equ_def_cluster_prob} p_{\clusteridx} \defeq \prob{\clusteridx^{(\sampleidx)}=\clusteridx} \mbox{ for } \clusteridx=1,\ldots,\nrcluster.\end{equation}</math> The (prior) probabilities <math display="inline">p_{\clusteridx}</math>, for <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>, are either assumed known or estimated from data . The choice for the probabilities <math display="inline">p_{\clusteridx}</math> could reflect some prior knowledge about different sizes of the clusters. For example, if cluster <math display="inline">\cluster^{(1)}</math> is known to be larger than cluster <math display="inline">\cluster^{(2)}</math>, we might choose the prior probabilities such that <math display="inline">p_{1} > p_{2}</math>. | |||

The probabilistic model given by \eqref{equ_cond_dist_GMM}, \eqref{equ_def_cluster_prob} is referred to as a <span acronym-label="gmm" acronym-form="singular+short">GMM</span>. This name is quite natural as the common marginal distribution for the feature vectors <math display="inline">\featurevec^{(\sampleidx)}</math>, for <math display="inline">\sampleidx=1,\ldots,\samplesize</math>, is a (additive) mixture of multivariate normal (Gaussian) distributions, <math display="block">\begin{equation}\label{equ_def_GMM} \prob{\featurevec^{(\sampleidx)}} = \sum_{\clusteridx=1}^{\nrcluster} \underbrace{\prob{\clusteridx^{(\sampleidx)}=\clusteridx}}_{p_{\clusteridx}} \underbrace{\prob{\featurevec^{(\sampleidx)} | \clusteridx^{(\sampleidx)}=\clusteridx}}_{\mathcal{N}(\featurevec^{(\sampleidx)};\clustermean^{(\clusteridx)}, \clustercov^{(\clusteridx)})}.\end{equation}</math> As already mentioned, the cluster assignments <math display="inline">\clusteridx^{(\sampleidx)}</math> are hidden (unobserved) <span acronym-label="rv" acronym-form="singular+short">rv</span>s. We thus have to infer or estimate these variables from the observed <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s <math display="inline">\featurevec^{(\sampleidx)}</math> which realizations or <span acronym-label="iid" acronym-form="singular+short">iid</span> <span acronym-label="rv" acronym-form="singular+short">rv</span>s with the common distribution \eqref{equ_def_GMM}. | |||

The <span acronym-label="gmm" acronym-form="singular+short">GMM</span> (see \eqref{equ_cond_dist_GMM} and \eqref{equ_def_cluster_prob}) lends naturally to a rigorous definition for the degree <math display="inline">\truelabel^{(\sampleidx)}_{\clusteridx}</math> by which <span acronym-label="datapoint" acronym-form="singular+short">data point</span> <math display="inline">\featurevec^{(\sampleidx)}</math> belongs to cluster <math display="inline">\clusteridx</math>.<ref>Remember that the <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span> <math display="inline">\truelabel^{(\sampleidx)}_{\clusteridx}</math> is considered as the (unknown) label value of a <span acronym-label="datapoint" acronym-form="singular+short">data point</span>. The choice or definition for the labels of <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s is a design choice. In particular, we can define the labels of <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s using a hypothetical probabilistic model such as the <span acronym-label="gmm" acronym-form="singular+short">GMM</span>.</ref> Let us define the label value <math display="inline">\truelabel^{(\sampleidx)}_{\clusteridx}</math> as the “a-posteriori” probability of the cluster assignment <math display="inline">\clusteridx^{(\sampleidx)}</math> being equal to <math display="inline">\clusteridx \in \{1,\ldots,\nrcluster\}</math>: <math display="block">\begin{equation}\label{equ_def_deg_belonging_prob}\truelabel^{(\sampleidx)}_{\clusteridx} \defeq \prob{ \clusteridx^{(\sampleidx)} = \clusteridx | \dataset}.\end{equation}</math> By their very definition \eqref{equ_def_deg_belonging_prob}, the degrees of belonging <math display="inline">y^{(\sampleidx)}_{\clusteridx}</math> always sum to one, <math display="block">\begin{equation}\label{equ_dob_sum_to_one} \sum_{\clusteridx=1}^{\nrcluster} \truelabel^{(\sampleidx)}_{\clusteridx}=1 \mbox{ for each } \sampleidx=1,\ldots,\samplesize.\end{equation}</math> We emphasize that we use the conditional cluster probability \eqref{equ_def_deg_belonging_prob}, conditioned on the dataset <math display="inline">\dataset</math>, for defining the <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span> <math display="inline">\truelabel^{(\sampleidx)}_{\clusteridx}</math>. This is reasonable since the <span acronym-label="dob" acronym-form="singular+short">degree of belonging</span> <math display="inline">\truelabel^{(\sampleidx)}_{\clusteridx}</math> depends on the overall (cluster) geometry of the <span acronym-label="dataset" acronym-form="singular+short">dataset</span> <math display="inline">\dataset</math>. | |||

The definition \eqref{equ_def_deg_belonging_prob} for the label values (<span acronym-label="dob" acronym-form="singular+short">degree of belonging</span>s) <math display="inline">\truelabel^{(\sampleidx)}_{\clusteridx}</math> involves the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameters <math display="inline">\{\clustermean^{(\clusteridx)},\clustercov^{(\clusteridx)},p_{\clusteridx}\}_{\clusteridx=1}^{\nrcluster}</math> (see \eqref{equ_def_GMM}). Since we do not know these parameters beforehand we cannot evaluate the conditional probability in \eqref{equ_def_deg_belonging_prob}. A principled approach to solve this problem is to evaluate \eqref{equ_def_deg_belonging_prob} with the true <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameters replaced by some estimates <math display="inline">\{\widehat{\clustermean}^{(\clusteridx)},\widehat{\clustercov}^{(\clusteridx)},\hat{p}_{\clusteridx}\}_{\clusteridx=1}^{\nrcluster}</math>. Plugging in the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimates into \eqref{equ_def_deg_belonging_prob} provides us with predictions <math display="inline">\hat{\truelabel}^{(\sampleidx)}_{\clusteridx}</math> for the degrees of belonging. However, to compute the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimates we would have already needed the degrees of belonging <math display="inline">\truelabel^{(\sampleidx)}_{\clusteridx}</math>. This situation is similar to hard clustering where ultime goals is to jointly optimize cluster means and assignments (see Section [[#sec_hard_clustering|Hard Clustering with kmeans]]). | |||

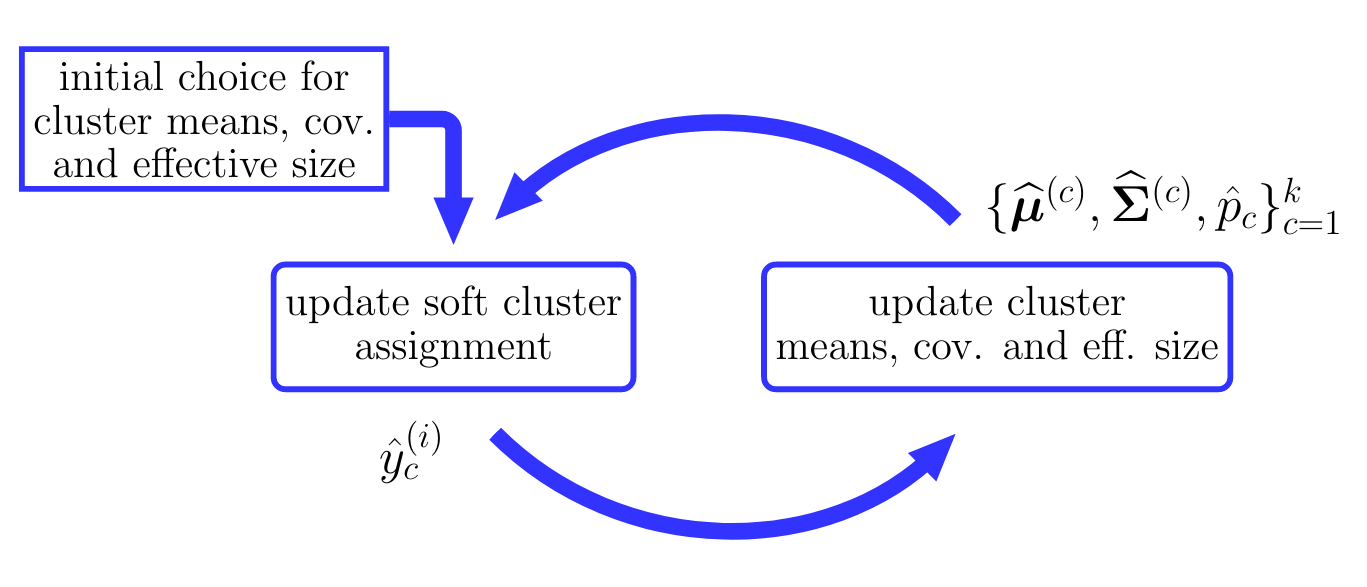

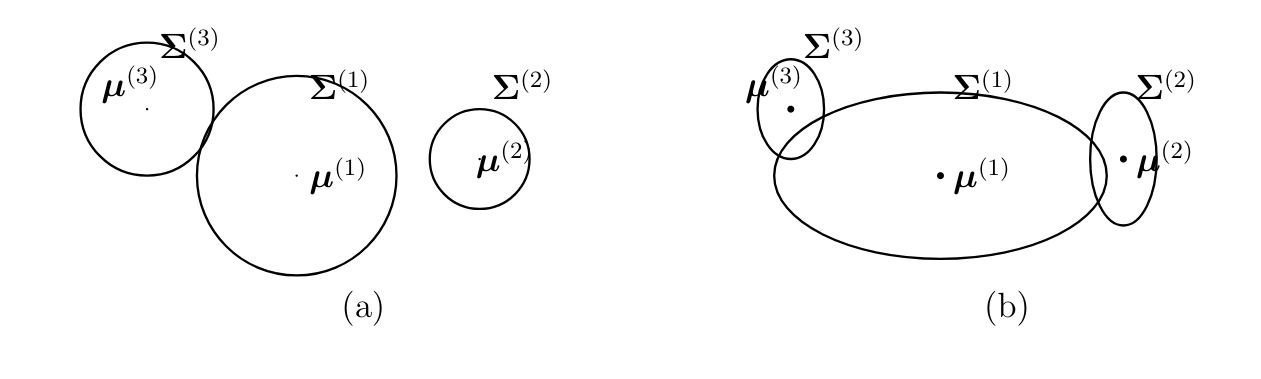

Similar to the spirit of Algorithm [[#alg:kmeans|k-means]] for hard clustering, we solve the above dilemma of <span acronym-label="softclustering" acronym-form="singular+short">soft clustering</span> by an alternating optimization scheme. This scheme, which is illustrated in Figure [[#fig_flow_softclustering|[fig_flow_softclustering]]], alternates between updating (optimizing) the predicted degrees of belonging (or soft cluster assignments) <math display="inline">\hat{\truelabel}^{(\sampleidx)}_{\clusteridx}</math>, for <math display="inline">\sampleidx=1,\ldots,\samplesize</math> and <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>, given the current <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimates <math display="inline">\{\widehat{\clustermean}^{(\clusteridx)},\widehat{\clustercov}^{(\clusteridx)},\hat{p}_{\clusteridx}\}_{\clusteridx=1}^{\nrcluster}</math> and then updating (optimizing) these <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimates based on the updated predictions <math display="inline">\hat{\truelabel}^{(\sampleidx)}_{\clusteridx}</math>. We summarize the resulting soft clustering method in Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]]. Each iteration of Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] consists of an update \eqref{equ_update_soft_cluster_assignm} for the degrees of belonging followed by an update (step [[#equ_GMM_update_step|[equ_GMM_update_step]]]) for the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameters. | |||

<div class="d-flex justify-content-center"> | |||

<span id="fig_flow_softclustering.png "></span> | |||

[[File:fig_flow_softclustering.png | 500px | thumb | The workflow of the soft clustering Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]]. Starting from an initial guess or estimate for the cluster parameters, the soft cluster assignments and cluster parameters are updated (improved) in an alternating fashion.]] | |||

</div> | |||

To analyze Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] it is helpful to interpret (the features of) <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s <math display="inline">\featurevec^{(\sampleidx)}</math> as realizations of <span acronym-label="iid" acronym-form="singular+short">iid</span> <span acronym-label="rv" acronym-form="singular+short">rv</span>s distributed according to a <span acronym-label="gmm" acronym-form="singular+short">GMM</span> \eqref{equ_cond_dist_GMM}-\eqref{equ_def_cluster_prob}. We can then understand Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] as a method for estimating the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameters based on observing realizations drawn from the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> \eqref{equ_cond_dist_GMM}-\eqref{equ_def_cluster_prob}. We can estimate the parameters of a probability distribution using the <span acronym-label="ml" acronym-form="singular+short">maximum likelihood</span> method (see Section [[#sec_max_iikelihood|[sec_max_iikelihood]]] and ). As its name suggests, <span acronym-label="ml" acronym-form="singular+short">maximum likelihood</span> methods estimate the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameters by maximizing the probability (density) <math display="block">\begin{equation}\label{equ_def_prob_den_GMM} | |||

%f^{({\rm GMM})} \big( \{ \clustermean^{(\clusteridx)}, \clustercov^{(\clusteridx)}, p_{\clusteridx} \}_{\clusteridx=1}^{\nrcluster}\big) \defeq | |||

p \big(\dataset; \{ \clustermean^{(\clusteridx)}, \clustercov^{(\clusteridx)}, p_{\clusteridx} \}_{\clusteridx=1}^{\nrcluster}\big)\end{equation}</math> of actually observing the <span acronym-label="datapoint" acronym-form="singular+short">data point</span>s in the dataset <math display="inline">\dataset</math>. | |||

<div class="d-flex justify-content-center"> | |||

<span id="fig_GMM_elippses" label="fig_GMM_elippses"></span> | |||

[[File:fig_GMM_elippses.png | 500px | thumb | The GMM \eqref{equ_cond_dist_GMM}, \eqref{equ_def_cluster_prob} results in a probability distribution \eqref{equ_def_GMM} for (feature vectors of) <span class="mw-gls mw-gls-first" data-name ="datapoint">data point</span>s which is a weighted sum of multivariate normal distributions <math>\mathcal{N}(\clustermean^{(\clusteridx)}, \clustercov^{(\clusteridx)})</math>. The weight of the <math>\clusteridx</math>-th component is the cluster probability <math> \prob{\clusteridx^{(\sampleidx)}=\clusteridx}</math>.]] | |||

</div> | |||

It can be shown that Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] is an instance of a generic approximate maximum likelihood technique referred to as expectation maximization <span acronym-label="em" acronym-form="singular+short">EM</span> (see for more details). In particular, each iteration of Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] updates the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimates such that the corresponding probability density \eqref{equ_def_prob_den_GMM} does not decrease . If we denote the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimate obtained after <math display="inline">\itercntr</math> iterations of Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] by <math display="inline">{\boldsymbol \theta}^{(\itercntr)}</math> , <math display="block">\begin{equation}\label{equ_proability_increasing_GMM}p \big(\dataset;{\boldsymbol \theta}^{(\itercntr\!+\!1)} \big) \geq p \big(\dataset;{\boldsymbol \theta}^{(\itercntr)} \big)\end{equation}</math> | |||

<proc label="A Soft-Clustering Algorithm" id="alg:softclustering"> | |||

'''Input:''' dataset <math display="inline">\dataset=\{ \featurevec^{(\sampleidx)}\}_{\sampleidx=1}^{\samplesize}</math>; number <math display="inline">\nrcluster</math> of clusters, initial <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimates <math display="inline">\{\widehat{\clustermean}^{(\clusteridx)},\widehat{\clustercov}^{(\clusteridx)},\hat{p}_{\clusteridx}\}_{\clusteridx=1}^{\nrcluster}</math> | |||

<ul style="list-style-type:number"> | |||

<li>'''repeat'''</li> | |||

<li><span id="equ_update_degree_of_belonging" label="equ_update_degree_of_belonging"></span> for each <math display="inline">\sampleidx=1,\ldots,\samplesize</math> and <math display="inline">\clusteridx=1,\ldots,\nrcluster</math>, update degrees of belonging <math display="block">\begin{align} | |||

\label{equ_update_soft_cluster_assignm} | |||

\hat{\truelabel}_{\clusteridx}^{(\sampleidx)} & \defeq \frac{\hat{p}_{\clusteridx} \mathcal{N}(\featurevec^{(\sampleidx)};\widehat{\clustermean}^{(\clusteridx)},\widehat{\clustercov}^{(\clusteridx)})}{\sum_{\clusteridx'=1}^{\nrcluster} \hat{p}_{\clusteridx'}\mathcal{N}(\featurevec^{(\sampleidx)};\widehat{\clustermean}^{(\clusteridx')},\widehat{\clustercov}^{(\clusteridx')})} %\nonumber \\ | |||

\end{align}</math> </li> | |||

<li><span id="equ_GMM_update_step" label="equ_GMM_update_step"></span> for each <math display="inline">\clusteridx \in \{1,\ldots,\nrcluster\}</math>, update <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimates: | |||

* <math display="inline">\hat{p}_{\clusteridx}\!\defeq\!\samplesize_{\clusteridx} /\samplesize</math> with effective cluster size <math display="inline">\samplesize_{\clusteridx} \defeq \sum\limits_{\sampleidx=1}^{\samplesize} \hat{\truelabel}_{\clusteridx}^{(\sampleidx)}</math> (cluster probability) | |||

* <math display="inline">\widehat{\clustermean}^{(\clusteridx)} \defeq (1/\samplesize_{\clusteridx}) \sum\limits_{\sampleidx=1}^{\samplesize} \hat{\truelabel}_{\clusteridx}^{(\sampleidx)} \featurevec^{(\sampleidx)}</math> (cluster mean) | |||

* <math display="inline">\widehat{\clustercov}^{(\clusteridx)} \defeq (1/\samplesize_{\clusteridx}) {\sum\limits_{\sampleidx=1}^{\samplesize} \hat{\truelabel}_{\clusteridx}^{(\sampleidx)} \big(\featurevec^{(\sampleidx)}\!-\!\widehat{\clustermean}^{(\clusteridx)}\big) \big(\featurevec^{(\sampleidx)}\!-\!\widehat{\clustermean}^{(\clusteridx)}\big)^{T} }</math> (cluster covariance matrix)</li> | |||

<li>'''until''' stopping criterion met</li> | |||

</ul> | |||

<span id="equ_conv_soft_clustering_algo" label="equ_conv_soft_clustering_algo"></span>'''Output:''' predicted degrees of belonging <math display="inline">\widehat{\labelvec}^{(\sampleidx)}=(\hat{\truelabel}_{1}^{(\sampleidx)},\ldots,\hat{y}_{\nrcluster}^{(\sampleidx)})^{T}</math> for <math display="inline">\sampleidx=1,\ldots,\samplesize</math>. | |||

</proc> | |||

As for Algorithm [[#alg:kmeans|k-means]], we can also interpret Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] as an instance of the <span acronym-label="dbscan" acronym-form="singular+short">ERM</span> principle discussed in Chapter [[#ch_Optimization|[ch_Optimization]]]. Indeed, maximizing the probability density \eqref{equ_def_prob_den_GMM} is equivalent to minimizing the <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span> <math display="block">\begin{equation}\label{equ_def_emp_risk_soft_clustering} | |||

\emperror \big({\boldsymbol \theta} \mid \dataset \big) \defeq | |||

- \log p \big( \dataset; {\boldsymbol \theta} \big) \mbox{ with GMM parameters } {\boldsymbol \theta} \defeq \{ \clustermean^{(\clusteridx)}, \clustercov^{(\clusteridx)}, p_{\clusteridx} \}_{\clusteridx=1}^{\nrcluster}\end{equation}</math> The <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span> \eqref{equ_def_emp_risk_soft_clustering} is the negative logarithm of the probability (density) \eqref{equ_def_prob_den_GMM} of observing the dataset <math display="inline">\dataset</math> as <span acronym-label="iid" acronym-form="singular+short">iid</span> realizations of the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> \eqref{equ_def_GMM}. The monotone increase in the probability density \eqref{equ_proability_increasing_GMM} achieved by the iterations of Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] translate into a monotone decrease of the <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span>, <math display="block">\begin{equation}\label{equ_emprisk_decreasing_GMM}\emperror \big({\boldsymbol \theta}^{(\itercntr)} \mid \dataset \big) \leq \emperror \big({\boldsymbol \theta}^{(\itercntr-1)} \mid \dataset \big) \mbox{ with iteration counter } \itercntr.\end{equation}</math> | |||

The monotone decrease \eqref{equ_emprisk_decreasing_GMM} in the <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span> \eqref{equ_def_emp_risk_soft_clustering} achieved by the iterations of Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] naturally lends to a stopping criterion. Let <math display="inline">\error_{\itercntr}</math> denote the <span acronym-label="emprisk" acronym-form="singular+short">empirical risk</span> \eqref{equ_def_emp_risk_soft_clustering} achieved by the <span acronym-label="gmm" acronym-form="singular+short">GMM</span> parameter estimates <math display="inline">{\boldsymbol \theta}^{(\itercntr)}</math> obtained after <math display="inline">\itercntr</math> iterations in Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]]. Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] stops iterating as soon as the decrease <math display="inline">\error_{\itercntr\!-\!1} - \error_{\itercntr}</math> achieved by the <math display="inline">\itercntr</math>-th iteration of Algorithm [[#alg:softclustering|Soft-Clustering Algorithm]] falls below a given (positive) threshold <math display="inline">\varepsilon>0</math>. | |||