Taylor Series

The subject of Section 7 was the function defined by a given power series. In contrast, in this section we start with a given function and ask whether there exists a power series that defines it. More precisely, if [math]f[/math] is a function whose domain contains the number [math]a[/math], does there exist a power series [math]\sum_{i=0}^\infty a_i (x - a)^i[/math] with nonzero radius of convergence that defines a function equal to [math]f[/math] on the interval of convergence of the power series? If the answer is yes, then the power series is uniquely determined. Specifically, it follows from Theorem (7.4), page 526, that [math]a_i = \frac{1}{i!} f^{(i)}(a)[/math] for every integer [math]i \geq 0[/math]. Hence

[math]f(x) = \sum_{i=0}^\infty \frac{1}{i!} f^{(i)}(a)(x - a)^i[/math]

for every [math]x[/math] in the interval of convergence of the power series.

Let [math]f[/math] be a function which has derivatives of every order at [math]a[/math]. The power series [math]\sum_{i=0}^\infty \frac{1}{i!} f^{(i)}(a)(x - a)^i[/math] is called the Taylor series of the function [math]f[/math] about the number [math]a[/math]. The existence of this series, whose definition is motivated by the preceding paragraph, requires only the existence of every derivative [math]f^{(i)}(a)[/math]. However, the natural inference that the existence of the Taylor series for a given function implies the convergence of the series to the values of the function is false. In a later theorem we shall give an additional condition which makes the inference true. Two examples of Taylor series are the series for [math]e^x[/math] and the series for [math]\ln(1 + x)[/math] developed in Example 1 of Section 7.

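The value of a convergent infinite series can be approximated by its partial sums. For a Taylor series [math]\sum_{i=0}^\infty \frac{1}{i!} f^{(i)}(a)(x-a)^i[/math], the [math]n[/math]th partial sum is a polynomial in [math]x - a[/math], which we shall denote by [math]T_n[/math]. The definition is as follows: Let [math]n[/math] be a nonnegative integer and [math]f[/math] a function such that [math]f^{(i)}(a)[/math] exists for every integer [math]i = 0, \ldots, n[/math]. Then the [math]n[/math]th Taylor approximation to the function [math]f[/math] about the number [math]a[/math] is the polynomial [math]T_n[/math] given by

[math]T_n(x) = \sum_{i=0}^n \frac{1}{i!} f^{(i)}(a)(x - a)^i = f(a) + f'(a)(x - a) + \frac{1}{2!} f''(a)(x - a)^2 + \cdots + \frac{1}{n!} f^{(n)}(a)(x - a)^n[/math]   (1)

for every real number [math]x[/math]. For each integer [math]k = 0, \ldots, n[/math], direct computation of the [math]k[/math]th derivative at [math]a[/math] of the Taylor polynomial [math]T_n[/math] shows that it is equal to [math]f^{(k)}(a)[/math]: differentiating [math]k[/math] times annihilates the terms of degree less than [math]k[/math], the terms of degree greater than [math]k[/math] still contain a factor [math]x - a[/math] and so vanish at [math]a[/math], and the term of degree [math]k[/math] becomes the constant [math]f^{(k)}(a)[/math]. Thus we have the simple but important result: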

The [math]n[/math]th Taylor approximation [math]T_n[/math] to the function [math]f[/math] about [math]a[/math] satisfies

[math]T_n^{(k)}(a) = f^{(k)}(a), \quad \text{for } k = 0, 1, \ldots, n.[/math]
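As a quick check of this result, the sketch below stores [math]T_n[/math] by its coefficients in powers of [math]x - a[/math], differentiates that list term by term, and evaluates at [math]a[/math]. The helper names and the sample derivative values (those of [math]1/x[/math] at [math]a = 1[/math]) are illustrative assumptions; exact rational arithmetic via `fractions.Fraction` avoids rounding.

```python
from fractions import Fraction
from math import factorial


def taylor_coefficients(derivs):
    """Coefficients f^(i)(a)/i! of T_n, given derivs = [f(a), f'(a), ..., f^(n)(a)]."""
    return [Fraction(d) / factorial(i) for i, d in enumerate(derivs)]


def differentiate(coeffs):
    """Differentiate sum c_i (x - a)^i once; the result is again in powers of x - a."""
    return [i * c for i, c in enumerate(coeffs)][1:]


def derivative_at_a(coeffs, k):
    """k-th derivative at x = a: differentiate k times, then keep the constant term."""
    for _ in range(k):
        coeffs = differentiate(coeffs)
    return coeffs[0] if coeffs else Fraction(0)


# Hypothetical sample: f(a), f'(a), f''(a), f'''(a) for f(x) = 1/x at a = 1.
derivs = [1, -1, 2, -6]
coeffs = taylor_coefficients(derivs)

# T_n^(k)(a) = f^(k)(a) for k = 0, ..., n, and the (n+1)st derivative of T_n is 0.
for k, d in enumerate(derivs):
    assert derivative_at_a(coeffs, k) == d
assert derivative_at_a(coeffs, len(derivs)) == 0
```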

Example

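Let [math]f[/math] be the function defined by [math]f(x) = \frac{1}{x}[/math]. For [math]n = 0, 1, 2,[/math] and 3, compute the Taylor polynomial [math]T_n[/math] for the function [math]f[/math] about the number 1, and superimpose the graph of each on the graph of [math]f[/math]. The derivatives are:

[math]f'(x) = -\frac{1}{x^2}, \quad f''(x) = \frac{2}{x^3}, \quad f'''(x) = -\frac{6}{x^4},[/math]

so that [math]f(1) = 1[/math], [math]f'(1) = -1[/math], [math]f''(1) = 2[/math], and [math]f'''(1) = -6[/math]. From the definition in (1), we therefore obtain

[math]\begin{align} T_0(x) &= 1, \\ T_1(x) &= 1 - (x - 1), \\ T_2(x) &= 1 - (x - 1) + (x - 1)^2, \\ T_3(x) &= 1 - (x - 1) + (x - 1)^2 - (x - 1)^3. \end{align}[/math]

These equations express the functions [math]T_n[/math] as polynomials in [math]x - 1[/math] rather than as polynomials in [math]x[/math]. The advantage of this form is that it exhibits most clearly the behavior of each approximation in the vicinity of the number 1. Each one can, of course, be expanded to get a polynomial in [math]x[/math]. If we do this, we obtain

[math]\begin{align} T_0(x) &= 1, \\ T_1(x) &= 2 - x, \\ T_2(x) &= 3 - 3x + x^2, \\ T_3(x) &= 4 - 6x + 4x^2 - x^3. \end{align}[/math]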

The graphs are shown in Figure 10. The larger the degree of the approximating polynomial, the more closely its graph “hugs” the graph of [math]f[/math] for values of [math]x[/math] close to 1.
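A short numerical check, assuming a sample point [math]x = 1.2[/math] chosen for illustration, confirms that the two forms of each [math]T_n[/math] agree and that the error [math]|f(x) - T_n(x)|[/math] shrinks as [math]n[/math] grows near 1:

```python
def f(x):
    return 1 / x


def T(n, x):
    """nth Taylor approximation to 1/x about 1; since f^(i)(1) = (-1)^i i!, every coefficient is (-1)^i."""
    return sum((-1) ** i * (x - 1) ** i for i in range(n + 1))


# Expanded forms of T_0, ..., T_3 as polynomials in x.
expanded = [
    lambda x: 1,
    lambda x: 2 - x,
    lambda x: 3 - 3 * x + x ** 2,
    lambda x: 4 - 6 * x + 4 * x ** 2 - x ** 3,
]

x = 1.2
errors = []
for n in range(4):
    assert abs(T(n, x) - expanded[n](x)) < 1e-12  # both forms agree
    errors.append(abs(f(x) - T(n, x)))
    print(f"n = {n}: T_n(1.2) = {T(n, x):.6f}, error = {errors[-1]:.6f}")
```

At a point farther from 1, such as [math]x = 2.5[/math], the same loop shows the errors growing with [math]n[/math] instead, which is why the comparison is restricted to values of [math]x[/math] close to 1.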

The basic relation between a function [math]f[/math] and the approximating Taylor polynomials [math]T_n[/math] will be presented in Theorem (8.3). In proving it, we shall use the following lemma, which is an extension of Rolle's Theorem (see pages 111 and 112).

Let [math]F[/math] be a function with the property that the [math](n + 1)[/math]st derivative [math]F^{(n+1)}(t)[/math] exists for every [math]t[/math] in a closed interval [math][a, b][/math], where [math]a \lt b[/math]. If

(i) [math]F^{(i)}(a) = 0[/math] for [math]i = 0, 1, \ldots, n[/math], and

(ii) [math]F(b) = 0[/math],

then there exists a real number [math]c[/math] in the open interval [math](a, b)[/math] such that [math]F^{(n+1)}(c) = 0[/math].

The idea of the proof is to apply Rolle's Theorem over and over again, starting with [math]i = 0[/math] and finishing with [math]i = n[/math]. (In checking the continuity requirements of Rolle's Theorem, remember that if a function has a derivative at a point, then it is continuous there.) A proof by induction on [math]n[/math] proceeds as follows. If [math]n = 0[/math], the result is a direct consequence of Rolle's Theorem applied to [math]F[/math] on [math][a, b][/math], since [math]F(a) = F(b) = 0[/math]. We must next prove that if the lemma is true for [math]n = k[/math], then it is also true for [math]n = k + 1[/math]. So suppose that [math]F[/math] satisfies the hypotheses of (8.2) with [math]n = k + 1[/math]. Then [math]F^{(k+2)}(t)[/math] exists for every [math]t[/math] in [math][a, b][/math], and [math]F^{(i)}(a) = 0[/math] for [math]i = 0, \ldots, k + 1[/math]. In particular, [math]F[/math] also satisfies the hypotheses with [math]n = k[/math], so by the induction assumption there exists a real number [math]c[/math] in the open interval [math](a, b)[/math] such that [math]F^{(k+1)}(c) = 0[/math]. We shall prove that there exists another real number [math]c'[/math] in [math](a, b)[/math] such that [math]F^{(k+2)}(c') = 0[/math]. The function [math]F^{(k+1)}[/math] satisfies the premises of Rolle's Theorem on [math][a, c][/math], since it is continuous on [math][a, c][/math] (being differentiable there), differentiable on [math](a, c)[/math], and [math]F^{(k+1)}(a) = F^{(k+1)}(c) = 0[/math]. Hence there exists a real number [math]c'[/math] in [math](a, c)[/math] with the property that [math]F^{(k+2)}(c') = 0[/math]. Since [math](a, c)[/math] is a subset of [math](a, b)[/math], the number [math]c'[/math] is also in [math](a, b)[/math], and this completes the proof.
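To see the lemma in a concrete case, take the hypothetical function [math]F(t) = t^2(t - 1)[/math] on [math][0, 1][/math] with [math]n = 1[/math]: then [math]F(0) = F'(0) = 0[/math] and [math]F(1) = 0[/math], so the lemma promises a zero of [math]F''(t) = 6t - 2[/math] in [math](0, 1)[/math]. The sketch below locates it by bisection, a root-finding method chosen here for illustration and not part of the proof.

```python
def F(t):
    # Hypothetical test function on [a, b] = [0, 1], used with n = 1.
    return t ** 2 * (t - 1)


def dF(t):
    return 3 * t ** 2 - 2 * t


def d2F(t):
    return 6 * t - 2


def bisect(g, lo, hi, tol=1e-12):
    """Find a zero of g in [lo, hi], assuming g(lo) and g(hi) have opposite signs."""
    glo = g(lo)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        gm = g(mid)
        if (gm < 0) == (glo < 0):
            lo, glo = mid, gm  # sign change lies in [mid, hi]
        else:
            hi = mid  # sign change lies in [lo, mid]
    return (lo + hi) / 2


a, b = 0.0, 1.0
assert F(a) == 0 and dF(a) == 0  # hypothesis (i) with n = 1
assert F(b) == 0                 # hypothesis (ii)
c = bisect(d2F, a, b)
print(c)  # a number in (0, 1) where F''(c) = 0
```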

We come now to the main theorem of the section:

Let [math]f[/math] be a function with the property that the [math](n + 1)[/math]st derivative [math]f^{(n+1)}(t)[/math] exists for every [math]t[/math] in the closed interval [math][a, b][/math], where [math]a \lt b[/math]. Then there exists a real number [math]c[/math] in the open interval [math](a, b)[/math] such that

[math]f(b) = \sum_{i=0}^n \frac{1}{i!} f^{(i)}(a)(b - a)^i + \frac{1}{(n+1)!} f^{(n+1)}(c)(b - a)^{n+1}.[/math]

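Since [math]b - a \neq 0[/math], we may define the real number [math]K[/math] by the equation

[math]f(b) = \sum_{i=0}^n \frac{1}{i!} f^{(i)}(a)(b - a)^i + \frac{K}{(n+1)!}(b - a)^{n+1}.[/math]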
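The proof can be completed with the lemma. The sketch below is one standard way to do it, under the assumption that the auxiliary function [math]F[/math] (our notation) is built from the Taylor polynomial and the number [math]K[/math] just defined; it uses the earlier result [math]T_n^{(k)}(a) = f^{(k)}(a)[/math] for [math]k = 0, \ldots, n[/math].

```latex
% K is the number with
%   f(b) = \sum_{i=0}^{n} \frac{1}{i!} f^{(i)}(a)(b-a)^i + \frac{K}{(n+1)!}(b-a)^{n+1}.
% Auxiliary function (our notation), for a \le t \le b:
F(t) = f(t) - \sum_{i=0}^{n} \frac{1}{i!} f^{(i)}(a)(t-a)^i - \frac{K}{(n+1)!}(t-a)^{n+1}

% Since T_n^{(i)}(a) = f^{(i)}(a), and every derivative of order at most n
% of (t-a)^{n+1} vanishes at t = a:
F^{(i)}(a) = f^{(i)}(a) - f^{(i)}(a) - 0 = 0, \qquad i = 0, 1, \ldots, n

% By the choice of K:
F(b) = 0

% The Taylor polynomial has degree n, so its (n+1)st derivative is zero:
F^{(n+1)}(t) = f^{(n+1)}(t) - K, \qquad a \le t \le b

% The lemma gives c in (a, b) with F^{(n+1)}(c) = 0, that is,
K = f^{(n+1)}(c)
```

Substituting [math]K = f^{(n+1)}(c)[/math] into the equation that defines [math]K[/math] gives exactly the conclusion of Taylor's Theorem.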

It has been assumed in the statement and proof of Taylor's Theorem that [math]a \lt b[/math]. However, if [math]b \lt a[/math], the same statement is true except that the [math](n + 1)[/math]st derivative exists in [math][b, a][/math] and the number [math]c[/math] lies in [math](b, a)[/math]. Except for the obvious modifications, the proof is identical to the one given. Suppose now that we are given a function [math]f[/math] such that [math]f^{(n+1)}[/math] exists at every point of some interval [math]I[/math] containing the number [math]a[/math]. Since Taylor's Theorem holds for any choice of [math]b[/math] in [math]I[/math] other than [math]a[/math], we may regard [math]b[/math] as the value of a variable. If we denote the variable by [math]x[/math], we have:

If [math]f^{(n+1)}(t)[/math] exists for every [math]t[/math] in an interval [math]I[/math] containing the number [math]a[/math], then, for every [math]x[/math] in [math]I[/math] other than [math]a[/math], there exists a real number [math]c[/math] in the open interval with endpoints [math]a[/math] and [math]x[/math] such that

[math]f(x) = \sum_{i=0}^n \frac{1}{i!} f^{(i)}(a)(x - a)^i + R_n, \quad \text{where} \quad R_n = \frac{1}{(n+1)!} f^{(n+1)}(c)(x - a)^{n+1}.[/math]

The conclusion of this theorem is frequently called Taylor's Formula, and [math]R_n[/math] is called the Remainder. As before, using the notation for the approximating Taylor polynomials, we can write the formula succinctly as

[math]f(x) = T_n(x) + R_n.[/math]   (3)
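For [math]f(x) = e^x[/math] every derivative is [math]e^x[/math], so the number [math]c[/math] in Taylor's Formula can be solved for explicitly: [math]e^c = (n+1)!\,R_n/(x - a)^{n+1}[/math]. The sketch below (function names and sample points are illustrative) computes [math]c[/math] this way and checks that it lies strictly between [math]a[/math] and [math]x[/math], for [math]x[/math] on either side of [math]a[/math].

```python
import math


def taylor_exp(n, a, x):
    """T_n(x) for f = exp about a; every derivative of exp is exp, so f^(i)(a) = e^a."""
    return sum(math.exp(a) * (x - a) ** i / math.factorial(i) for i in range(n + 1))


def remainder_point(n, a, x):
    """Solve f(x) = T_n(x) + f^(n+1)(c) (x - a)^(n+1) / (n+1)! for c, with f = exp.

    Here f^(n+1)(c) = e^c, so c is the logarithm of (n+1)! R_n / (x - a)^(n+1).
    Assumes x != a.
    """
    r_n = math.exp(x) - taylor_exp(n, a, x)
    return math.log(r_n * math.factorial(n + 1) / (x - a) ** (n + 1))


# Sample (a, x) pairs chosen for illustration, with x on both sides of a.
for a, x in [(0.0, 1.0), (0.0, -1.0), (1.0, 2.5)]:
    for n in range(6):
        c = remainder_point(n, a, x)
        assert min(a, x) < c < max(a, x)  # c lies in the open interval between a and x
    print(a, x, remainder_point(2, a, x))
```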

Example

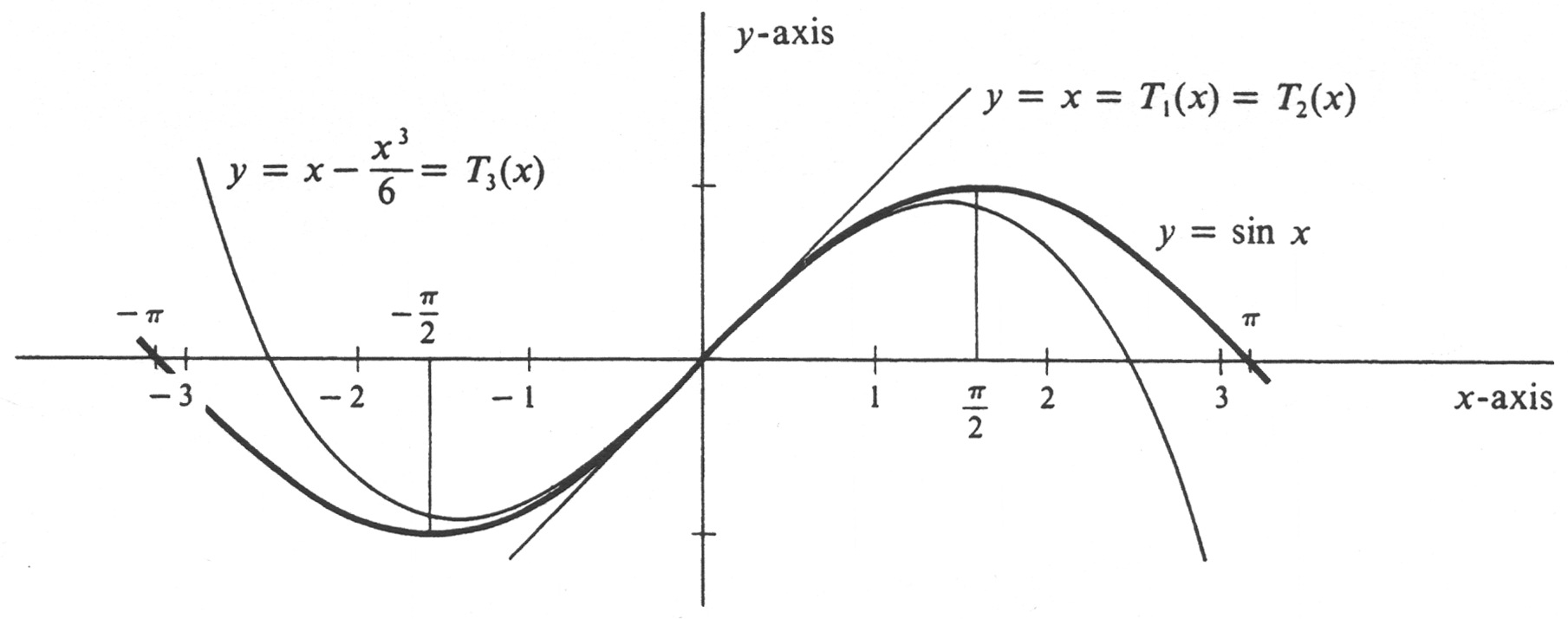

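(a) Compute Taylor's Formula with the Remainder for [math]f(x) = \sin x[/math] and [math]a = 0[/math], with [math]n[/math] arbitrary. (b) Draw the graphs of [math]\sin x[/math] and of the polynomials [math]T_n(x)[/math] for [math]n = 1, 2,[/math] and 3. (c) Prove that, for every real number [math]x[/math],

[math]\sin x = \sum_{i=0}^\infty \frac{(-1)^i}{(2i+1)!} x^{2i+1} = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \frac{x^7}{7!} + \cdots.[/math]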

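The first four derivatives are given by

[math]f'(x) = \cos x, \quad f''(x) = -\sin x, \quad f'''(x) = -\cos x, \quad f^{(4)}(x) = \sin x.[/math]

Thus the derivatives of [math]\sin x[/math] follow a regular cycle which repeats after every fourth differentiation. In general, the even-order derivatives are given by

[math]f^{(2i)}(x) = (-1)^i \sin x, \quad i = 0, 1, 2, \ldots,[/math]

and the odd-order derivatives by

[math]f^{(2i+1)}(x) = (-1)^i \cos x, \quad i = 0, 1, 2, \ldots.[/math]

Since [math]\sin 0 = 0[/math] and [math]\cos 0 = 1[/math], it follows that

[math]f^{(2i)}(0) = 0 \quad \text{and} \quad f^{(2i+1)}(0) = (-1)^i.[/math]

Hence the [math]n[/math]th Taylor approximation is the polynomial

[math]T_n(x) = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \frac{x^7}{7!} + \cdots \quad (\text{terms of degree at most } n),[/math]

in which the coefficient of every even power of [math]x[/math] is zero. To handle this alternation, we define the integer [math]k[/math] by the rule

[math]k = \begin{cases} \dfrac{n-1}{2} & \text{if } n \text{ is odd}, \\ \dfrac{n-2}{2} & \text{if } n \text{ is even}, \end{cases}[/math]   (4)

so that [math]2k + 1[/math] is the largest odd integer not exceeding [math]n[/math]. It then follows that

[math]T_n(x) = \sum_{i=0}^{k} \frac{(-1)^i}{(2i+1)!} x^{2i+1} = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \cdots + \frac{(-1)^k}{(2k+1)!} x^{2k+1}.[/math]   (5)

[If [math]n = 0[/math], we have the exception [math]T_0(x) = 0[/math].] For the remainder, we obtain

[math]R_n = \frac{1}{(n+1)!} f^{(n+1)}(c)\, x^{n+1} = \begin{cases} \dfrac{(-1)^{(n+1)/2} \sin c}{(n+1)!}\, x^{n+1} & \text{if } n \text{ is odd}, \\ \dfrac{(-1)^{n/2} \cos c}{(n+1)!}\, x^{n+1} & \text{if } n \text{ is even}, \end{cases}[/math]   (6)

for some real number [math]c[/math] (which depends on both [math]x[/math] and [math]n[/math]) such that [math]|c| \lt |x|[/math]. The Taylor formula for [math]\sin x[/math] about the number 0 is therefore given by

[math]\sin x = \sum_{i=0}^{k} \frac{(-1)^i}{(2i+1)!} x^{2i+1} + R_n,[/math]

where [math]k[/math] is defined by equation (4), and the remainder [math]R_n[/math] by (6). For part (b), the approximating polynomials [math]T_1, T_2,[/math] and [math]T_3[/math] can be read directly from equation (5) [together with (4)]. We obtain

[math]T_1(x) = x, \quad T_2(x) = x, \quad T_3(x) = x - \frac{x^3}{3!} = x - \frac{x^3}{6}.[/math]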

Their graphs together with the graph of [math]\sin x[/math] are shown in Figure 11.
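Rule (4) and formula (5) translate directly into code. In the sketch below, [math]k[/math] is computed as [math]\lfloor (n-1)/2 \rfloor[/math], that is, [math]2k + 1[/math] is the largest odd integer not exceeding [math]n[/math]; for [math]n = 0[/math] this gives [math]k = -1[/math], an empty sum, and hence the exception [math]T_0(x) = 0[/math]. The function names and the sample point are arbitrary choices.

```python
import math


def k_of(n):
    """Integer k of rule (4): 2k + 1 is the largest odd integer not exceeding n.

    For n = 0 this gives k = -1, so the sum in T below is empty and T_0(x) = 0.
    """
    return (n - 1) // 2


def T(n, x):
    """nth Maclaurin approximation to sin x, following (5)."""
    return sum((-1) ** i * x ** (2 * i + 1) / math.factorial(2 * i + 1)
               for i in range(k_of(n) + 1))


x = 0.7
print(T(1, x), T(2, x), T(3, x), math.sin(x))
```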

To prove that [math]\sin x[/math] can be defined by the infinite power series given in (c), we must show that, for every real number [math]x[/math],

[math]\sin x = \lim_{n \rightarrow \infty} \sum_{i=0}^{k} \frac{(-1)^i}{(2i+1)!} x^{2i+1},[/math]

where [math]k[/math] is the integer defined in (4). Since [math]\sin x = T_n(x) + R_n[/math], an equivalent proposition is

[math]\lim_{n \rightarrow \infty} R_n = 0.[/math]

To prove the latter, we use the important fact that the absolute values of the functions sine and cosine are never greater than 1. Hence, in the expression for [math]R_n[/math] in (6), we know that [math]|\cos c| \leq 1[/math] and [math]|\sin c| \leq 1[/math]. It therefore follows from (6) that

[math]|R_n| \leq \frac{|x|^{n+1}}{(n+1)!}.[/math]

It is easy to show by the Ratio Test [see Problem 4(b), page 510] that [math]\lim_{n \rightarrow \infty} \frac{|x|^{n+1}}{(n+1)!} = 0[/math]. Hence [math]\lim_{n \rightarrow \infty} R_n = 0[/math], and we have proved that

[math]\sin x = \sum_{i=0}^\infty \frac{(-1)^i}{(2i+1)!} x^{2i+1} = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \cdots \quad \text{for every real number } x.[/math]
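The estimate [math]|R_n| \leq |x|^{n+1}/(n+1)![/math] obtained from (6) can be checked numerically. The sketch below (sample points and the cutoff [math]n \lt 40[/math] are arbitrary choices, with a small tolerance for floating-point rounding) confirms the bound and shows the error becoming negligible even for [math]x = -7[/math], where the early partial sums are poor.

```python
import math


def T(n, x):
    """nth Maclaurin approximation to sin x, with k = (n - 1) // 2 as in rule (4)."""
    k = (n - 1) // 2
    return sum((-1) ** i * x ** (2 * i + 1) / math.factorial(2 * i + 1)
               for i in range(k + 1))


def remainder_bound(n, x):
    """Bound |x|^(n+1) / (n+1)!, which follows from |sin c| <= 1 and |cos c| <= 1."""
    return abs(x) ** (n + 1) / math.factorial(n + 1)


for x in (0.5, 3.0, -7.0):
    for n in range(40):
        error = abs(math.sin(x) - T(n, x))
        assert error <= remainder_bound(n, x) + 1e-12  # tolerance for rounding
    print(f"x = {x}: |sin x - T_39(x)| = {abs(math.sin(x) - T(39, x)):.2e}")
```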

The form of the remainder in Taylor's Theorem provides one answer to the question posed at the beginning of the section, which, briefly stated, was: When can a given function be defined by a power series? The answer provided in the following theorem is obtained by a direct generalization of the method used to establish the convergence of the Taylor series for [math]\sin x[/math].

Let [math]f[/math] be a function which has derivatives of every order at every point of an interval [math]I[/math] containing the number [math]a[/math]. If the derivatives are uniformly bounded on [math]I[/math], i.e., if there exists a real number [math]B[/math] such that [math]|f^{(n)}(t)| \leq B[/math] for every integer [math]n \geq 0[/math] and every [math]t[/math] in [math]I[/math], then

[math]f(x) = \sum_{i=0}^\infty \frac{1}{i!} f^{(i)}(a)(x - a)^i[/math]

for every [math]x[/math] in [math]I[/math].

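Since [math]f(x) = T_n(x) + R_n[/math] [see Theorem (8.4) and formula (3)], we must prove that [math]f(x) = \lim_{n \rightarrow \infty} T_n(x)[/math], or, equivalently, that [math]\lim_{n \rightarrow \infty} R_n = 0[/math]. (If [math]x = a[/math], there is nothing to prove, since [math]T_n(a) = f(a)[/math] for every [math]n[/math]; so we may assume [math]x \neq a[/math].) Generally speaking, the number [math]c[/math] which appears in the expression for the remainder [math]R_n[/math] will be different for each integer [math]n[/math] and each [math]x[/math] in [math]I[/math]. But since [math]c[/math] lies between [math]a[/math] and [math]x[/math], it belongs to [math]I[/math], and since the number [math]B[/math] is a bound for the absolute values of all derivatives of [math]f[/math] everywhere in [math]I[/math], we have [math]|f^{(n+1)}(c)| \leq B[/math]. Hence

[math]|R_n| = \frac{|f^{(n+1)}(c)|}{(n+1)!} |x - a|^{n+1} \leq B \frac{|x - a|^{n+1}}{(n+1)!}.[/math]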

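However [see Problem 4(b), page 510],

[math]\lim_{n \rightarrow \infty} \frac{|x - a|^{n+1}}{(n+1)!} = 0,[/math]

and therefore [math]\lim_{n \rightarrow \infty} R_n = 0[/math], which completes the proof.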
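As an illustration of Theorem (8.5), take [math]f(x) = e^x[/math], [math]a = 0[/math], and the interval [math]I = [-2, 2][/math], an arbitrary choice. Every derivative is [math]e^t[/math], so [math]B = e^2[/math] is a uniform bound on [math]I[/math], and the estimate [math]|R_n| \leq B|x - a|^{n+1}/(n+1)![/math] from the proof can be checked directly (with a small tolerance for floating-point rounding):

```python
import math

# Illustrative choice: f = exp, a = 0, I = [-2, 2]; all derivatives e^t are bounded by B = e^2 on I.
B = math.exp(2)


def T(n, x):
    """nth Maclaurin approximation to e^x."""
    return sum(x ** i / math.factorial(i) for i in range(n + 1))


for x in (-2.0, -0.5, 1.0, 2.0):
    for n in range(30):
        bound = B * abs(x) ** (n + 1) / math.factorial(n + 1)
        assert abs(math.exp(x) - T(n, x)) <= bound + 1e-12  # tolerance for rounding
    print(f"x = {x}: |e^x - T_29(x)| = {abs(math.exp(x) - T(29, x)):.2e}")
```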

It is an important fact, referred to at the beginning of the section, that the convergence of the Taylor series of a function to the values of that function cannot be inferred from the existence of the derivatives alone. In Theorem (8.5), for example, we added the very strong hypothesis that all the derivatives of [math]f[/math] are uniformly bounded on [math]I[/math]. The function [math]f[/math] defined by

[math]f(x) = \begin{cases} e^{-1/x^2} & \text{if } x \neq 0, \\ 0 & \text{if } x = 0 \end{cases}[/math]

has the property that [math]f^{(n)}(x)[/math] exists for every integer [math]n \geq 0[/math] and every real number [math]x[/math]. Moreover, it can be shown that [math]f^{(n)}(0) = 0[/math] for every [math]n \geq 0[/math]. It follows that the Taylor series about 0 for this function is the trivial power series [math]\sum_{i=0}^\infty 0 \cdot x^i[/math]. This series converges to 0 for every real number [math]x[/math], and so it does not converge to [math]f(x)[/math] except at [math]x = 0[/math], because [math]f(x) \gt 0[/math] for every [math]x \neq 0[/math].

When a Taylor polynomial or series is computed about the number zero, as in Example 2, it is traditional to associate it with the name of the mathematician Maclaurin instead of that of Taylor. Thus the Maclaurin series for a given function is a power series in [math]x[/math], and is simply the special case of the Taylor series in which [math]a = 0[/math].

Suppose that, for a given [math]n[/math], we replace the values of a function [math]f[/math] by those of the [math]n[/math]th Taylor approximation to the function about some number [math]a[/math]. How good is the resulting approximation? The answer depends on the interval (containing [math]a[/math]) over which we wish to use the values of the polynomial [math]T_n[/math]. Since [math]f(x) - T_n(x) = R_n[/math], the problem becomes one of finding a bound for [math]|R_n|[/math] over the interval in question.

Example

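(a) Compute the first three terms of the Taylor series of the function [math]f(x) = (x + 1)^{1/3}[/math] about [math]x = 7[/math]. That is, compute

[math]T_2(x) = f(7) + f'(7)(x - 7) + \frac{1}{2!} f''(7)(x - 7)^2.[/math]

(b) Show that [math]T_2(x)[/math] approximates [math]f(x)[/math] to within [math]\frac{5}{3^4 \cdot 2^8} \approx 0.00024[/math] for every [math]x[/math] in the interval [7, 8].

Taking derivatives, we get

[math]f'(x) = \frac{1}{3}(x + 1)^{-2/3}, \quad f''(x) = -\frac{2}{3^2}(x + 1)^{-5/3}, \quad f'''(x) = \frac{2 \cdot 5}{3^3}(x + 1)^{-8/3}.[/math]

Hence, since [math]7 + 1 = 8 = 2^3[/math],

[math]f(7) = 2, \quad f'(7) = \frac{1}{3 \cdot 2^2} = \frac{1}{12}, \quad f''(7) = -\frac{2}{3^2 \cdot 2^5} = -\frac{1}{144},[/math]

and the polynomial approximation to [math]f(x)[/math] called for in (a) is therefore

[math]T_2(x) = 2 + \frac{1}{12}(x - 7) - \frac{1}{288}(x - 7)^2.[/math]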

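For part (b), we have [math]|f(x) - T_2(x)| = |R_2|[/math] and

[math]R_2 = \frac{1}{3!} f'''(c)(x - 7)^3[/math]

for some number [math]c[/math] which is between [math]x[/math] and 7. To obtain a bound for [math]|R_2|[/math] over the prescribed interval [7, 8], we observe that in that interval the maximum value of [math]|x - 7|[/math] is 1, attained when [math]x = 8[/math], and, since [math]f'''(t) = \frac{2 \cdot 5}{3^3}(t + 1)^{-8/3}[/math] is positive and decreasing, the maximum value of [math]|f'''(t)|[/math] occurs when [math]t = 7[/math]. Hence

[math]|R_2| \leq \frac{1}{3!} f'''(7) \cdot 1^3.[/math]

Since [math]f'''(7) = \frac{2 \cdot 5}{3^3 \cdot 2^8}[/math], we get

[math]|R_2| \leq \frac{2 \cdot 5}{2 \cdot 3 \cdot 3^3 \cdot 2^8} = \frac{5}{3^4 \cdot 2^8} \approx 0.000241.[/math]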

Hence for every [math]x[/math] in the interval [7, 8], the difference in absolute value between [math](x + 1)^{1/3}[/math] and the quadratic polynomial [math]T_2(x)[/math] is less than 0.00025.
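A numerical spot check of this bound: the sketch below evaluates [math]|f(x) - T_2(x)|[/math] at 1001 evenly spaced points of [7, 8], using [math]T_2(x) = 2 + (x - 7)/12 - (x - 7)^2/288[/math] from [math]f(7) = 2[/math], [math]f'(7) = 1/12[/math], [math]f''(7) = -1/144[/math]. The sampling is our own illustrative choice, so it only illustrates the proved bound rather than replacing it.

```python
def f(x):
    return (x + 1) ** (1 / 3)


def T2(x):
    """Quadratic Taylor approximation to (x + 1)^(1/3) about 7."""
    return 2 + (x - 7) / 12 - (x - 7) ** 2 / 288


bound = 5 / (3 ** 4 * 2 ** 8)  # the bound on |R_2| derived above

# Sample [7, 8] at 1001 evenly spaced points (an illustrative check, not a proof).
samples = [7 + j / 1000 for j in range(1001)]
max_error = max(abs(f(x) - T2(x)) for x in samples)

print(f"bound = {bound:.6f}, largest sampled error = {max_error:.6f}")
assert max_error <= bound < 0.00025
```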

General references

Doyle, Peter G. (2008). "Crowell and Slesnick's Calculus with Analytic Geometry" (PDF). Retrieved Oct 29, 2024.