Model Selection and Regularization

Ridge Regression

Tikhonov regularization, named for Andrey Tikhonov, is a method of regularization of ill-posed problems. Also known as ridge regression,[a] it is particularly useful to mitigate the problem of multicollinearity in linear regression, which commonly occurs in models with large numbers of parameters.[1] In general, the method provides improved efficiency in parameter estimation problems in exchange for a tolerable amount of bias (see bias–variance tradeoff).[2]

In the simplest case, the problem of a near-singular moment matrix [math](\mathbf{X}^\mathsf{T}\mathbf{X})[/math] is alleviated by adding positive elements to the diagonals, thereby decreasing its condition number. Analogous to the ordinary least squares estimator, the simple ridge estimator is then given by

where [math]\mathbf{y}[/math] is the regressand, [math]\mathbf{X}[/math] is the design matrix, [math]\mathbf{I}[/math] is the identity matrix, and the ridge parameter [math]\lambda \geq 0[/math] serves as the constant shifting the diagonals of the moment matrix.[3] It can be shown that this estimator is the solution to the least squares problem subject to the constraint [math]\beta^\mathsf{T}\beta = c[/math], which can be expressed as a Lagrangian:

which shows that [math]\lambda[/math] is nothing but the Lagrange multiplier of the constraint. Typically, [math]\lambda[/math] is chosen according to a heuristic criterion, so that the constraint will not be satisfied exactly. Specifically in the case of [math]\lambda = 0[/math], in which the constraint is non-binding, the ridge estimator reduces to ordinary least squares.

Tikhonov regularization

Suppose that for a known matrix [math]A[/math] and vector [math]\mathbf{b}[/math], we wish to find a vector [math]\mathbf{x}[/math] such that

The standard approach is ordinary least squares linear regression. However, if no [math]\mathbf{x}[/math] satisfies the equation or more than one [math]\mathbf{x}[/math] does—that is, the solution is not unique—the problem is said to be ill posed. In such cases, ordinary least squares estimation leads to an overdetermined, or more often an underdetermined system of equations. Most real-world phenomena have the effect of low-pass filters in the forward direction where [math]A[/math] maps [math]\mathbf{x}[/math] to [math]\mathbf{b}[/math]. Therefore, in solving the inverse-problem, the inverse mapping operates as a high-pass filter that has the undesirable tendency of amplifying noise (eigenvalues / singular values are largest in the reverse mapping where they were smallest in the forward mapping). In addition, ordinary least squares implicitly nullifies every element of the reconstructed version of [math]\mathbf{x}[/math] that is in the null-space of [math]A[/math], rather than allowing for a model to be used as a prior for [math]\mathbf{x}[/math]. Ordinary least squares seeks to minimize the sum of squared residuals, which can be compactly written as

where [math]\|\cdot\|_2[/math] is the Euclidean norm.

In order to give preference to a particular solution with desirable properties, a regularization term can be included in this minimization:

for some suitably chosen Tikhonov matrix [math]\Gamma [/math]. In many cases, this matrix is chosen as a scalar multiple of the identity matrix ([math]\Gamma = \alpha I[/math]), giving preference to solutions with smaller norms; this is known as L2 regularization.[4] In other cases, high-pass operators (e.g., a difference operator or a weighted Fourier operator) may be used to enforce smoothness if the underlying vector is believed to be mostly continuous. This regularization improves the conditioning of the problem, thus enabling a direct numerical solution. An explicit solution, denoted by [math]\hat{x}[/math], is given by

The effect of regularization may be varied by the scale of matrix [math]\Gamma[/math]. For [math]\Gamma = 0[/math] this reduces to the unregularized least-squares solution, provided that [math](A^{\mathsf{T}}A)^{-1}[/math] exists.

[math]L_2[/math] regularization is used in many contexts aside from linear regression, such as classification with logistic regression or support vector machines,[5] and matrix factorization.[6]

Lasso

In statistics and machine learning, lasso (least absolute shrinkage and selection operator; also Lasso or LASSO) is a regression analysis method that performs both variable selection and regularization in order to enhance the prediction accuracy and interpretability of the resulting statistical model. It was originally introduced in geophysics,[7] and later by Robert Tibshirani,[8] who coined the term.

Lasso was originally formulated for linear regression models. This simple case reveals a substantial amount about the estimator. These include its relationship to ridge regression and best subset selection and the connections between lasso coefficient estimates and so-called soft thresholding. It also reveals that (like standard linear regression) the coefficient estimates do not need to be unique if covariates are collinear.

Though originally defined for linear regression, lasso regularization is easily extended to other statistical models including generalized linear models, generalized estimating equations, proportional hazards models, and M-estimators.[8][9] Lasso's ability to perform subset selection relies on the form of the constraint and has a variety of interpretations including in terms of geometry, Bayesian statistics and convex analysis.

History

Lasso was introduced in order to improve the prediction accuracy and interpretability of regression models. It selects a reduced set of the known covariates for use in a model.[8][7]

Lasso was developed independently in geophysics literature in 1986, based on prior work that used the [math]\ell^1[/math] penalty for both fitting and penalization of the coefficients. Statistician Robert Tibshirani independently rediscovered and popularized it in 1996, based on Breiman's nonnegative garrote.[7][10]

Prior to lasso, the most widely used method for choosing covariates was stepwise selection. That approach only improves prediction accuracy in certain cases, such as when only a few covariates have a strong relationship with the outcome. However, in other cases, it can increase prediction error.

At the time, ridge regression was the most popular technique for improving prediction accuracy. Ridge regression improves prediction error by shrinking the sum of the squares of the regression coefficients to be less than a fixed value in order to reduce overfitting, but it does not perform covariate selection and therefore does not help to make the model more interpretable.

Lasso achieves both of these goals by forcing the sum of the absolute value of the regression coefficients to be less than a fixed value, which forces certain coefficients to zero, excluding them from impacting prediction. This idea is similar to ridge regression, which also shrinks the size of the coefficients, however Ridge Regression tends to set far fewer coefficients to zero.

Least squares

Consider a sample consisting of [math]N[/math] cases, each of which consists of [math]p[/math] covariates and a single outcome. Let [math] y_i [/math] be the outcome and [math] x_i:=(x_1,x_2,\ldots,x_p)_i^T[/math] be the covariate vector for the i th case. Then the objective of lasso is to solve

Here [math] \beta_0 [/math] is the constant coefficient, [math] \beta:=(\beta_1,\beta_2,\ldots, \beta_p)[/math] is the coefficient vector, and [math]t[/math] is a prespecified free parameter that determines the degree of regularization.

Letting [math] X [/math] be the covariate matrix, so that [math] X_{ij} = (x_i)_j [/math] and [math]x_i^T[/math] is the [math]i[/math] th row of [math]X[/math], the expression can be written more compactly as

where [math] \| u \|_p = \left( \sum_{i=1}^N | u_i |^p \right)^{1/p} [/math] is the standard [math] \ell^p [/math] norm.

Denoting the scalar mean of the data points [math]x_i[/math] by [math]\bar{x}[/math] and the mean of the response variables [math]y_i[/math] by [math]\bar{y}[/math], the resulting estimate for [math]\beta_0[/math] is [math] \hat{\beta}_0 = \bar{y} - \bar{x}^T \beta [/math], so that

and therefore it is standard to work with variables that have been made zero-mean. Additionally, the covariates are typically standardized [math] \textstyle \left( \sum_{i=1}^N x_{i}^2 = 1 \right) [/math] so that the solution does not depend on the measurement scale.

It can be helpful to rewrite

in the so-called Lagrangian form

where the exact relationship between [math] t [/math] and [math] \lambda [/math] is data dependent.

Orthonormal covariates

Some basic properties of the lasso estimator can now be considered.

Assuming first that the covariates are orthonormal so that [math] ( x_i \mid x_j ) = \delta_{ij} [/math], where [math] ( \cdot \mid \cdot ) [/math] is the inner product and [math] \delta_{ij} [/math] is the Kronecker delta, or, equivalently, [math] X^T X = I [/math], then using subgradient methods it can be shown that

[math] S_\alpha [/math] is referred to as the soft thresholding operator, since it translates values towards zero (making them exactly zero if they are small enough) instead of setting smaller values to zero and leaving larger ones untouched as the hard thresholding operator, often denoted [math] H_\alpha [/math], would.

In ridge regression the objective is to minimize

yielding

It can also be compared to regression with best subset selection, in which the goal is to minimize

where [math] \| \cdot \|_0 [/math] is the "[math] \ell^0 [/math] norm", which is defined as [math] \| z \| = m [/math] if exactly [math]m[/math] components of [math]z[/math] are nonzero. In this case, it can be shown that

where [math] H_\alpha [/math] is the so-called hard thresholding function and [math] \mathrm{I} [/math] is an indicator function (it is 1 if its argument is true and 0 otherwise).

Therefore, the lasso estimates share features of both ridge and best subset selection regression since they both shrink the magnitude of all the coefficients, like ridge regression and set some of them to zero, as in the best subset selection case. Additionally, while ridge regression scales all of the coefficients by a constant factor, lasso instead translates the coefficients towards zero by a constant value and sets them to zero if they reach it.

In one special case two covariates, say [math]j[/math] and [math]k[/math], are identical for each observation, so that [math] x_{(j)} = x_{(k)} [/math], where [math] x_{(j),i} = x_{(k),i} [/math]. Then the values of [math] \beta_j [/math] and [math] \beta_k [/math] that minimize the lasso objective function are not uniquely determined. In fact, if some [math] \hat{\beta} [/math] in which [math] \hat{\beta}_j \hat{\beta}_k \geq 0 [/math], then if [math] s \in [0,1] [/math] replacing [math] \hat{\beta}_j [/math] by [math] s ( \hat{\beta}_j + \hat{\beta}_k ) [/math] and [math] \hat{\beta}_k [/math] by [math] (1 - s ) ( \hat{\beta}_j + \hat{\beta}_k ) [/math], while keeping all the other [math] \hat{\beta}_i [/math] fixed, gives a new solution, so the lasso objective function then has a continuum of valid minimizers.[11] Several variants of the lasso, including the Elastic net regularization, have been designed to address this shortcoming.

Geometric interpretation

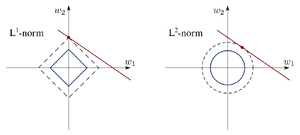

Lasso can set coefficients to zero, while the superficially similar ridge regression cannot. This is due to the difference in the shape of their constraint boundaries. Both lasso and ridge regression can be interpreted as minimizing the same objective function

but with respect to different constraints[math] \| \beta \|_1 \leq t [/math] for lasso and [math] \| \beta \|_2^2 \leq t [/math] for ridge. The figure shows that the constraint region defined by the [math] \ell^1 [/math] norm is a square rotated so that its corners lie on the axes (in general a cross-polytope), while the region defined by the [math] \ell^2 [/math] norm is a circle (in general an [math]n[/math]-sphere), which is rotationally invariant and, therefore, has no corners. As seen in the figure, a convex object that lies tangent to the boundary, such as the line shown, is likely to encounter a corner (or a higher-dimensional equivalent) of a hypercube, for which some components of [math] \beta [/math] are identically zero, while in the case of an n-sphere, the points on the boundary for which some of the components of [math] \beta [/math] are zero are not distinguished from the others and the convex object is no more likely to contact a point at which some components of [math] \beta [/math] are zero than one for which none of them are.

Making λ easier to interpret with an accuracy-simplicity tradeoff

The lasso can be rescaled so that it becomes easy to anticipate and influence the degree of shrinkage associated with a given value of [math] \lambda [/math].[12] It is assumed that [math]X[/math] is standardized with z-scores and that [math]y[/math] is centered (zero mean). Let [math]\beta_0[/math] represent the hypothesized regression coefficients and let [math]b_{OLS}[/math] refer to the data-optimized ordinary least squares solutions. We can then define the Lagrangian as a tradeoff between the in-sample accuracy of the data-optimized solutions and the simplicity of sticking to the hypothesized values.[13] This results in

where [math] q_i [/math] is specified below. The first fraction represents relative accuracy, the second fraction relative simplicity, and [math]\lambda[/math] balances between the two.

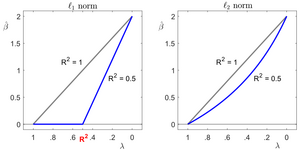

Given a single regressor, relative simplicity can be defined by specifying [math]q_i[/math] as [math]|b_{OLS}-\beta_{0}|[/math], which is the maximum amount of deviation from [math]\beta_0[/math] when [math] \lambda=0 [/math]. Assuming that [math]\beta_{0}=0[/math], the solution path can be defined in terms of [math]R^2[/math]:

If [math]\lambda=0[/math], the ordinary least squares solution (OLS) is used. The hypothesized value of [math]\beta_0=0[/math] is selected if [math]\lambda[/math] is bigger than [math]R^2[/math]. Furthermore, if [math]R^2=1[/math], then [math]\lambda[/math] represents the proportional influence of [math]\beta_0=0[/math]. In other words, [math]\lambda\times100\%[/math] measures in percentage terms the minimal amount of influence of the hypothesized value relative to the data-optimized OLS solution.

If an [math]\ell_2[/math]-norm is used to penalize deviations from zero given a single regressor, the solution path is given by

[math]b_{\ell_2}=\bigg(1+\frac{\lambda}{R^{2}(1-\lambda)}\bigg)^{-1}b_{OLS}[/math]. Like [math]b_{\ell_1}[/math], [math]b_{\ell_2}[/math] moves in the direction of the point [math](\lambda = R^2, b=0)[/math] when [math]\lambda[/math] is close to zero; but unlike [math]b_{\ell_1}[/math], the influence of [math]R^2[/math] diminishes in [math]b_{\ell_2}[/math] if [math]\lambda[/math] increases (see figure).

Given multiple regressors, the moment that a parameter is activated (i.e. allowed to deviate from [math]\beta_0[/math]) is also determined by a regressor's contribution to [math]R^2[/math] accuracy. First,

An [math]R^2[/math] of 75% means that in-sample accuracy improves by 75% if the unrestricted OLS solutions are used instead of the hypothesized [math]\beta_0[/math] values. The individual contribution of deviating from each hypothesis can be computed with the [math]p[/math] x [math]p[/math] matrix

where [math]\tilde y_0=y-X\beta_0[/math]. If [math]b=b_{OLS}[/math] when [math]R^2[/math] is computed, then the diagonal elements of [math]R^{\otimes}[/math] sum to [math]R^2[/math]. The diagonal [math]R^{\otimes}[/math] values may be smaller than 0 or, less often, larger than 1. If regressors are uncorrelated, then the [math]i^{th}[/math] diagonal element of [math]R^{\otimes}[/math] simply corresponds to the [math]r^2[/math] value between [math]x_i[/math] and [math]y[/math].

A rescaled version of the adaptive lasso of can be obtained by setting [math]q_{\mbox{adaptive lasso},i}=|b_{OLS,i}-\beta_{0,i}|[/math].[14] If regressors are uncorrelated, the moment that the [math]i^{th}[/math] parameter is activated is given by the [math]i^{th}[/math] diagonal element of [math]R^{\otimes}[/math]. Assuming for convenience that [math]\beta_0[/math] is a vector of zeros,

That is, if regressors are uncorrelated, [math]\lambda[/math] again specifies the minimal influence of [math]\beta_0[/math]. Even when regressors are correlated, the first time that a regression parameter is activated occurs when [math]\lambda[/math] is equal to the highest diagonal element of [math]R^{\otimes}[/math].

These results can be compared to a rescaled version of the lasso by defining [math]q_{\mbox{lasso},i}=\frac{1}{p} \sum_{l} |b_{OLS,l}-\beta_{0,l}|[/math], which is the average absolute deviation of [math]b_{OLS}[/math] from [math]\beta_0[/math]. Assuming that regressors are uncorrelated, then the moment of activation of the [math]i^{th}[/math] regressor is given by

For [math]p=1[/math], the moment of activation is again given by [math]\tilde \lambda_{\text{lasso},i}=R^2[/math]. If [math]\beta_0[/math] is a vector of zeros and a subset of [math]p_B[/math] relevant parameters are equally responsible for a perfect fit of [math]R^2=1[/math], then this subset is activated at a [math]\lambda[/math] value of [math]\frac{1}{p}[/math]. The moment of activation of a relevant regressor then equals [math]\frac{1}{p}\frac{1}{\sqrt{p_B}}p_B\frac{1}{\sqrt{p_B}}=\frac{1}{p}[/math]. In other words, the inclusion of irrelevant regressors delays the moment that relevant regressors are activated by this rescaled lasso. The adaptive lasso and the lasso are special cases of a '1ASTc' estimator. The latter only groups parameters together if the absolute correlation among regressors is larger than a user-specified value.[12]

Choice of regularization parameter

Choosing the regularization parameter ([math]\lambda[/math]) is a fundamental part of lasso. A good value is essential to the performance of lasso since it controls the strength of shrinkage and variable selection, which, in moderation can improve both prediction accuracy and interpretability. However, if the regularization becomes too strong, important variables may be omitted and coefficients may be shrunk excessively, which can harm both predictive capacity and inferencing. Cross-validation is often used to find the regularization parameter.

Information criteria such as the Bayesian information criterion (BIC) and the Akaike information criterion (AIC) might be preferable to cross-validation, because they are faster to compute and their performance is less volatile in small samples.[15] An information criterion selects the estimator's regularization parameter by maximizing a model's in-sample accuracy while penalizing its effective number of parameters/degrees of freedom. Zou et al. proposed to measure the effective degrees of freedom by counting the number of parameters that deviate from zero.[16] The degrees of freedom approach was considered flawed by Kaufman and Rosset[17] and Janson et al.,[18] because a model's degrees of freedom might increase even when it is penalized harder by the regularization parameter. As an alternative, the relative simplicity measure defined above can be used to count the effective number of parameters.[15] For the lasso, this measure is given by

, which monotonically increases from zero to [math] p [/math] as the regularization parameter decreases from [math] \infty [/math] to zero.

References

- Kennedy, Peter (2003). A Guide to Econometrics (Fifth ed.). Cambridge: The MIT Press. pp. 205–206. ISBN 0-262-61183-X.

- Gruber, Marvin (1998). Improving Efficiency by Shrinkage: The James–Stein and Ridge Regression Estimators. Boca Raton: CRC Press. pp. 7–15. ISBN 0-8247-0156-9.

- For the choice of [math]\lambda[/math] in practice, see "Choosing Ridge Parameter for Regression Problems" (2005). Communications in Statistics – Theory and Methods 34 (5): 1177–1182. doi:.

- Ng, Andrew Y. (2004). Feature selection, L1 vs. L2 regularization, and rotational invariance (PDF). Proc. ICML.

- "LIBLINEAR: A library for large linear classification" (2008). Journal of Machine Learning Research 9: 1871–1874.

- "Online nonnegative matrix factorization with robust stochastic approximation" (2012). IEEE Transactions on Neural Networks and Learning Systems 23 (7): 1087–1099. doi:. PMID 24807135.

- 7.0 7.1 7.2 "Linear inversion of band-limited reflection seismograms." (1986). SIAM Journal on Scientific and Statistical Computing 7 (4): 1307–1330. SIAM. doi:.

- 8.0 8.1 8.2 8.3 8.4 Tibshirani, Robert (1996). "Regression Shrinkage and Selection via the lasso". Journal of the Royal Statistical Society 58 (1): 267–88. Wiley.

- Tibshirani, Robert (1997). "The lasso Method for Variable Selection in the Cox Model". Statistics in Medicine 16 (4): 385–395. doi:. PMID 9044528.

- Breiman, Leo (1995). "Better Subset Regression Using the Nonnegative Garrote". Technometrics 37 (4): 373–84. doi:.

- "Regularization and Variable Selection via the Elastic Net" (2005). Journal of the Royal Statistical Society 67 (2): 301–20. Wiley. doi:.

- 12.0 12.1 Hoornweg, Victor (2018). "Chapter 8". Science: Under Submission. Hoornweg Press. ISBN 978-90-829188-0-9.

- "Accelerating Big Data Analysis through LASSO-Random Forest Algorithm in QSAR Studies" (October 2021). Bioinformatics 37 (19): 469–475. doi:. ISSN 1367-4803.

- Zou, Hui (2006). "The Adaptive Lasso and Its Oracle Properties" (PDF).

- 15.0 15.1 Hoornweg, Victor (2018). "Chapter 9". Science: Under Submission. Hoornweg Press. ISBN 978-90-829188-0-9.

- "On the 'Degrees of Freedom' of the Lasso" (2007). The Annals of Statistics 35 (5): 2173–2792. doi:.

- "When does more regularization imply fewer degrees of freedom? Sufficient conditions and counterexamples" (2014). Biometrika 101 (4): 771–784. doi:. ISSN 0006-3444.

- "Effective degrees of freedom: a flawed metaphor" (2015). Biometrika 102 (2): 479–485. doi:. ISSN 0006-3444. PMID 26977114.

Notes

- In statistics, the method is known as ridge regression, in machine learning it and its modifications are known as weight decay, and with multiple independent discoveries, it is also variously known as the Tikhonov–Miller method, the Phillips–Twomey method, the constrained linear inversion method, L2 regularization, and the method of linear regularization. It is related to the Levenberg–Marquardt algorithm for non-linear least-squares problems.

Wikipedia References

- Wikipedia contributors. "Tikhonov regularization". Wikipedia. Wikipedia. Retrieved 17 August 2022.

- Wikipedia contributors. "Lasso (statistics)". Wikipedia. Wikipedia. Retrieved 17 August 2022.