Partial Identification of Structural Models

In this section I focus on the literature concerned with learning features of structural econometric models. These are models where economic theory is used to postulate relationships among observable outcomes [math]\ey[/math], observable covariates [math]\ex[/math], and unobservable variables [math]\nu[/math]. For example, economic theory may guide assumptions on economic behavior (e.g., utility maximization) and equilibrium that yield a mapping from [math](\ex,\nu)[/math] to [math]\ey[/math]. The researcher is interested in learning features of these relationships (e.g., utility function, distribution of preferences), and to this end may supplement the data and economic theory with functional form assumptions on the mapping of interest and distributional assumptions on the observable and unobservable variables. The earlier literature on partial identification of features of structural models includes important examples of nonparametric analysis of random utility models and revealed preference extrapolation, e.g. [1], [2], [3], [4], [5], [6], and others.

The earlier literature also addresses semiparametric analysis, where the underlying models are specified up to parameters that are finite dimensional (e.g., preference parameters) and parameters that are infinite dimensional (e.g., distribution functions); important examples include [7], [8], [9](Section 2.10), [10], [11], [12], [13], [14], [15], [16], [17], [18], and others. Contrary to the nonparametric bounds results discussed in Section, and especially in the case of semiparametric models, structural partial identification often yields an identification region that is not constructive.[Notes 1] Indeed, the boundary of the set is not obtained in closed form as a functional of the distribution of the observable data. Rather, the identification region can often be characterized as a level set of a properly specified criterion function. The recent spark of interest in partial identification of structural microeconometric models was fueled by the work of [19], [20] and [21], and [22]. Each of these papers has advanced the literature in fundamental ways, studying conceptually very distinct problems. [19] are concerned with partial identification of the decision process yielding binary outcomes in a semiparametric model, when one of the explanatory variables is interval valued.

Hence, the root cause of the identification problem they study is that the data is incomplete.[Notes 2]

[20] and [21] are concerned with identification (and estimation) of simultaneous equation models with dummy endogenous variables which are representations of two-player entry games with multiple equilibria.[Notes 3] [22] are concerned with nonparametric identification and estimation of the distribution of valuations in a model of English auctions under weak assumptions on bidders' behavior. In both cases, the root cause of the identification problem is that the structural model is incomplete. This is because the model makes multiple predictions for the observed outcome variables (respectively: the players' actions; and the bidders' bids), but does not specify how one of them is selected to yield the observed data.

Set-valued predictions for the observable outcome (endogenous variables) are a key feature of partially identified structural models. The goal of this section is to explain how they result in a wide array of theoretical frameworks, and how sharp identification regions can be characterized using a unified approach based on random set theory. Although the work of [19], [20] and [21], and [22] has spurred many of the developments discussed in this section, for pedagogical reasons I organize the presentation based on application topic rather than chronologically. The work of [23] and [24] further stimulated a large empirical literature that applies partial identification methods to a wide array of questions of substantive economic importance, to which I return in Section Further Theoretical Advances and Empirical Applications.

Discrete Choice in Single Agent Random Utility Models

Let [math]\cI[/math] denote a population of decision makers and [math]\cY=\{c_1,\dots,c_{|\cY|}\}[/math] a finite universe of potential alternatives (feasible set henceforth). Let [math]\bU[/math] be a family of real valued functions defined over the elements of [math]\cY[/math]. Let [math]\in^* [/math] denote "is chosen from." Then observed choice is consistent with a random utility model if there exists a function [math]\bu_i[/math] drawn from [math]\bU[/math] according to some probability distribution, such that [math]\P(c \in^* C)=\P(\bu_i(c) \ge \bu_i(b)\forall b \in C)[/math] for all [math]c\in C[/math], all nonempty sets [math]C \subset \cY[/math], and all [math]i\in\cI[/math] [1]. See [25](Chapter 13) for a textbook presentation of this class of models, and [26] for a review of sufficient conditions for point identification of nonparametric and semiparametric limited dependent variables models. As in the seminal work of [27], assume that the decision makers and alternatives are characterized by observable and unobservable vectors of real valued attributes. Denote the observable attributes by [math]\ex_i \equiv \{\ex_i^1,(\ex_{ic}^2,c\in\cY)\},i\in\cI[/math]. These include attribute vectors [math]\ex_i^1[/math] that are specific to the decision maker, as well as attribute vectors [math]\ex_{ic}^2[/math] that include components that are specific to the alternative and components that are indexed by both. Denote the unobservable attributes (preferences) by [math]\nu_i\equiv(\zeta_i,\{\epsilon_{ic},c\in\cY\}),i\in\cI[/math]. These are idiosyncratic to the decision maker and similarly may include alternative and decision maker specific terms. Denote by [math]\cX,\cV[/math] the supports of [math]\ex,\nu[/math], respectively. 
In what follows, I label "standard" a random utility model that maintains some form of exogeneity for [math]\ex_i[/math] (e.g., mean or quantile or statistical independence with [math]\nu_i[/math]) and presupposes observation of data that include [math]\{(\eC_i,\ey_i,\ex_i):\ey_i \in^* \eC_i\}, i=1,\dots,n[/math], with [math]\eC_i[/math] the choice set faced by decision maker [math]i[/math] and [math]|\eC_i|\ge 2[/math] (e.g., [28](Assumption 1)). Often it is also assumed that all members of the population face the same choice set, [math]\eC_i=D[/math] for all [math]i\in\cI[/math] and some known [math]D\subseteq\cY[/math], although this requirement is not critical to identification analysis.
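The defining equality [math]\P(c \in^* C)=\P(\bu_i(c) \ge \bu_i(b)\forall b \in C)[/math] can be illustrated by simulation. The following is a minimal sketch, not part of the original analysis: the family [math]\bU[/math] is assumed, purely for illustration, to consist of utilities equal to an alternative-specific mean plus independent standard normal noise, and the names `choice_probabilities`, `draw_utility`, and `MEANS` are hypothetical.

```python
import random

def choice_probabilities(choice_set, draw_utility, n_draws=20000, seed=0):
    """Monte Carlo estimate of P(c is chosen from C) = P(u(c) >= u(b) for all b in C)."""
    rng = random.Random(seed)
    counts = {c: 0 for c in choice_set}
    for _ in range(n_draws):
        u = draw_utility(rng)                       # one utility function drawn from the family
        best = max(choice_set, key=lambda c: u[c])  # utility-maximizing alternative in C
        counts[best] += 1
    return {c: counts[c] / n_draws for c in choice_set}

# Assumption for illustration only: u(c) = mean_c + standard normal noise.
MEANS = {"c1": 0.0, "c2": 0.5, "c3": 1.0}

def draw_utility(rng):
    return {c: m + rng.gauss(0.0, 1.0) for c, m in MEANS.items()}

probs = choice_probabilities(["c1", "c2", "c3"], draw_utility)
print(probs)
```

Because the noise is continuous, ties occur with probability zero and the estimated probabilities sum to one over the choice set.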

Semiparametric Binary Choice Models with Interval Valued Covariates

[19] provide inference methods for nonparametric, semiparametric, and parametric conditional expectation functions when one of the conditioning variables is interval valued. I have discussed their nonparametric and parametric sharp bounds on conditional expectations with interval valued covariates in Identification Problems and, and Theorems SIR- and SIR-, respectively. Here I focus on their analysis of semiparametric binary choice models. Compared to the generic notation set forth at the beginning of Section Discrete Choice in Single Agent Random Utility Models, I let [math]\eC_i=\cY=\{0,1\}[/math] for all [math]i\in\cI[/math], and with some abuse of notation I denote the vector of observed covariates [math](\xL,\xU,\ew)[/math].

Let [math](\ey,\xL,\xU,\ew)\sim\sP[/math] be observable random variables in [math]\{0,1\}\times\R\times\R\times\R^d[/math], [math]d \lt \infty[/math], and let [math]\ex\in\R[/math] be an unobservable random variable. Let [math]\ey=\one(\ew\theta + \delta\ex +\epsilon \gt 0)[/math]. Assume [math]\delta \gt 0[/math], and further normalize [math]\delta=1[/math] because the threshold-crossing condition is invariant to the scale of the parameters. Here [math]\epsilon[/math] is an unobserved heterogeneity term with continuous distribution conditional on [math](\ew,\ex,\xL,\xU)[/math], [math](\ew,\ex,\xL,\xU)[/math]-a.s., and [math]\theta\in\Theta\subset\R^d[/math] is a parameter vector representing decision makers’ preferences, with compact parameter space [math]\Theta[/math]. Assume that [math]\sR[/math], the joint distribution of [math](\ey,\ex,\xL,\xU,\ew,\epsilon)[/math], is such that [math]\sR(\xL\le\ex\le\xU)=1[/math]; [math] \sR(\epsilon |\ew,\ex,\xL,\xU)=\sR(\epsilon|\ew,\ex)[/math]; and for a specified [math]\alpha \in (0,1)[/math], [math]\sq_{\sR}^\epsilon(\alpha,\ew,\ex)=0[/math] and [math]\sR(\epsilon \le 0|\ew,\ex)=\alpha[/math], [math](\ew,\ex)[/math]-a.s.. In the absence of additional information, what can the researcher learn about [math]\theta[/math]?

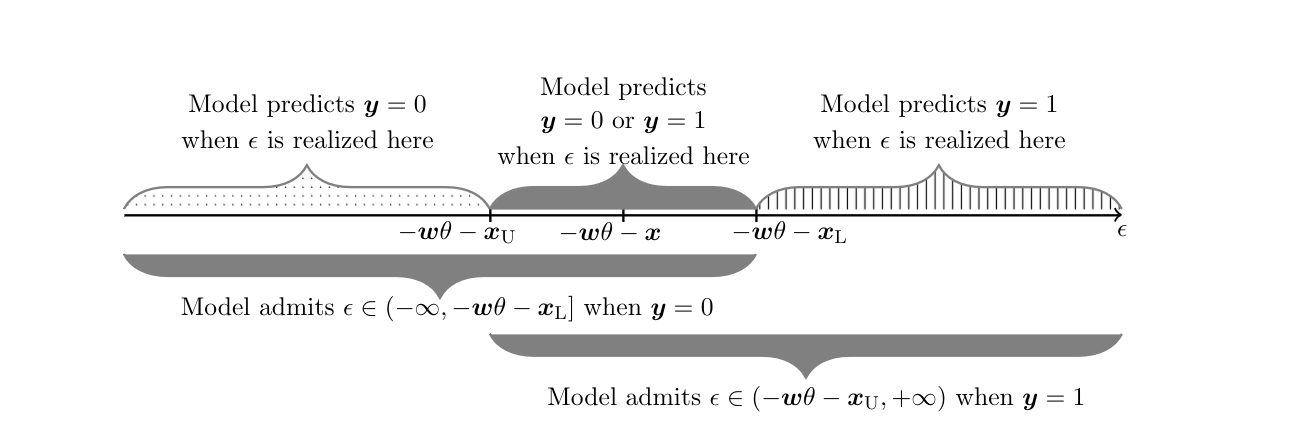

Compared to Identification Problem, here one continues to impose [math]\ex\in[\xL,\xU][/math] a.s. The sign restriction on [math]\delta[/math] replaces the monotonicity restriction (M) in Identification Problem, but does not imply it unless the distribution of [math]\epsilon[/math] is independent of [math]\ex[/math] conditional on [math]\ew[/math]. The quantile independence restriction is inspired by [29]. For given [math]\theta\in\Theta[/math], this model yields set valued predictions because [math]\ey=1[/math] can occur whenever [math]\epsilon \gt -\ew\theta-\xU[/math], whereas [math]\ey=0[/math] can occur whenever [math]\epsilon\le -\ew\theta-\xL[/math], and [math]-\ew\theta-\xU \le -\ew\theta-\xL[/math]. Conversely, observation of [math]\ey=1[/math] allows one to conclude that [math]\epsilon\in(-\ew\theta-\xU,+\infty)[/math], whereas observation of [math]\ey=0[/math] allows one to conclude that [math]\epsilon\in(-\infty,-\ew\theta-\xL][/math], and these regions of possible realizations of [math]\epsilon[/math] overlap. In contrast, when [math]\ex[/math] is observed the prediction is unique because the value [math]-\ew\theta-\ex[/math] partitions the space of realizations of [math]\epsilon[/math] in two disjoint sets, one associated with [math]\ey=1[/math] and the other with [math]\ey=0[/math]. Figure depicts the model's set-valued predictions for [math]\ey[/math] given [math](\ew,\xL,\xU)[/math] as a function of [math]\epsilon[/math], and the model's set valued predictions for [math]\epsilon[/math] given [math](\ew,\xL,\xU)[/math] as a function of [math]\ey[/math].[Notes 4] Why does this set-valued prediction hinder point identification? The reason is that the distribution of the observable data relates to the model structure in an incomplete manner. 
The model predicts [math]\sM(\ey=1|\ew,\xL,\xU)=\int \sR(\ey=1|\ew,\ex,\xL,\xU)d\sR(\ex|\ew,\xL,\xU)=\int \sR(\epsilon \gt -\ew\theta-\ex|\ew,\ex)d\sR(\ex|\ew,\xL,\xU),(\ew,\xL,\xU)[/math]-a.s. Because the distribution [math]\sR(\ex|\ew,\xL,\xU)[/math] is left completely unspecified, one can find multiple values for [math](\theta,\sR(\ex|\ew,\xL,\xU),\sR(\epsilon|\ew,\ex))[/math], satisfying the assumptions in Identification Problem, such that [math]\sM(\ey=1|\ew,\xL,\xU)=\sP(\ey=1|\ew,\xL,\xU),(\ew,\xL,\xU)[/math]-a.s. Nonetheless, in general, not all values of [math]\theta\in\Theta[/math] can be paired with some [math]\sR(\ex|\ew,\xL,\xU)[/math] and [math]\sR(\epsilon|\ew,\ex)[/math] so that they are compatible with [math]\sP(\ey=1|\ew,\xL,\xU),(\ew,\xL,\xU)[/math]-a.s. and with the maintained assumptions. Hence, [math]\theta[/math] can be partially identified using the information in the model and observed data.
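The set-valued predictions described above can be written out directly. The following is a minimal sketch under the normalization [math]\delta=1[/math]; the function names and the numerical values are hypothetical, and `index` stands for the scalar [math]\ew\theta[/math].

```python
import math

def predicted_outcomes(index, x_lo, x_hi, eps):
    """Values of y consistent with y = 1(index + x + eps > 0) for some x in [x_lo, x_hi]."""
    outcomes = set()
    if eps > -index - x_hi:    # y = 1 is possible when x is as large as x_hi
        outcomes.add(1)
    if eps <= -index - x_lo:   # y = 0 is possible when x is as small as x_lo
        outcomes.add(0)
    return outcomes

def eps_region(y, index, x_lo, x_hi):
    """Bounds (lower, upper) on eps implied by observing y.
    For y = 1 the lower bound is excluded; for y = 0 the upper bound is included."""
    if y == 1:
        return (-index - x_hi, math.inf)
    return (-math.inf, -index - x_lo)

# Hypothetical values: w*theta = 0 and x known only to lie in [-1, 1].
for eps in (-2.0, 0.0, 2.0):
    print(eps, predicted_outcomes(0.0, -1.0, 1.0, eps))
print(eps_region(1, 0.0, -1.0, 1.0), eps_region(0, 0.0, -1.0, 1.0))
```

For realizations of [math]\epsilon[/math] between the two thresholds the function returns both outcomes, which is the overlap that prevents the model from delivering a unique prediction.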

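Under the assumptions of Identification Problem, the sharp identification region for [math]\theta[/math] is

[math]\idr{\theta}=\Big\{\vartheta\in\Theta:\ \sP\big((\ew,\xL,\xU):\, \{0\le\ew\vartheta+\xL\cap \sP(\ey=1|\ew,\xL,\xU)\le 1-\alpha\}\cup \{\ew\vartheta+\xU\le 0\cap \sP(\ey=1|\ew,\xL,\xU)\ge 1-\alpha\}\big)=0\Big\}.[/math]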

For any [math]\vartheta\in\Theta[/math], define the set of possible values for the unobservable associated with the possible realizations of [math](\ey,\ew,\xL,\xU)[/math], illustrated in Figure, as[Notes 5]

Any given [math]\vartheta\in\Theta[/math], [math]\vartheta\neq\theta[/math], violates the above conditions if and only if [math]\sP\big((\ew,\xL,\xU):\, \{0\le\ew\vartheta+\xL\cap \sP(\ey=1|\ew,\xL,\xU)\le 1-\alpha\}\cup \{\ew\vartheta+\xU\le 0\cap \sP(\ey=1|\ew,\xL,\xU)\ge 1-\alpha\}\big) \gt 0[/math].
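When [math](\ew,\xL,\xU)[/math] has finite support, the violation condition just stated can be checked point by point, and candidate values that never trigger it are retained. The sketch below assumes a scalar [math]\ew[/math], a known conditional probability [math]\sP(\ey=1|\ew,\xL,\xU)[/math] at each support point, and a finite grid of candidates; all names and numbers are hypothetical and serve only to illustrate the logic.

```python
def violates(theta, support, alpha):
    """True if theta triggers the violation condition at a support point with positive mass.

    support: list of tuples (w, x_lo, x_hi, p_y1, mass), where w is a tuple of covariates
    and p_y1 stands for P(y = 1 | w, x_lo, x_hi).
    """
    for w, x_lo, x_hi, p_y1, mass in support:
        if mass <= 0:
            continue
        index = sum(wj * tj for wj, tj in zip(w, theta))
        if 0 <= index + x_lo and p_y1 <= 1 - alpha:
            return True
        if index + x_hi <= 0 and p_y1 >= 1 - alpha:
            return True
    return False

# Hypothetical two-point support with alpha = 0.5 (median restriction).
support = [
    ((1.0,), 0.0, 1.0, 0.8, 0.5),
    ((1.0,), -2.0, -1.0, 0.2, 0.5),
]
grid = [k / 2 for k in range(-6, 7)]  # candidate values -3.0, -2.5, ..., 3.0
region = [t for t in grid if not violates((t,), support, alpha=0.5)]
print(region)
```

In this example the first support point rules out candidates at or below -1 and the second rules out candidates at or above 2, so the retained grid points lie strictly between those values. A grid search of this kind only approximates the region and ignores sampling uncertainty in the conditional probabilities.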

Key Insight:

The analysis in [19] systematically studies what can be learned under increasingly strong sets of assumptions.

These include both assumptions that constrain the model from fully nonparametric to semiparametric to parametric, as well as assumptions that constrain the distribution of the observable covariates.

For example, [19](Corollary to Proposition 2) provide sufficient conditions on the joint distribution of [math](\ew,\xL,\xU)[/math] that allow for identification of the sign of components of [math]\theta[/math], as well as for point identification of [math]\theta[/math].[Notes 7]

The careful analysis of the identifying power of increasingly stronger assumptions is the pillar of the partial identification approach to empirical research proposed by Manski, as illustrated in Section.

The work of [19] was the first example of this kind in semiparametric structural models.

Revisiting the study of Identification Problem in [19] nearly 20 years later yields important insights on the differences between point and partial identification analysis. It is instructive to take as a point of departure the analysis of [29], which under the additional assumption that [math](\ey,\ew,\ex)[/math] is observed yields

In this case, [math]\theta[/math] is identified relative to [math]\vartheta\in\Theta[/math] if

[19] extend this reasoning to the case that [math]\ex[/math] is unobserved, but known to satisfy [math]\ex\in [\xL,\xU][/math] a.s. The first part of their analysis, collected in their Proposition 2, characterizes the collection of values that cannot be distinguished from [math]\theta[/math] on the basis of [math]\sP(\ew,\xL,\xU)[/math] alone, through a clear generalization of \eqref{eq:manski85}:

It is worth emphasizing that the characterization in \eqref{eq:region:man:tam02:potential} depends on [math]\theta[/math], and makes no use of the information in [math]\sP(\ey|\ew,\xL,\xU)[/math]. The Corollary to Proposition 2 yields conditions on [math]\sP(\ew,\xL,\xU)[/math] under which either the sign of components of [math]\theta[/math], or [math]\theta[/math] itself, can be identified, regardless of the distribution of [math]\ey|\ew,\xL,\xU[/math]. [19](Lemma 1) provide a second characterization, which presupposes knowledge of [math]\sP(\ey,\ew,\xL,\xU)[/math], yields a set smaller than the one in \eqref{eq:region:man:tam02:potential}, and coincides with the result in Theorem SIR-. [19] use the same notation for the two sets, although the sets are conceptually and mathematically distinct.[Notes 8] The result in Theorem SIR- is due to [19](Lemma 1), but the proof provided here is new, as is the use of random set theory in this application.[Notes 9]

Key Insight: The preceding discussion allows me to draw a novel connection between the two characterizations in [19], and the distinction put forward by [31] and [32](Chapter XXX in this Volume, Definition 2) in partial identification between potential observational equivalence and observational equivalence.[Notes 10] Applying [31]'s definition, parameter vectors [math]\theta[/math] and [math]\vartheta[/math] are potentially observationally equivalent if there exists some distribution of [math]\ey|\ew,\xL,\xU[/math] for which conditions \eqref{eq:key_sharp:man:tam02_1}-\eqref{eq:key_sharp:man:tam02_2} hold. Simple algebra confirms that this yields the region in \eqref{eq:region:man:tam02:potential}. This notion of potential observational equivalence parallels one of the notions used to obtain sufficient conditions for point identification in the semiparametric literature (as in, e.g. [29]). Both notions, as explained in [32](Section 4.1), make no reference to the conditional distribution of outcomes given covariates delivered by the process being studied. To obtain that parameters [math]\theta[/math] and [math]\vartheta[/math] are observationally equivalent one requires instead that conditions \eqref{eq:key_sharp:man:tam02_1}-\eqref{eq:key_sharp:man:tam02_2} hold for the observed distribution [math]\sP(\ey=1|\ew,\xL,\xU)[/math] (as opposed to "for some distribution" as in the case of potential observational equivalence). This yields the sharp identification region in \eqref{eq:ThetaI_man:tam02_binary}.

[33] studies random expected utility models, where agents choose the alternative that maximizes their expected utility. The core difference with standard models is that [33] does not fully specify the subjective beliefs that agents use to form their expectations, but only a set of such beliefs. [33] shows that the resulting, partially identified, discrete choice model can be formulated similarly to how [19] treat interval valued covariates, and leverages their results to obtain bounds on preference parameters.[Notes 11]

[34] consider a different but closely related model to the semiparametric binary response model studied by [19]. They assume that an instrumental variable [math]\ez[/math] is available, that [math]\epsilon[/math] is independent of [math]\ex[/math] conditional on [math](\ew,\ez)[/math], and that [math]Corr(\ez,\epsilon)=0[/math]. They assume that the distribution of [math]\ex[/math] is absolutely continuous with support [math][v_1,v_k][/math], and that [math]\ex[/math] is not a deterministic linear function of [math](\ew,\ez)[/math]. They consider the case that [math]\ex[/math] is unobserved but known to belong to one of the fixed (and known) intervals [math][v_i,v_{i+1})[/math], [math]i=1,\dots,k-1[/math], with [math]\sR[\ex\in[v_i,v_{i+1})|\ew,\ez] \gt 0[/math] almost surely for all [math]i[/math]. Finally, they assume that [math](-\ew\theta-\epsilon)\in [v_1,v_k][/math] with probability one. They do not, however, make quantile independence assumptions. Their point of departure is the fact that under these conditions, if [math]\ex[/math] were observed, one could employ a transformation proposed by [35] for the binary outcome [math]\ey[/math], such that [math]\theta[/math] can be identified through a simple linear moment condition. Specifically, let

where [math]f_\ex(\cdot|\ew,\ez)[/math] is the conditional density function of [math]\ex[/math]. Then, using the assumption that [math]\ez[/math] and [math]\epsilon[/math] are uncorrelated, one has

With interval valued [math]\ex[/math], [34] denote by [math]\ex^*[/math] the random variable that takes value [math]i\in\{1,\dots,k-1\}[/math] if [math]\ex\in[v_i,v_{i+1})[/math], so that the observed data are draws from the joint distribution of [math](\ey,\ew,\ez,\ex^*)[/math].

They let [math]\delta(\ex^*)=v_{\ex^*+1}-v_{\ex^*}[/math] denote the length of the [math]\ex^*[/math]-th interval, and define the transformed outcome variable:

The assumptions on [math]\ex[/math] yield that, given [math]\ez[/math] and [math]\ew[/math], [math]\epsilon[/math] does not depend on [math]\ex^*[/math]. Moreover, [math]\sP(\ey=1|\ex^*,\ew,\ez)[/math] is non-decreasing in [math]\ex^*[/math] and [math]\sF_\epsilon(\cdot|\ez,\ew,\ex,\ex^*)=\sF_\epsilon(\cdot|\ez,\ew)[/math]. [34] show that the sharp identification region for [math]\theta[/math] is

where [math]\E_\sP(\ez \ey^* + \ez \eU)[/math] is the Aumann (or selection) expectation of the random interval [math]\ez \ey^* + \ez \eU[/math], see Definition, with

In this expression, [math]r_{\ex^*}(\ew,\ez)\equiv\sP(\ey=1|\ex^*,\ew,\ez)[/math] and by convention [math]r_0(\ew,\ez)=0[/math] and [math]r_k(\ew,\ez)=1[/math], see [34](Theorem 4). If [math]r_i(\ew,\ez),i=0,\dots,k[/math], were observed, this characterization would be very similar to the one provided by [36] for Identification Problem, see equation eq:ThetaI_BLP. However, these random functions need to be estimated. While the first-stage estimation of [math]r_i(\ew,\ez),i=0,\dots,k[/math], does not affect the identification arguments, it does complicate inference, see [37] and the discussion in Section.

Endogenous Explanatory Variables

Whereas the standard random utility model presumes some form of exogeneity for [math]\ex[/math], in practice often some explanatory variables are endogenous. This problem has been addressed in the literature to obtain point identification of the model through a combination of several assumptions, including large support conditions, special regressors, control function restrictions, and more (see, e.g., [38], [39], [35], [40]). [41] analyze the distinct but related problem of identification in a censored regression model with endogenous explanatory variables, and provide sufficient conditions for point identification.[Notes 12] Here I discuss how to carry out identification analysis in the absence of such assumptions when instrumental variables [math]\ez[/math] are available, as proposed by [42]. They consider a more general case than I do here, with a utility function that is not parametrically specified and not restricted to be separable in the unobservables. Even in that more general case, the identification analysis follows through similar steps as reported here. \begin{IP}[Discrete Choice with Endogenous Explanatory Variables]\label{IP:discrete:choice:endogenous} Let [math](\ey,\ex,\ez)\sim\sP[/math] be observable random variables in [math]\cY\times\cX\times\cZ[/math]. Let all members of the population face the same choice set [math]\cY[/math]. Suppose that each alternative has one unobservable attribute [math]\epsilon_c,c\in\cY[/math] and let [math]\nu\equiv(\epsilon_{c_1},\dots,\epsilon_{c_{|\cY|}})[/math].[Notes 13] Let [math]\nu\sim\sQ[/math] and assume that [math]\nu\independent\ez[/math]. Suppose [math]\sQ[/math] belongs to a nonparametric family of distributions [math]\cT[/math], and that the conditional distribution of [math]\nu|\ex,\ez[/math], denoted [math]\sR(\nu|\ex,\ez)[/math], is absolutely continuous with respect to Lebesgue measure with everywhere positive density on its support, [math](\ex,\ez)[/math]-a.s. 
Suppose utility is separable in unobservables and has a functional form known up to finite dimensional parameter vector [math]\theta\in\Theta\subset\R^m[/math], so that [math]\bu_i(c)=g(\ex_c;\theta)+\epsilon_c[/math], [math](\ex_c,\epsilon_c)[/math]-a.s., for all [math]c\in\cY[/math]. Maintain the normalizations [math]g(\ex_{c_{|\cY|}};\theta)=0[/math] for all [math]\theta\in\Theta[/math] and all [math]\ex\in\cX[/math], and [math]g(x_c^0;\theta)=\bar{g}[/math] for known [math](x_c^0,\bar{g})[/math] for all [math]\theta\in\Theta[/math] and [math]c\in\cY[/math].[Notes 14] Given [math](\ex,\ez,\nu)[/math], suppose [math]\ey[/math] is the utility maximizing choice in [math]\cY[/math]. In the absence of additional information, what can the researcher learn about [math](\theta,\sQ)[/math]? \qedex }} The key challenge to identification here results because the distribution of [math]\nu[/math] can vary across different values of [math]\ex[/math], both conditional and unconditional on [math]\ez[/math]. Why does this fact hinder point identification? For a given [math]\vartheta\in\Theta[/math] and for any [math]c\in\cY[/math] and [math]x\in\cX[/math], the model yields that [math]c[/math] is optimal, and hence chosen, if and only if [math]\nu[/math] realizes in the set

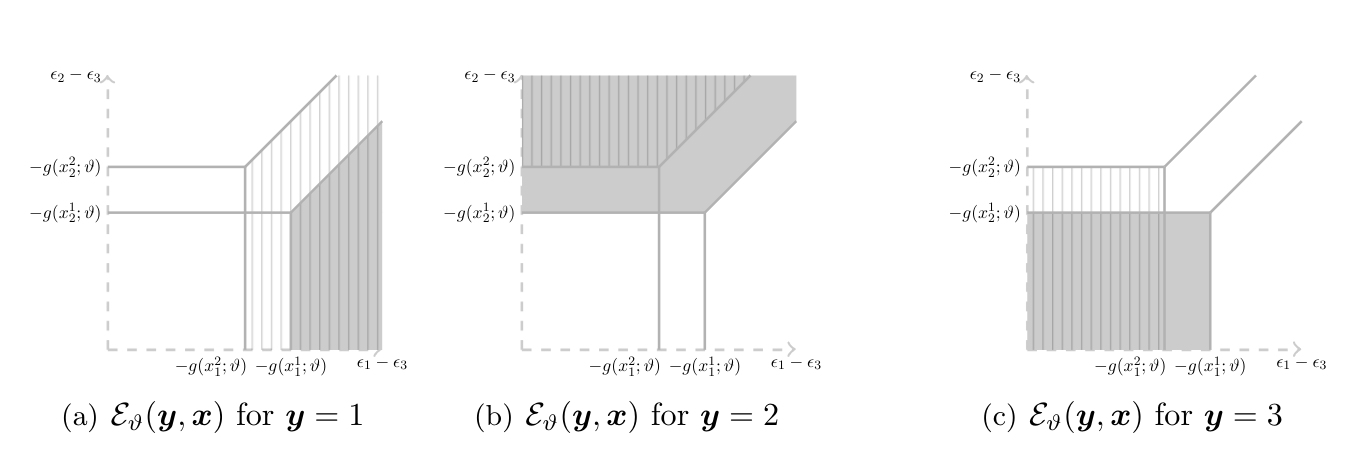

Figure plots the set [math]\cE_\vartheta(\ey,\ex)[/math] in a stylized example with [math]\cY=\{1,2,3\}[/math] and [math]\cX=\{x^1,x^2\}[/math], as a function of [math](\epsilon_1-\epsilon_3,\epsilon_2-\epsilon_3)[/math].[Notes 15] Consider the model implied distribution, denoted [math]\sM[/math] below, of the optimal choice. Then, recalling the restriction [math]\ez\independent\nu[/math], we have

Because the joint distribution of [math](\ex,\nu)[/math] conditional on [math]\ez[/math] is left completely unrestricted (other than \eqref{eq:che:ros:instrument}), one can find multiple triplets [math](\vartheta,\sQ,\sR(\nu|\ex,\ez))[/math] satisfying the maintained assumptions and with [math]\sM(c|\ex\in R_x,\ez;\vartheta)=\sP(c|\ex\in R_x,\ez)[/math] for all [math]c\in\cY[/math] and [math]R_x\subseteq\cX[/math], [math]\ez[/math]-a.s.
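The role of utility maximization in this argument can be made concrete with a small sketch. It assumes, purely for illustration, a linear index [math]g(\ex_c;\vartheta)=\vartheta\ex_c[/math] with a scalar attribute per alternative and the last alternative's attribute set to zero to respect the normalization; the names `g`, `in_E`, and `admissible_pairs` and all numerical values are hypothetical. The function `in_E` checks whether a realization of [math]\nu[/math] makes alternative [math]c[/math] optimal at covariate value [math]x[/math], and `admissible_pairs` lists the pairs [math](\ey,\ex)[/math] that a single realization of [math]\nu[/math] is compatible with.

```python
def g(x_c, theta):
    # Hypothetical functional form: linear index in a scalar attribute.
    return theta * x_c

def in_E(nu, c, x, theta):
    """True if alternative c maximizes g(x_d; theta) + nu_d over all alternatives d."""
    u = {d: g(x[d], theta) + nu[d] for d in x}
    return all(u[c] >= u[d] for d in x)

def admissible_pairs(nu, X, theta):
    """All (choice, covariate label) pairs that a given nu is compatible with."""
    return {(c, name) for name, x in X.items() for c in x if in_E(nu, c, x, theta)}

# Hypothetical example with three alternatives and two covariate values.
X = {
    "x1": {1: 1.0, 2: 0.0, 3: 0.0},
    "x2": {1: 0.0, 2: 1.0, 3: 0.0},
}
nu = {1: 0.2, 2: 0.3, 3: 0.0}
pairs = admissible_pairs(nu, X, theta=1.0)
print(pairs)
```

The same realization of [math]\nu[/math] leads to alternative 1 under the first covariate value and alternative 2 under the second. Since the model does not say which covariate value accompanies a given [math]\nu[/math], it is compatible with more than one pair of endogenous variables.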

It is instructive to compare \eqref{eq:che:ros:model:distrib}-\eqref{eq:che:ros:instrument} with the conditional logit of [27]. Under the standard assumptions, [math]\ex\independent\nu[/math] so that no instrumental variables are needed. This yields [math]\sQ(\nu)=\sR(\nu|\ex)[/math] [math]\ex[/math]-a.s., and in addition [math]\sQ[/math] is typically known, with corresponding simplifications in \eqref{eq:che:ros:model:distrib}. The resulting system of equalities can be inverted under standard order and rank conditions to yield point identification of [math]\theta[/math]. Further insights can be gained by looking at Figure. As the value of [math]\ex[/math] changes from [math]x^1[/math] to [math]x^2[/math], the region of values where, say, alternative 1 is optimal changes. When [math]\ex[/math] is exogenous, say independent of [math]\nu[/math], this yields a system of equalities relating [math](\theta,\sQ)[/math] to the observed distribution [math]\sP(\ey,\ex)[/math] which, as stated above, can be inverted to obtain point identification. When [math]\ex[/math] is endogenous, this reasoning breaks down because the conditional distribution [math]\sR(\nu|\ex,\ez)[/math] may change across realizations of [math]\ex[/math]. Figure also offers an instructive way to connect Identification Problem with the identification problem studied in Section Semiparametric Binary Choice Models with Interval Valued Covariates (as well as with those in Sections Static, Simultaneous-Move Finite Games with Multiple Equilibria through Auction Models with Independent Private Values below). In the latter, the model has set-valued predictions for the outcome variable given realizations of the covariates and unobserved heterogeneity terms, which overlap across realizations of the unobserved heterogeneity terms. 
In the problem studied here, the model has singleton-valued predictions for the outcome variable of interest [math]\ey[/math] as a function of the observable explanatory variables [math]\ex[/math] and unobservables [math]\nu[/math]. However, for given realization of [math]\nu[/math], the model admits sets of values for the endogenous variables [math](\ey,\ex)[/math], which overlap across realizations of [math]\nu[/math]. Because the model is silent on the joint distribution of [math](\ex,\nu)[/math] (except for requiring that the marginal distribution of [math]\nu[/math] does not depend on [math]\ez[/math]), partial identification results. It is possible to couple the maintained assumptions with the observed data to learn features of [math](\theta,\sQ)[/math]. Because the observed choice [math]\ey[/math] is assumed to maximize utility, for the data generating [math](\theta,\sQ)[/math] the model yields

with [math]\cE_\theta(\ey,\ex)[/math] a random closed set as per Definition. Equation \eqref{eq:che:ros:e_in_E} exhausts the modeling content of Identification Problem. Theorem (as expressed in eq:dom-c) can then be leveraged to extract its empirical content from the observed distribution [math]\sP(\ey,\ex,\ez)[/math]. As a preparation for doing so, note that for given [math]F\in\cF[/math] (with [math]\cF[/math] the collection of closed subsets of [math]\cV[/math]) and [math]\vartheta\in\Theta[/math], we have

so that this probability can be learned from the observed data.

Under the assumptions of Identification Problem, the sharp identification region for [math](\theta,\sQ)[/math] is

To simplify notation, I write [math]\cE_\vartheta\equiv\cE_\vartheta(\ey,\ex)[/math]. Let [math](\cE_\vartheta,\ex,\ez)=\{(\mathbf{e},\ex,\ez):\mathbf{e}\in\cE_\vartheta\}[/math]. If the model is correctly specified, [math](\nu,\ex,\ez)\in(\cE_\theta,\ex,\ez)[/math]-a.s. for the data generating value of [math](\theta,\sQ)[/math]. Using Theorem and Theorem 2.33 in [30], it follows that [math](\vartheta,\tilde\sQ)[/math] is observationally equivalent to [math](\theta,\sQ)[/math] if and only if

While Theorem SIR- relies on checking inequality \eqref{eq:SIR:discrete:choice:endogenous} for all [math]F\in\cF[/math], the results in [42](Theorem 2) and [30](Chapter 2) can be used to obtain a smaller collection of sets over which to verify it. In particular, if [math]\ex[/math] has a discrete distribution, it suffices to use a finite collection of sets. For example, in the case depicted in Figure with [math]\cX=\{x^1,x^2\}[/math], [42](Section 3.3 of the 2011 CeMMAP working paper version CWP39/11) show that [math]\idr{\theta,\sQ}[/math] is obtained by checking at most twelve inequalities in \eqref{eq:SIR:discrete:choice:endogenous}. The left hand side of these inequalities is a linear function of six values that the distribution [math]\tilde\sQ[/math] assigns to each of the component regions depicted in Figure (the one where [math]\cE_\vartheta(1,x^1)\cap\cE_\vartheta(1,x^2)[/math] realizes; the one where [math]\cE_\vartheta(1,x^1)\cap\cE_\vartheta(3,x^2)[/math] realizes; etc.) Hence, in this example, [math](\vartheta,\tilde\sQ)\in\idr{\theta,\sQ}[/math] if and only if [math]\tilde\sQ[/math] assigns to these six regions a probability mass such that for [math]\vartheta[/math] the twelve inequalities characterized by [42] hold.

Key Insight: A conceptual contribution of [42] is to show that one can frame models with endogenous explanatory variables as incomplete models. Incompleteness here results from the fact that the model does not specify how the endogenous variables [math]\ex[/math] are determined. One can then think of these as models with set-valued predictions for the endogenous variables ([math]\ey[/math] and [math]\ex[/math] in this application), even though the outcome of the model ([math]\ey[/math]) is uniquely predicted by the realization of the observed explanatory variables ([math]\ex[/math]) and the unobserved heterogeneity terms ([math]\nu[/math]). Random set theory can again be leveraged to characterize sharp identification regions. [32](Chapter XXX in this Volume) discuss related generalized instrumental variables models where random set methods are used to obtain characterizations of sharp identification regions in the presence of endogenous explanatory variables.

Unobserved Heterogeneity in Choice Sets and/or Consideration Sets

Compared to the general framework set forth at the beginning of Section Discrete Choice in Single Agent Random Utility Models, as pointed out in [43], often the researcher observes [math](\ey_i,\ex_i)[/math] but not [math]\eC_i[/math], [math]i=1,\dots,n[/math]. Even when [math]\eC_i[/math] is observable, the researcher may be unaware of which of its elements the decision maker actually evaluates before selecting one. In what follows, to shorten expressions, I refer to both the measurement problem of unobserved choice sets and the (cognitive) problem of limited consideration as "unobserved heterogeneity in choice sets."

Learning features of preferences using discrete choice data in the presence of unobserved heterogeneity in choice sets is a formidable task. When a decision maker chooses an alternative, this may be because her choice set equals the feasible set and the chosen alternative is the one yielding the highest utility. Then observed choice reveals preferences. But it can also be that the decision maker has access to/considers only the chosen alternative (e.g., [1](p. 99)). Then observed choice is driven entirely by choice set composition, and is silent about preferences. A plethora of scenarios between these extremes is possible, but the researcher does not know which has generated the observed data. This fundamental identification problem calls either for restrictions on the random utility model and consideration set formation process, or for collection of richer data that eliminates unobserved heterogeneity in [math]\eC_i[/math] or allows for enhanced modeling of it (see, e.g., [44]).

A sizable literature spanning behavioral economics, econometrics, experimental economics, marketing, microeconomics, and psychology has put forward different models to formalize the complex process that leads to the formation of the set of alternatives that the agent considers or can choose from (see, e.g., [45], [46], [47] for early contributions). [43] proposes both a general econometric model where decision makers draw choice sets from an unknown distribution and a specific model of choice set formation, independent from preferences, and studies their implications for the distributional structure of random utility models.[Notes 16]

However, assumptions about the choice set formation process are often rooted in a desire to achieve point identification rather than in information contained in the model or observed data.[Notes 17] It is then important to ask what can be learned about decision maker’s preferences under minimal assumptions on the choice set formation process. Allowing for unrestricted dependence between choice sets and preferences, while challenging for identification analysis, is especially relevant. Indeed, decision makers' unobserved attributes may determine both their preferences and which items in the feasible set they pay attention to or are available to them (e.g., through unobserved liquidity constraints, unobserved characteristics such as religious preferences in the context of school choice, or behavioral phenomena such as aversion to extremes, salience, etc.). Here I use the framework put forward by [48] to study identification of discrete choice models with unobserved heterogeneity in choice sets and preferences.

Identification Problem (Discrete Choice with Unobserved Heterogeneity in Choice Sets and Preferences): Let [math](\ey,\ex)\sim \sP[/math] be observable random variables in [math]\cY\times\cX[/math]. Assume that there exists a real valued function [math]g[/math], which for simplicity I posit known up to parameter [math]\delta\in\Delta\subset\R^m[/math] and continuous in its second argument, such that [math]\bu_i(c)=g(\ex_{ic},\nu_i;\delta)[/math], [math](\ex_{ic},\nu_i)[/math]-a.s., for all [math]c\in\cY,i\in\cI[/math], where [math]\ex_{ic}[/math] denotes the vector of attributes relevant to alternative [math]c[/math], and includes attributes that are alternative invariant and ones that are alternative specific (respectively, [math]\ex_i^1[/math] and [math]\ex_{ic}^2[/math] in the general notation laid out in Section Discrete Choice in Single Agent Random Utility Models). Suppose that [math]\ey=\arg\max_{c\in \eC}g(\ex_c,\nu;\delta)[/math], where ties are assumed to occur with probability zero and [math]\eC[/math] is an unobservable choice set drawn from the subsets of [math]\cY[/math] according to some unknown probability distribution. Suppose [math]\sR(|\eC|\ge\kappa)=1[/math] for some known constant [math]\kappa\ge 2[/math]. Let [math]\sQ[/math] denote the distribution of [math]\nu[/math], and assume that it is known up to a finite dimensional parameter [math]\gamma\in\Gamma\subset\R^k[/math]. For simplicity, assume that [math]\nu\independent\ex[/math].[Notes 18] In the absence of additional information, what can the researcher learn about [math]\theta\equiv[\delta;\gamma][/math]?

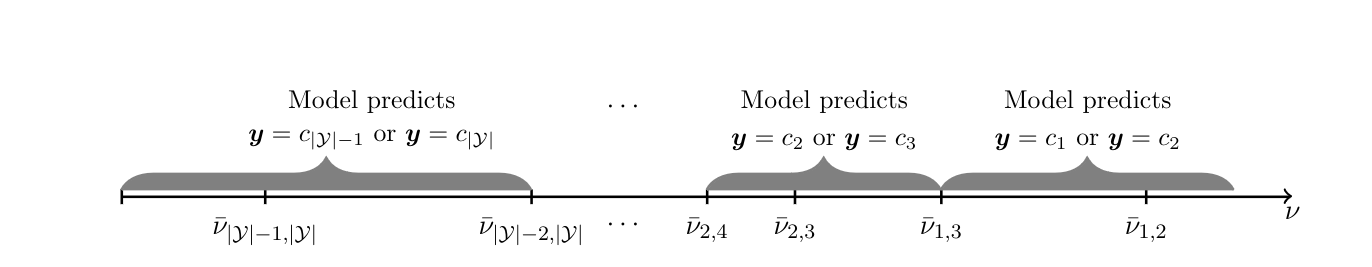

The model just laid out has set valued predictions for the decision maker's optimal choice, because different alternatives might be optimal depending on which choice set the decision maker draws. Figure, which is based on the analysis in [48], illustrates the set valued predictions in a stylized example. In the figure [math]\nu[/math] is assumed to be a scalar; [math]\bar{\nu}_{j,m}[/math] denotes the threshold value of [math]\nu[/math] above which [math]c_j[/math] yields higher utility than [math]c_m[/math] and below which [math]c_m[/math] yields higher utility than [math]c_j[/math] (the threshold's dependence on [math](\ex;\delta)[/math] is suppressed for notational convenience). Consider the case that [math]\nu\in[\bar{\nu}_{2,3},\bar{\nu}_{1,2}][/math], so that [math]c_2[/math] is the option yielding the highest utility among all options in [math]\cY[/math]. When [math]\kappa=|\cY|-1[/math], the agent may draw a choice set that does not include one of the alternatives in [math]\cY[/math]. If the excluded alternative is not [math]c_2[/math] (or if [math]\eC[/math] realizes equal to [math]\cY[/math]), the model predicts that the decision maker chooses [math]c_2[/math]. If [math]\eC[/math] realizes equal to [math]\cY\setminus\{c_2\}[/math], the model predicts that the decision maker chooses the second best: [math]c_1[/math] if [math]\nu\in[\bar{\nu}_{1,3},\bar{\nu}_{1,2}][/math], and [math]c_3[/math] if [math]\nu\in[\bar{\nu}_{2,3},\bar{\nu}_{1,3}][/math]. Conversely, observation of [math]\ey=c_1[/math] allows one to conclude that [math]\nu\ge\bar\nu_{1,3}[/math], and [math]\ey=c_2[/math] that [math]\nu\ge\bar\nu_{2,4}[/math], with [math]\bar\nu_{2,4}\le\bar\nu_{1,3}[/math], and these regions of possible realizations of [math]\nu[/math] overlap.
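The set valued prediction described above can be made concrete with a small enumeration. The following is a minimal sketch under stated assumptions: the function name `optimal_choice_set`, the labels `c1` to `c4`, and the numerical utilities are hypothetical, and utilities are taken as given at one realization of [math](\ex,\nu)[/math] rather than computed from [math]g[/math]. It collects every alternative that maximizes utility in at least one choice set of size at least [math]\kappa[/math].

```python
from itertools import combinations

def optimal_choice_set(utilities, kappa):
    """Return the alternatives that are optimal in some choice set C with |C| >= kappa.

    utilities: dict mapping alternative label -> utility (ties assumed away).
    kappa: known lower bound on the size of the unobserved choice set.
    """
    alternatives = list(utilities)
    predicted = set()
    for size in range(kappa, len(alternatives) + 1):
        for choice_set in combinations(alternatives, size):
            # The decision maker picks the best alternative in the drawn set.
            predicted.add(max(choice_set, key=utilities.get))
    return predicted

# Hypothetical utilities at one realization of (x, nu): c2 is best, c1 second best.
u = {"c1": 3.0, "c2": 5.0, "c3": 1.0, "c4": 0.0}
full_consideration = optimal_choice_set(u, kappa=4)  # only the feasible set can be drawn
one_may_be_missing = optimal_choice_set(u, kappa=3)  # kappa = |Y| - 1
```

With [math]\kappa=|\cY|-1[/math] the sketch returns the best and the second best alternative, in line with the discussion above. More generally, an alternative belongs to the predicted set exactly when at most [math]|\cY|-\kappa[/math] alternatives yield higher utility.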

Why does this set valued prediction hinder point identification? The reason is similar to the explanation given for Identification Problem: the distribution of the observable data relates to the model structure in an incomplete manner, because the distribution of the (unobserved) choice sets is left completely unspecified. [48] show that one can find multiple candidate distributions for [math]\eC[/math] and parameter vectors [math]\vartheta[/math], such that together they yield a model implied distribution for [math]\ey|\ex[/math] that matches [math]\sP(\ey|\ex)[/math], [math]\ex[/math]-a.s. [48] propose to work directly with the set of model implied optimal choices given [math](\ex,\nu)[/math] associated with each possible realization of [math]\eC[/math], which is depicted in Figure for a specific example. The key idea is that, according to the model, the observed choice maximizes utility among the alternatives in [math]\eC[/math]. Hence, for the data generating value of [math]\theta[/math], it belongs to the set of model implied optimal choices. With this, the authors are able to characterize [math]\idr{\theta}[/math] through Theorem as the collection of parameter vectors that satisfy a finite number of conditional moment inequalities.

Key Insight: [48] show that working directly with the set of model implied optimal choices given [math](\ex,\nu)[/math] allows one to dispense with considering all possible distributions of choice sets that are allowed for in Identification Problem to complete the model. Such distributions may depend on [math]\nu[/math] even after conditioning on observables and may constitute an infinite dimensional nuisance parameter, which creates great difficulties for the computation of [math]\idr{\theta}[/math] and for inference.

Identification Problem sets up a structure where preferences include idiosyncratic components [math]\nu[/math] that are decision maker specific and can depend on [math]\eC[/math], and where heterogeneity in [math]\eC[/math] can be driven either by a measurement problem, or by the decision maker's limited attention to the options available to her. However, for computational and finite sample inference reasons, it restricts the family of utility functions to be known up to a finite dimensional parameter vector [math]\delta[/math].

A rich literature in decision theory has analyzed a different framework, where the decision maker's choice set is observable to the researcher, but the decision maker does not consider all alternatives in it (for recent contributions see, e.g., [49][50]). In this literature, the utility function is left completely unspecified, so that interest focuses on identification of preference orderings of the available options. Unobserved heterogeneity in preferences is assumed away, so that heterogeneous choice is driven by randomness in consideration sets. If the consideration set formation process is left unspecified or is subject only to weak restrictions, point identification of the preference orderings is not possible even if preferences are homogeneous and the researcher observes a representative agent facing multiple distinct choice problems with varying choice sets.

[51] propose a general model for the consideration set formation process where the only restriction is a weak and intuitive monotonicity condition: the probability that any particular consideration set is drawn does not decrease when the number of possible consideration sets decreases. Within this framework, they provide revealed preference theory and testable implications for observable choice probabilities.

Identification Problem (Homogeneous Preference Orderings in Random Attention Models): Let [math](\ey,\eC)\sim\sP[/math] be an observable pair of a random variable and a random set in [math]\cY\times\mathfrak{D}[/math], where [math]\mathfrak{D}=\{D:D\subseteq\cY\}\setminus\emptyset[/math].[Notes 19] Let [math]\mu:\mathfrak{D}\times\mathfrak{D}\to[0,1][/math] denote an attention rule such that [math]\mu(A|G)\ge 0[/math] for all [math]A\subseteq G[/math], [math]\mu(A|G)=0[/math] for all [math]A\nsubseteq G[/math], and [math]\sum_{A\subset G}\mu(A|G)=1[/math], [math]A,G\in\mathfrak{D}[/math]. Assume that for any [math]b\in G\setminus A[/math],

[math]\begin{align}\mu(A|G)\le\mu(A|G\setminus\{b\}),\label{eq:RAM:monotonicity}\end{align}[/math]

and that the decision maker has a strict preference ordering [math]\succ[/math] on [math]\cY[/math].[Notes 20] In the absence of additional information, what can the researcher learn about [math]\succ[/math]?

[51] posit that an observed distribution of choice [math]\sP(\ey|\eC)[/math] has a random attention representation, and hence they name it a random attention model, if there exists a preference ordering [math]\succ[/math] over [math]\cY[/math] and a monotonic attention rule [math]\mu[/math] such that

[math]\begin{align}\cp(c|G)\equiv\sP(\ey=c|\eC=G)=\sum_{A\subset G}\one(c\text{ is }\succ\text{-best in }A)\mu(A|G),\quad\forall c\in G,~\forall G\in\mathfrak{D}.\label{eq:RAM}\end{align}[/math]

The sharp identification region for the preference ordering, denoted [math]\idr{\succ}[/math] henceforth, is given by the collection of preference orderings each of which can be paired with a monotonic attention rule so that \eqref{eq:RAM} holds. Of course, an observed distribution of choice can be represented by multiple preference orderings and attention rules. The authors, however, show in their Lemma 1 that if for some [math]G\in\mathfrak{D}[/math] with [math]\{b,c\}\subseteq G[/math],

[math]\begin{align}\cp(c|G) \gt \cp(c|G\setminus\{b\}),\label{eq:RAM_violation_reg}\end{align}[/math]

then [math]c \succ b[/math] for any [math]\succ[/math] for which one can find a monotonic attention rule [math]\mu[/math] such that \eqref{eq:RAM} holds. Because of preference transitivity, one can also learn [math]a\succ b[/math] if in addition to the above condition one has [math]\cp(a|G^\prime) \gt \cp(a|G^\prime\setminus \{c\})[/math] for some [math]c\in G^\prime[/math] and [math]G^\prime\in\mathfrak{D}[/math]. The authors further show in their Theorem 1 that the collection of preference relations associated with all possible instances of \eqref{eq:RAM_violation_reg} for all [math]c\in G[/math] and [math]G\in\mathfrak{D}[/math] yields all information about preferences given the observed choice probabilities. This yields a system of linear inequalities in [math]\cp(c|G)[/math] that fully characterize [math]\idr{\succ}[/math]. Let [math]\vec{\cp}[/math] denote the vector with elements [math][\cp(c|G):c\in G,G\in\mathfrak{D}][/math] and [math]\Pi_\succ[/math] denote a conformable matrix collecting the constraints on [math]\sP(\ey|\eC)[/math] embodied in \eqref{eq:RAM_violation_reg} and its generalizations based on transitive closure. Then

[math]\begin{align}\idr{\succ}=\{\succ:\Pi_\succ\vec{\cp}\le 0\}.\label{eq:SIR:RAM}\end{align}[/math]

The authors show that for any given preference ordering [math]\succ[/math], the matrix [math]\Pi_\succ[/math] characterizing whether [math]\succ \in \idr{\succ}[/math] through the system of linear inequalities in \eqref{eq:SIR:RAM} is unique, and they provide a simple algorithm to compute it. They also show that mild additional assumptions, such as, for example, that decision makers facing binary choice sets pay attention to both alternatives frequently enough, can substantially increase the informational content of the data (i.e., substantially tighten [math]\idr{\succ}[/math]).

Key Insight: [51] show that learning features of preference orderings in Identification Problem requires the existence in the data of choice problems where the choice probabilities satisfy \eqref{eq:RAM_violation_reg}. The latter is a violation of the principle of “regularity” [52] according to which the probability of choosing an alternative from any set is at least as large as the probability of choosing it from any of its supersets. Regularity is a monotonicity property of choice probabilities, and it is implied by a wide array of models of decision making. The monotonicity of attention rules in \eqref{eq:RAM:monotonicity} can be viewed as regularity of the process that chooses a consideration set from the subsets of the choice set. [51] show that it is implied by various models of limited attention. While the violation required in \eqref{eq:RAM_violation_reg} is weak in that it needs only to occur for some [math]G[/math], it sheds a different light on the severity of the identification problem described at the beginning of this section. Regularity of choice probabilities and (partial) identification of preference orderings can co-exist only under restrictions on the consideration set formation process that are stronger than the regularity of attention rules in \eqref{eq:RAM:monotonicity}.
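The role of regularity violations can be illustrated with a short routine. This is a sketch only, not the algorithm of [51]: the function name `revealed_preferences` and the choice probabilities below are hypothetical. The routine scans the supplied choice problems for an alternative whose choice probability is strictly higher in a set than in the same set with one other alternative removed, records the implied preference, and then takes the transitive closure.

```python
from itertools import product

def revealed_preferences(p):
    """p: dict mapping frozenset choice set G -> dict {alternative: choice probability}.

    Returns the set of pairs (c, b) meaning 'c is revealed preferred to b'.
    """
    revealed = set()
    for G, probs in p.items():
        for c, b in product(G, repeat=2):
            smaller = G - {b}
            if c == b or smaller not in p:
                continue
            # Regularity violation: removing b lowers the probability of choosing c.
            if probs[c] > p[smaller][c]:
                revealed.add((c, b))
    # Transitive closure (the preference ordering is assumed transitive).
    changed = True
    while changed:
        changed = False
        for (a, b), (c, d) in product(list(revealed), repeat=2):
            if b == c and a != d and (a, d) not in revealed:
                revealed.add((a, d))
                changed = True
    return revealed

# Hypothetical choice probabilities over subsets of {a, b, c}.
p = {
    frozenset("abc"): {"a": 0.5, "b": 0.1, "c": 0.4},
    frozenset("ab"): {"a": 0.4, "b": 0.6},
    frozenset("ac"): {"a": 0.7, "c": 0.3},
    frozenset("bc"): {"b": 0.5, "c": 0.5},
}
result = revealed_preferences(p)
```

In this example, removing `c` lowers the probability of choosing `a`, and removing `b` lowers the probability of choosing `c`, so the data reveal that `a` is preferred to `c` and `c` to `b`; transitivity then adds that `a` is preferred to `b`. If the supplied probabilities satisfied regularity everywhere, the routine would return the empty set.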

[53] and [54] provide different sets of sufficient conditions for point identification of models of limited consideration. In both cases, the authors posit specific models of consideration set formation and provide sufficient conditions for point identification under exclusion and large support assumptions. [53] assume that unobserved heterogeneity in preferences and in consideration sets are independent. They exploit violations of Slutsky symmetry that result from inattention, assuming that for each alternative there is an observable characteristic with large support that does not affect the consideration probability of the other options.

[54] provide a thorough analysis of the extent of dependency between consideration and preferences under which semi-nonparametric point identification of the distribution of preferences and consideration attains. They exploit a requirement of standard economic theory (the Spence-Mirrlees single crossing property of utility functions) coupled with a mild strengthening of the classic conditions for semi-nonparametric identification of discrete choice models with full consideration and identical choice sets (see, e.g., [26]), assuming that there is at least one decision maker-specific characteristic with large support that affects utility but not consideration.

Prediction of Choice Behavior with Counterfactual Choice Sets

Building on [2], [55] studies a question related to, but distinct from, those in the identification problems above. He is concerned with prediction of choice behavior when decision makers face counterfactual choice sets. [55] frames this question as one of predicting treatment response (see Section Treatment Effects with and without Instrumental Variables). Here the collection of potential treatments is given by [math]\mathfrak{D}[/math], the nonempty subsets of the universe of feasible alternatives [math]\cY[/math], and the response function specifies the alternative chosen by a decision maker when facing choice set [math]G\in\mathfrak{D}[/math]. [55] assumes that the researcher observes realized choice sets and chosen alternatives, [math](\ey,\eC)\sim\sP[/math].[Notes 21] Under the standard assumptions laid out at the beginning of Section Discrete Choice in Single Agent Random Utility Models, specifically if utility functions are (say) linear in [math]\epsilon_{ic}[/math] and the distribution of [math]\epsilon_{ic}[/math] is (say) Type I extreme value or multivariate normal, prediction of choice behavior with counterfactual choice sets is immediate (and point identified). [55], however, leaves utility functions completely unspecified, and in fact works directly with preference orderings, which he labels decision makers' types. He places no restriction on the distribution of preference types, except requiring that they are independent of the observed choice sets. [55] shows that under these rather weak assumptions, the distribution of predicted choices from counterfactual choice sets can be partially identified, and characterized as the solution to linear programs.

Specifically, let [math]\ey^*(G)[/math] denote the decision maker's optimal choice when facing choice set [math]G\in\mathfrak{D}[/math]. Assume [math]\ey^*(\cdot)\independent\eC[/math], and let [math]y_k[/math] denote the choice function for a decision maker of type [math]k[/math], that is, a decision maker with a specific preference ordering labeled [math]k[/math]. One example of such preference ordering might be [math]c_1\succ c_2\succ\dots\succ c_{|\cY|}[/math]. If a decision maker of this type faces, say, choice set [math]G=\{c_2,c_3,c_4\}[/math], then she chooses alternative [math]c_2[/math]. Let [math]K[/math] denote the set of logically possible types, and [math]\theta_k[/math] the probability that a decision maker in the population is of type [math]k[/math]. Suppose that the researcher posits a behavioral model specifying [math]K[/math], [math]\{y_k,k\in K\}[/math], and restrictions that constrain [math]\theta[/math] to lie in some specified set of distributions. Let [math]\Theta[/math] denote the values of [math]\vartheta[/math] that satisfy these requirements plus the conditions [math]\vartheta_k\ge 0[/math] for all [math]k\in K[/math] and [math]\sum_{k\in K}\vartheta_k=1[/math]. Then for any [math]c\in\cY[/math] and [math]\vartheta\in\Theta[/math], the model predicts

[math]\begin{align*}\sQ(\ey^*(G)=c)=\sum_{k\in K}\one(y_k(G)=c)\vartheta_k.\end{align*}[/math]

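How can one partially identify this probability based on the observed data? Suppose [math]\eC[/math] is observed to take realizations [math]D_1,\dots,D_m[/math]. Then the data reveal

[math]\begin{align*}\sP(\ey=c|\eC=D_j)=\sQ(\ey^*(D_j)=c|\eC=D_j)=\sQ(\ey^*(D_j)=c),\quad\forall c\in D_j,~j=1,\dots,m.\end{align*}[/math]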

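It follows that the sharp identification region for [math]\theta[/math] is

[math]\begin{align*}\idr{\theta}=\left\{\vartheta\in\Theta:\sP(\ey=c|\eC=D_j)=\sum_{k\in K}\one(y_k(D_j)=c)\vartheta_k,\quad\forall c\in D_j,~j=1,\dots,m\right\}.\end{align*}[/math]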

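If the behavioral model is correctly specified, [math]\idr{\theta}[/math] is non-empty. In turn, the sharp identification region for each choice probability is

[math]\begin{align*}\idr{\sQ(\ey^*(G)=c)}=\left\{\sum_{k\in K}\one(y_k(G)=c)\vartheta_k:\vartheta\in\idr{\theta}\right\},\end{align*}[/math]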

and its extreme points can be obtained by solving linear programs.
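The linear programming characterization can be illustrated on a toy problem. The sketch below is not the procedure of [55]: the function names and the observed choice probabilities are hypothetical, all strict orderings of three alternatives are taken as the set of types, and brute-force enumeration of the vertices of the feasible set (exact, using rational arithmetic) stands in for a linear programming solver. A linear objective over a bounded, nonempty polytope attains its extremes at vertices, so the enumeration returns the same bounds as the two linear programs.

```python
from fractions import Fraction
from itertools import combinations, permutations

def choice(order, G):
    """Alternative chosen from G by a type whose ranking (best first) is `order`."""
    return next(c for c in order if c in G)

def solve_unique(columns, b):
    """Exactly solve sum_j x_j * columns[j] = b; return x only if it exists and is unique."""
    n, m = len(columns), len(b)
    M = [[Fraction(columns[j][i]) for j in range(n)] + [Fraction(b[i])] for i in range(m)]
    for col in range(n):
        pivot = next((r for r in range(col, m) if M[r][col] != 0), None)
        if pivot is None:
            return None  # linearly dependent columns: not the support of a vertex
        M[col], M[pivot] = M[pivot], M[col]
        M[col] = [v / M[col][col] for v in M[col]]
        for r in range(m):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    if any(M[r][n] != 0 for r in range(n, m)):
        return None  # inconsistent system
    return [M[j][n] for j in range(n)]

def counterfactual_bounds(types, observed, G, c):
    """Bounds on the probability of choosing c from G, by enumerating vertices."""
    rows, rhs = [[1] * len(types)], [1]  # type probabilities sum to one
    for D, probs in observed.items():
        for alt, prob in probs.items():
            rows.append([int(choice(t, D) == alt) for t in types])
            rhs.append(prob)
    objective = [int(choice(t, G) == c) for t in types]
    values = []
    for size in range(1, len(rows) + 1):
        for support in combinations(range(len(types)), size):
            cols = [[row[k] for row in rows] for k in support]
            x = solve_unique(cols, rhs)
            if x is not None and all(v >= 0 for v in x):
                values.append(sum(objective[k] * v for k, v in zip(support, x)))
    return (min(values), max(values)) if values else None  # None: model rejected

types = list(permutations("abc"))  # all strict preference orderings
observed = {  # hypothetical P(y = . | C = D_j)
    frozenset("ab"): {"a": Fraction(6, 10), "b": Fraction(4, 10)},
    frozenset("bc"): {"b": Fraction(7, 10), "c": Fraction(3, 10)},
}
lower, upper = counterfactual_bounds(types, observed, frozenset("ac"), "a")
```

In this example the probability of choosing `a` from the counterfactual set containing `a` and `c` is bounded between 3/10 and 1. The lower bound reflects that every type ranking `a` above `b` and `b` above `c` must also rank `a` above `c`. For realistic numbers of types the enumeration is infeasible and a linear programming solver would be used instead.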

[56] provide closely related sharp bounds on features of counterfactual choices in the nonparametric random utility model of demand, where observable choices are repeated cross-sections and one allows for unrestricted, unobserved heterogeneity. Their approach builds on the work of [57], who test whether agents' behavior is consistent with the Axiom of Revealed Stochastic Preference (ARSP) in a random utility model in which the utility function of each consumer over commodity bundles is assumed to satisfy only the basic restriction that “more is better” with no satiation. Because the testing exercise is to be carried out using repeated cross-sections data, the authors maintain the assumption that multiple populations of consumers who face distinct choice sets have the same distribution of preferences. With this structure in place, de facto the task is to test the full implications of rationality without functional form restrictions. [57]’s approach is based on several novel ideas. As a first step, they leverage an earlier insight of [58] to discretize the data without loss of information, so that they can define a large but finite set of rational preference types. As a second step, they show that this implies that rationality can be tested by checking whether observed behavior lies in a cone corresponding to positive linear combinations of preference types. While the problem is discrete, its dimension is at first sight prohibitive. Nonetheless, Kitamura and Stoye are able to develop novel computational methods that render the problem tractable. They apply their method to the U.K. Household Expenditure Survey, adapting to their framework results on nonparametric instrumental variable analysis by [59] so that they can handle price endogeneity.

[60] builds on [55] to learn program effects when agents are randomly assigned to control or treatment. The treatment group is provided access to the program, while the control group is not. However, members of the control group may receive access to the program from outside the experiment, leading to noncompliance with the randomly assigned treatment. The researcher wants to learn about the average effect of program access on the decision to participate in the program and on the subsequent outcome. While sufficiently rich data may allow the researcher to learn these effects, [60] is concerned with the identification problem that arises when the researcher only observes the treatment assignment status, the program participation decision, and the outcome, but not the receipt of program access for every agent. [60] formalizes this problem as one where the received treatment is selected from a choice set that depends on the assigned treatment and is unobservable to the researcher, and the agents optimally choose whether to participate in the program by maximizing their utility function over their choice set. Importantly, the utility functions are not subject to parametric restrictions, similarly to [55]. But while [55] assumed independence of choice sets and preference types, [60] allows them to be arbitrarily dependent on each other, as in [48]. The approach of [60] leverages specific assumptions on random assignment of treatments and on compliance (or lack thereof) of participants to obtain nonparametric bounds on the treatment effects of interest that can be characterized using tractable linear programs.

Static, Simultaneous-Move Finite Games with Multiple Equilibria

An Inference Approach Robust to the Presence of Multiple Equilibria

[20] and [21] substantially enlarge the scope of partial identification analysis of structural models by showing how to apply it to learn features of payoff functions in static, simultaneous-move finite games of complete information with multiple equilibria. [61] extend the approach and considerations that follow to games of incomplete information. To start, here I focus on two-player entry games with complete information.[Notes 22]

Let [math](\ey_1,\ey_2,\ex_1,\ex_2)\sim\sP[/math] be observable random variables in [math]\{0,1\}\times\{0,1\}\times\R^d\times\R^d[/math], [math]d \lt \infty[/math]. Suppose that [math](\ey_1,\ey_2)[/math] result from simultaneous move, pure strategy Nash play (PSNE) in a game where the payoffs are [math]\bu_j(\ey_j,\ey_{3-j},\ex_j;\beta_j,\delta_j)\equiv \ey_j(\ex_j\beta_j+\delta_j\ey_{3-j}+\eps_j)[/math], [math]j=1,2[/math] and the strategies are “enter” ([math]\ey_j=1[/math]) or “stay out” ([math]\ey_j=0[/math]). Here [math](\ex_1,\ex_2)[/math] are observable payoff shifters, [math](\eps_1,\eps_2)[/math] are payoff shifters observable to the players but not to the econometrician, [math]\delta_1\le 0,\delta_2\le 0[/math] are interaction effect parameters, and [math]\beta_1,\beta_2[/math] are parameter vectors in [math]B\subset\R^d[/math] reflecting the effect of the observable covariates on payoffs. Each player enters the market if and only if entering yields non-negative payoff, so that [math]\ey_j=\one(\ex_j\beta_j+\delta_j\ey_{3-j}+\eps_j\ge 0)[/math]. For simplicity, assume that [math]\eps\equiv(\eps_1,\eps_2)[/math] is independent of [math]\ex\equiv(\ex_1,\ex_2)[/math] and has bivariate Normal distribution with mean vector zero, variances equal to one (a normalization required by the threshold crossing nature of the model), and correlation [math]\rho\in [-1,1][/math]. In the absence of additional information, what can the researcher learn about [math]\theta=[\delta_1,\delta_2,\beta_1,\beta_2,\rho][/math]?

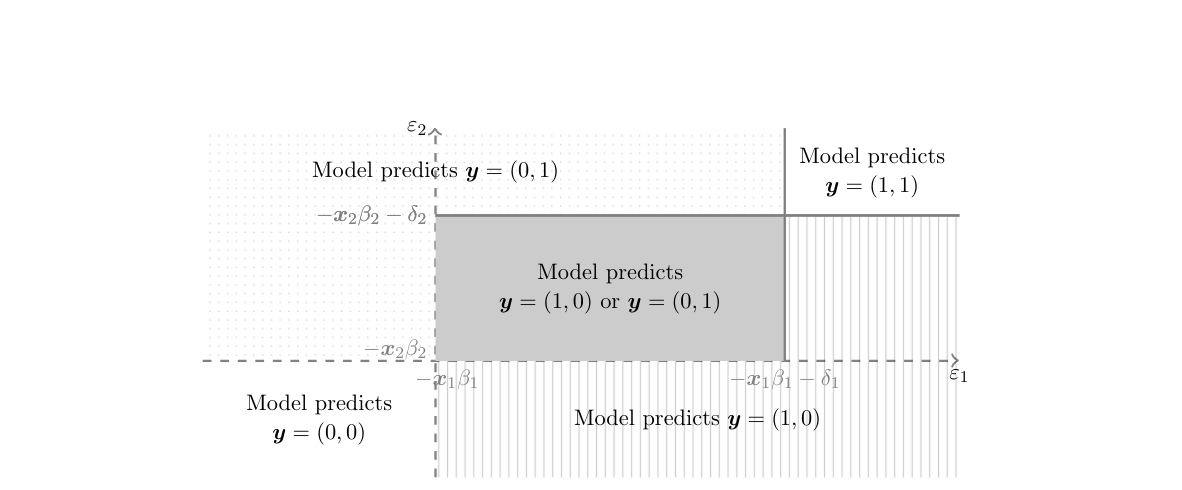

From the econometric perspective, this is a generalization of a standard discrete choice model to a bivariate simultaneous response model which yields a stochastic representation of equilibria in a two player, two action game. Generically, for a given value of [math]\theta[/math] and realization of the payoff shifters, the model just laid out admits multiple equilibria (existence of PSNE is guaranteed because the interaction parameters are non-positive). In other words, it yields set valued predictions as depicted in Figure.[Notes 23] Why does this set valued prediction hinder point identification? Intuitively, the challenge can be traced back to the fact that for different values of [math]\theta\in\Theta[/math], one may find different ways to assign the probability mass in [math][-\ex_1\beta_1,-\ex_1\beta_1-\delta_1)\times [-\ex_2\beta_2,-\ex_2\beta_2-\delta_2)[/math] to [math](0,1)[/math] and [math](1,0)[/math], so as to match the observed distribution [math]\sP(\ey_1,\ey_2|\ex_1,\ex_2)[/math]. More formally, for fixed [math]\vartheta\in\Theta[/math] and given [math](\ex,\eps)[/math] and [math](y_1,y_2)\in\{0,1\}\times\{0,1\}[/math], let

so that in Figure [math]\cE_\vartheta[(1,0),(0,1);\ex][/math] is the gray region, [math]\cE_\vartheta[(0,1);\ex][/math] is the dotted region, etc. Let [math]\sR(y_1,y_2|\ex,\eps)[/math] be a selection mechanism that assigns to each possible outcome of the game [math](y_1,y_2)\in\{0,1\}\times\{0,1\}[/math] the probability that it is played conditional on observable and unobservable payoff shifters. In order to be admissible, [math]\sR(y_1,y_2|\ex,\eps)[/math] must be such that [math]\sR(y_1,y_2|\ex,\eps)\ge 0[/math] for all [math](y_1,y_2)\in\{0,1\}\times\{0,1\}[/math], [math]\sum_{(y_1,y_2)\in\{0,1\}\times\{0,1\}}\sR(y_1,y_2|\ex,\eps)=1[/math], and

Let [math]\Phi_r[/math] denote the probability distribution of a bivariate Normal random variable with zero means, unit variances, and correlation [math]r\in[-1,1][/math]. Let [math]\sM(y_1,y_2|\ex)[/math] denote the model predicted probability that the outcome of the game realizes equal to [math](y_1,y_2)[/math]. Then the model yields

Because [math]\sR(\cdot|\ex,\eps)[/math] is left completely unspecified, other than the basic restrictions listed above that render it an admissible selection mechanism, one can find multiple values for [math](\vartheta,\sR(\cdot|\ex,\eps))[/math] such that [math]\sM(y_1,y_2|\ex)=\sP(y_1,y_2|\ex)[/math] for all [math](y_1,y_2)\in\{0,1\}\times\{0,1\}[/math] [math]\ex[/math]-a.s.

Multiplicity of equilibria implies that the mapping from the model's exogenous variables [math](\ex_1,\ex_2,\eps_1,\eps_2)[/math] to outcomes [math](\ey_1,\ey_2)[/math] is a correspondence rather than a function. This violates the classical “principal assumptions” or “coherency conditions” for simultaneous discrete response models discussed extensively in the econometrics literature (e.g., [62][63][64][65][66]). Such coherency conditions require the existence of a unique reduced form, mapping the model's exogenous variables and parameters to a unique realization of the endogenous variable; hence, they constrain the model to be recursive or triangular in nature. As pointed out by [67], however, the coherency conditions shut down exactly the social interaction effect of interest by requiring, e.g., that [math]\delta_1\delta_2=0[/math], so that at least one player's action has no impact on the other player's payoff.
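The correspondence from exogenous variables to outcomes can be traced out by checking the best response conditions directly. The sketch below is illustrative: the function name `pure_nash_equilibria` and the numerical values are hypothetical, and the arguments `xb1` and `xb2` stand for the scalar indices [math]\ex_1\beta_1[/math] and [math]\ex_2\beta_2[/math].

```python
from itertools import product

def pure_nash_equilibria(xb1, xb2, d1, d2, e1, e2):
    """Set of pure strategy Nash equilibria (y1, y2) of the two-player entry game.

    Player j enters iff xb_j + d_j * y_{3-j} + e_j >= 0, given the rival's action.
    """
    equilibria = set()
    for y1, y2 in product((0, 1), repeat=2):
        best1 = int(xb1 + d1 * y2 + e1 >= 0)
        best2 = int(xb2 + d2 * y1 + e2 >= 0)
        if (y1, y2) == (best1, best2):
            equilibria.add((y1, y2))
    return equilibria

# Both interaction effects negative: the shocks fall in the region of multiplicity.
multiple = pure_nash_equilibria(0.5, 0.5, -1.0, -1.0, 0.0, 0.0)
# Setting d1 = 0 (so that d1 * d2 = 0) restores a unique prediction at the same shocks.
unique = pure_nash_equilibria(0.5, 0.5, 0.0, -1.0, 0.0, 0.0)
```

At these values the first call returns both `(0, 1)` and `(1, 0)`, while the second returns only `(1, 0)`. This mirrors the observation that the coherency conditions deliver uniqueness by shutting down one of the interaction effects.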

The desire to learn about interaction effects coupled with the difficulties generated by multiplicity of equilibria prompted the earlier literature to provide at least two different ways to achieve point identification. The first one relies on imposing simplifying assumptions that shift focus to outcome features that are common across equilibria. For example, [68][69][70] and [71] study entry games where the number, though not the identities, of entrants is uniquely predicted by the model in equilibrium. Unfortunately, however, these simplifying assumptions substantially constrain the amount of heterogeneity in players' payoffs that the model allows for. The second approach relies on explicitly modeling a selection mechanism which specifies the equilibrium played in the regions of multiplicity. For example, [67] assume it to be a constant; [72] assume a more flexible, covariate dependent parametrization; and [71] considers two possible selection mechanism specifications, one where the incumbent moves first, and the other where the most profitable player moves first. Unfortunately, however, the chosen selection mechanism can have non-trivial effects on inference, and the data and theory might be silent on which is more appropriate. A nice example of this appears in [71](Table VII). [61] review and extend a number of results on the identification of entry models extensively used in the empirical literature. [14] discusses the observable implications of models with multiple equilibria, and within the analysis of a model with homogeneous preferences shows that partial identification is possible (see [14](p. 1435)). I refer to [73] for a review of the literature on econometric analysis of games with multiple equilibria.

[21] show, on the other hand, that it is possible to partially identify entry models that allow for rich heterogeneity in payoffs and for any possible selection mechanism (even ones that are arbitrarily dependent on the unobservable payoff shifters after conditioning on the observed payoff shifters). In addition, [20] provides sufficient conditions for point identification based on exclusion restrictions and large support assumptions. [74] analyze partial identification of nonparametric models of entry in a two-player model, drawing connections with the program evaluation literature.

Key Insight: An important conceptual contribution of [20] is to clarify the distinction between a model which is incoherent, so that no reduced form exists, and a model which is incomplete, so that multiple reduced forms may exist. Models with multiple equilibria belong to the latter category. Whereas the earlier literature in partial identification had been motivated by measurement problems, e.g., missing or interval data, the work of [20] and [21] is motivated by the fact that economic theory often does not specify how an equilibrium is selected in the regions of the exogenous variables which admit multiple equilibria. This is a conceptually completely distinct identification problem.

[21] propose to use simple and tractable implications of the model to learn features of the structural parameters of interest. Specifically, they point out that the probability of observing any outcome of the game cannot be smaller than the model's implied probability that such outcome is the unique equilibrium of the game, and cannot be larger than the model's implied probability that such outcome is one of the possible equilibria of the game. Looking at Figure this means, for example, that the observed [math]\sP((\ey_1,\ey_2)=(0,1)|\ex_1,\ex_2)[/math] cannot be smaller than the probability that [math](\eps_1,\eps_2)[/math] realizes in the dotted region, and cannot be larger than the probability that it realizes either in the dotted region or in the gray region. Compared to the model predicted distribution in \eqref{eq:games_model:pred}, this means that [math]\sP((\ey_1,\ey_2)=(0,1)|\ex_1,\ex_2)[/math] cannot be smaller than the expression obtained setting, for [math]\eps\in\cE_\vartheta[(1,0),(0,1);\ex][/math], [math]\sR(0,1|\ex,\eps)=0[/math], and cannot be larger than that obtained with [math]\sR(0,1|\ex,\eps)=1[/math]. Denote by [math]\Phi(A_1,A_2;\rho)[/math] the probability that the bivariate normal with mean vector zero, variances equal to one, and correlation [math]\rho[/math] assigns to the event [math]\{\eps_1\in A_1,\eps_2\in A_2\}[/math]. Then [21] show that any [math]\vartheta=[d_1,d_2,b_1,b_2,r][/math] that is observationally equivalent to the data generating value [math]\theta[/math] satisfies, [math](\ex_1,\ex_2)[/math]-a.s.,

[math]\begin{align}
\sP(\ey=(0,0)|\ex)&=\Phi((-\infty,-\ex_1b_1),(-\infty,-\ex_2b_2);r),\label{eq:CT_00}\\
\sP(\ey=(1,1)|\ex)&=\Phi([-\ex_1b_1-d_1,\infty),[-\ex_2b_2-d_2,\infty);r),\label{eq:CT_11}\\
\sP(\ey=(0,1)|\ex)&\le\Phi((-\infty,-\ex_1b_1-d_1),[-\ex_2b_2,\infty);r),\label{eq:CT_01U}\\
\sP(\ey=(0,1)|\ex)&\ge\Phi((-\infty,-\ex_1b_1-d_1),[-\ex_2b_2,\infty);r)-\Phi([-\ex_1b_1,-\ex_1b_1-d_1),[-\ex_2b_2,-\ex_2b_2-d_2);r).\label{eq:CT_01L}
\end{align}[/math]

While the approach of [21] is summarized here for a two player entry game, it extends without difficulty to any finite number of players and actions and to solution concepts other than pure strategy Nash equilibrium.
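For the two player entry game, the bounds on the probability of outcome [math](0,1)[/math] described above can be evaluated numerically. The following is a sketch under stated assumptions, not the implementation of [21]: the function names are hypothetical, `xb1` and `xb2` stand for the scalar indices [math]\ex_1b_1[/math] and [math]\ex_2b_2[/math], the correlation must satisfy [math]|r| \lt 1[/math], and bivariate normal rectangle probabilities are approximated with Simpson's rule using only the standard library.

```python
import math

def norm_cdf(z):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def rect_prob(l1, u1, l2, u2, r, steps=2000):
    """P(l1 <= e1 < u1, l2 <= e2 < u2) for a standard bivariate normal with correlation r.

    Integrates the density of e1 times the conditional probability for e2 given e1.
    Requires |r| < 1; e1 is truncated to [-8, 8], where the omitted mass is negligible.
    """
    a, b = max(l1, -8.0), min(u1, 8.0)
    if a >= b:
        return 0.0
    s = math.sqrt(1.0 - r * r)

    def integrand(e1):
        density = math.exp(-0.5 * e1 * e1) / math.sqrt(2.0 * math.pi)
        return density * (norm_cdf((u2 - r * e1) / s) - norm_cdf((l2 - r * e1) / s))

    h = (b - a) / steps  # steps must be even for Simpson's rule
    total = integrand(a) + integrand(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * integrand(a + i * h)
    return total * h / 3.0

def bounds_01(xb1, xb2, d1, d2, r):
    """Lower and upper bounds on P(y = (0,1) | x) implied by a candidate parameter value."""
    inf = math.inf
    # (0,1) is an equilibrium iff e1 < -xb1 - d1 and e2 >= -xb2.
    upper = rect_prob(-inf, -xb1 - d1, -xb2, inf, r)
    # Both (0,1) and (1,0) are equilibria on this rectangle.
    multiple = rect_prob(-xb1, -xb1 - d1, -xb2, -xb2 - d2, r)
    return upper - multiple, upper

lower, upper = bounds_01(0.5, 0.5, -1.0, -1.0, 0.0)
```

A candidate parameter value is compatible with the data at a given covariate value only if the observed frequency of [math](0,1)[/math] lies between `lower` and `upper` there. The gap between the two bounds equals the probability of the region of multiplicity, which shrinks to zero as either interaction effect approaches zero.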

[75] build on the insights of [21] to study the identification power of equilibrium in games. To do so, they compare the set-valued model predictions and what can be learned about [math]\theta[/math] when one assumes only level-[math]k[/math] rationality as opposed to Nash play. In static entry games of complete information, they find that the model's predictions when [math]k\ge 2[/math] are similar to those obtained with Nash behavior and allowing for multiple equilibria and mixed strategies. [76] extend the analysis of [75] to the class of supermodular games.

The collection of parameter vectors satisfying (in)equalities \eqref{eq:CT_00}-\eqref{eq:CT_01L} yields the sharp identification region [math]\idr{\theta}[/math] in the case of two player entry games with pure strategy Nash equilibrium as solution concept, as shown by [77](Supplementary Appendix D, Corollary D.4). When there are more than two players or more than two actions (or with different solution concepts, e.g., mixed strategy Nash equilibrium, correlated equilibrium, or rationality of level [math]k[/math] as in [75]), the characterization in [21] obtained by extending the reasoning just laid out yields an outer region. [77] use elements of random set theory to provide a general and computationally tractable characterization of the identification region that is sharp, regardless of the number of players and actions, or the solution concept adopted. For the case of PSNE with any finite number of players or actions, [78] provide a computationally tractable sharp characterization of the identification region using elements of optimal transportation theory.

Characterization of Sharpness through Random Set Theory

[77] provide a general approach based on random set theory that delivers sharp identification regions on parameters of structural semiparametric models with set valued predictions. Here I summarize it for the case of static, simultaneous move finite games of complete information, first with PSNE as solution concept and then with mixed strategy Nash equilibrium. Then I discuss games of incomplete information.

For a given [math]\vartheta\in\Theta[/math], denote the set of pure strategy Nash equilibria (depicted in Figure) as [math]\eY_\vartheta(\ex,\eps)[/math]. It is easy to show that [math]\eY_\vartheta(\ex,\eps)[/math] is a random closed set as in Definition. Under the assumption in Identification Problem that [math]\ey[/math] results from simultaneous move, pure strategy Nash play, at the true DGP value of [math]\theta\in\Theta[/math], one has

Equation \eqref{eq:y_in_Y_games} exhausts the modeling content of Identification Problem. Theorem can be leveraged to extract its empirical content from the observed distribution [math]\sP(\ey,\ex)[/math]. For a given [math]\vartheta\in\Theta[/math] and [math]K\subset\cY[/math], let [math]\sT_{\eY_{\vartheta}(\ex,\eps)}(K;\Phi_r)[/math] denote the probability of the event [math]\{\eY_\vartheta(\ex,\eps)\cap K\neq \emptyset\}[/math] implied when [math]\eps\sim\Phi_r[/math], [math]\ex[/math]-a.s.

Under the assumptions of Identification Problem, the sharp identification region for [math]\theta[/math] is

To simplify notation, let [math]\eY_\vartheta\equiv \eY_\vartheta(\ex,\eps)[/math]. In order to establish sharpness, it suffices to show that [math]\vartheta\in \idr{\theta}[/math] if and only if one can complete the model with an admissible selection mechanism [math]\sR(y_1,y_2|\ex,\eps)[/math] such that [math]\sR(y_1,y_2|\ex,\eps)\ge 0[/math] for all [math](y_1,y_2)\in\{0,1\}\times\{0,1\}[/math], [math]\sum_{(y_1,y_2)\in\{0,1\}\times\{0,1\}}\sR(y_1,y_2|\ex,\eps)=1[/math], and satisfying \eqref{eq:games:sel:mec:1}-\eqref{eq:games:sel:mec:2}, so that [math]\sM(y_1,y_2|\ex)=\sP(y_1,y_2|\ex)[/math] for all [math](y_1,y_2)\in\{0,1\}\times\{0,1\}[/math] [math]\ex[/math]-a.s., with [math]\sM(y_1,y_2|\ex)[/math] defined in \eqref{eq:games_model:pred}. Suppose first that [math]\vartheta[/math] is such that a selection mechanism with these properties is available. Then there exists a selection of [math]\eY_\vartheta[/math] which is equal to the prediction selected by the selection mechanism and whose conditional distribution is equal to [math]\sP(\ey|\ex)[/math], [math]\ex[/math]-a.s., and therefore [math]\vartheta \in \idr{\theta}[/math]. Next take [math]\vartheta \in \idr{\theta}[/math]. Then by Theorem, [math]\ey[/math] and [math]\eY_\vartheta[/math] can be realized on the same probability space as random elements [math]\ey'[/math] and [math]\eY'_\vartheta[/math], so that [math]\ey'[/math] and [math]\eY'_\vartheta[/math] have the same distributions, respectively, as [math]\ey[/math] and [math]\eY_\vartheta[/math], and [math]\ey' \in \Sel(\eY'_\vartheta)[/math], where [math]\Sel(\eY'_\vartheta)[/math] is the set of all measurable selections from [math]\eY'_\vartheta[/math], see Definition. One can then complete the model with a selection mechanism that picks [math]\ey'[/math] with probability 1, and the result follows.

The characterization provided in the theorem above for games with multiple PSNE, taken from [77](Supplementary Appendix D), is equivalent to the one in [78]. When [math]J=2[/math] and [math]\cY=\{0,1\}\times\{0,1\}[/math], the inequalities in \eqref{eq:SIR:entry_game} reduce to \eqref{eq:CT_00}-\eqref{eq:CT_01L}. With more players and/or more actions, the inequalities in \eqref{eq:SIR:entry_game} are a superset of those in \eqref{eq:CT_00}-\eqref{eq:CT_01L}, with the latter comprised of the ones in \eqref{eq:SIR:entry_game} for [math]K=\{k\}[/math] and [math]K=\cY\setminus\{k\}[/math], for all [math]k\in\cY[/math]. Hence, the inequalities in \eqref{eq:SIR:entry_game} are more informative. Of course, the computational cost incurred to characterize [math]\idr{\theta}[/math] may grow with the number of inequalities involved. I discuss computational challenges in partial identification in Section.
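To make the role of the sets [math]K[/math] concrete, the Python sketch below checks inequalities of the form [math]\sP(\ey\in K|\ex)\le\sT_{\eY_\vartheta(\ex,\eps)}(K;\Phi_r)[/math] over all nonempty proper subsets [math]K\subset\cY[/math] for the two player entry game, at a fixed value of [math]\ex[/math]. It assumes that \eqref{eq:SIR:entry_game} takes this form, as the definition of [math]\sT[/math] above suggests, and it assumes entry payoffs [math]\ey_j(a_j+d_j\ey_{-j}+\eps_j)[/math] with [math]d_j\le 0[/math]. The capacity functional is approximated by simulation, and all function names are hypothetical.

```python
from itertools import combinations
import numpy as np

# Outcome order used throughout: (y1, y2)
OUTCOMES = [(0, 0), (1, 0), (0, 1), (1, 1)]

def psne_indicators(a, d, eps):
    """Boolean (n, 4) array; column k is True when OUTCOMES[k] is a PSNE.

    Assumed payoff from entry: a_j + d_j * y_{-j} + eps_j (staying out pays 0).
    """
    m = a + eps  # profit from entering when the rival stays out
    q = m + d    # profit from entering when the rival also enters
    return np.column_stack([
        (m[:, 0] <= 0) & (m[:, 1] <= 0),  # (0, 0)
        (m[:, 0] >= 0) & (q[:, 1] <= 0),  # (1, 0)
        (q[:, 0] <= 0) & (m[:, 1] >= 0),  # (0, 1)
        (q[:, 0] >= 0) & (q[:, 1] >= 0),  # (1, 1)
    ])

def capacity(eq, K):
    """Simulated T(K): share of draws whose PSNE set intersects K."""
    return eq[:, list(K)].any(axis=1).mean()

def artstein_violation(p_obs, eq):
    """Largest value of P(y in K) - T(K) over nonempty proper subsets K.

    A candidate parameter passes the check when the returned value is <= 0
    (up to simulation error).
    """
    worst = -np.inf
    for size in range(1, len(OUTCOMES)):
        for K in combinations(range(len(OUTCOMES)), size):
            worst = max(worst, p_obs[list(K)].sum() - capacity(eq, K))
    return worst
```

In the two player case only the singletons and their complements bind, consistent with the reduction to \eqref{eq:CT_00}-\eqref{eq:CT_01L}; the loop over all subsets is kept because that is what the general characterization requires.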

Key Insight: (Random set theory and partial identification -- continued) In Identification Problem, lack of point identification can be traced back to the set valued predictions delivered by the model, which in turn derive from the model incompleteness defined by [20]. As stated in the Introduction, constructing the (random) set of model predictions delivered by the maintained assumptions is an exercise typically carried out in identification analysis, regardless of whether random set theory is applied. Indeed, for the problem studied in this section, [20](Figure 1) put forward the set of admissible outcomes of the game. [77] propose to work directly with this random set to characterize [math]\idr{\theta}[/math]. The fundamental advantage of this approach is that it dispenses with considering the possible selection mechanisms that may complete the model. Selection mechanisms may depend on the model's unobservables even after conditioning on observables and may constitute an infinite dimensional nuisance parameter, which creates great difficulties for the computation of [math]\idr{\theta}[/math] and for inference.

Next, I discuss the case in which the outcome of the game results from simultaneous move, mixed strategy Nash play.[Notes 24] When mixed strategies are allowed for, the model predicts multiple mixed strategy Nash equilibria (MSNE). When only pure strategies are allowed for and the model is correctly specified, the observed outcome of the game is one of the predicted PSNE. With mixed strategies, by contrast, the observed outcome is only the result of a random mixing draw from one of the predicted MSNE. Hence, the identification problem is more complex, and in order to obtain a tractable characterization of [math]\theta[/math]'s sharp identification region one needs to use different tools from random set theory.

To keep the treatment simple here I continue to consider the case of two players with two strategies, as in Identification Problem, with mixed strategies allowed for, and refer to [30](Section 3.4) for the general case. Fix [math]\vartheta\in\Theta[/math]. Let [math]\sigma_j:\{0,1\}\to [0,1][/math] denote the probability that player [math]j[/math] enters the market, with [math]1-\sigma_j[/math] the probability that she stays out. With some abuse of notation, let [math]\bu_j(\sigma_j,\sigma_{-j},\ex_j,\eps_j,\vartheta)[/math] denote the expected payoff associated with the mixed strategy profile [math]\sigma=(\sigma_1,\sigma_2)[/math]. For a given realization [math](x,e)[/math] of [math](\ex,\eps)[/math] and a given value of [math]\vartheta\in\Theta[/math], the set of mixed strategy Nash equilibria is

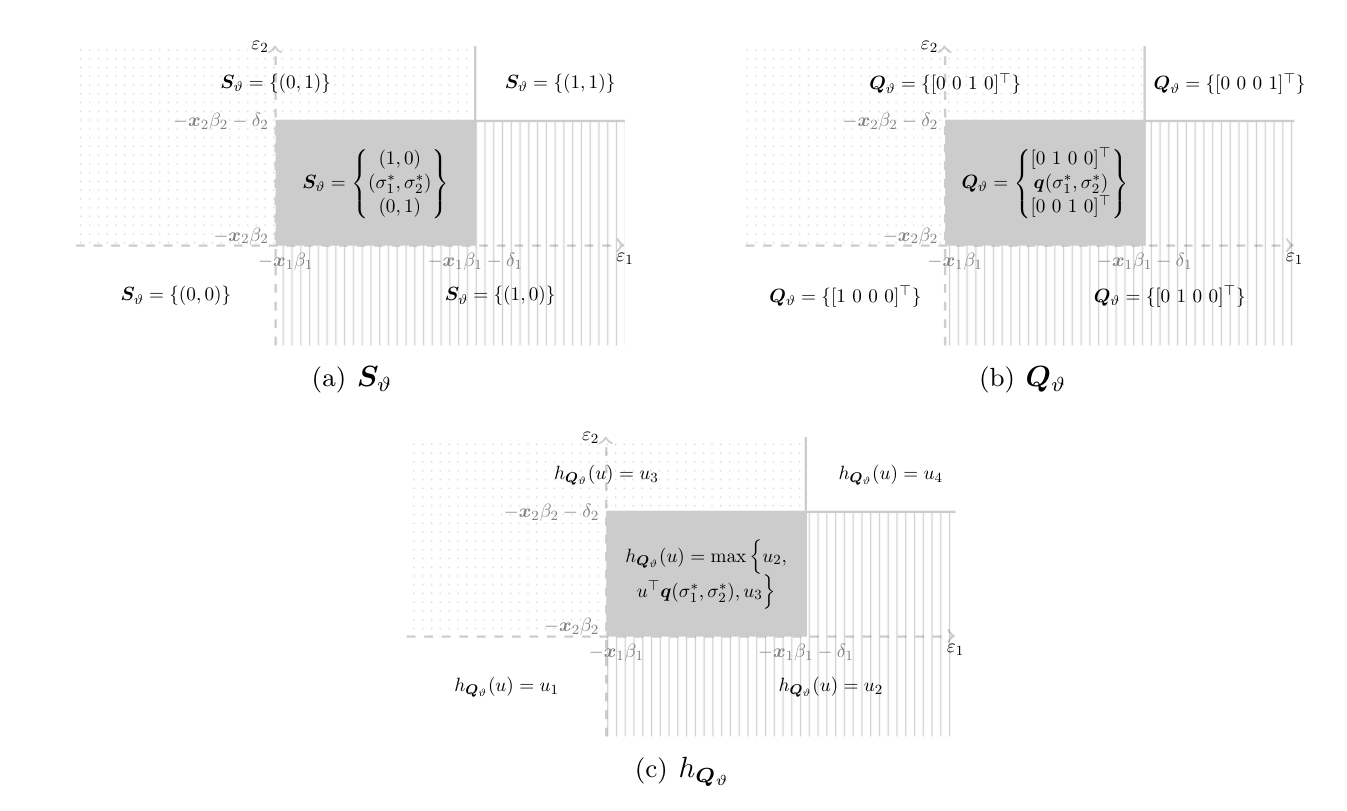

[77] show that [math]\eS_\vartheta\equiv S_\vartheta(\ex,\eps)[/math] is a random closed set in [math][0,1]^2[/math]. Its realizations are illustrated in Panel (a) of Figure as a function of [math](\eps_1,\eps_2)[/math].[Notes 25]

Define the set of possible multinomial distributions over outcomes of the game associated with the selections [math]\sigma[/math] of each possible realization of [math]\eS_{\vartheta}[/math] as

As [math]\eQ_\vartheta[/math] is the image of a continuous map applied to the random compact set [math]\eS_\vartheta[/math], it is a random compact set. Its realizations are plotted in Panel (b) of Figure as a function of [math](\eps_1,\eps_2)[/math].
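For a single realization of [math]\eps[/math], the sets [math]\eS_\vartheta[/math] and [math]\eQ_\vartheta[/math] can be computed directly in the two player, two action case. The Python sketch below is an illustration under stated assumptions: entry payoffs [math]\ey_j(a_j+d_j\ey_{-j}+\eps_j)[/math] with [math]d_j \lt 0[/math], generic draws of [math]\eps[/math] (no ties), and independent mixing across players, so that [math]\eq(\sigma)[/math] has entries given by products of the entry probabilities. The function names are hypothetical.

```python
import numpy as np

def msne_set(a, d, e):
    """Equilibrium entry probabilities (sigma_1, sigma_2) for one draw e.

    Assumed payoff from entry: a_j + d_j * y_{-j} + e_j, with d_j < 0.
    Pure strategy equilibria appear as degenerate mixed strategies.
    """
    m = a + e  # profit from entering when the rival stays out
    q = m + d  # profit from entering when the rival also enters
    S = []
    if m[0] <= 0 and m[1] <= 0:
        S.append((0.0, 0.0))
    if m[0] >= 0 and q[1] <= 0:
        S.append((1.0, 0.0))
    if q[0] <= 0 and m[1] >= 0:
        S.append((0.0, 1.0))
    if q[0] >= 0 and q[1] >= 0:
        S.append((1.0, 1.0))
    if np.all(m > 0) and np.all(q < 0):
        # Each player mixes so that the rival is indifferent between
        # entering and staying out: m_j + sigma_{-j} * d_j = 0.
        S.append((-m[1] / d[1], -m[0] / d[0]))
    return S

def q_of_sigma(sigma):
    """Distribution over outcomes (0,0), (1,0), (0,1), (1,1) implied by sigma."""
    s1, s2 = sigma
    return np.array([(1 - s1) * (1 - s2), s1 * (1 - s2), (1 - s1) * s2, s1 * s2])

a, d = np.array([0.5, 0.2]), np.array([-1.0, -0.8])
S = msne_set(a, d, np.array([0.0, 0.0]))  # a draw in the region of multiplicity
Q = [q_of_sigma(s) for s in S]            # the corresponding realization of Q
```

In the region of multiplicity this returns the two pure strategy equilibria and one strictly mixed equilibrium; elsewhere it returns a single pure strategy equilibrium.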

The multinomial distribution over outcomes of the game determined by a given [math]\sigma\in\eS_\vartheta[/math] is a function of [math]\eps[/math]. To obtain the predicted distribution over outcomes of the game conditional on observed payoff shifters only, one needs to integrate out the unobservable payoff shifters [math]\eps[/math]. Doing so requires care, as it needs to be done for each [math]\eq(\sigma)\in\eQ_\vartheta[/math]. First, observe that all the [math]\eq(\sigma)\in\eQ_\vartheta[/math] are contained in the [math]3[/math] dimensional unit simplex, and are therefore integrable. Next, define the conditional selection expectation (see Definition) of [math]\eQ_\vartheta[/math] as

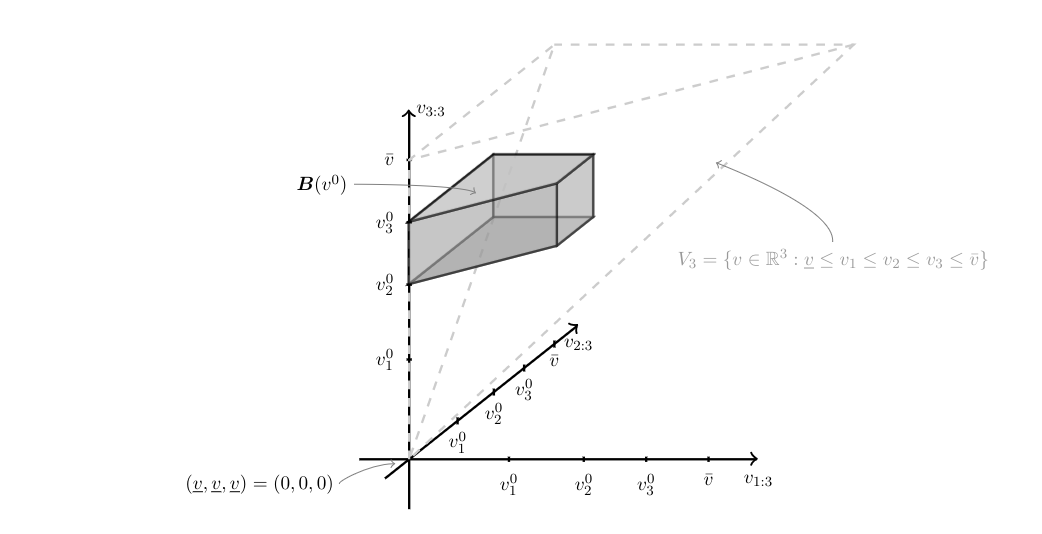

where [math]\Sel(\eS_\vartheta)[/math] is the set of all measurable selections from [math]\eS_\vartheta[/math], see Definition. By construction, [math]\E_{\Phi_r}(\eQ_\vartheta|\ex)[/math] is the set of probability distributions over action profiles conditional on [math]\ex[/math] which are consistent with the maintained modeling assumptions, i.e., with all the model's implications (including the assumption that [math]\eps\sim\Phi_r[/math]). If the model is correctly specified, there exists at least one vector [math]\theta\in\Theta[/math] such that the observed conditional distribution [math]\cp(\ex)\equiv[\sP(\ey=y^1|\ex),\dots,\sP(\ey=y^4|\ex)]^\top[/math] almost surely belongs to the set [math]\E_{\Phi_\rho}(\eQ_\theta|\ex)[/math]. Indeed, by the definition of [math]\E_{\Phi_\rho}(\eQ_\theta|\ex)[/math], [math]\cp(\ex)\in \E_{\Phi_\rho}(\eQ_\theta|\ex)[/math] almost surely if and only if there exists [math]\eq\in \Sel(\eQ_\theta)[/math] such that [math]\E_{\Phi_\rho}(\eq|\ex)=\cp(\ex)[/math] almost surely, with [math]\Sel(\eQ_\theta)[/math] the set of all measurable selections from [math]\eQ_\theta[/math]. Hence, the collection of parameter vectors [math]\vartheta\in\Theta[/math] that are observationally equivalent to the data generating value [math]\theta[/math] is given by the ones that satisfy [math]\cp(\ex)\in \E_{\Phi_r}(\eQ_\vartheta|\ex)[/math] almost surely. In turn, observing that by Theorem the set [math]\E_{\Phi_r}(\eQ_\vartheta|\ex)[/math] is convex, we have that [math]\cp(\ex)\in \E_{\Phi_r}(\eQ_\vartheta|\ex)[/math] if and only if [math]u^\top \cp(\ex)\leq h_{\E_{\Phi_r}(\eQ_\vartheta|\ex)}(u)[/math] for all [math]u[/math] in the unit ball (see, e.g., [79](Theorem 13.1)), where [math]h_{\E_{\Phi_r}(\eQ_\vartheta|\ex)}(u)[/math] is the support function of [math]\E_{\Phi_r}(\eQ_\vartheta|\ex)[/math], see Definition.

Under the assumptions in Identification Problem, allowing for mixed strategies and with the observed outcomes of the game resulting from mixed strategy Nash play, the sharp identification region for [math]\theta[/math] is

where [math]\mu[/math] is any probability measure on [math]\mathbb{B}^{|\cY|}[/math], and [math]|\cY|=4[/math] in this case.

Proof. Theorem (equation \eqref{eq:dom_Aumann:cond}) yields \eqref{eq:SIR_sharp_mixed_sup}, because by the arguments given before the theorem, [math]\idr{\theta}=\{\vartheta \in \Theta:\;\cp(\ex)\in \E_{\Phi_r}(\eQ_\vartheta|\ex),\ex\text{-a.s.}\}[/math]. The result in \eqref{eq:SIR_sharp_mixed_int} follows because the integrand in \eqref{eq:SIR_sharp_mixed_int} is continuous in [math]u[/math] and both conditions inside the curly brackets are satisfied if and only if [math]u^\top \cp(\ex)-\E_{\Phi_r}[h_{\eQ_\vartheta}(u)|\ex]\leq 0[/math] [math]\forall u\in \mathbb{B}^{|\cY|}[/math] [math]\ex[/math]-a.s.

For a fixed [math]u\in\mathbb{B}^4[/math], the possible realizations of [math]h_{\eQ_\vartheta}(u)[/math] are plotted in Panel (c) of Figure as a function of [math](\eps_1,\eps_2)[/math]. The expectation of [math]h_{\eQ_\vartheta}(u)[/math] is quite straightforward to compute, whereas calculating the set [math]\E_{\Phi_r}(\eQ_\vartheta|\ex)[/math] is computationally prohibitive in many cases. Hence, the characterization in \eqref{eq:SIR_sharp_mixed_sup} is computationally attractive, because for each [math]\vartheta\in\Theta[/math] it requires maximizing an easy-to-compute superlinear, hence concave, function over a convex set, and checking whether the resulting objective value vanishes. Several efficient algorithms in convex programming are available to solve this problem; see, for example, CVX, the MATLAB software for disciplined convex programming [80]. Nonetheless, [math]\idr{\theta}[/math] itself is not necessarily convex, hence tracing out its boundary is non-trivial. I return to computational challenges in partial identification in Section.
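The support function condition [math]u^\top \cp(\ex)\leq\E_{\Phi_r}[h_{\eQ_\vartheta}(u)|\ex][/math] can be approximated by simulation at a fixed value of [math]\ex[/math]. The Python sketch below is a rough illustration rather than the convex program described above: it evaluates the condition on a finite set of directions [math]u[/math] (random unit vectors plus the signed coordinate vectors), so a nonpositive gap is only a necessary check. It assumes entry payoffs [math]\ey_j(a_j+d_j\ey_{-j}+\eps_j)[/math] with [math]d_j \lt 0[/math]; the function names, the parameter values, and the selection rule used to generate the illustrative data are hypothetical.

```python
import numpy as np

def equilibrium_distributions(a, d, eps):
    """Outcome distributions q(sigma) of five candidate equilibria per draw
    (four pure, one mixed), plus a mask of which candidates are equilibria."""
    m = a + eps   # profit from entering when the rival stays out
    dq = m + d    # profit from entering when the rival also enters
    n = len(eps)
    # Mixed candidate: sigma_1 = -m_2/d_2, sigma_2 = -m_1/d_1 (clipped when invalid)
    mix = np.clip(-m[:, ::-1] / d[::-1], 0.0, 1.0)
    pure = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    sigma = np.concatenate([np.broadcast_to(pure, (n, 4, 2)), mix[:, None, :]], axis=1)
    s1, s2 = sigma[..., 0], sigma[..., 1]
    q = np.stack([(1 - s1) * (1 - s2), s1 * (1 - s2), (1 - s1) * s2, s1 * s2], axis=-1)
    valid = np.column_stack([
        (m[:, 0] <= 0) & (m[:, 1] <= 0),
        (m[:, 0] >= 0) & (dq[:, 1] <= 0),
        (dq[:, 0] <= 0) & (m[:, 1] >= 0),
        (dq[:, 0] >= 0) & (dq[:, 1] >= 0),
        (m > 0).all(axis=1) & (dq < 0).all(axis=1),
    ])
    return q, valid

def expected_support(u, q, valid):
    """Simulated E[h_Q(u)]: average over draws of the max of u'q over equilibria."""
    vals = np.where(valid, q @ u, -np.inf)
    return vals.max(axis=1).mean()

def max_gap(p_obs, q, valid, n_dir=500, seed=0):
    """Largest u'p - E[h_Q(u)] over a finite set of unit-norm directions u."""
    rng = np.random.default_rng(seed)
    U = rng.standard_normal((n_dir, 4))
    U /= np.linalg.norm(U, axis=1, keepdims=True)
    U = np.vstack([U, np.eye(4), -np.eye(4)])
    return max(u @ p_obs - expected_support(u, q, valid) for u in U)

rng = np.random.default_rng(1)
eps = rng.multivariate_normal(np.zeros(2), [[1.0, 0.3], [0.3, 1.0]], size=20_000)
a, d = np.array([0.5, 0.2]), np.array([-1.0, -0.8])
q, valid = equilibrium_distributions(a, d, eps)
# Hypothetical DGP: always select the first valid equilibrium in the list.
q_sel = q[np.arange(len(eps)), valid.argmax(axis=1)]
p_obs = q_sel.mean(axis=0)
gap_true = max_gap(p_obs, q, valid)
gap_wrong = max_gap(p_obs, *equilibrium_distributions(a + 3.0, d, eps))
```

Because the illustrative data are generated from a selection of the same simulated equilibria, the gap at the generating parameter value is nonpositive up to floating point error, while the shifted parameter value produces a clearly positive gap. Replacing the finite set of directions with a convex optimization over the unit ball would follow the approach described in the text more closely.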

Key Insight: (Random set theory and partial identification -- continued) [77] provide a general characterization of sharp identification regions for models with convex moment predictions. These are models that for a given [math]\vartheta\in\Theta[/math] and realization of observable variables, predict a set of values for a vector of variables of interest. This set is not necessarily convex, as exemplified by [math]\eY_\vartheta[/math] and [math]\eQ_\vartheta[/math], which are finite. No restriction is placed on the manner in which, in the DGP, a specific model prediction is selected from this set. When the researcher takes conditional expectations of the resulting elements of this set, the unrestricted process of selection yields a convex set of moments for the model variables (all possible mixtures). This is the model's convex set of moment predictions. If this set were almost surely single valued, the researcher would learn (features of) [math]\theta[/math] by solving moment equality conditions involving the observed variables and predicted ones. The approach reviewed in this section is a set-valued method of moments that extends the singleton-valued one commonly used in econometrics.

I conclude this section discussing the case of static, simultaneous move finite games of incomplete information, using the results in [77](Supplementary Appendix C).[Notes 26] For clarity, I formalize the maintained assumptions.