Sums of Discrete Random Variables

In this chapter we turn to the important question of determining the distribution of a sum of independent random variables in terms of the distributions of the individual constituents. In this section we consider only sums of discrete random variables, reserving the case of continuous random variables for the next section. We consider here only random variables whose values are integers. Their distribution functions are then defined on these integers. We shall find it convenient to assume here that these distribution functions are defined for all integers, by defining them to be~0 where they are not otherwise defined.

Convolutions

Suppose [math]X[/math] and [math]Y[/math] are two independent discrete random variables with distribution functions [math]m_1(x)[/math] and [math]m_2(x)[/math]. Let [math]Z = X+Y[/math]. We would like to determine the distribution function [math]m_3(x)[/math] of [math]Z[/math]. To do this, it is enough to determine the probability that [math]Z[/math] takes on the value [math]z[/math], where [math]z[/math] is an arbitrary integer. Suppose that [math]X = k[/math], where [math]k[/math] is some integer. Then [math]Z = z[/math] if and only if [math]Y = z-k[/math]. So the event [math]Z = z[/math] is the union of the pairwise disjoint events

where [math]k[/math] runs over the integers. Since these events are pairwise disjoint, we have

Thus, we have found the distribution function of the random variable [math]Z[/math]. This leads to the following definition.

Let [math]X[/math] and [math]Y[/math] be two independent integer-valued random variables, with distribution functions [math]m_1(x)[/math] and [math]m_2(x)[/math] respectively. Then the convolution of [math]m_1(x)[/math] and [math]m_2(x)[/math] is the distribution function [math]m_3 = m_1*m_2[/math] given by

It is easy to see that the convolution operation is commutative, and it is straightforward to

show that it is also associative.

Now let [math]S_n = X_1 + X_2 +\cdots+ X_n[/math] be the sum of [math]n[/math]

independent random variables of an independent trials process with common distribution

function [math]m[/math] defined on the integers. Then the distribution function of [math]S_1[/math] is [math]m[/math]. We can

write

Thus, since we know the distribution function of [math]X_n[/math] is [math]m[/math], we can find the distribution function of [math]S_n[/math] by induction.

Example A die is rolled twice. Let [math]X_1[/math] and [math]X_2[/math] be the outcomes, and let [math]S_2 = X_1 + X_2[/math] be the sum of these outcomes. Then [math]X_1[/math] and [math]X_2[/math] have the common distribution function:

The distribution function of [math]S_2[/math] is then the convolution of this distribution with itself. Thus,

Continuing in this way we would find [math]P(S_2 = 5) = 4/36[/math], [math]P(S_2 = 6) = 5/36[/math], [math]P(S_2 = 7) = 6/36[/math], [math]P(S_2 = 8) = 5/36[/math], [math]P(S_2 = 9) = 4/36[/math], [math]P(S_2 = 10) = 3/36[/math], [math]P(S_2 = 11) = 2/36[/math], and [math]P(S_2 = 12) = 1/36[/math]. The distribution for [math]S_3[/math] would then be the convolution of the distribution for [math]S_2[/math] with the distribution for [math]X_3[/math]. Thus

and so forth.

This is clearly a tedious job, and a program should be written to carry out this calculation. To do this we first write a program to form the convolution of two densities [math]p[/math] and [math]q[/math] and return the density [math]r[/math]. We can then write a program to find

the density for the sum [math]S_n[/math] of [math]n[/math] independent random variables with a common density [math]p[/math], at least in the case that the random variables have a finite number of possible values.

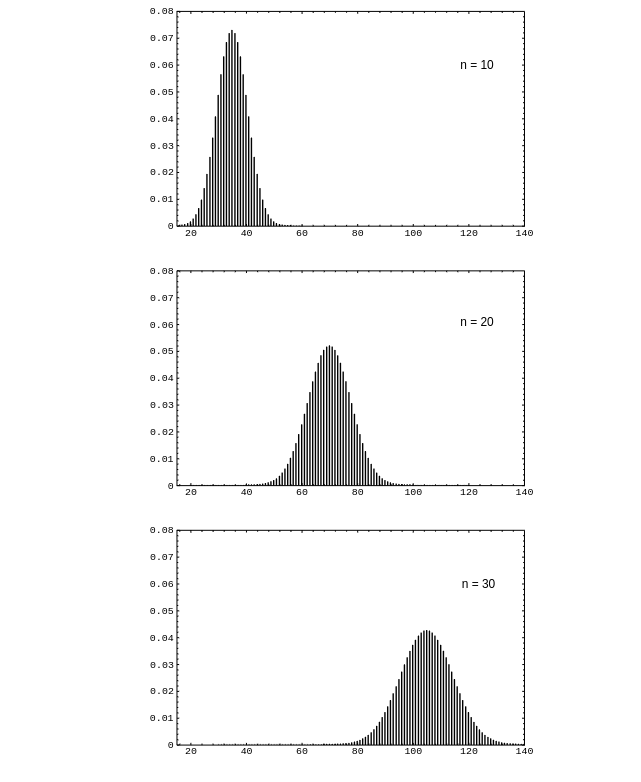

Running this program for the example of rolling a die [math]n[/math] times for [math]n = 10,\ 20,\ 30[/math] results in the distributions shown in Figure. We see that, as in the case of Bernoulli trials, the distributions become bell-shaped. We shall discuss in Central Limit Theorem a very general theorem called the Central Limit Theorem that will explain this phenomenon.

Example A well-known method for evaluating a bridge hand is: an ace is assigned a value of 4, a king 3, a queen 2, and a jack 1. All other cards are assigned a value of 0. The point count of the hand is then the sum of the values of the cards in the hand. (It is actually more complicated than this, taking into account voids in suits, and so forth, but we consider here this simplified form of the point count.) If a card is dealt at random to a player, then the point count for this card has distribution

Let us regard the total hand of 13 cards as 13 independent trials with this common distribution. (Again this is not quite correct because we assume here that we are always choosing a card from a full deck.) Then the distribution for the point count [math]C[/math] for the hand can be found from the program NFoldConvolution by using the distribution for a single card and choosing [math]n = 13[/math]. A player with a point count of 13 or more is said to have an opening bid. The probability of having an opening bid is then

Since we have the distribution of [math]C[/math], it is easy to compute this probability. Doing this we find that

so that about one in four hands should be an opening bid according to this simplified model. A more realistic discussion of this problem can be found in Epstein, The Theory of Gambling and Statistical Logic.[Notes 1]

For certain special distributions it is possible to find an expression for the

distribution that results from convoluting the distribution with itself [math]n[/math] times.

The convolution of two binomial distributions, one with

parameters

[math]m[/math] and

[math]p[/math] and the other with parameters [math]n[/math] and [math]p[/math], is a binomial distribution with parameters [math](m+n)[/math]

and

[math]p[/math]. This fact follows easily from a consideration of the experiment which consists of first

tossing a coin [math]m[/math] times, and then tossing it [math]n[/math] more times.

The convolution of [math]k[/math] geometric distributions with

common parameter

[math]p[/math] is a negative binomial distribution with parameters [math]p[/math] and [math]k[/math]. This can be seen by

considering the experiment which consists of tossing a coin until the [math]k[/math]th head appears.

General references

Doyle, Peter G. (2006). "Grinstead and Snell's Introduction to Probability" (PDF). Retrieved June 6, 2024.