ResNet

Content for this page was copied verbatim from Herberg, Evelyn (2023). "Lecture Notes: Neural Network Architectures". arXiv:2304.05133 [cs.LG].

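We have seen in the FNN example that for an FNN with depth [math]L[/math] we have the derivative

[math]\frac{\partial y^{[L]}}{\partial W^{[\ell-1]}} = \left( \prod_{j=\ell+1}^{L} \frac{\partial y^{[j]}}{\partial y^{[j-1]}} \right) \frac{\partial y^{[\ell]}}{\partial W^{[\ell-1]}}, \quad \ell = 1,\ldots,L.[/math]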

For a very deep network, i.e. large [math]L[/math], the product in the derivative can be problematic [1][2], especially for derivatives with respect to variables from early layers, since the product then contains many factors. Two cases may occur:

- If [math]\left| \frac{\partial y^{[j]}}{\partial y^{[j-1]}} \right| \le c \lt 1[/math] for all [math]j[/math] and some constant [math]c[/math], the product, and hence the whole derivative, tends to zero for growing [math]L[/math]. This is referred to as the vanishing gradient problem.

- On the other hand, if [math]\left| \frac{\partial y^{[j]}}{\partial y^{[j-1]}} \right| \ge c \gt 1[/math] for all [math]j[/math] and some constant [math]c[/math], the product, and hence the whole derivative, tends to infinity in absolute value for growing [math]L[/math]. This is referred to as the exploding gradient problem.

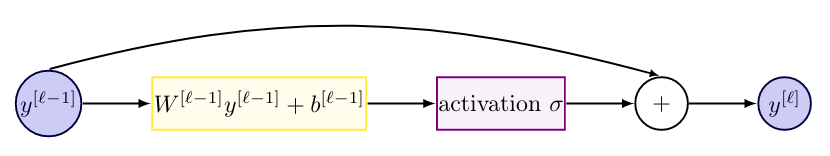

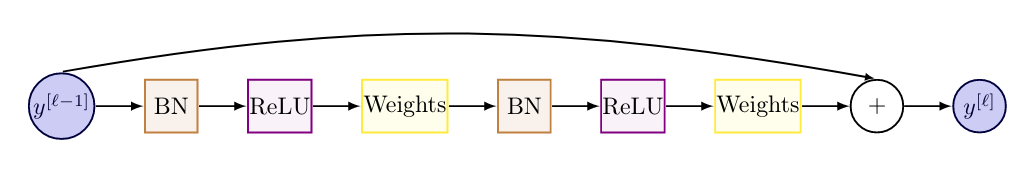
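To get a feeling for how quickly the product in the derivative degenerates, the following Python sketch multiplies identical scalar factors. It assumes, purely for illustration, that every layer contributes the same factor [math]\partial y^{[j]}/\partial y^{[j-1]}[/math]; the values 0.5 and 1.5 are hypothetical.

```python
def chain_product(factor: float, depth: int) -> float:
    """Product of `depth` identical chain-rule factors dy^[j]/dy^[j-1].

    Hypothetical simplification: every layer contributes the same factor.
    """
    result = 1.0
    for _ in range(depth):
        result *= factor
    return result


for depth in (10, 50, 100):
    vanishing = chain_product(0.5, depth)  # |factor| < 1
    exploding = chain_product(1.5, depth)  # |factor| > 1
    print(f"L = {depth:3d}: 0.5^L = {vanishing:.2e}, 1.5^L = {exploding:.2e}")
```

Already for [math]L = 100[/math] the first product is below [math]10^{-30}[/math], while the second exceeds [math]10^{17}[/math].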

Residual Networks (ResNets) were developed in [3][4] to address the vanishing gradient problem. Employing the same notation as in FNNs, simplified ResNet layers can be represented in the following way

[math]y^{[\ell]} = y^{[\ell-1]} + \sigma\left(W^{[\ell-1]} y^{[\ell-1]} + b^{[\ell-1]}\right), \quad \ell = 1,\ldots,L,[/math]

with [math]y^{[0]} = u[/math] the input data. Essentially, a ResNet is an FNN with an added skip connection, i.e. the term [math]+y^{[\ell-1]}[/math], cf. Figure.

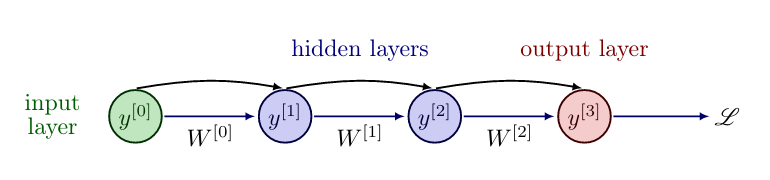
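To make the role of the skip connection concrete, the following Python sketch implements a one-node FNN layer and the simplified ResNet layer side by side. The choice [math]\sigma = \tanh[/math], the depth of five layers and the input value 0.7 are assumptions for illustration. With all weights and biases set to zero we have [math]\tanh(0) = 0[/math], so each ResNet layer reduces to the identity [math]y^{[\ell]} = y^{[\ell-1]}[/math], whereas the FNN maps every input to zero.

```python
import math


def fnn_layer(y_prev, w, b, sigma=math.tanh):
    """Plain FNN layer with one node: y = sigma(w * y_prev + b)."""
    return sigma(w * y_prev + b)


def resnet_layer(y_prev, w, b, sigma=math.tanh):
    """Simplified ResNet layer with one node: y = y_prev + sigma(w * y_prev + b)."""
    return y_prev + sigma(w * y_prev + b)


def forward(u, weights, biases, layer):
    """Propagate the input u = y^[0] through all layers and return y^[L]."""
    y = u
    for w, b in zip(weights, biases):
        y = layer(y, w, b)
    return y


u = 0.7
zeros = [0.0] * 5
print(forward(u, zeros, zeros, fnn_layer))     # 0.0
print(forward(u, zeros, zeros, resnet_layer))  # 0.7
```

In other words, the skip connection makes it easy for a layer to leave its input unchanged, which a plain FNN layer cannot do with zero parameters.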

We now revisit the simple FNN with two hidden layers from the earlier example and add skip connections to turn it into a ResNet, see Figure.

Example

Consider a simple ResNet with one node per layer and assume that we only consider weights [math]W^{[\ell]} \in \mathbb{R}[/math] and no biases. For the network in Figure we have [math]\theta = (W^{[0]},W^{[1]},W^{[2]})^{\top}[/math], i.e. depth [math]L = 3[/math].

We define for [math]\ell = 1,\ldots,L[/math]

[math]a^{[\ell]} := \sigma\left(W^{[\ell-1]} y^{[\ell-1]}\right),[/math]

so that in the ResNet setup

[math]y^{[\ell]} = y^{[\ell-1]} + a^{[\ell]}.[/math]

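Computing the components of the gradient, we employ the chain rule to obtain e.g.

[math]\frac{\partial y^{[3]}}{\partial W^{[0]}} = \frac{\partial y^{[3]}}{\partial y^{[2]}} \frac{\partial y^{[2]}}{\partial y^{[1]}} \frac{\partial y^{[1]}}{\partial W^{[0]}} = \left( \frac{\partial a^{[3]}}{\partial y^{[2]}} + \mathbb{I} \right) \left( \frac{\partial a^{[2]}}{\partial y^{[1]}} + \mathbb{I} \right) \frac{\partial a^{[1]}}{\partial W^{[0]}},[/math]

where [math]\mathbb{I}[/math] denotes the identity. In general for depth [math]L[/math], we get

[math]\frac{\partial y^{[L]}}{\partial W^{[\ell-1]}} = \left( \prod_{j=\ell+1}^{L} \left( \frac{\partial a^{[j]}}{\partial y^{[j-1]}} + \mathbb{I} \right) \right) \frac{\partial a^{[\ell]}}{\partial W^{[\ell-1]}}, \quad \ell = 1,\ldots,L.[/math]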

If we generalize the derivative for depth [math]L[/math] above to ResNet architectures with parameters other than the weights [math]W^{[\ell]}[/math] (e.g. biases), see e.g. [5] (Theorem 6.1), the structure of the product in the derivative remains the same, i.e. each factor also contains an identity term.
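The product formula can be checked numerically. The following Python sketch assumes [math]\sigma = \tanh[/math], no biases, hypothetical weight values and depth [math]L = 3[/math], and indexes the weights as in the example, with [math]a^{[\ell]} = \tanh(W^{[\ell-1]} y^{[\ell-1]})[/math]. For a weight [math]W^{[k]}[/math] it evaluates [math]\partial y^{[L]}/\partial W^{[k]} = \prod_{j=k+2}^{L} \left(\partial a^{[j]}/\partial y^{[j-1]} + 1\right) \partial a^{[k+1]}/\partial W^{[k]}[/math] and compares it with a central finite difference.

```python
import math


def dtanh(z):
    """Derivative of tanh."""
    return 1.0 - math.tanh(z) ** 2


def forward(u, weights):
    """Scalar ResNet without biases: y^[l] = y^[l-1] + tanh(W^[l-1] * y^[l-1]).

    Returns the list [y^[0], ..., y^[L]].
    """
    ys = [u]
    for w in weights:
        ys.append(ys[-1] + math.tanh(w * ys[-1]))
    return ys


def grad_product_formula(u, weights, k):
    """d y^[L] / d W^[k] via the product formula with the identity term (+1)."""
    ys = forward(u, weights)
    L = len(weights)
    # Local factor d a^[k+1] / d W^[k] = tanh'(W^[k] y^[k]) * y^[k]
    grad = dtanh(weights[k] * ys[k]) * ys[k]
    # Factors (d a^[j] / d y^[j-1] + 1) for j = k+2, ..., L
    for j in range(k + 2, L + 1):
        w = weights[j - 1]
        grad *= dtanh(w * ys[j - 1]) * w + 1.0
    return grad


def grad_finite_difference(u, weights, k, h=1e-6):
    """Central finite-difference approximation of d y^[L] / d W^[k]."""
    plus, minus = list(weights), list(weights)
    plus[k] += h
    minus[k] -= h
    return (forward(u, plus)[-1] - forward(u, minus)[-1]) / (2.0 * h)


u, weights = 0.5, [0.8, -0.3, 1.2]  # hypothetical values for W^[0], W^[1], W^[2]
for k in range(len(weights)):
    print(k, grad_product_formula(u, weights, k), grad_finite_difference(u, weights, k))
```

Both columns should agree up to the finite-difference error.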

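Remember that for FNNs it holds [math]y^{[j]} = a^{[j]}[/math], i.e. the fraction in the product coincides in both cases. However, due to the added identity, even if

[math]0 \le \frac{\partial a^{[j]}}{\partial y^{[j-1]}} \lt 1[/math]

holds for all [math]j[/math], we will not encounter vanishing gradients in the ResNet architecture: in the scalar setting of the example, every factor [math]\frac{\partial a^{[j]}}{\partial y^{[j-1]}} + 1[/math] is at least 1, so the product cannot tend to zero. The exploding gradients problem can still occur.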
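The effect of the identity term can be seen by comparing the FNN product [math]\prod_j \partial a^{[j]}/\partial y^{[j-1]}[/math] with the ResNet product [math]\prod_j \left(\partial a^{[j]}/\partial y^{[j-1]} + 1\right)[/math]. The sketch below assumes identical scalar factors in every layer, with the hypothetical values 0.01 and 0.5.

```python
import math


def fnn_product(factors):
    """FNN: prod_j d a^[j]/d y^[j-1]  (since y^[j] = a^[j])."""
    return math.prod(factors)


def resnet_product(factors):
    """ResNet, scalar case: prod_j (d a^[j]/d y^[j-1] + 1)."""
    return math.prod(f + 1.0 for f in factors)


for f in (0.01, 0.5):  # hypothetical per-layer factors in [0, 1)
    for depth in (10, 100):
        factors = [f] * depth
        print(f"f = {f}, L = {depth}: FNN {fnn_product(factors):.2e}, "
              f"ResNet {resnet_product(factors):.2e}")
```

The FNN product vanishes in both cases, while the ResNet product stays at least 1. For the factor 0.5 the ResNet product grows like [math]1.5^L[/math], which illustrates that exploding gradients are still possible.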

We will see in Section Different ResNet Versions that there exist several versions of ResNets. However, from a mathematical point of view the simplified ResNet layer introduced above is especially interesting, because it can be related to ordinary differential equations (ODEs), as first done in [6]. Inserting a parameter [math]\tau^{[\ell]} \in \mathbb{R}[/math] in front of the activation function [math]\sigma[/math] and rearranging the terms of the forward propagation yields

[math]\frac{y^{[\ell]} - y^{[\ell-1]}}{\tau^{[\ell]}} = \sigma\left(W^{[\ell-1]} y^{[\ell-1]} + b^{[\ell-1]}\right), \quad \ell = 1,\ldots,L.[/math]

Here, we consider the same activation function [math]\sigma[/math] for all layers. Now, the left-hand side of the equation can be interpreted as a finite difference approximation of a time derivative, where [math]\tau^{[\ell]}[/math] is the time step size and [math]y^{[\ell]}, y^{[\ell-1]}[/math] are the values attained at two neighboring points in time. In other words, the forward propagation is an explicit (forward) Euler discretization of an ODE of the form [math]\dot{y}(t) = \sigma\left(W(t) y(t) + b(t)\right)[/math]. This relation between ResNets and ODEs is also studied under the name of Neural ODEs [7]. It is also possible to learn the time step size [math]\tau^{[\ell]}[/math] as an additional variable [5].
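The forward Euler view can be illustrated with a scalar example. The following Python sketch assumes one node, ReLU activation, weights shared across all layers (which corresponds to a time-invariant ODE) and a constant step [math]\tau = T/L[/math], so that each layer computes [math]y^{[\ell]} = y^{[\ell-1]} + \tau\, \sigma(w\, y^{[\ell-1]} + b)[/math]. The values of [math]y^{[0]}[/math], [math]w[/math] and [math]T[/math] are hypothetical.

```python
import math


def relu(z):
    return max(z, 0.0)


def resnet_forward(y0, w, b, T, L, sigma=relu):
    """Simplified ResNet with L layers, shared weights (w, b) and step tau = T / L:
    y^[l] = y^[l-1] + tau * sigma(w * y^[l-1] + b), i.e. forward Euler for
    dy/dt = sigma(w * y + b) on [0, T]."""
    tau = T / L
    y = y0
    for _ in range(L):
        y = y + tau * sigma(w * y + b)
    return y


# For y0 > 0, w > 0 and b = 0 the state stays positive, so ReLU acts as the
# identity and the ODE dy/dt = w * y has the exact solution y0 * exp(w * T).
y0, w, T = 1.0, 0.8, 1.0
exact = y0 * math.exp(w * T)
for L in (10, 100, 1000):
    approx = resnet_forward(y0, w, 0.0, T, L)
    print(f"L = {L:4d}: y^[L] = {approx:.6f}, error = {abs(approx - exact):.2e}")
```

The error shrinks roughly by a factor of 10 whenever the depth is multiplied by 10, as expected for the first-order forward Euler method.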

Let us now introduce the different ResNet versions from the original papers [3][4].

Different ResNet Versions

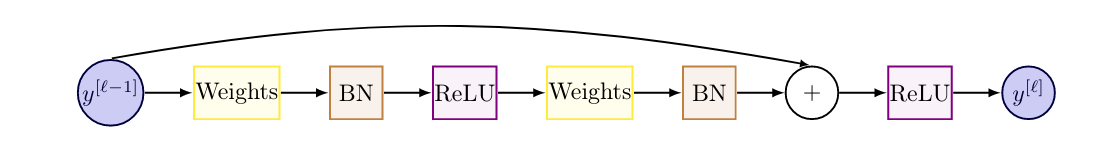

In contrast to the simplified ResNet layer introduced above, the original ResNet architectures [3] consist of residual blocks, cf. Figure. Here, several layers are grouped together into one residual block, and residual blocks are then stacked to form a ResNet.

In the residual block, cf. Figure, we have the following layer types:

- Weights: fully connected or convolutional layer,

- BN: Batch Normalization layer, cf. Section Batch Normalization,

- ReLU: activation function [math]\sigma = \text{ReLU}[/math].

Clearly, the residual block and the simplified ResNet layer both contain a skip connection, which is integral to the success of ResNets, since it helps avoid the vanishing gradient problem. However, the residual block is harder to interpret from a mathematical point of view and cannot directly be related to ODEs.

In frameworks like TensorFlow and PyTorch, a network architecture called "ResNet" will usually be built by stacking residual blocks of this original form, cf. Figure.

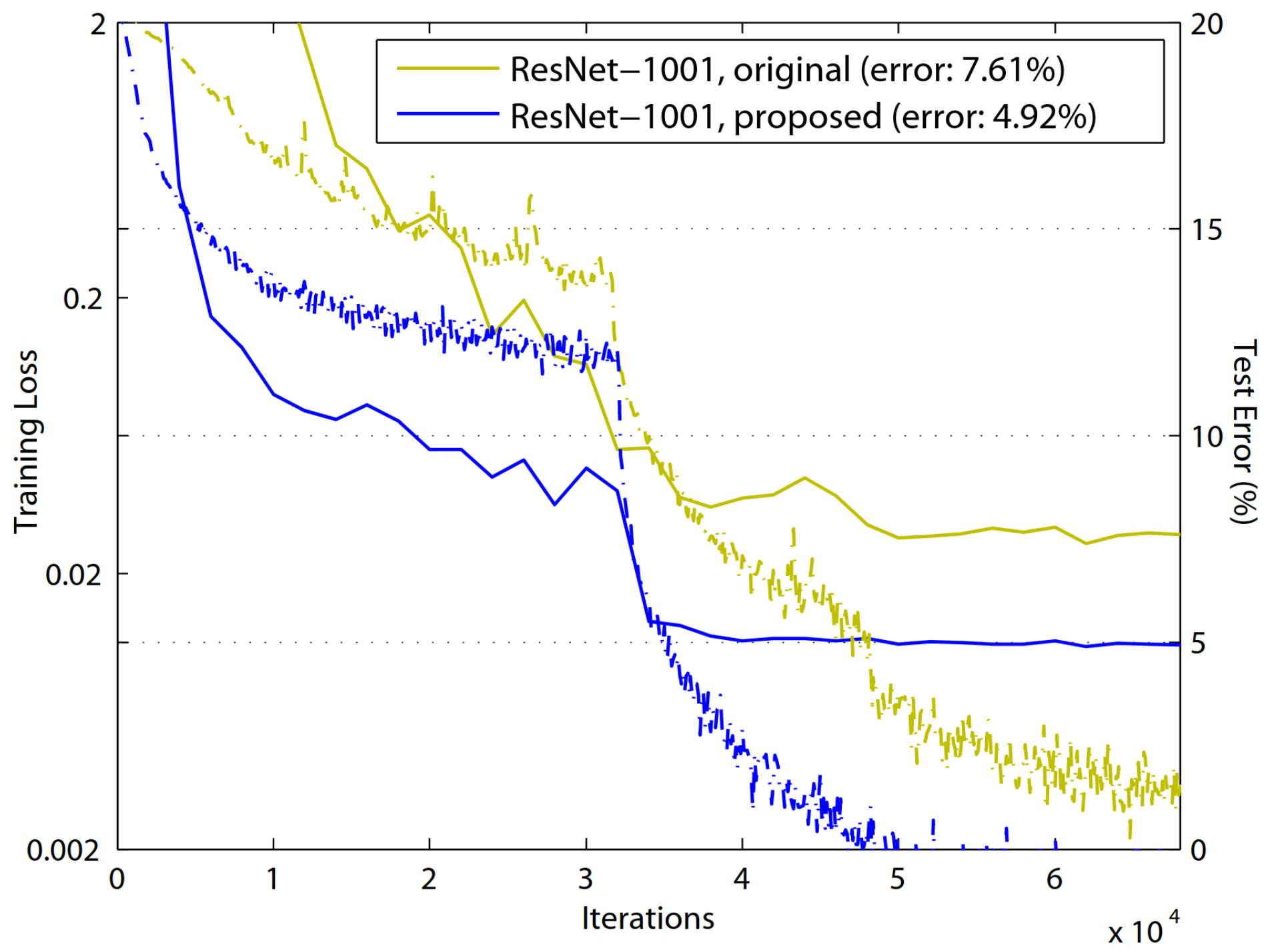

In a follow-up paper [4], several other ways to order the layers within a residual block were introduced. The option that performed best in numerical tests (see also Figure), the full pre-activation residual block, is illustrated in Figure.

A ResNet built with full pre-activation residual blocks can be found e.g. in TensorFlow under the name "ResNetV2". In the literature, there also exist other variants of the simplified ResNet layer, e.g. with a weight matrix applied outside the activation function.
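The two block layouts can be sketched in plain Python. This is not the TensorFlow or PyTorch implementation: the sketch replaces convolutions by fully connected "Weights" layers, uses batch normalization in training mode without its learnable scale and shift, and runs on hypothetical random data. It follows the layer order of the original residual block [3] (Weights, BN, ReLU, Weights, BN, addition, ReLU) and of the full pre-activation block [4] (BN, ReLU, Weights, BN, ReLU, Weights, addition).

```python
import math
import random


def linear(batch, W):
    """'Weights' layer (fully connected, no bias) applied to every sample."""
    return [[sum(w * v for w, v in zip(row, x)) for row in W] for x in batch]


def batch_norm(batch, eps=1e-5):
    """Training-mode BN with scale 1 and shift 0: normalize each feature over the batch."""
    n = len(batch)
    out = [list(x) for x in batch]
    for k in range(len(batch[0])):
        mean = sum(x[k] for x in batch) / n
        var = sum((x[k] - mean) ** 2 for x in batch) / n
        for i in range(n):
            out[i][k] = (batch[i][k] - mean) / math.sqrt(var + eps)
    return out


def relu(batch):
    return [[max(v, 0.0) for v in x] for x in batch]


def add(a, b):
    """Skip connection: element-wise sum."""
    return [[u + v for u, v in zip(x, y)] for x, y in zip(a, b)]


def original_block(x, W1, W2):
    """Original block [3]: Weights-BN-ReLU-Weights-BN, add x, then ReLU."""
    h = relu(batch_norm(linear(x, W1)))
    h = batch_norm(linear(h, W2))
    return relu(add(h, x))


def preactivation_block(x, W1, W2):
    """Full pre-activation block [4]: BN-ReLU-Weights-BN-ReLU-Weights, add x."""
    h = linear(relu(batch_norm(x)), W1)
    h = linear(relu(batch_norm(h)), W2)
    return add(h, x)


rng = random.Random(0)
d = 3
x = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(4)]  # batch of 4 samples
W1 = [[rng.gauss(0.0, 0.5) for _ in range(d)] for _ in range(d)]
W_zero = [[0.0] * d for _ in range(d)]

# With the second weight matrix set to zero, the residual branch outputs 0:
print(original_block(x, W1, W_zero) == relu(x))  # True: ReLU after the addition
print(preactivation_block(x, W1, W_zero) == x)   # True: a clean identity path
```

Setting the last weight matrix to zero exposes the design difference: in the full pre-activation block the skip path is an exact identity, while in the original block a ReLU is still applied after the addition.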

In Figure we see a comparison of a 1001-layer ResNet built with original residual blocks and a 1001-layer ResNet built with full pre-activation residual blocks (proposed in [4]). This result clearly demonstrates the advantage of full pre-activation for very deep networks, since both training loss and test error are improved with the proposed residual block.

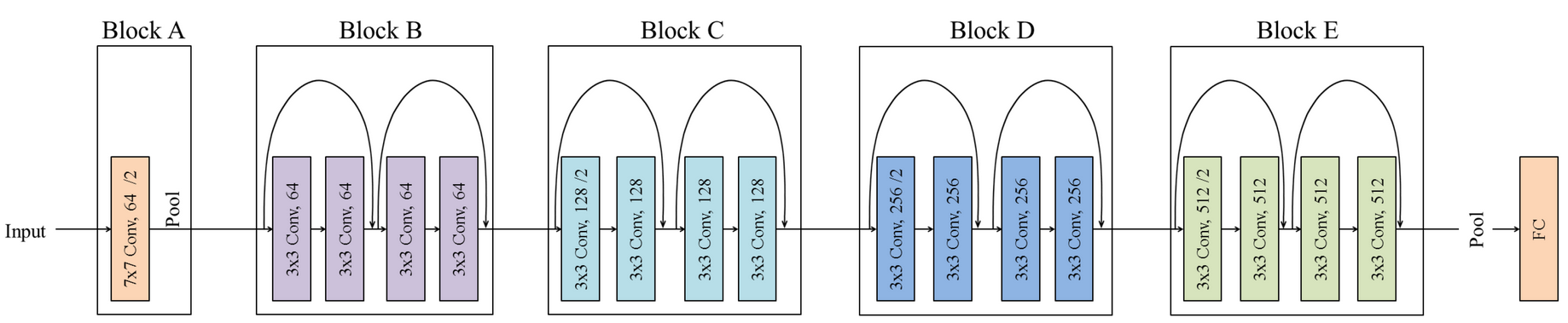

ResNet18

As an example of a ResNet architecture, we look at "ResNet18", cf. Figure. Here, 18 indicates the number of layers with learnable weights, i.e. convolutional and fully connected layers. Even though the batch normalization layers also contain learnable weights, they are typically not counted here. This ResNet architecture is intended for use on image data sets, hence the weight layers are convolutional layers, like in a CNN.

In Block A the input data is pre-processed, while Blocks B to E consist of two residual blocks each. To be certain which type of residual block the network is built of, it is recommended to look into the details of the implementation; in most cases, however, the original residual block, cf. Figure, is employed. Finally, as usual for a classification task, the data is flattened and passed through a fully connected (FC) layer before the output is generated. Altogether, "ResNet18" has over 10 million trainable parameters, i.e. variables.
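Both numbers can be reproduced with a short count. The following Python sketch assumes the standard ImageNet configuration of [3] (3 input channels, 1000 classes, channel widths 64, 128, 256 and 512 in Blocks B to E, original residual blocks, bias-free convolutions, and 1×1 projection convolutions on the skip connection where the number of channels changes). This is also the configuration of torchvision's `resnet18`; the network in the figure may differ, e.g. in the number of input channels or classes.

```python
def conv_params(k, c_in, c_out):
    """Weights of a k x k convolution without bias."""
    return k * k * c_in * c_out


def bn_params(c):
    """Learnable scale and shift of a batch normalization layer with c channels."""
    return 2 * c


def resnet18_counts(in_channels=3, num_classes=1000):
    """Return (number of weight layers, number of trainable parameters)."""
    weight_layers = 1                                  # Block A: 7x7 convolution
    params = conv_params(7, in_channels, 64) + bn_params(64)
    c_in = 64
    for c_out in (64, 128, 256, 512):                  # Blocks B to E
        for block in range(2):                         # two residual blocks each
            first_in = c_in if block == 0 else c_out
            params += conv_params(3, first_in, c_out) + bn_params(c_out)
            params += conv_params(3, c_out, c_out) + bn_params(c_out)
            weight_layers += 2
            if first_in != c_out:
                # 1x1 projection on the skip connection; by convention it is
                # not counted among the 18 layers.
                params += conv_params(1, first_in, c_out) + bn_params(c_out)
        c_in = c_out
    params += 512 * num_classes + num_classes          # FC layer with bias
    weight_layers += 1
    return weight_layers, params


print(resnet18_counts())  # (18, 11689512)
```

The 18 weight layers are 1 convolution in Block A, 16 convolutions in Blocks B to E and 1 FC layer. The parameter total includes the batch normalization parameters, even though those layers are not counted in the name.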

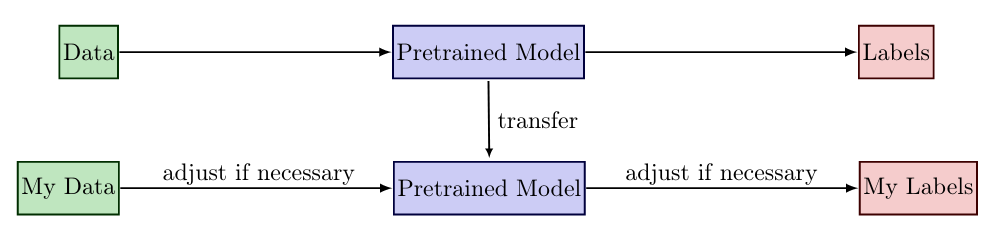

Transfer Learning

An advantage of having so many different ResNet architectures available, including pre-trained ones, is that they can be employed for transfer learning, see e.g. [9]. The main idea of transfer learning is to take a model that has been trained on a (potentially large) data set for the same type of task (e.g. image classification), and then adjust the first and/or last layer to fit your data set. Depending on your task you may need to change one or both of these layers, for example:

- Your input data has a different structure: adapt the first layer.

- Your data set has a different set of labels (supervisions): adapt the last layer.

If both your input data and your labels coincide with those of the original task, you don't need transfer learning; you can just use the pre-trained model for your task. When adapting a layer, this layer needs to be initialized, e.g. randomly. All remaining layers can be initialized with the pre-trained weights, which will most likely give a good starting point. Then you train the adapted network on your data, which will typically take a lot less time than training with a random initialization. Hence, transfer learning can save a significant amount of computing time. Clearly, transfer learning is also possible with other network architectures, as long as the network has been pre-trained.
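The adaptation step can be sketched without any framework. In the following Python sketch, a model is a hypothetical ordered dictionary that maps layer names to weight matrices (rows are outputs, columns are inputs). The function `adapt_for_transfer` and all layer names are illustrative assumptions, not a library API: the function copies all pre-trained weights and re-initializes only the first layer (different input structure) and/or the last layer (different set of labels).

```python
import random


def init_layer(n_out, n_in, rng):
    """Random initialization for an adapted layer (small uniform values)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]


def adapt_for_transfer(pretrained, new_in_features=None, new_num_classes=None, seed=0):
    """Copy pre-trained weights; re-initialize only the first and/or last layer."""
    rng = random.Random(seed)
    model = {name: [row[:] for row in W] for name, W in pretrained.items()}
    first, last = next(iter(model)), list(model)[-1]
    if new_in_features is not None:   # input data has a different structure
        model[first] = init_layer(len(model[first]), new_in_features, rng)
    if new_num_classes is not None:   # data set has a different set of labels
        model[last] = init_layer(new_num_classes, len(model[last][0]), rng)
    return model


# Hypothetical "pre-trained" model: 3 inputs -> 4 -> 4 -> 10 classes.
rng = random.Random(1)
pretrained = {
    "layer1": init_layer(4, 3, rng),
    "layer2": init_layer(4, 4, rng),
    "fc": init_layer(10, 4, rng),
}
adapted = adapt_for_transfer(pretrained, new_num_classes=2)
print({name: (len(W), len(W[0])) for name, W in adapted.items()})
# {'layer1': (4, 3), 'layer2': (4, 4), 'fc': (2, 4)}
```

Only the re-initialized layer starts from scratch; all other layers start from the pre-trained weights and are then trained further on the new data. In PyTorch, for instance, torchvision's ResNet models expose the final fully connected layer as the attribute `fc`, which is the layer one would replace to change the number of classes.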

General references

Herberg, Evelyn (2023). "Lecture Notes: Neural Network Architectures". arXiv:2304.05133 [cs.LG].

References

- [1] Bengio, Y., Simard, P. and Frasconi, P. (1994). "Learning long-term dependencies with gradient descent is difficult". IEEE Transactions on Neural Networks 5.

- [2] Glorot, X. and Bengio, Y. (2010). "Understanding the difficulty of training deep feedforward neural networks". Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics. JMLR Workshop and Conference Proceedings.

- [3] He, K., Zhang, X., Ren, S. and Sun, J. (2016). "Deep residual learning for image recognition". Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition.

- [4] He, K., Zhang, X., Ren, S. and Sun, J. (2016). "Identity mappings in deep residual networks". European Conference on Computer Vision. Springer.

- [5] Antil, H., Díaz, H. and Herberg, E. (2022). "An Optimal Time Variable Learning Framework for Deep Neural Networks". arXiv preprint arXiv:2204.08528.

- [6] Haber, E. and Ruthotto, L. (2017). "Stable architectures for deep neural networks". Inverse Problems 34. IOP Publishing.

- [7] Chen, R. T. Q., Rubanova, Y., Bettencourt, J. and Duvenaud, D. K. (2018). "Neural ordinary differential equations". Advances in Neural Information Processing Systems 31.

- [8] Ghassemi, S. and Magli, E. (2019). "Convolutional Neural Networks for On-Board Cloud Screening". Remote Sensing 11.

- [9] CS231n: Convolutional Neural Networks for Visual Recognition (2023).