Elements of Combinatorial Analysis and Simple Random Walks

In this chapter we are going to give an introduction to combinatorial aspects of probability theory and describe several simple methods for the computation of different problems, where the usage of these is an important aspect. We will also already give some of the most basic examples of discrete distributions and consider combinatorial aspects of random events such as a first look at a simple random walk. We will look at the reflection principle and basic terminology of random walks as a combinatorial theory. Finally, we will consider probabilities of events for special random walks.

Summability conditions

Let [math]I[/math] be an index set, and [math](a_i)_{i\in I}\subset \Rbar_+^I[/math] (i.e. [math]a_i\in[0,\infty][/math], for all [math] i\in I[/math]). We will write

The family [math](a_i)_{i\in I}[/math] is said to be summable if [math]\sum_{i\in I}a_i[/math] is finite. The following elementary properties are important for such a family [math](a_i)_{i\in I}[/math].

- For [math]H\subseteq I[/math] we have

[[math]] \sum_{h\in H}a_h\leq \sum_{i\in I}a_i [[/math]]and [math](a_h)_{h\in H}[/math] is summable if [math](a_i)_{i\in I}[/math] is summable.

- For a family [math](a_i)_{i\in I}[/math] to be summable, it is necessary and sufficient that the Cauchy criterion is satisfied, i.e. for all [math]\epsilon \gt 0[/math], there is a finite set [math]J\subset I[/math], with all finite subsets [math]K\subset I[/math] satisfying [math]K\cap J=\varnothing[/math], we get

[[math]] \sum_{i\in K}a_i \lt \epsilon. [[/math]]

- If the family [math](a_i)_{i\in I}[/math] is summable, the set [math]\{i\in I\mid a_i\not=0\}[/math] is at most countable, as the union of the sets [math]I_n=\left\{i\in I\mid a_i\geq \frac{1}{n}\right\}[/math]. Moreover, [math]I_n[/math] has at most [math]\left[n\left(\sum_{i\in I}a_i\right)\right][/math] elements, where [math][x][/math] denotes the integer part of [math]x[/math].

To avoid confusion we want to emphasize that If [math]A_1,...,A_n[/math] are disjoint sets, we will sometimes write [math]\sum_{n\geq 1}A_n[/math] instead of [math]\bigsqcup_{n\geq 1}A_n[/math].

Let [math](a_i)_{i\in I}\subset\bar\R_+^I[/math] be a family of numbers in [math]\bar\R_+[/math]. If [math]I=\sum_{\lambda\in L}H_{\lambda}[/math] and [math]q_\lambda=\sum_{i\in H_{\lambda}}a_i[/math], then

Exercise.

In the case [math]I=\N[/math], we say that [math]\sum_{n\in\N}u_n[/math] converges absolutely if [math]\sum_{n\in\N}\vert u_n\vert[/math] converges. In this case we can modify the order in which the terms are taken without changing the convergence nor the sum of the series.

For all [math]n\in\N\setminus\{0\}[/math] we have

Finite Probability Spaces

General Probability Spaces

Let [math](\Omega,\A)[/math] be a measurable space. A probability measure defined on [math]\A[/math] is an application

such that

- [math]\p[\Omega]=1[/math].

- for all [math](A_n)_{n\in \N}[/math], with [math]A_n\cap A_m=\varnothing[/math] for [math]n\not=m[/math], we have

[[math]] \p\left[\bigcup_{n\in\N}A_n\right]=\sum_{n\in \N}\p[A_n]. [[/math]]

We want to recall the following properties without a proof.

- For [math]A,B\in\A[/math] with [math]A\subset B[/math] we get [math]\p[A]\leq \p[b][/math].

- For [math]A\in\A[/math] we get [math]\p[A^C]=1-\p[A][/math].

- If [math](A_n)_{n\in\N}\subset\A[/math] and [math]A_n\uparrow A[/math] as [math]n\to\infty[/math], then [math]\p[A_n]\uparrow \p[A][/math] as [math]n\to\infty[/math].

- If [math](A_n)_{n\in\N}\subset\A[/math] and [math]A_n\downarrow A[/math] as [math]n\to\infty[/math], then [math]\p[A_n]\downarrow \p[A][/math] as [math]n\to\infty[/math].

Let [math]\mu[/math] be a measure on a measureable space [math](\Omega,\A)[/math].

- If [math](A_n)_{n\in\N}\subset\A[/math] is an increasing family of measurable sets, then

[[math]] \mu\left( \bigcup_{n\in\N}A_n \right)=\lim_{n\to\infty}\mu(A_n). [[/math]]Conversely, if an additive function satisfies the property above, then it is a measure.

- Let [math]\mu[/math] be an additive, positive and bounded function on [math]\A[/math]. Then [math]\sigma[/math]-additivity is equivalent to say that for all decreasing sequences [math](B_n)_{n\in\N}\subset\A[/math], we get the implication

[[math]] \bigcap_{n\in\N}B_n=\varnothing\Longrightarrow \lim_{n\to\infty}\mu(B_n)=0. [[/math]]

Probability measures on finite spaces

There are two cases where [math](\Omega,\A)[/math] is a measurable space, either [math]\Omega[/math] is finite or [math]\A[/math] is finite. An important question is how to define probability measures on finite or countable spaces. If [math]I[/math] is at most countable, then having a probability measure on [math]\mathcal{P}(I)[/math] is equivalent to having a family [math](a_i)_{i\in I}[/math] of positive real numbers such that [math]\sum_{i\in I}a_i=1[/math]. Moreover, for [math]A\subset I[/math], we can define a measure [math]\mu[/math] by

where [math]\mu(\{i\})=a_i[/math] for all [math]i\in I[/math].

Example

We got the following examples:

The Poisson distribution

The Poisson distribution with parameter [math]\lambda \gt 0[/math], is a probability measure [math]\Pi_\lambda[/math] on [math](\N,\mathcal{P}(\N))[/math], defined for all [math]n\geq0[/math] by

For [math]A\subset \N[/math] we thus get

In order to check that it is indeed a probability distribution, we need to check that [math]\sum_{i\geq 0}\Pi_\lambda(\{i\})=1[/math]. indeed, we have

The Riemann [math]\zeta[/math]-function

Another example can be obtained by considering the function [math]\zeta(s)=\sum_{n\geq 1}n^{-s}[/math] for [math]s \gt 1[/math], which we can deform to a probability measure. Indeed, if we set [math]a_n=\frac{1}{\zeta(s)}\cdot \frac{1}{n^s}[/math] for all [math]n\geq 1[/math], we get

The Geometric distribution

Similarly one can define the geometric distribution with parameter [math]r\in(0,1)[/math] on [math](\N\setminus\{0\},\mathcal{P}(\N\setminus\{0\}))[/math] by

The Uniform distribution

For a finite state space [math]\Omega[/math], we can define the uniform distribution for any measurable set [math]A\in\A[/math] by

For [math]\omega\in\Omega[/math] we get [math]\p[\{\omega\}]=\frac{1}{\vert\Omega\vert}.[/math] Take for example [math]\Omega=\{1,2,...,N\}[/math]. Then [math]\p[\{k\}]=\frac{1}{N}[/math].

There is no uniform measure on [math]\N[/math], since [math]\N[/math] is not finite. Thus from now on we shall only consider the case of a finite state space [math]\Omega[/math] or a finite [math]\sigma[/math]-Algebra [math]\A[/math].

Let [math]\A[/math] be a finite [math]\sigma[/math]-Algebra on a state space [math]\Omega[/math]. Then there exists a partition [math]A_1,...,A_n[/math] of [math]\Omega[/math], such that [math]A_k\in\A[/math] for all [math]k\in\{1,...,n\}[/math] and with the property that any [math]A\in \A[/math] can be written as a union of the events [math]A_1,...,A_n[/math]. The sets are called the atoms of [math]\A[/math].

Fix [math]\omega\in\Omega[/math]. Define the set [math]C(\omega):=\bigcap_{B\in\A,\atop\omega\in B}B[/math]. Then by finite intersection it follows that [math]C(\omega)\in\A[/math]. In fact [math]C(\omega)[/math] is the smallest element of [math]\A[/math], which contains [math]\omega[/math]. Moreover, it is easy to see that [math]\omega\sim\omega'[/math] if and only if [math]C(\omega)=C(\omega')[/math] defines an equivalence relation on [math]\Omega[/math] and that [math]\omega'\in C(\omega)[/math] if and only if [math]C(\omega)=C(\omega')[/math]. Hence if [math]A[/math] is an equivalence class and [math]\omega\in A[/math], then [math]A=C(\omega)[/math]. Therefore, one can take the events [math]A_1,...,A_n[/math] as the equivalence classes.

Basics of combinatorial Analysis

Sampling

Let us consider a population of size [math]n\in\N[/math], i.e. a set [math]S=\{S_1,...,S_n\}[/math] with [math]n[/math] elements. We call any ordered sequence [math](S_{i_1},...,S_{i_r})[/math] of [math]r[/math] elements of [math]S[/math] a sample of size [math]r[/math], drawn from this population. Then there are two possible procedures.

- Sampling with replacement, i.e. the same element can be drawn more than once.

- Sampling without replacement. Here an element one choses is removed from the population. In this case [math]r\leq n[/math].

In either case, our experiment is described by a sample space [math]\Omega[/math] in which each individual points represents a sample of size [math]r[/math]. In the first case, we get [math]\vert\Omega\vert=n^r[/math], where an element [math]\omega\in\Omega[/math] is of the form [math]\omega=(S_{i_1},...,S_{i_r})[/math]. In the second case we get

Example

We got the following examples:

- If by a "ten letter word" is meant a (possibly meaningless) sequence of ten letters, then such a word represents a sample from the population of 26 letters. There are [math]26^{10}[/math] such words.

- Tossing a coin [math]r[/math] times is one way of obtaining a sample of size [math]r[/math] drawn from the population of the letters [math]H[/math] and [math]T[/math]. Usually we assign equal probabilities to all samples, namely [math]n^{-r}[/math] is sampling with replacement and [math]\frac{1}{n(n-1)...(n-r+1)}[/math] is a sampling without replacement.

- A random sample of size [math]r[/math] with replacement is taken from a population of size [math]n[/math], and we assume that [math]r\leq n[/math]. The probability [math]p[/math] that in the sample no element appears twice is

[[math]] p=\frac{n(n-1)\dotsm (n-r+1)}{n^r}\left(\p[A]=\frac{\vert A\vert}{\vert\Omega\vert}\right). [[/math]]Consequently, the probability of having four different numbers chosen from [math]\{0,1,2,3,...,9\}[/math] is (here [math]n=10[/math], [math]r=4[/math])[[math]] \frac{10\times 9\times 8\times 7}{10^4}\approx 0.5. [[/math]]In sampling without replacement the probability for any fixed element of the population to be included in a random sample of size [math]r[/math] is[[math]] 1-\frac{(n-1)(n-2)\dotsm (n-r)}{\underbrace{n(n-1)\dotsm (n-r+1)}_{\text{probability of the complementary event}}}=1-\frac{n-r}{n}=\frac{r}{n}. [[/math]]We have used that for [math]A\in\A[/math], [math]\p[A]=1-\p[A^C][/math]. Similarly, in sampling with replacement the probability that a fixed element of the population to be included in a random sample of size [math]r[/math] is[[math]] 1-\left(\frac{n-1}{n}\right)^r=1-\left(1-\frac{1}{n}\right)^2 [[/math]]

Subpopulations

Let [math]S=\{S_1,...,S_n\}[/math] be a population of size [math]n[/math]. We call subpopulations of size [math]r[/math] any set of [math]r[/math] elements, distinct or not, chosen from [math]S[/math]. Elements of a subpopulation are not ordered, i.e. two samples of size [math]r[/math] from [math]S[/math] correspond to the same subpopulation if they only differ by the order of the elements. Here we have two cases as well. The case [math]\underline{without}[/math] replacement: We must have [math]r\leq n[/math]. There are [math]\binom{n}{r}=\frac{n!}{r!(n-r)!}[/math]. We have that [math]\binom{n}{r}=\binom{n}{n-r}[/math]. We always use the convention [math]0!=1[/math].

Example

We got the following examples:

Poker

There are [math]\binom{52}{5}=2'598'960[/math] hands at poker. Let us calculate the probability [math]p[/math] that a hand at poker contains 5 different face values. The face values can be chosen in [math]\binom{13}{5}[/math] different ways, and corresponding to each case we can choose one of the four suits. It follows that

Senator problem

Each of the 50 states has two senators. We consider the event that in a committee of 50 senators chose at random

- a given state is represented.

- all states are represented.

In the first case, it is better to calculate the probability [math]q[/math] of the complementary event that the given state is not represented

For the second case we note that a comittee including a senator of all states can be chosen in [math]2^{50}[/math] different ways. The probability that all states are included is

An occupancy problem

Consider a random distribution of [math]r[/math] balls in [math]n[/math] cells. To find the probability [math]p_k[/math] that a specified cell contains exactly [math]k[/math] balls [math](k=0,1,...,r)[/math]. We note that [math]k[/math] balls can be chosen in [math]\binom{r}{k}[/math] different ways and the remaining [math](r-k)[/math] balls can be placed into the remaining [math](n-1)[/math] cells in [math](n-1)^{r-k}[/math] so we get

We call [math]\tilde p=\frac{1}{n}[/math], then

This is called the binomial distribution of parameters [math]p[/math] and [math]r[/math]. It is a probability distribution on [math]\{0,1,...,r\}[/math] and

For example in a coin tossing game we have [math]r[/math] tosses and we set [math]H\leftrightarrow 1[/math] and [math]T\leftrightarrow 0[/math]. We have the state space

Moreover, we have that

where [math]S_r[/math] is the number of heads. Assume that [math]\p(H)=p\in[0,1][/math], then

Let [math]r_1,...,r_k[/math] be integers such that [math]r_1+...+r_k=n[/math] [math](r_i\geq 0,r_i\in\N)[/math]. The number of ways in which a population of [math]n[/math] elements can be partitioned into [math]k[/math] subpopulations of which the first contains [math]r_1[/math] elements, the second contains [math]r_2[/math] elements etc., is given by

For the first part we note that

Example

A throw of twelve dice can result in [math]6^{12}[/math] different outcomes. The event that each face can appear twice is

The case [math]\underline{with}[/math] replacement: We take the point of view of occupancy problems. Our model is that of placing randomly [math]r[/math] balls into [math]n[/math] cells. Such an event is completely described by its occupancy numbers [math]r_1,...,r_n[/math] where [math]r_k[/math] stands for the number of balls in the [math]k[/math]-th cell. Every [math]n[/math]-tupel of integers satisfying [math]r_1+...+r_k=r[/math] describes a possible configuration. Two distributions are distinguishable if the occupancy numbers are different. We denote by [math]A_{r,n}[/math] the number of distinguishable distributions. It is also the number of subpopulations of size [math]r[/math] with replacement from a population [math]S=\{S_1,...,S_n\}[/math] of size [math]n[/math] and it is characterized by the number [math]r_k[/math] of appearances of the individual [math]S_k[/math] with the restriction [math]r_1+...+r_n=r[/math].

There are two different proofs.

- We represent the balls by [math]\bigotimes[/math] and the cells by the [math]n[/math] spaces between [math]n+1[/math] bars. Then

[[math]] \mid \bigotimes \bigotimes \bigotimes \mid\bigotimes\mid\mid\mid\mid \bigotimes \bigotimes \bigotimes \bigotimes\mid [[/math]]is used as a symbol for a distribution of [math]r=8[/math] balls in [math]n=6[/math] cells. The occupancy numbers are[[math]] 3,1,0,0,0,4\Longrightarrow 3+1+0+0+0+4=8. [[/math]]Such a symbol necessarily starts and ends with a bar, but the remaining [math](n-1)[/math] bars and [math]r[/math] balls can appear in an arbitrary order. We have that[[math]] \binom{n+r-1}{r} [[/math]]is the number of ways of selecting [math]r[/math] places (for the balls) out of [math]n+r-1[/math].

- For [math]|t_i| \lt 1[/math] with [math]1\leq i\leq n[/math]

[[math]] \begin{align*} \prod_{1\leq i\leq n}\frac{1}{1-t_i}=\prod_{1\leq i\leq n}\left(\sum_{m_i\geq 0}t_i^{m_i}\right)&=\sum_{0\leq m_1,...,m_n}t_1^{m_1}\cdot t_2^{m_2}\dotsm t_n^{m_n}\\ &=\sum_{r\geq 0}\sum_{m_1+...+m_n=r\atop m_1\geq 0,...,m_n\geq 0}t_1^{m_1}\cdot t_2^{m_2}\dotsm t_n^{m_n} \end{align*} [[/math]]For [math]t_1=t_2=\dotsm=t_n=t[/math] we have[[math]] \frac{1}{(1-t)^n}=\sum_{r\geq 0}\sum_{m_1+...+m_n=r\atop m_1\geq 0,...,m_n\geq 0}t^r=\sum_{r\geq 0}A_{r,n}\cdot t^r. [[/math]]Taylor's formula gives us that[[math]] \frac{1}{(1-r)^n}=\sum_{r\geq 0}\binom{n+r-1}{r}t^n\Longrightarrow A_{r,n}=\binom{n+r-1}{r}. [[/math]]

Example

The partial derivatives of order [math]r[/math] of a [math]C^{\infty}[/math] function [math]f(x_1,...,x_n)[/math] of [math]n[/math] variables do not depend on the order of differentiation but only on the number of times that each variable appears. Hence there exists [math]\binom{n+r-1}{r}[/math] different partial derivatives of order [math]r[/math].

[math]A_{r,n}[/math] is also the number of different integer solutions to the equation [math]r_1+...+r_n=r[/math].

Combination of events

We write a permutation [math]\sigma\in\Sigma_N[/math] in the following way

We know that the permutation group has cardinality [math]\vert\Sigma_N\vert=N![/math]. The probability of a permutation is given by

We go back to a probability space [math](\Omega,\A,\p)[/math]. If [math]A_1,A_2\in\A[/math] with [math]A=A_1\cup A_2[/math], we get

What about [math]\p[A][/math] when [math]A=\bigcup_{i=1}^NA_i[/math], where [math]A_1,...,A_N\subset \A[/math]? We shall note [math]\p_i=\p[A_i][/math], [math]\forall 1\leq i\leq N[/math] and [math]\p_{ij}=\p[A_i\cap A_j][/math] for [math]i \lt j[/math]. For [math]i_1 \lt ... \lt i_k[/math] we have that

We note that

Moreover, [math]S_r[/math] has [math]\binom{N}{r}[/math] terms. For [math]N=2[/math] we get

Example

Two equivalent decks of [math]N[/math] cards. Each are put into random order and matched against each other. If a card occupies the same place in both decks we speack of a match. We want to compute the probability of having at least one match. Let us number the cards [math]1,...,N[/math] with

where the first line denotes the first deck and the second line denotes the second deck. A match for [math]k[/math] corresponds to [math]k=\sigma(k)[/math]. In that case we call [math]k[/math] a fix point of the permutation [math]\sigma[/math]. We look for the number of permutations of [math]\{1,...,N\}[/math] (out of the [math]N[/math]) which have at least 1 fixed point. Let [math]A_k[/math] be the event [math]k=\sigma(k)[/math]. Clearly

similarly if [math]i \lt j[/math],

More generally

and hence

If [math]\p_1[/math] is the probability of the least fixed point, then

Random Walks

The Reflection Principle

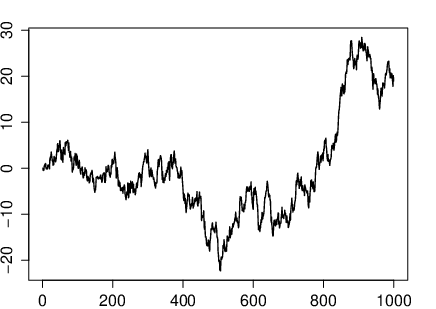

From a formal point of view, we shall be concerned with arrangements of finitely many [math]+1[/math] and [math]-1[/math]. Consider [math]n=p+q[/math] symbols [math]\epsilon_1,...,\epsilon_n[/math], where [math]\epsilon_j\in\{-1,+1\}[/math] for all [math]1\leq j\leq n[/math]. Suppose that there are [math]p[/math] [math]\{+1\}[/math]'s and [math]q[/math] [math]\{-1\}[/math]'s. Then [math]S_k=\epsilon_1+...+\epsilon_k[/math] represents the difference between the number of [math]\{+1\}[/math]'s and [math]\{-1\}[/math]'s at the first [math]k[/math] places.

The arrangement [math](\epsilon_1,...,\epsilon_n)[/math] will be represented by a polygonal line whose [math]k[/math]'th side has slope [math]\epsilon_k[/math], and whose [math]k[/math]'th vertex has ordinate [math]S_k[/math]. Such lines will be called a path. We shall use [math](t,x)[/math] for coordinates.

Let [math]n \gt 0[/math] and [math]x[/math] be integers. A path [math](S_0,S_1,...,S_n)[/math] from the origin to the point [math](n,x)[/math] is a polygonal line whose vertices have abscissas [math]0,1,2,...,n[/math] and ordinates [math]S_0,S_1,...,S_n[/math] satisfying (10) with [math]S_n=x[/math]. We shall refer to [math]n[/math] as the length of the path. There are [math]2^n[/math] paths of length [math]n[/math]. Moreover, we have

A path from the origin to an arbitrary point [math](n,x)[/math] exists only if [math]n[/math] and [math]x[/math] are of the form as in the definition. In this case the [math]p[/math] places for the positive [math]\epsilon_k[/math]'s can be chosen from the [math]n=p+q[/math] available places in

different ways. {[math]Convention:[/math]} [math]N_{n,x}=0[/math] whenever [math]n[/math] and [math]x[/math] are not of the form as in the definition. this implies that, [math]N_{n,x}[/math] represents the number of different paths from the origin to an arbitrary point [math](n,x)[/math].

Example

[Ballot theorem] Suppose that in a ballot candidate [math]P[/math] scores [math]p[/math] votes and candidate [math]Q[/math] scores [math]q[/math] votes, where [math]p \gt q[/math]. the probability that throughout the counting there are always more votes for [math]P[/math] than for [math]Q[/math] equals [math]\frac{p-q}{p+q}[/math]. The whole voting record may be represented by a path of length [math]p+q[/math] in which [math]\epsilon_k=+1[/math], if the [math]k[/math]'th vote is for [math]P[/math] and [math]\epsilon_k=-1[/math] otherwise. Conversely every path from the origin to the point [math](p+q,p-q)[/math] can be interpreted as a voting with the given totals [math]p[/math] and [math]q[/math]. [math]S_k[/math] is the number of votes by which [math]P[/math] leads just after the [math]k[/math]'th vote. The candidate [math]P[/math] leads throughout the voting if [math]S_1 \gt 0,S_2 \gt 0,...,S_n \gt 0[/math] (in the ballot theorem, it is implicitly assumed that all paths are equally probable).

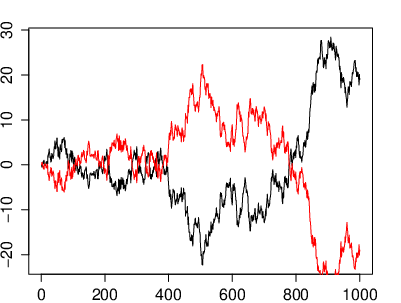

Let [math]A=(a,\alpha)[/math] and [math]B=(b,\beta)[/math] be two independent points with [math]b \gt a\geq 0[/math], [math]a,b\in\N[/math], [math]\alpha \gt 0[/math], [math]\beta \gt 0[/math], [math]\alpha,\beta\in\N[/math]. By reflection of [math]A[/math] on the [math]t[/math]-axis we mean the point [math]A'=(a,-\alpha)[/math].

The number of paths from [math]A[/math] to [math]B[/math] which touch or cross the [math]t[/math]-axis equals the number of paths from [math]A'[/math] to [math]B[/math].

Consider a path [math](S_a=\alpha,S_{a+1},...,S_b=B)[/math] from [math]A[/math] to [math]B[/math] having one or more vertices on that axis. Let [math]t[/math] be the abscissa of the first such vertex that is [math]S_a \gt 0,S_{a+1} \gt 0,...,S_{k-1} \gt 0,S_k \gt 0[/math]. Then [math](-S_a,-S_{a+1},...,-S_{k-1},S_k,S_{k+1},...,S_b)[/math] is a path leading from [math]A'[/math] to [math]B[/math] and having [math]T=(t,0)[/math] as its vertex on the [math]t[/math]-axis. This gives a one to one correspondence between all paths from [math]A'[/math] to [math]B[/math] and paths from [math]A[/math] to [math]B[/math] that have a vertex on the [math]t[/math]-axis.

Let us now prove the ballot theorem. Let [math]n[/math] and [math]x[/math] be positive integers. There are exactly [math]\frac{x}{n}N_{n,x}[/math] paths [math](S_1,...,S_n=x)[/math] such that

Indeed clearly there exists exactly as many admissible paths as there are paths from [math](1,1)[/math] to [math](n,x)[/math], which neither or cross the [math]t[/math]-axis. From the previous lemma the number of such paths equals

where we used that [math]N_{n,x}=\binom{p+q}{p}[/math]. So we get

Random Walk terminology

We set [math]S_0=0[/math], [math]S_n=X_1+...+X_n[/math], [math]X_j\in\{-1,+1\}[/math]. Our state space would be [math]\Omega_n[/math], containing all possible paths. Therefore we get that [math]\vert\Omega\vert=2^n[/math]. We set our [math]\sigma[/math]-Algebra [math]\A=\mathcal{P}(\Omega_n)[/math]. We set [math]\p[/math] to be our uniform probability measure. Consider the event [math]\{S_n=r\}[/math] (at time [math]n[/math] the particle is at the point [math]r[/math]). We shall also speak about a visit to [math]r[/math] at time [math]n[/math]. the number [math]N_{n,x}[/math] of paths from the origin to [math]N_{n,r}[/math] is given by

with [math]n=p+q[/math], [math]r=p-q[/math] and hence [math]p=\frac{n+r}{2}[/math]. Here we interpret [math]\binom{n}{\frac{n+r}{2}}[/math] as 0 if [math]n+r[/math] is not an even integer between 0 and [math]n[/math]. Hence we get that

A return to the origin occurs at time [math]k[/math] if [math]S_k=0[/math]. Here [math]k[/math] is necessarily an even integer, that we note [math]k=2\nu[/math] with [math]\nu[/math] an integer. The probability of return to the origin is

We shall denote it by [math]U_{2\nu}[/math], which is now given by

Stirling's formula implies that [math]U_{2\nu}\sim\frac{1}{\sqrt{\pi r}}[/math]. Among the returns to the origin, the first return receives special attention. A first return occurs at [math]2\nu[/math] if

We denote the probability of this event by [math]f_{2\nu}[/math].

For all [math]n\geq 0[/math] we have

When the event on the left hand side occurs, either all [math]S_j \gt 0[/math] or all [math]S_j \lt 0[/math]. Since these events are equally probable it follows that

We have

Saying that the first return to the origin occurs at [math]2^n[/math] amounts [math]S_1\not=0,S_2\not=0,...,S_{2n-1}\not=0,S_{2n}=0[/math], we have

and

which implies that

and hence for all [math]n\geq 1[/math] we have

Therefore we get the relation

The probability that up to time [math]2n[/math], the last visit to the origin occurs at [math]2k[/math] is

We are concerned with paths satisfying [math]S_{2k}=0,S_{2k+1}\not=0,...,S_{2n}\not=0[/math]. The first [math]2k[/math] vertices of such paths can be chosen in [math]2^{2k}N_{2k}[/math] different ways. Taking the point [math](2k,0)[/math] as new origin and using the last lemma, we see that the next [math](2n-k)[/math] vertices can be chosen in [math]2^{2n-2k}N_{2n-2k}[/math] different ways. Therefore we get

General references

Moshayedi, Nima (2020). "Lectures on Probability Theory". arXiv:2010.16280 [math.PR].