Instrumental Variables: When Confounders were not Collected

So far in this section we have considered a case where the confounder [math]x[/math] was available in the observational data. This allowed us to fit the regressor directly on [math]p(y|a,x)[/math], use inverse probability weighting, or re-balance the dataset using the matching scheme. It is, however, unlikely that we have full access to the confounders (or any kind of covariate) in the real world. It is thus important to come up with an approach that works on passively collected data without covariates.

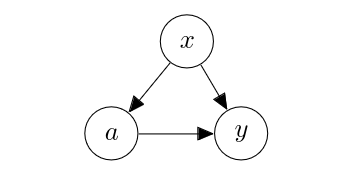

An instrumental variable estimator. Let us rewrite the following graph [math]G_0[/math] into a corresponding structural causal model:

The structural causal model is then

From this structural causal model, we can read out two important points. First, as we have learned earlier in Confounders, Colliders and Mediators, [math]x[/math] is a confounder, and when it is not observed, the path [math]a \leftarrow x \to y[/math] is open, creating a spurious effect of [math]a[/math] on [math]y[/math]. Second, the choice of [math]a[/math] is not fully determined by [math]x[/math]. It is determined by the combination of [math]x[/math] and [math]\epsilon_a[/math], where the latter is independent of [math]x[/math]. We are particularly interested in the second aspect here, since it gives us an opportunity to modify this graph by introducing a new variable that may help us remove the effect of the confounder [math]x[/math].

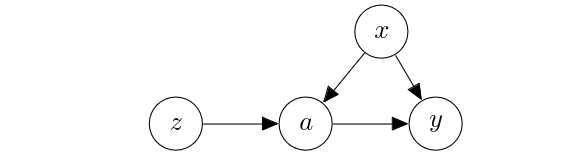

We now consider an alternative to the structural causal model above by assuming that we found another variable [math]z[/math] that largely explains the exogenous factor [math]\epsilon_a[/math]. That is, instead of saying that the action [math]a[/math] is determined by the combination of the covariate [math]x[/math] and an exogenous factor [math]\epsilon_a[/math], we now say that it is determined by the combination of the covariate [math]x[/math], this new variable [math]z[/math] and an exogenous factor [math]\epsilon'_a[/math]. Because [math]z[/math] explains a part of the exogenous factor rather than [math]x[/math], [math]z[/math] is independent of [math]x[/math] a priori. This introduction of [math]z[/math] alters the structural causal model to become

which corresponds to the following graph [math]G_1[/math]:

This altered graph [math]G_1[/math] does not help us infer the causal effect of [math]a[/math] on [math]y[/math] any more than the original graph [math]G_0[/math] did. It does, however, provide us with an opportunity to replace the original action [math]a[/math] with a proxy based purely on the newly introduced variable [math]z[/math], which is independent of [math]x[/math]. We first notice that [math]x[/math] cannot be predicted from [math]z[/math], because [math]z[/math] and [math]x[/math] are by construction independent. The best we can do is thus to predict the associated action.[Notes 1] Let [math]p_{\tilde{a}}(a|z)[/math] be the conditional distribution induced by [math]g_{\tilde{a}}(z, \epsilon_a'')[/math]. Then, we want to find [math]g_{\tilde{a}}[/math] that minimizes

We also notice that [math]z[/math] cannot be predicted from [math]a[/math] perfectly without [math]x[/math], because [math]a[/math] is an observed collider, creating a dependency between [math]z[/math] and [math]x[/math]. The best we can do is thus to predict the expected value of [math]z[/math].

That is, we look for [math]g_{\tilde{z}}[/math] that minimizes

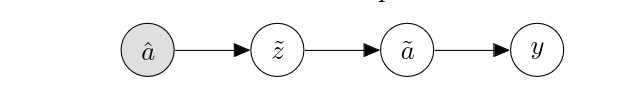

where we let [math]p_{\tilde{z}}(z|a)[/math] be the conditional distribution induced by [math]g_{\tilde{z}}(a, \epsilon'_z)[/math], similarly to [math]p_{\tilde{a}}(a|z)[/math] above. Once we have found reasonable solutions, [math]\hat{g}_{\tilde{a}}[/math] and [math]\hat{g}_{\tilde{z}}[/math], to the two minimization problems above, we can further modify the structural causal model into

Since [math]x[/math] is really nothing but an exogenous factor of [math]y[/math] that affects neither [math]\tilde{a}[/math] nor [math]z[/math] in this case, we can simplify this by merging [math]x[/math] and [math]\epsilon_y[/math] into

Because we assume the action [math]a[/math] is always given, we simply set it to a particular action [math]\hat{a}[/math]. This structural causal model can be depicted as the following graph [math]G_2[/math]:

In other words, we start from the action, approximately infer the extra variable [math]\tilde{z}[/math], approximately infer back the action and then predict the outcome. During the two stages of inference ([math]\tilde{z} | a[/math] and [math]\tilde{a} | \tilde{z}[/math]), we drop the dependence of [math]a[/math] on [math]x[/math]. This happens because [math]z[/math] was chosen to be independent of [math]x[/math] a priori. In this graph, we only have two mediators in sequence, [math]\tilde{z}[/math] and [math]\tilde{a}[/math], from the action [math]a[/math] to the outcome [math]y[/math]. We can then simply marginalize out both of these mediators in order to compute the interventional distribution over [math]y[/math] given [math]a[/math], as we learned in Confounders, Colliders and Mediators. That is,

We call this estimator an instrumental variable estimator and call the extra variable [math]z[/math] an instrument. Unlike the earlier approaches such as regression and IPW, this approach is almost guaranteed to give you a biased estimate of the causal effect.

Assume we are provided with [math]D=\left\{ (a_1, y_1, z_1), \ldots, (a_N, y_N, z_N) \right\}[/math] after we are done estimating those functions above. We can then get the approximate causal effect of the action of interest [math]\hat{a}[/math] by

Additionally, if we are further provided with [math]D_{\bar{z}}=\left\{ (a'_1, y'_1), \ldots, (a'_{N'}, y'_{N'}) \right\}[/math], which lacks the instrument, we can use [math]\hat{g}_{\tilde{z}}(a, \epsilon'_z)[/math] to approximate this quantity, together with [math]D[/math]. Let [math](a_n,y_n,r_n,z_n) \in \bar{D}[/math] be

Then,

In the former case, we must solve two regression problems, finding [math]\hat{f}_y[/math] and [math]\hat{g}_{\tilde{a}}[/math]. When we do so by solving a least squares problem for each, we end up with two least squares problems that must be solved sequentially. Such a case is often referred to as two-stage least squares. In the latter case, we benefit from extra data by solving three regression problems. In most cases, however, we choose an easy-to-obtain instrument, so that it is often enough to solve two regression problems.
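The two-stage procedure can be sketched on simulated data. This is a minimal illustration, not the estimator from the text in full generality: it assumes a fully linear, one-dimensional data-generating process, and the coefficients (1.5, 1.0, 3.0), the true effect `alpha_true` and the helper `fit_line` are all hypothetical choices made for this example. The confounder `x` is generated but never passed to either estimator.

```python
import numpy as np


def fit_line(u, v):
    """Least-squares fit of v ~ slope * u + intercept; returns (slope, intercept)."""
    design = np.column_stack([u, np.ones_like(u)])
    (slope, intercept), *_ = np.linalg.lstsq(design, v, rcond=None)
    return slope, intercept


rng = np.random.default_rng(0)
n = 50_000
alpha_true = 2.0                      # assumed causal effect of a on y

x = rng.normal(size=n)                # confounder, hidden from the estimators
z = rng.normal(size=n)                # instrument, drawn independently of x
a = 1.5 * x + 1.0 * z + rng.normal(size=n)
y = alpha_true * a + 3.0 * x + rng.normal(size=n)

# Naive regression of y on a: the open path a <- x -> y biases the slope.
naive_slope, _ = fit_line(a, y)

# Stage 1: predict the action from the instrument alone.
psi_hat, c_hat = fit_line(z, a)
a_tilde = psi_hat * z + c_hat         # proxy action, independent of x

# Stage 2: regress the outcome on the proxy action.
iv_slope, _ = fit_line(a_tilde, y)

print(f"true effect   : {alpha_true:.3f}")
print(f"naive estimate: {naive_slope:.3f}")
print(f"2SLS estimate : {iv_slope:.3f}")
```

Under these assumptions the naive slope absorbs part of the effect of `x`, while the second-stage slope should land close to `alpha_true` because the proxy `a_tilde` only carries the variation of `a` that comes from `z`.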

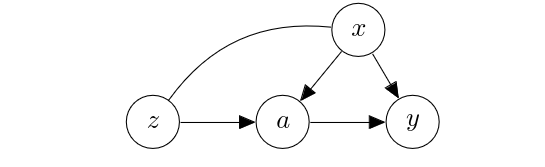

There are two criteria that need to be considered when choosing an instrument [math]z[/math]. First, the instrument must be independent of the confounder [math]x[/math] a priori. If this condition does not hold, we end up with the following graph:

The undirected edge between [math]z[/math] and [math]x[/math] indicates that they are not independent. In this case, even if we manage to remove the edge between [math]a[/math] and [math]x[/math], there is still a spurious path [math]a \leftarrow z \leftrightarrow x \to y[/math] that prevents us from avoiding this bias. This first criterion is therefore the most important consideration behind choosing an instrument.
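The effect of violating this first criterion can be illustrated with a small simulation. This is a sketch under assumed linear structural equations; the coefficients, the dependence strength 0.8 between `z` and `x`, and the helper names `two_stage` and `simulate` are hypothetical and chosen only for illustration.

```python
import numpy as np


def two_stage(z, a, y):
    """Two-stage least squares with a single instrument; returns the stage-2 slope."""
    psi, c = np.polyfit(z, a, 1)          # stage 1: a ~ psi * z + c
    a_tilde = psi * z + c
    return np.polyfit(a_tilde, y, 1)[0]   # stage 2: slope of y on the proxy action


def simulate(z_on_x, n, rng):
    """Draw (z, a, y); z depends on the hidden confounder x when z_on_x != 0."""
    x = rng.normal(size=n)
    z = z_on_x * x + rng.normal(size=n)
    a = 1.5 * x + 1.0 * z + rng.normal(size=n)
    y = 2.0 * a + 3.0 * x + rng.normal(size=n)   # assumed true effect is 2.0
    return z, a, y


rng = np.random.default_rng(1)
valid_estimate = two_stage(*simulate(0.0, 50_000, rng))
invalid_estimate = two_stage(*simulate(0.8, 50_000, rng))

print(f"instrument independent of x: {valid_estimate:.3f}")
print(f"instrument correlated with x: {invalid_estimate:.3f}")
```

When `z` is correlated with `x`, the proxy action inherits that correlation, so the second stage picks up part of the effect of `x` on `y` and the estimate drifts away from the assumed true effect.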

Instrumental variables must be predictive of the action. The second criterion is that [math]z[/math] be a cause of [math]a[/math] together with [math]x[/math]. That is, a part of whatever cannot be explained by [math]x[/math] in determining [math]a[/math] must be captured by [math]z[/math]. We can see why this is important by comparing the IPW-based estimate against the instrumental variable based estimate. The IPW-based estimate from earlier is reproduced here as

If we contrast it with the instrumental variable based estimate above, we get

where we assume [math]D=\left\{ (a_1, y_1, x_1, z_1), \ldots, (a_N, y_N, x_N, z_N)\right\}[/math]. There are two estimates within (a) above that result in a bias. Among these two, [math]\hat{f}_y[/math] and [math]\hat{g}_{\tilde{a}}[/math], the former does not stand a chance of being an unbiased estimate, because it is given neither an unbiased estimate of the action nor the covariate [math]x[/math]. Contrast this with the regression-based estimate above, where [math]\hat{f}_y[/math] could rely on the true (sampled) action and the true (sampled) covariate. The latter, however, is something we have clear control over. Assume that [math]f^*_y[/math] is linear. That is,

where [math]\alpha[/math] and [math]\beta[/math] are the coefficients. If [math]\mathbb{E}[x]=0[/math] and [math]a[/math] is selected independent of [math]x[/math],

The term (a), with the assumption that [math]\frac{y_n}{\hat{p}(a_n|x)} = f^*_y(a_n)[/math], can then be expressed as

where [math]r_{\alpha}[/math] is the error in estimating [math]\alpha^*[/math], i.e., [math]\hat{\alpha}=\alpha^* + r_{\alpha}[/math]. For brevity, let [math]\hat{g}(z_n) = \mathbb{E}_{\epsilon''_a} \hat{g}_{\tilde{a}}(z_n, \epsilon''_a)[/math], which allows us to rewrite it as

Let us now look at the overall squared error:

where we use [math]m[/math] to refer to each example with [math]a_m=\hat{a}[/math]. If we further expand the squared term,

The first term is constant. It simply tells us that the error is greater when the variance of the relevant dimensions of the action on the outcome, where the relevancy is determined by [math]\alpha^*[/math], is greater: the more the true effect varies, the greater the chance of mis-approximating it. The second term tells us that the error is proportional to the variance of the relevant dimensions of the predicted action on the outcome, where the relevancy is determined by the predicted coefficient, [math]\hat{\alpha} = \alpha^* + r_{\alpha}[/math]. That is, if the variance of the predicted outcome is great, the chance of a large error is also great. The third term is where we consider the correlation between the true action and the predicted action, again along the dimensions of relevance.

This derivation tells us that the instrument must be selected to be highly predictive of the action (the third term) but also exhibit a low variance in its prediction (the second term). Here comes the classical dilemma of bias-variance trade-off in machine learning.
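The need for a predictive instrument can be seen numerically by repeating the two-stage fit on many small datasets and comparing a strong instrument against a weak one. This is a sketch under assumed linear structural equations; the instrument strengths (1.0 and 0.1), the sample size, the number of repetitions and the helper names are hypothetical choices for this example.

```python
import numpy as np


def two_stage(z, a, y):
    """Two-stage least squares with a single instrument; returns the stage-2 slope."""
    psi, c = np.polyfit(z, a, 1)          # stage 1: a ~ psi * z + c
    a_tilde = psi * z + c
    return np.polyfit(a_tilde, y, 1)[0]   # stage 2: slope of y on the proxy action


def simulate(psi, n, rng):
    """Draw (z, a, y) where psi controls how strongly z drives the action."""
    x = rng.normal(size=n)                # hidden confounder
    z = rng.normal(size=n)                # instrument, independent of x
    a = 1.5 * x + psi * z + rng.normal(size=n)
    y = 2.0 * a + 3.0 * x + rng.normal(size=n)   # assumed true effect is 2.0
    return z, a, y


rng = np.random.default_rng(0)
median_abs_error = {}
for psi in (1.0, 0.1):
    estimates = np.array(
        [two_stage(*simulate(psi, 500, rng)) for _ in range(200)]
    )
    # The median is used because weak-instrument estimates are heavy-tailed.
    median_abs_error[psi] = np.median(np.abs(estimates - 2.0))

for psi, err in median_abs_error.items():
    print(f"instrument strength {psi}: median |error| = {err:.3f}")
```

With the weak instrument, the first-stage slope is close to zero and noisy, so the proxy action carries little signal and the second-stage slope fluctuates widely from one dataset to the next.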

The linear case.

The instrumental variable approach can be quite confusing. Consider a 1-dimensional fully linear case here in order to build up our intuition. Assume

where [math]\epsilon_x[/math] and [math]\epsilon_y[/math] are both zero-mean Normal variables. If we intervene on [math]a[/math], we would find that the expected outcome equals

and thereby the ATE is

With a properly selected instrument [math]z[/math], that is, [math]z \perp\!\!\!\perp x[/math], we get

Because [math]z \perp\!\!\!\perp x[/math], the best we can do is to estimate [math]\psi[/math] to minimize

given [math]N[/math] [math](a,z)[/math] pairs. The minimum attainable loss is

assuming zero-mean [math]\epsilon'_a[/math], because the contribution from [math]x[/math] cannot be explained by the instrument [math]z[/math]. With the estimated [math]\hat{\psi}[/math], we get

and know that

in expectation. By plugging [math]\hat{a}[/math] into the original structural causal model, we end up with

We can now estimate [math]\tilde{\alpha}[/math] by minimizing

assuming both [math]\epsilon_x[/math] and [math]\epsilon_y'[/math] are centered. If we assume we have [math]x[/math] as well, we get

The first term is irreducible, and therefore we focus on the second term. The second term is the product of two things. The first one, [math](\alpha - \tilde{\alpha})^2[/math], measures the difference between the correct [math]\alpha[/math] and the estimated effect. It tells us that minimizing this loss w.r.t. [math]\tilde{\alpha}[/math] is the right way to approximate the true causal effect [math]\alpha[/math]. We can plug in the predicted action [math]\hat{a}[/math] obtained from [math]\hat{\psi}[/math] above instead:

The first term is about how predictive the instrument [math]z[/math] is of [math]y[/math], which is a key consideration in choosing the instrument. If the instrument is not predictive of [math]y[/math], the instrumental variable approach fails dramatically. The second term corresponds to the variance of the action not explained by the instrument, implying that the instrument must also be highly correlated with the action. In this procedure, we have solved least squares twice, once to estimate [math]\hat{\psi}[/math] and once to estimate [math]\tilde{\alpha}[/math], which is a widely used practice with instrumental variables. We also saw the importance of the choice of the instrument.
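A compact way to see why solving least squares twice recovers the causal effect is to look at the population limits of the two fits. The following is a sketch under assumptions that are not spelled out in the text: the action and the outcome are taken to be [math]a = \gamma x + \psi z + \epsilon'_a[/math] and [math]y = \alpha a + \beta x + \epsilon_y[/math], where the names [math]\gamma[/math] and [math]\beta[/math] are introduced here only for illustration, and [math]z[/math] is assumed to be uncorrelated with [math]\epsilon'_a[/math] and [math]\epsilon_y[/math]. With infinitely many samples,

[math]
\begin{aligned}
\hat{\psi} &\to \frac{\mathrm{Cov}(z, a)}{\mathrm{Var}(z)}, \\
\tilde{\alpha} &\to \frac{\mathrm{Cov}(\hat{\psi} z, y)}{\mathrm{Var}(\hat{\psi} z)}
= \frac{\mathrm{Cov}(z, y)}{\mathrm{Cov}(z, a)}
= \frac{\alpha \, \mathrm{Cov}(z, a) + \beta \, \mathrm{Cov}(z, x)}{\mathrm{Cov}(z, a)}
= \alpha + \beta \, \frac{\mathrm{Cov}(z, x)}{\mathrm{Cov}(z, a)}.
\end{aligned}
[/math]

When [math]z[/math] is independent of [math]x[/math], the last term vanishes and [math]\tilde{\alpha}[/math] approaches [math]\alpha[/math]. The same expression shows that a small [math]\mathrm{Cov}(z, a)[/math], that is, an instrument that barely predicts the action, amplifies any residual dependence between [math]z[/math] and [math]x[/math].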

An example: taxation

One of the most typical examples of an instrument is taxation. It is particularly useful in the United States of America (USA), due to the existence of different tax laws and rates across the fifty states. For instance, imagine an example where the action is cigarette smoking, the outcome is the contraction of lung cancer and the confounder is an unknown genetic mutation that affects both the affinity to nicotine addiction and the incidence of lung cancer. Because we do not know such a genetic mutation, we cannot easily draw a conclusion about the causal effect of cigarette smoking on lung cancer. There may be a spurious correlation arising from this unknown, and thereby unobserved, genetic mutation. Furthermore, it is definitely unethical to randomly force people to smoke cigarettes, which prevents us from running an RCT.

We can instead use state-level taxation on tobacco as an instrument, assuming that a lower tax on tobacco products would lead to a higher chance and also rate of smoking, and vice versa. First, we build a predictor of smoking from the state (or even county, if applicable) tax rate. The predicted amount of cigarettes smoked by a participant can now work as a proxy for the original action, that is, the actual amount of cigarettes smoked. We then build a predictor of the incidence of lung cancer as well as the reverse predictor (action-to-instrument prediction). We can then use one of the two instrumental variable estimators above to approximate the potential outcome of smoking on lung cancer.

General references

Cho, Kyunghyun (2024). "A Brief Introduction to Causal Inference in Machine Learning". arXiv:2405.08793 [cs.LG].