Riemann Sums and the Trapezoid Rule

This section is divided into two parts. The first is devoted to an alternative approach to the definite integral, which is useful for many purposes. The second is an application of the first part to the problem of computing definite integrals by numerical approximation. We begin by briefly reviewing the definitions in Section 1 of Chapter 4. If the function [math]f[/math] is bounded on the closed interval [math][a, b][/math], then, for every partition [math]\sigma[/math] of [math][a, b][/math], there are defined an upper sum [math]U_\sigma[/math] and a lower sum [math]L_\sigma[/math], which approximate the definite integral from above and below, respectively. The function [math]f[/math] is defined to be integrable over [math][a, b][/math] if there exists one and only one number, denoted by [math]\int_a^b f[/math], with the property that

[math]L_\sigma \le \int_a^b f \le U_\tau[/math]

for every pair of partitions [math]\sigma[/math] and [math]\tau[/math] of [math][a, b][/math]. In the alternative description of the integral, the details are similar, but not the same. As above, let [math]\sigma = \{x_0, \ldots, x_n\}[/math] be a partition of [math][a, b][/math], which satisfies the inequalities

[math]a = x_0 \lt x_1 \lt \cdots \lt x_n = b.[/math]

In each subinterval [math][x_{i-1}, x_i][/math] we select an arbitrary number, which we shall denote by [math]x_i^{*}[/math]. Then the sum

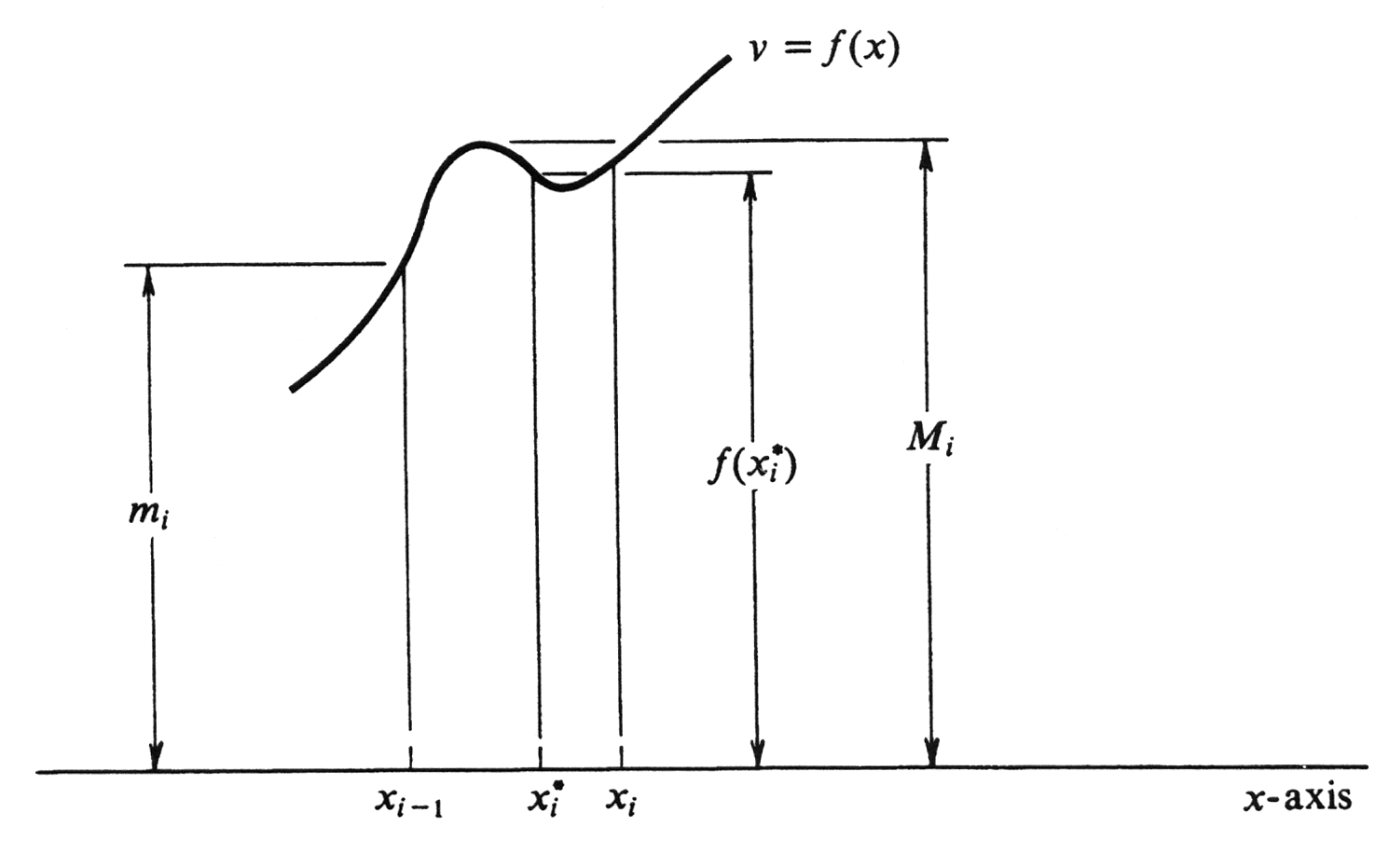

is called a Riemann sum for [math]f[/math] relative to the partition [math]\sigma[/math]. (The name commemorates the great mathematician Bernhard Riemann, 1826-1866.) It is important to realize that since [math]x_i^{*}[/math] may be any number which satisfies [math]x_{i-1} \leq x_i^{*} \leq x_i[/math], there are in general infinitely many Riemann sums [math]R_\sigma[/math] for a given [math]f[/math] and partition [math]\sigma[/math]. However, every [math]R_\sigma[/math] lies between the corresponding upper and lower sums [math]U_\sigma[/math] and [math]L_\sigma[/math]. Indeed, if the least upper bound of the values of [math]f[/math] on [math][x_{i-1}, x_i][/math] is denoted by [math]M_i[/math] and the greatest lower bound by [math]m_i[/math], then [math]f(x_i^{*})[/math] is an intermediate value and

[math]m_i \le f(x_i^*) \le M_i, \qquad i = 1, \ldots, n,[/math]

as shown in Figure 4. Multiplying each of these inequalities by the positive number [math]x_i - x_{i-1}[/math] and adding, we obtain

[math]\sum_{i=1}^{n} m_i (x_i - x_{i-1}) \le \sum_{i=1}^{n} f(x_i^*)(x_i - x_{i-1}) \le \sum_{i=1}^{n} M_i (x_i - x_{i-1}),[/math]

which states that

[math]L_\sigma \le R_\sigma \le U_\sigma[/math]

for every Riemann sum [math]R_\sigma[/math] for [math]f[/math] relative to [math]\sigma[/math].
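The inequality [math]L_\sigma \le R_\sigma \le U_\sigma[/math] can be checked numerically. The Python sketch below assumes that [math]f[/math] is increasing, so that [math]m_i = f(x_{i-1})[/math] and [math]M_i = f(x_i)[/math] on each subinterval; for a general bounded function the greatest lower and least upper bounds cannot simply be read off the endpoints. The helper names `riemann_sum` and `lower_upper_sums_increasing`, the function [math]x^3[/math], and the partition are illustrative choices, not taken from the text.

```python
import random


def riemann_sum(f, partition, tags):
    """Sum of f(x_i*) * (x_i - x_{i-1}); tags[i-1] is the number x_i* chosen in [x_{i-1}, x_i]."""
    total = 0.0
    for left, right, t in zip(partition, partition[1:], tags):
        assert left <= t <= right, "each x_i* must lie in its subinterval"
        total += f(t) * (right - left)
    return total


def lower_upper_sums_increasing(f, partition):
    """Lower and upper sums, valid only for increasing f (m_i = f(x_{i-1}), M_i = f(x_i))."""
    widths = [right - left for left, right in zip(partition, partition[1:])]
    lower = sum(f(left) * w for left, w in zip(partition[:-1], widths))
    upper = sum(f(right) * w for right, w in zip(partition[1:], widths))
    return lower, upper


f = lambda x: x ** 3                       # increasing on [0, 2]
sigma = [0.0, 0.3, 0.7, 1.2, 2.0]          # a partition of [0, 2]
rng = random.Random(0)                     # fixed seed so the run is reproducible
tags = [rng.uniform(l, r) for l, r in zip(sigma, sigma[1:])]

lower, upper = lower_upper_sums_increasing(f, sigma)
r_sigma = riemann_sum(f, sigma, tags)
print(lower <= r_sigma <= upper)           # True
```

Whatever seed is used to choose the numbers [math]x_i^*[/math], the Riemann sum stays between the two bounds.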

Every Riemann sum is an approximation to the definite integral. By taking partitions which subdivide the interval of integration into smaller and smaller subintervals, we should expect to get better and better approximations to [math]\int_a^b f[/math]. One number which measures the fineness of a given partition [math]\sigma[/math] is the length of the largest subinterval into which [math]\sigma[/math] subdivides [math][a, b][/math]. This number is called the mesh of the partition and is denoted by [math]\|\sigma\|[/math]. Thus if [math]\sigma = \{x_0, \ldots, x_n\}[/math] is a partition of [math][a, b][/math] with

[math]a = x_0 \lt x_1 \lt \cdots \lt x_n = b,[/math]

then

[math]\|\sigma\| = \max\{x_i - x_{i-1} : i = 1, \ldots, n\}.[/math]

The following definition states precisely what we mean when we say that the Riemann sums approach a limit as the mesh tends to zero. Let the function [math]f[/math] be bounded on the interval [math][a, b][/math]. We shall write

[math]\lim_{\|\sigma\| \to 0} R_\sigma = L,[/math]

where [math]L[/math] is a real number, if the difference between the number [math]L[/math] and any Riemann sum [math]R_\sigma[/math] for [math]f[/math] is arbitrarily small provided the mesh [math]\|\sigma\|[/math] is sufficiently small. Stated formally, this means that for every positive real number [math]\epsilon[/math] there exists a positive real number [math]\delta[/math] such that, if [math]R_\sigma[/math] is any Riemann sum for [math]f[/math] relative to a partition [math]\sigma[/math] of [math][a, b][/math] and if [math]\|\sigma\| \lt \delta[/math], then [math]|R_\sigma - L| \lt \epsilon[/math]. The fundamental fact that integrability is equivalent to the existence of the limit of Riemann sums is expressed in the following theorem.
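The ε-δ definition can be explored with a small experiment. The sketch below assumes [math]f[/math] is increasing on [math][a, b][/math]. In that case the integral and every Riemann sum lie between [math]L_\sigma[/math] and [math]U_\sigma[/math], and [math]U_\sigma - L_\sigma \le \|\sigma\|\,(f(b) - f(a))[/math], so [math]\delta = \epsilon / (f(b) - f(a))[/math] is one valid choice (not the only one). The code draws random partitions with mesh below [math]\delta[/math] and random numbers [math]x_i^*[/math], then checks [math]|R_\sigma - L| \lt \epsilon[/math] for [math]f(x) = x^2[/math] on [math][0, 1][/math], where [math]L = \frac{1}{3}[/math]. The helpers `mesh`, `random_partition`, and `riemann_sum` are hypothetical names.

```python
import random


def mesh(partition):
    """Length of the largest subinterval [x_{i-1}, x_i]."""
    return max(right - left for left, right in zip(partition, partition[1:]))


def random_partition(a, b, max_step, rng):
    """Random partition of [a, b] in which every subinterval is shorter than max_step."""
    points = [a]
    while points[-1] < b:
        step = rng.uniform(0.1 * max_step, 0.9 * max_step)
        points.append(min(points[-1] + step, b))
    return points


def riemann_sum(f, partition, tags):
    return sum(f(t) * (right - left) for left, right, t in zip(partition, partition[1:], tags))


f = lambda x: x * x                     # increasing on [0, 1]
a, b, limit = 0.0, 1.0, 1.0 / 3.0       # limit = integral of x^2 over [0, 1]
eps = 1e-3
delta = eps / (f(b) - f(a))             # one valid delta for an increasing f

rng = random.Random(1)
worst = 0.0
for _ in range(50):
    sigma = random_partition(a, b, delta, rng)
    assert mesh(sigma) < delta
    tags = [rng.uniform(left, right) for left, right in zip(sigma, sigma[1:])]
    worst = max(worst, abs(riemann_sum(f, sigma, tags) - limit))

print(worst < eps)   # True
```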

Theorem (2.1). Let [math]f[/math] be bounded on [math][a, b][/math]. Then [math]f[/math] is integrable over [math][a, b][/math] if and only if [math]\lim_{\|\sigma\| \to 0} R_\sigma[/math] exists. If this limit exists, then it is equal to [math]\int_a^b f[/math].

The proof is given in Appendix C.

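Example 1. Using the fact that the definite integral is the limit of Riemann sums, evaluate

[math]\lim_{n \to \infty} \frac{\sqrt{1} + \sqrt{2} + \cdots + \sqrt{n}}{n^{3/2}}.[/math]

The numerator of this fraction suggests trying the function [math]f[/math] defined by [math]f(x) = \sqrt{x}[/math]. If [math]\sigma = \{x_0, \ldots, x_n\}[/math] is the partition of the interval [math][0, 1][/math] into [math]n[/math] subintervals of length [math]\frac{1}{n}[/math], then

[math]x_i = \frac{i}{n}, \qquad i = 0, 1, \ldots, n.[/math]

In each subinterval [math][x_{i-1}, x_i][/math] we take [math]x_{i}^{*} = x_i[/math]. Then

[math]f(x_i^*)(x_i - x_{i-1}) = \sqrt{\frac{i}{n}} \cdot \frac{1}{n} = \frac{\sqrt{i}}{n^{3/2}},[/math]

and the resulting Riemann sum, denoted by [math]R_n[/math], is given by

[math]R_n = \sum_{i=1}^{n} \frac{\sqrt{i}}{n^{3/2}} = \frac{\sqrt{1} + \sqrt{2} + \cdots + \sqrt{n}}{n^{3/2}}.[/math]

The function [math]\sqrt{x}[/math] is continuous and hence integrable over the interval [math][0, 1][/math]. Since [math]\|\sigma\| = \frac{1}{n} \to 0[/math] as [math]n \to \infty[/math], it follows from Theorem (2.1) that

[math]\lim_{n \to \infty} R_n = \int_0^1 \sqrt{x}\,dx = \frac{2}{3} x^{3/2} \Big|_0^1 = \frac{2}{3}.[/math]

Hence

[math]\lim_{n \to \infty} \frac{\sqrt{1} + \sqrt{2} + \cdots + \sqrt{n}}{n^{3/2}} = \frac{2}{3},[/math]
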
and the problem is solved.
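The convergence in the first example can be watched numerically. This short Python sketch (the function name `R` simply mirrors [math]R_n[/math]) evaluates the right-endpoint Riemann sums for [math]\sqrt{x}[/math] on [math][0, 1][/math] and prints their distance from [math]\frac{2}{3}[/math].

```python
import math


def R(n):
    """R_n = (sqrt(1) + sqrt(2) + ... + sqrt(n)) / n**(3/2), the Riemann sum with x_i* = i/n."""
    return sum(math.sqrt(i) for i in range(1, n + 1)) / n ** 1.5


for n in (10, 100, 1000, 10000):
    print(n, R(n), abs(R(n) - 2 / 3))
```

Because [math]\sqrt{x}[/math] is increasing and right endpoints are used, each [math]R_n[/math] exceeds [math]\frac{2}{3}[/math], and the gap shrinks as [math]n[/math] grows.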

Theorem (2.1) shows that it is immaterial whether we define the definite integral in terms of upper and lower sums (as is done in this book) or as the limit of Riemann sums. Hence there is no logical necessity for introducing the latter at all. However, a striking illustration of the practical use of Riemann sums arises in studying the problem of evaluating definite integrals by numerical methods.

In spite of the variety of techniques which exist for finding antiderivatives and the existence of tables of indefinite integrals, there are still many functions for which we cannot find an antiderivative. For such functions, numerical approximation is often the only way of computing [math]\int_a^b f(x)\,dx[/math]. However, the increasing availability of high-speed computers has placed these methods in an entirely new light. Evaluating [math]\int_a^b f(x)\,dx[/math] by numerical approximation is no longer to be regarded as a last resort to be used only if all else fails. It is an interesting, instructive, and simple task to write a machine program to do the job, and the hundreds, thousands, or even millions of arithmetic operations which may be needed to obtain the answer to a desired accuracy can be performed by a machine in a matter of seconds or minutes.

One of the simplest and best of the techniques of numerical integration is the Trapezoid Rule, which we now describe. Suppose that the function [math]f[/math] is integrable over the interval [math][a, b][/math]. For every positive integer [math]n[/math], let [math]\sigma_n[/math] be the partition which subdivides [math][a, b][/math] into [math]n[/math] subintervals of equal length [math]h[/math]. Thus [math]\sigma_n = \{x_0, \ldots, x_n\}[/math] and

[math]h = \frac{b - a}{n}, \qquad x_i = a + ih, \qquad i = 0, 1, \ldots, n.[/math]

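It is convenient to set

[math]y_i = f(x_i), \qquad i = 0, 1, \ldots, n.[/math]

Then the Riemann sum obtained by choosing [math]x_i^*[/math] to be the left endpoint of each subinterval [math][x_{i-1}, x_i][/math], i.e., by choosing [math]x_{i}^{*} = x_{i-1}[/math], is given by

[math]\sum_{i=1}^{n} f(x_{i-1})(x_i - x_{i-1}) = h(y_0 + y_1 + \cdots + y_{n-1}).[/math]

Similarly, the Riemann sum obtained by choosing [math]x_{i}^{*}[/math] to be the right endpoint, i.e., by taking [math]x_{i}^{*} = x_i[/math], is

[math]\sum_{i=1}^{n} f(x_i)(x_i - x_{i-1}) = h(y_1 + y_2 + \cdots + y_n).[/math]

The approximation to [math]\int_a^b f[/math] prescribed by the Trapezoid Rule, which we denote by [math]T_n[/math], is by definition the average of these two Riemann sums. Thus

[math]T_n = \frac{1}{2}\left[h(y_0 + y_1 + \cdots + y_{n-1}) + h(y_1 + y_2 + \cdots + y_n)\right] = \frac{h}{2}\left[(y_0 + y_1 + \cdots + y_{n-1}) + (y_1 + y_2 + \cdots + y_n)\right]. \qquad (1)[/math]

The last expression above can be simplified by observing that

[math](y_0 + y_1 + \cdots + y_{n-1}) + (y_1 + y_2 + \cdots + y_n) = y_0 + 2y_1 + 2y_2 + \cdots + 2y_{n-1} + y_n.[/math]

Hence

[math]T_n = \frac{h}{2}\left(y_0 + 2y_1 + 2y_2 + \cdots + 2y_{n-1} + y_n\right).[/math]
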
We shall express the fact that [math]T_n[/math] is an approximation to the integral [math]\int_a^b f[/math] by writing [math]\int_a^b f \approx T_n[/math]. The Trapezoid Rule then appears as the formula

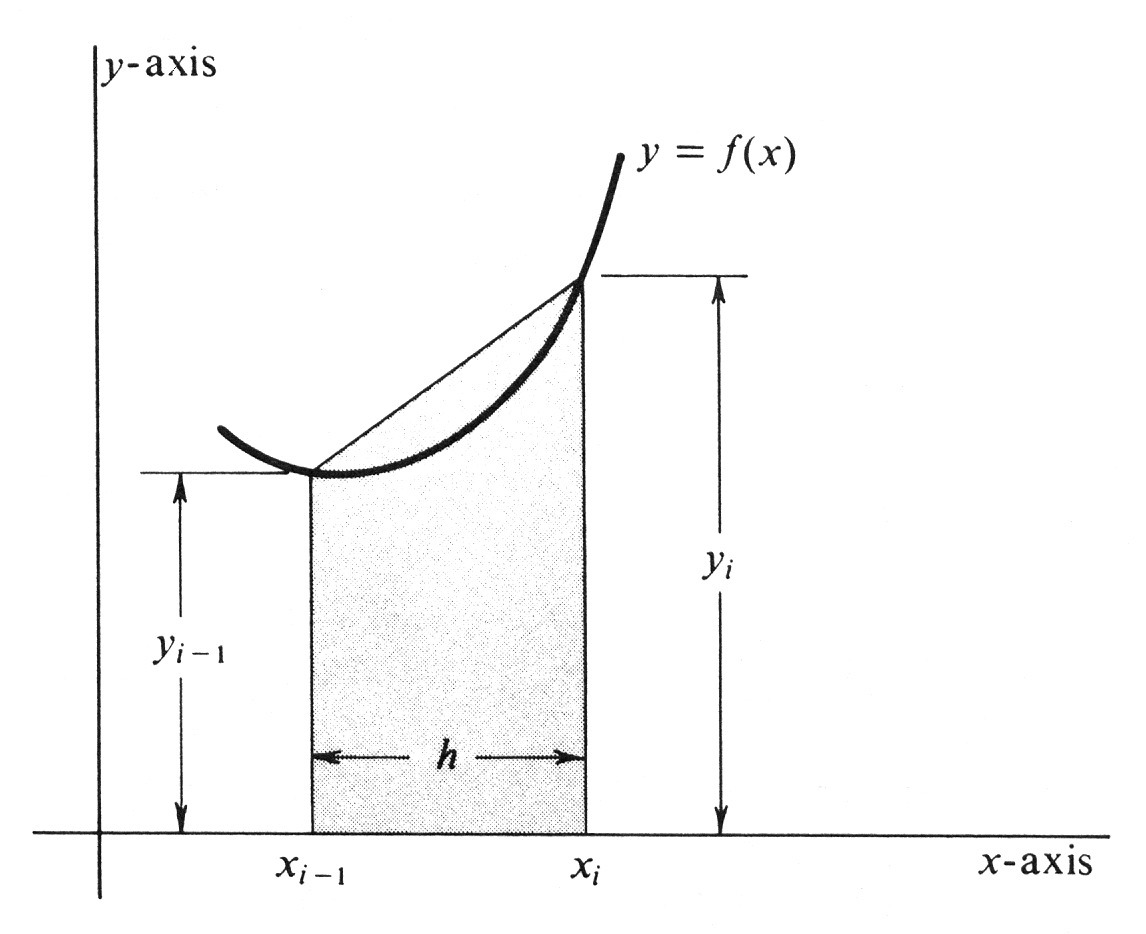
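As an illustration of the kind of machine program the text has in mind, here is a minimal Python sketch of the Trapezoid Rule formula. The test integrand [math]e^{-x^2}[/math] is our choice, not the book's: it has no elementary antiderivative, so its integral over [math][0, 1][/math] cannot be found with the Fundamental Theorem of Calculus. The reference value uses the identity [math]\int_0^1 e^{-x^2}\,dx = \frac{\sqrt{\pi}}{2}\operatorname{erf}(1)[/math] together with Python's `math.erf`; the name `trapezoid` is hypothetical.

```python
import math


def trapezoid(f, a, b, n):
    """T_n = (h/2)(y_0 + 2y_1 + ... + 2y_{n-1} + y_n) with h = (b - a)/n and y_i = f(a + ih)."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))   # y_1 + ... + y_{n-1}
    return h / 2 * (f(a) + 2 * interior + f(b))


f = lambda x: math.exp(-x * x)
reference = math.sqrt(math.pi) / 2 * math.erf(1.0)

for n in (4, 16, 64):
    t = trapezoid(f, 0.0, 1.0, n)
    print(n, t, reference - t)
```

Only [math]n + 1[/math] evaluations of [math]f[/math] are needed, which is why the rule is so easy to program.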

Why is this formula called the Trapezoid Rule? Suppose that [math]f(x) \geq 0[/math] for every [math]x[/math] in [math][a, b][/math], and observe that

[math]\int_a^b f = \sum_{i=1}^{n} \int_{x_{i-1}}^{x_i} f.[/math]

The area of the shaded trapezoid shown in Figure 5 is an approximation to [math]\int_{x_{i-1}}^{x_i} f[/math]. This trapezoid has bases [math]y_{i-1} = f(x_{i-1})[/math] and [math]y_i = f(x_i)[/math] and altitude [math]h[/math]. By a well-known formula, its area is therefore equal to [math]\frac{h}{2} (y_{i-1} + y_i)[/math]. The integral [math]\int_a^b f[/math] is therefore approximated by the sum of the areas of the trapezoids, which is equal to

[math]\sum_{i=1}^{n} \frac{h}{2}(y_{i-1} + y_i).[/math]

Equation (1) shows that this number is equal to [math]T_n[/math].
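The three descriptions of [math]T_n[/math] (the average of the left- and right-endpoint Riemann sums, the sum of the trapezoid areas [math]\frac{h}{2}(y_{i-1} + y_i)[/math], and the simplified formula [math]\frac{h}{2}(y_0 + 2y_1 + \cdots + 2y_{n-1} + y_n)[/math]) can be compared in exact rational arithmetic. This Python sketch uses `fractions.Fraction`; the example [math]f(x) = x^2[/math] on [math][0, 1][/math] with [math]n = 4[/math] and the name `three_forms` are illustrative choices.

```python
from fractions import Fraction


def three_forms(f, a, b, n):
    h = (b - a) / n
    y = [f(a + i * h) for i in range(n + 1)]                 # y_i = f(x_i), x_i = a + ih
    left = h * sum(y[:-1])                                   # h(y_0 + ... + y_{n-1})
    right = h * sum(y[1:])                                   # h(y_1 + ... + y_n)
    average = (left + right) / 2                             # definition of T_n
    trapezoids = sum(h / 2 * (y[i - 1] + y[i]) for i in range(1, n + 1))
    formula = h / 2 * (y[0] + 2 * sum(y[1:-1]) + y[-1])
    return average, trapezoids, formula


print(three_forms(lambda x: x * x, Fraction(0), Fraction(1), 4))
# (Fraction(11, 32), Fraction(11, 32), Fraction(11, 32))
```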

Example 2. Using the Trapezoid Rule, find an approximate value for [math]\int_{-1}^1 (x^3 + x^2)\,dx[/math]. We shall subdivide the interval [math][-1, 1][/math] into [math]n = 8[/math] subintervals, each of length [math]h = \frac{1}{4}[/math]. The relevant numbers are compiled in Table 1.

| [math]i[/math] | [math]x_i[/math] | [math]y_i = x_i^2 + x_i^3[/math] |
|---|---|---|
| 0 | [math]-1[/math] | [math]1 - 1 = 0[/math] |
| 1 | [math]-\frac{3}{4}[/math] | [math]\frac{9}{16} - \frac{27}{64} = \frac{9}{64}[/math] |
| 2 | [math]-\frac{2}{4}[/math] | [math]\frac{4}{16} - \frac{8}{64} = \frac{8}{64}[/math] |
| 3 | [math]-\frac{1}{4}[/math] | [math]\frac{1}{16} - \frac{1}{64} = \frac{3}{64}[/math] |
| 4 | 0 | [math]0 + 0 = 0[/math] |
| 5 | [math]\frac{1}{4}[/math] | [math]\frac{1}{16} + \frac{1}{64} = \frac{5}{64}[/math] |
| 6 | [math]\frac{2}{4}[/math] | [math]\frac{4}{16} + \frac{8}{64} = \frac{24}{64}[/math] |
| 7 | [math]\frac{3}{4}[/math] | [math]\frac{9}{16} + \frac{27}{64} = \frac{63}{64}[/math] |
| 8 | 1 | [math]1 + 1 = 2[/math] |

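Hence

[math]\int_{-1}^{1} (x^3 + x^2)\,dx \approx T_8 = \frac{1}{8}\left[0 + 2\left(\frac{9}{64} + \frac{8}{64} + \frac{3}{64} + 0 + \frac{5}{64} + \frac{24}{64} + \frac{63}{64}\right) + 2\right] = \frac{1}{8}\left(\frac{7}{2} + 2\right) = \frac{11}{16}.[/math]

With the Fundamental Theorem of Calculus it is easy in this case to compute the integral exactly. We get

[math]\int_{-1}^{1} (x^3 + x^2)\,dx = \left(\frac{x^4}{4} + \frac{x^3}{3}\right)\Big|_{-1}^{1} = \left(\frac{1}{4} + \frac{1}{3}\right) - \left(\frac{1}{4} - \frac{1}{3}\right) = \frac{2}{3}.[/math]

The error obtained using the Trapezoid Rule is, therefore,

[math]\int_{-1}^{1} (x^3 + x^2)\,dx - T_8 = \frac{2}{3} - \frac{11}{16} = -\frac{1}{48} \approx -0.021.[/math]
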
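The arithmetic of Example 2 can be reproduced exactly with Python's `fractions.Fraction`, which avoids any rounding. This is a checking aid under our own naming, not part of the original text; the hypothetical `trapezoid` helper returns both [math]T_n[/math] and the list of values [math]y_i[/math].

```python
from fractions import Fraction


def trapezoid(f, a, b, n):
    """Return T_n and the values y_0, ..., y_n on the uniform partition of [a, b]."""
    h = (b - a) / n
    y = [f(a + i * h) for i in range(n + 1)]
    return h / 2 * (y[0] + 2 * sum(y[1:-1]) + y[-1]), y


f = lambda x: x ** 3 + x ** 2
T8, y = trapezoid(f, Fraction(-1), Fraction(1), 8)
exact = Fraction(2, 3)                  # from the antiderivative x^4/4 + x^3/3

print(*y)                               # the y_i column of Table 1, in lowest terms
print(T8, exact - T8)                   # 11/16 -1/48
```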
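In Example 2, the error can be reduced by taking a larger value of [math]n[/math], or, equivalently, a smaller value of [math]h = \frac{b - a}{n}[/math]. Indeed, it is easy to show that in any application of the Trapezoid Rule the error approaches zero as [math]n[/math] increases without bound (or, equivalently, as [math]h[/math] approaches zero). That is, we have the following theorem.

Theorem (2.3). If [math]f[/math] is integrable over [math][a, b][/math], then [math]\lim_{n \to \infty} T_n = \int_a^b f[/math].

Proof. The two sums averaged in equation (1) are Riemann sums for [math]f[/math] relative to [math]\sigma_n[/math], and [math]\|\sigma_n\| = h = \frac{b - a}{n}[/math] approaches zero as [math]n \to \infty[/math]. By Theorem (2.1), each of them therefore approaches [math]\int_a^b f[/math], and it follows from equation (1) that

[math]\lim_{n \to \infty} T_n = \frac{1}{2}\left(\lim_{n \to \infty} h\sum_{i=1}^{n} y_{i-1} + \lim_{n \to \infty} h\sum_{i=1}^{n} y_i\right) = \frac{1}{2}\left(\int_a^b f + \int_a^b f\right) = \int_a^b f.[/math]
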
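Theorem (2.3) asks only that [math]f[/math] be integrable, not continuous. A minimal Python sketch, assuming the illustrative step function equal to 0 on [math][0, \frac{1}{3})[/math] and 1 on [math][\frac{1}{3}, 1][/math] (whose integral over [math][0, 1][/math] is [math]\frac{2}{3}[/math]), shows [math]T_n[/math] approaching the integral as [math]n[/math] grows even though the integrand has a jump. The names `trapezoid` and `step` are hypothetical.

```python
def trapezoid(f, a, b, n):
    """T_n = (h/2)(y_0 + 2y_1 + ... + 2y_{n-1} + y_n) with h = (b - a)/n."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return h / 2 * (f(a) + 2 * interior + f(b))


def step(x):
    """Integrable on [0, 1] but discontinuous at x = 1/3."""
    return 1.0 if x >= 1 / 3 else 0.0


exact = 2 / 3
for n in (10, 100, 1000, 10000):
    print(n, abs(trapezoid(step, 0.0, 1.0, n) - exact))
```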
As a practical aid to computation, Theorem (2.3) is actually of little value. What is needed instead is a method of estimating the error, which is equal to

[math]\int_a^b f - T_n,[/math]
for a particular choice of [math]n[/math] used in a particular application of the Trapezoid Rule. For this purpose the following theorem is useful.

Theorem (2.4). If the second derivative [math]f''[/math] is continuous at every point of [math][a, b][/math], then there exists a number [math]c[/math] such that [math]a \lt c \lt b[/math] and

[math]\int_a^b f - T_n = -\frac{(b - a)h^2}{12} f''(c).[/math]

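For the integrand of Example 2, [math]f''(x) = 6x + 2[/math] is linear, so the number [math]c[/math] promised by Theorem (2.4) can be solved for explicitly from the exact error. The Python sketch below does this in exact rational arithmetic with `fractions.Fraction` for a few arbitrarily chosen values of [math]n[/math] and checks that [math]-1 \lt c \lt 1[/math]; the `trapezoid` helper is a hypothetical name.

```python
from fractions import Fraction


def trapezoid(f, a, b, n):
    h = (b - a) / n
    return h / 2 * (f(a) + 2 * sum(f(a + i * h) for i in range(1, n)) + f(b))


f = lambda x: x ** 3 + x ** 2
a, b, exact = Fraction(-1), Fraction(1), Fraction(2, 3)

results = []
for n in (2, 8, 20):
    h = (b - a) / n
    error = exact - trapezoid(f, a, b, n)
    # Theorem (2.4): error = -(b - a) h^2 f''(c) / 12, so solve for f''(c) ...
    f2_at_c = -12 * error / ((b - a) * h ** 2)
    # ... and then use f''(c) = 6c + 2 to solve for c.
    c = (f2_at_c - 2) / 6
    results.append((n, error, c))
    print(n, error, c, a < c < b)
```

Here [math]c = 0[/math] for every [math]n[/math] tried: the odd part [math]x^3[/math] contributes no error on a partition symmetric about 0, and for [math]x^2[/math] the error is exactly [math]-\frac{(b - a)h^2}{12} \cdot 2[/math].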
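An outline of a proof of this theorem can be found in J. M. H. Olmsted, Advanced Calculus, Appleton-Century-Crofts, 1961, pages 118 and 119. To see how this theorem can be used, consider Example 2, in which [math]f(x) = x^3 + x^2[/math] and in which the interval of integration is [math][-1, 1][/math]. In this case [math]f''(x) = 6x + 2[/math], from which it is easy to see that

[math]|f''(x)| \le 8 \qquad \text{for } -1 \le x \le 1.[/math]

Applying (2.4) with [math]b - a = 2[/math] and [math]h = \frac{1}{4}[/math], we have

[math]\int_{-1}^{1} (x^3 + x^2)\,dx - T_8 = -\frac{2}{12}\left(\frac{1}{4}\right)^2 f''(c) = -\frac{1}{96} f''(c), \qquad -1 \lt c \lt 1.[/math]

Hence the error satisfies

[math]\left|\int_{-1}^{1} (x^3 + x^2)\,dx - T_8\right| \le \frac{8}{96} = \frac{1}{12}.[/math]
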
Since this bound is proportional to [math]h^2[/math], halving [math]h[/math] would quarter the bound on the error. If we were to replace [math]h[/math] by [math]\frac{h}{10}[/math], which would be no harder with a machine, we would divide the bound by 100.
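The [math]h^2[/math] scaling can be checked exactly. The Python sketch below uses the integrand of Example 2 and `fractions.Fraction` to compute both the actual error and the bound [math]\frac{(b - a)h^2}{12}\max|f''|[/math] from Theorem (2.4) for [math]n = 8[/math], [math]n = 16[/math] ([math]h[/math] halved), and [math]n = 80[/math] ([math]h[/math] divided by 10). The helper names are hypothetical.

```python
from fractions import Fraction


def trapezoid(f, a, b, n):
    h = (b - a) / n
    return h / 2 * (f(a) + 2 * sum(f(a + i * h) for i in range(1, n)) + f(b))


f = lambda x: x ** 3 + x ** 2
a, b, exact = Fraction(-1), Fraction(1), Fraction(2, 3)
max_abs_f2 = 8                        # |f''(x)| = |6x + 2| <= 8 on [-1, 1]


def error_and_bound(n):
    h = (b - a) / n
    error = exact - trapezoid(f, a, b, n)
    bound = (b - a) * h ** 2 / 12 * max_abs_f2    # bound from Theorem (2.4)
    return error, bound


e8, bound8 = error_and_bound(8)       # h = 1/4
e16, bound16 = error_and_bound(16)    # h halved
e80, bound80 = error_and_bound(80)    # h divided by 10

print(e8, bound8)                          # -1/48 1/12
print(bound8 / bound16, bound8 / bound80)  # 4 100
print(e8 / e16, e8 / e80)                  # 4 100 for this integrand
```

For this particular integrand the error itself, not just the bound, scales exactly by 4 and by 100, because here the error equals [math]-\frac{h^2}{3}[/math]; for a general [math]f[/math] only the bound is guaranteed to scale this way.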

General references

Doyle, Peter G. (2008). "Crowell and Slesnick's Calculus with Analytic Geometry" (PDF). Retrieved Oct 29, 2024.