Dimension-free convex optimization

\label{dimfree} We investigate here variants of the {\em gradient descent} scheme. This iterative algorithm, which can be traced back to [1], is the simplest strategy to minimize a differentiable function [math]f[/math] on [math]\R^n[/math]. Starting at some initial point [math]x_1 \in \R^n[/math] it iterates the following equation:

where [math]\eta \gt 0[/math] is a fixed step-size parameter. The rationale behind \eqref{eq:Cau47} is to make a small step in the direction that minimizes the local first order Taylor approximation of [math]f[/math] (also known as the steepest descent direction). As we shall see, methods of the type \eqref{eq:Cau47} can obtain an oracle complexity {\em independent of the dimension}[Notes 1]. This feature makes them particularly attractive for optimization in very high dimension. Apart from Section Conditional gradient descent, aka Frank-Wolfe, in this chapter [math]\|\cdot\|[/math] denotes the Euclidean norm. The set of constraints [math]\cX \subset \R^n[/math] is assumed to be compact and convex. We define the projection operator [math]\Pi_{\cX}[/math] on [math]\cX[/math] by

The following lemma will prove to be useful in our study. It is an easy corollary of Proposition, see also Figure.

Let [math]x \in \cX[/math] and [math]y \in \R^n[/math], then

Unless specified otherwise all the proofs in this chapter are taken from [2] (with slight simplification in some cases).

Projected subgradient descent for Lipschitz functions

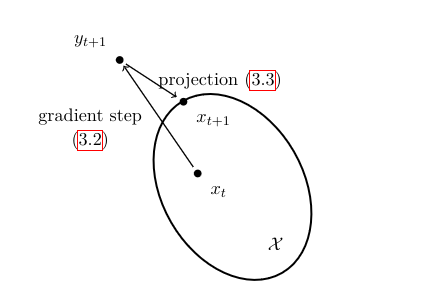

In this section we assume that [math]\cX[/math] is contained in an Euclidean ball centered at [math]x_1 \in \cX[/math] and of radius [math]R[/math]. Furthermore we assume that [math]f[/math] is such that for any [math]x \in \cX[/math] and any [math]g \in \partial f(x)[/math] (we assume [math]\partial f(x) \neq \emptyset[/math]), one has [math]\|g\| \leq L[/math]. Note that by the subgradient inequality and Cauchy-Schwarz this implies that [math]f[/math] is [math]L[/math]-Lipschitz on [math]\cX[/math], that is [math]|f(x) - f(y)| \leq L \|x-y\|[/math]. In this context we make two modifications to the basic gradient descent \eqref{eq:Cau47}. First, obviously, we replace the gradient [math]\nabla f(x)[/math] (which may not exist) by a subgradient [math]g \in \partial f(x)[/math]. Secondly, and more importantly, we make sure that the updated point lies in [math]\cX[/math] by projecting back (if necessary) onto it. This gives the {\em projected subgradient descent} algorithm[Notes 2] which iterates the following equations for [math]t \geq 1[/math]:

This procedure is illustrated in Figure. We prove now a rate of convergence for this method under the above assumptions.

The projected subgradient descent method with [math]\eta = \frac{R}{L \sqrt{t}}[/math] satisfies

Using the definition of subgradients, the definition of the method, and the elementary identity [math]2 a^{\top} b = \|a\|^2 + \|b\|^2 - \|a-b\|^2[/math], one obtains

We will show in Section Lower bounds that the rate given in Theorem is unimprovable from a black-box perspective. Thus to reach an [math]\epsilon[/math]-optimal point one needs [math]\Theta(1/\epsilon^2)[/math] calls to the oracle. In some sense this is an astonishing result as this complexity is independent[Notes 3] of the ambient dimension [math]n[/math]. On the other hand this is also quite disappointing compared to the scaling in [math]\log(1/\epsilon)[/math] of the center of gravity and ellipsoid method of Chapter. To put it differently with gradient descent one could hope to reach a reasonable accuracy in very high dimension, while with the ellipsoid method one can reach very high accuracy in reasonably small dimension. A major task in the following sections will be to explore more restrictive assumptions on the function to be optimized in order to have the best of both worlds, that is an oracle complexity independent of the dimension and with a scaling in [math]\log(1/\epsilon)[/math]. The computational bottleneck of the projected subgradient descent is often the projection step \eqref{eq:PGD2} which is a convex optimization problem by itself. In some cases this problem may admit an analytical solution (think of [math]\cX[/math] being an Euclidean ball), or an easy and fast combinatorial algorithm to solve it (this is the case for [math]\cX[/math] being an [math]\ell_1[/math]-ball, see [3]). We will see in Section Conditional gradient descent, aka Frank-Wolfe a projection-free algorithm which operates under an extra assumption of smoothness on the function to be optimized. Finally we observe that the step-size recommended by Theorem depends on the number of iterations to be performed. In practice this may be an undesirable feature. However using a time-varying step size of the form [math]\eta_s = \frac{R}{L \sqrt{s}}[/math] one can prove the same rate up to a [math]\log t[/math] factor. In any case these step sizes are very small, which is the reason for the slow convergence. In the next section we will see that by assuming {\em smoothness} in the function [math]f[/math] one can afford to be much more aggressive. Indeed in this case, as one approaches the optimum the size of the gradients themselves will go to [math]0[/math], resulting in a sort of ``auto-tuning" of the step sizes which does not happen for an arbitrary convex function.

Gradient descent for smooth functions

We say that a continuously differentiable function [math]f[/math] is [math]\beta[/math]-smooth if the gradient [math]\nabla f[/math] is [math]\beta[/math]-Lipschitz, that is

Note that if [math]f[/math] is twice differentiable then this is equivalent to the eigenvalues of the Hessians being smaller than [math]\beta[/math]. In this section we explore potential improvements in the rate of convergence under such a smoothness assumption. In order to avoid technicalities we consider first the unconstrained situation, where [math]f[/math] is a convex and [math]\beta[/math]-smooth function on [math]\R^n[/math]. The next theorem shows that {\em gradient descent}, which iterates [math]x_{t+1} = x_t - \eta \nabla f(x_t)[/math], attains a much faster rate in this situation than in the non-smooth case of the previous section.

Let [math]f[/math] be convex and [math]\beta[/math]-smooth on [math]\R^n[/math]. Then gradient descent with [math]\eta = \frac{1}{\beta}[/math] satisfies

Before embarking on the proof we state a few properties of smooth convex functions.

Let [math]f[/math] be a [math]\beta[/math]-smooth function on [math]\R^n[/math]. Then for any [math]x, y \in \R^n[/math], one has

We represent [math]f(x) - f(y)[/math] as an integral, apply Cauchy-Schwarz and then [math]\beta[/math]-smoothness:

In particular this lemma shows that if [math]f[/math] is convex and [math]\beta[/math]-smooth, then for any [math]x, y \in \R^n[/math], one has

This gives in particular the following important inequality to evaluate the improvement in one step of gradient descent:

The next lemma, which improves the basic inequality for subgradients under the smoothness assumption, shows that in fact [math]f[/math] is convex and [math]\beta[/math]-smooth if and only if \eqref{eq:defaltsmooth} holds true. In the literature \eqref{eq:defaltsmooth} is often used as a definition of smooth convex functions.

Let [math]f[/math] be such that \eqref{eq:defaltsmooth} holds true. Then for any [math]x, y \in \R^n[/math], one has

Let [math]z = y - \frac{1}{\beta} (\nabla f(y) - \nabla f(x))[/math]. Then one has

We can now prove Theorem \begin{proof} Using \eqref{eq:onestepofgd} and the definition of the method one has

In particular, denoting [math]\delta_s = f(x_s) - f(x^*)[/math], this shows:

One also has by convexity

We will prove that [math]\|x_s - x^*\|[/math] is decreasing with [math]s[/math], which with the two above displays will imply

Let us see how to use this last inequality to conclude the proof. Let [math]\omega = \frac{1}{2 \beta \|x_1 - x^*\|^2}[/math], then[Notes 4]

Thus it only remains to show that [math]\|x_s - x^*\|[/math] is decreasing with [math]s[/math]. Using Lemma one immediately gets

We use this as follows (together with [math]\nabla f(x^*) = 0[/math])

which concludes the proof. \end{proof} \subsection*{The constrained case} We now come back to the constrained problem

Similarly to what we did in Section Projected subgradient descent for Lipschitz functions we consider the projected gradient descent algorithm, which iterates [math]x_{t+1} = \Pi_{\cX}(x_t - \eta \nabla f(x_t))[/math]. The key point in the analysis of gradient descent for unconstrained smooth optimization is that a step of gradient descent started at [math]x[/math] will decrease the function value by at least [math]\frac{1}{2\beta} \|\nabla f(x)\|^2[/math], see \eqref{eq:onestepofgd}. In the constrained case we cannot expect that this would still hold true as a step may be cut short by the projection. The next lemma defines the ``right" quantity to measure progress in the constrained case.

Let [math]x, y \in \cX[/math], [math]x^+ = \Pi_{\cX}\left(x - \frac{1}{\beta} \nabla f(x)\right)[/math], and [math]g_{\cX}(x) = \beta(x - x^+)[/math]. Then the following holds true:

We first observe that

We can now prove the following result.

Let [math]f[/math] be convex and [math]\beta[/math]-smooth on [math]\cX[/math]. Then projected gradient descent with [math]\eta = \frac{1}{\beta}[/math] satisfies

{{{4}}}

Conditional gradient descent, aka Frank-Wolfe

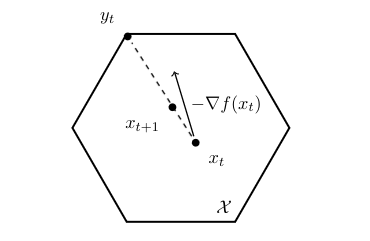

We describe now an alternative algorithm to minimize a smooth convex function [math]f[/math] over a compact convex set [math]\mathcal{X}[/math]. The {\em conditional gradient descent}, introduced in [4], performs the following update for [math]t \geq 1[/math], where [math](\gamma_s)_{s \geq 1}[/math] is a fixed sequence,

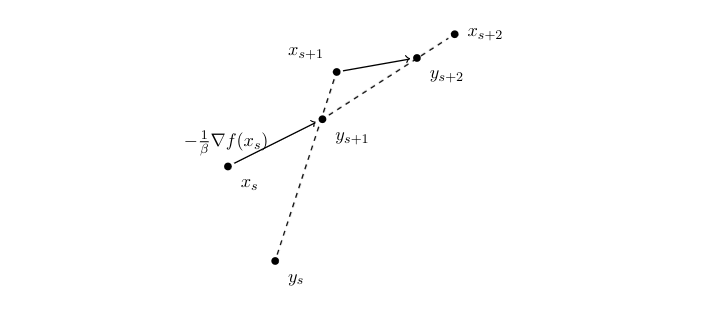

In words conditional gradient descent makes a step in the steepest descent direction {\em given the constraint set [math]\cX[/math]}, see Figure for an illustration. From a computational perspective, a key property of this scheme is that it replaces the projection step of projected gradient descent by a linear optimization over [math]\cX[/math], which in some cases can be a much simpler problem.

We now turn to the analysis of this method. A major advantage of conditional gradient descent over projected gradient descent is that the former can adapt to smoothness in an arbitrary norm. Precisely let [math]f[/math] be [math]\beta[/math]-smooth in some norm [math]\|\cdot\|[/math], that is [math]\|\nabla f(x) - \nabla f(y) \|_* \leq \beta \|x-y\|[/math] where the dual norm [math]\|\cdot\|_*[/math] is defined as [math]\|g\|_* = \sup_{x \in \mathbb{R}^n : \|x\| \leq 1} g^{\top} x[/math]. The following result is extracted from [5] (see also [6]).

Let [math]f[/math] be a convex and [math]\beta[/math]-smooth function w.r.t. some norm [math]\|\cdot\|[/math], [math]R = \sup_{x, y \in \mathcal{X}} \|x - y\|[/math], and [math]\gamma_s = \frac{2}{s+1}[/math] for [math]s \geq 1[/math]. Then for any [math]t \geq 2[/math], one has

The following inequalities hold true, using respectively [math]\beta[/math]-smoothness (it can easily be seen that \eqref{eq:defaltsmooth} holds true for smoothness in an arbitrary norm), the definition of [math]x_{s+1}[/math], the definition of [math]y_s[/math], and the convexity of [math]f[/math]:

In addition to being projection-free and ``norm-free", the conditional gradient descent satisfies a perhaps even more important property: it produces {\em sparse iterates}. More precisely consider the situation where [math]\cX \subset \R^n[/math] is a polytope, that is the convex hull of a finite set of points (these points are called the vertices of [math]\cX[/math]). Then Carath\’eodory's theorem states that any point [math]x \in \cX[/math] can be written as a convex combination of at most [math]n+1[/math] vertices of [math]\cX[/math]. On the other hand, by definition of the conditional gradient descent, one knows that the [math]t^{th}[/math] iterate [math]x_t[/math] can be written as a convex combination of [math]t[/math] vertices (assuming that [math]x_1[/math] is a vertex). Thanks to the dimension-free rate of convergence one is usually interested in the regime where [math]t \ll n[/math], and thus we see that the iterates of conditional gradient descent are very sparse in their vertex representation. We note an interesting corollary of the sparsity property together with the rate of convergence we proved: smooth functions on the simplex [math]\{x \in \mathbb{R}_+^n : \sum_{i=1}^n x_i = 1\}[/math] always admit sparse approximate minimizers. More precisely there must exist a point [math]x[/math] with only [math]t[/math] non-zero coordinates and such that [math]f(x) - f(x^*) = O(1/t)[/math]. Clearly this is the best one can hope for in general, as it can be seen with the function [math]f(x) = \|x\|^2_2[/math] since by Cauchy-Schwarz one has [math]\|x\|_1 \leq \sqrt{\|x\|_0} \|x\|_2[/math] which implies on the simplex [math]\|x\|_2^2 \geq 1 / \|x\|_0[/math]. Next we describe an application where the three properties of conditional gradient descent (projection-free, norm-free, and sparse iterates) are critical to develop a computationally efficient procedure. \subsection*{An application of conditional gradient descent: Least-squares regression with structured sparsity} This example is inspired by [7] (see also [8]). Consider the problem of approximating a signal [math]Y \in \mathbb{R}^n[/math] by a ‘`small" combination of dictionary elements [math]d_1, \hdots, d_N \in \mathbb{R}^n[/math]. One way to do this is to consider a LASSO type problem in dimension [math]N[/math] of the following form (with [math]\lambda \in \R[/math] fixed)

Let [math]D \in \mathbb{R}^{n \times N}[/math] be the dictionary matrix with [math]i^{th}[/math] column given by [math]d_i[/math]. Instead of considering the penalized version of the problem one could look at the following constrained problem (with [math]s \in \R[/math] fixed) on which we will now focus, see e.g. [9],

We make some assumptions on the dictionary. We are interested in situations where the size of the dictionary [math]N[/math] can be very large, potentially exponential in the ambient dimension [math]n[/math]. Nonetheless we want to restrict our attention to algorithms that run in reasonable time with respect to the ambient dimension [math]n[/math], that is we want polynomial time algorithms in [math]n[/math]. Of course in general this is impossible, and we need to assume that the dictionary has some structure that can be exploited. Here we make the assumption that one can do {\em linear optimization} over the dictionary in polynomial time in [math]n[/math]. More precisely we assume that one can solve in time [math]p(n)[/math] (where [math]p[/math] is polynomial) the following problem for any [math]y \in \mathbb{R}^n[/math]:

This assumption is met for many {\em combinatorial} dictionaries. For instance the dictionary elements could be vector of incidence of spanning trees in some fixed graph, in which case the linear optimization problem can be solved with a greedy algorithm. Finally, for normalization issues, we assume that the [math]\ell_2[/math]-norm of the dictionary elements are controlled by some [math]m \gt 0[/math], that is [math]\|d_i\|_2 \leq m, \forall i \in [N][/math]. Our problem of interest \eqref{eq:structuredsparsity} corresponds to minimizing the function [math]f(x) = \frac{1}{2} \| Y - D x \|^2_2[/math] on the [math]\ell_1[/math]-ball of [math]\mathbb{R}^N[/math] in polynomial time in [math]n[/math]. At first sight this task may seem completely impossible, indeed one is not even allowed to write down entirely a vector [math]x \in \mathbb{R}^N[/math] (since this would take time linear in [math]N[/math]). The key property that will save us is that this function admits {\em sparse minimizers} as we discussed in the previous section, and this will be exploited by the conditional gradient descent method. First let us study the computational complexity of the [math]t^{th}[/math] step of conditional gradient descent. Observe that

Now assume that [math]z_t = D x_t - Y \in \mathbb{R}^n[/math] is already computed, then to compute \eqref{eq:FW1} one needs to find the coordinate [math]i_t \in [N][/math] that maximizes [math]|[\nabla f(x_t)](i)|[/math] which can be done by maximizing [math]d_i^{\top} z_t[/math] and [math]- d_i^{\top} z_t[/math]. Thus \eqref{eq:FW1} takes time [math]O(p(n))[/math]. Computing [math]x_{t+1}[/math] from [math]x_t[/math] and [math]i_{t}[/math] takes time [math]O(t)[/math] since [math]\|x_t\|_0 \leq t[/math], and computing [math]z_{t+1}[/math] from [math]z_t[/math] and [math]i_t[/math] takes time [math]O(n)[/math]. Thus the overall time complexity of running [math]t[/math] steps is (we assume [math]p(n) = \Omega(n)[/math])

To derive a rate of convergence it remains to study the smoothness of [math]f[/math]. This can be done as follows:

which means that [math]f[/math] is [math]m^2[/math]-smooth with respect to the [math]\ell_1[/math]-norm. Thus we get the following rate of convergence:

Putting together \eqref{eq:structuredsparsity2} and \eqref{eq:structuredsparsity3} we proved that one can get an [math]\epsilon[/math]-optimal solution to \eqref{eq:structuredsparsity} with a computational effort of [math]O(m^2 p(n)/\epsilon + m^4/\epsilon^2)[/math] using the conditional gradient descent.

Strong convexity

We will now discuss another property of convex functions that can significantly speed-up the convergence of first order methods: strong convexity. We say that [math]f: \cX \rightarrow \mathbb{R}[/math] is [math]\alpha[/math]-{\em strongly convex} if it satisfies the following improved subgradient inequality:

Of course this definition does not require differentiability of the function [math]f[/math], and one can replace [math]\nabla f(x)[/math] in the inequality above by [math]g \in \partial f(x)[/math]. It is immediate to verify that a function [math]f[/math] is [math]\alpha[/math]-strongly convex if and only if [math]x \mapsto f(x) - \frac{\alpha}{2} \|x\|^2[/math] is convex (in particular if [math]f[/math] is twice differentiable then the eigenvalues of the Hessians of [math]f[/math] have to be larger than [math]\alpha[/math]). The strong convexity parameter [math]\alpha[/math] is a measure of the {\em curvature} of [math]f[/math]. For instance a linear function has no curvature and hence [math]\alpha = 0[/math]. On the other hand one can clearly see why a large value of [math]\alpha[/math] would lead to a faster rate: in this case a point far from the optimum will have a large gradient, and thus gradient descent will make very big steps when far from the optimum. Of course if the function is non-smooth one still has to be careful and tune the step-sizes to be relatively small, but nonetheless we will be able to improve the oracle complexity from [math]O(1/\epsilon^2)[/math] to [math]O(1/(\alpha \epsilon))[/math]. On the other hand with the additional assumption of [math]\beta[/math]-smoothness we will prove that gradient descent with a constant step-size achieves a {\em linear rate of convergence}, precisely the oracle complexity will be [math]O(\frac{\beta}{\alpha} \log(1/\epsilon))[/math]. This achieves the objective we had set after Theorem: strongly-convex and smooth functions can be optimized in very large dimension and up to very high accuracy. Before going into the proofs let us discuss another interpretation of strong-convexity and its relation to smoothness. Equation \eqref{eq:defstrongconv} can be read as follows: at any point [math]x[/math] one can find a (convex) quadratic lower bound [math]q_x^-(y) = f(x) + \nabla f(x)^{\top} (y - x) + \frac{\alpha}{2} \|x - y \|^2[/math] to the function [math]f[/math], i.e. [math]q_x^-(y) \leq f(y), \forall y \in \cX[/math] (and [math]q_x^-(x) = f(x)[/math]). On the other hand for [math]\beta[/math]-smoothness \eqref{eq:defaltsmooth} implies that at any point [math]y[/math] one can find a (convex) quadratic upper bound [math]q_y^+(x) = f(y) + \nabla f(y)^{\top} (x - y) + \frac{\beta}{2} \|x - y \|^2[/math] to the function [math]f[/math], i.e. [math]q_y^+(x) \geq f(x), \forall x \in \cX[/math] (and [math]q_y^+(y) = f(y)[/math]). Thus in some sense strong convexity is a {\em dual} assumption to smoothness, and in fact this can be made precise within the framework of Fenchel duality. Also remark that clearly one always has [math]\beta \geq \alpha[/math].

Strongly convex and Lipschitz functions

We consider here the projected subgradient descent algorithm with time-varying step size [math](\eta_t)_{t \geq 1}[/math], that is

The following result is extracted from [10].

Let [math]f[/math] be [math]\alpha[/math]-strongly convex and [math]L[/math]-Lipschitz on [math]\cX[/math]. Then projected subgradient descent with [math]\eta_s = \frac{2}{\alpha (s+1)}[/math] satisfies

Coming back to our original analysis of projected subgradient descent in Section Projected subgradient descent for Lipschitz functions and using the strong convexity assumption one immediately obtains

Strongly convex and smooth functions

As we will see now, having both strong convexity and smoothness allows for a drastic improvement in the convergence rate. We denote [math]\kappa= \frac{\beta}{\alpha}[/math] for the {\em condition number} of [math]f[/math]. The key observation is that Lemma can be improved to (with the notation of the lemma):

Let [math]f[/math] be [math]\alpha[/math]-strongly convex and [math]\beta[/math]-smooth on [math]\cX[/math]. Then projected gradient descent with [math]\eta = \frac{1}{\beta}[/math] satisfies for [math]t \geq 0[/math],

Using \eqref{eq:improvedstrongsmooth} with [math]y=x^*[/math] one directly obtains

We now show that in the unconstrained case one can improve the rate by a constant factor, precisely one can replace [math]\kappa[/math] by [math](\kappa+1) / 4[/math] in the oracle complexity bound by using a larger step size. This is not a spectacular gain but the reasoning is based on an improvement of \eqref{eq:coercive1} which can be of interest by itself. Note that \eqref{eq:coercive1} and the lemma to follow are sometimes referred to as {\em coercivity} of the gradient.

Let [math]f[/math] be [math]\beta[/math]-smooth and [math]\alpha[/math]-strongly convex on [math]\R^n[/math]. Then for all [math]x, y \in \mathbb{R}^n[/math], one has

Let [math]\phi(x) = f(x) - \frac{\alpha}{2} \|x\|^2[/math]. By definition of [math]\alpha[/math]-strong convexity one has that [math]\phi[/math] is convex. Furthermore one can show that [math]\phi[/math] is [math](\beta-\alpha)[/math]-smooth by proving \eqref{eq:defaltsmooth} (and using that it implies smoothness). Thus using \eqref{eq:coercive1} one gets

Let [math]f[/math] be [math]\beta[/math]-smooth and [math]\alpha[/math]-strongly convex on [math]\R^n[/math]. Then gradient descent with [math]\eta = \frac{2}{\alpha + \beta}[/math] satisfies

First note that by [math]\beta[/math]-smoothness (since [math]\nabla f(x^*) = 0[/math]) one has

Lower bounds

We prove here various oracle complexity lower bounds. These results first appeared in [11] but we follow here the simplified presentation of [2]. In general a black-box procedure is a mapping from ‘`history" to the next query point, that is it maps [math](x_1, g_1, \hdots, x_t, g_t)[/math] (with [math]g_s \in \partial f (x_s)[/math]) to [math]x_{t+1}[/math]. In order to simplify the notation and the argument, throughout the section we make the following assumption on the black-box procedure: [math]x_1=0[/math] and for any [math]t \geq 0[/math], [math]x_{t+1}[/math] is in the linear span of [math]g_1, \hdots, g_t[/math], that is

Let [math]e_1, \hdots, e_n[/math] be the canonical basis of [math]\mathbb{R}^n[/math], and [math]\mB_2(R) = \{x \in \R^n : \|x\| \leq R\}[/math]. We start with a theorem for the two non-smooth cases (convex and strongly convex).

Let [math]t \leq n[/math], [math]L, R \gt 0[/math]. There exists a convex and [math]L[/math]-Lipschitz function [math]f[/math] such that for any black-box procedure satisfying \eqref{eq:ass1},

We consider the following [math]\alpha[/math]-strongly convex function:

Wrapping up, we proved that for any [math]s \leq t[/math] one must have

We proceed now to the smooth case. As we will see in the following proofs we restrict our attention to quadratic functions, and it might be useful to recall that in this case one can attain the exact optimum in [math]n[/math] calls to the oracle (see Section Conjugate gradient). We also recall that for a twice differentiable function [math]f[/math], [math]\beta[/math]-smoothness is equivalent to the largest eigenvalue of the Hessians of [math]f[/math] being smaller than [math]\beta[/math] at any point, which we write

Furthermore [math]\alpha[/math]-strong convexity is equivalent to

Let [math]t \leq (n-1)/2[/math], [math]\beta \gt 0[/math]. There exists a [math]\beta[/math]-smooth convex function [math]f[/math] such that for any black-box procedure satisfying \eqref{eq:ass1},

In this proof for [math]h: \R^n \rightarrow \R[/math] we denote [math]h^* = \inf_{x \in \R^n} h(x)[/math]. For [math]k \leq n[/math] let [math]A_k \in \R^{n \times n}[/math] be the symmetric and tridiagonal matrix defined by

We consider now the following [math]\beta[/math]-smooth convex function:

To simplify the proof of the next theorem we will consider the limiting situation [math]n \to +\infty[/math]. More precisely we assume now that we are working in [math]\ell_2 = \{ x = (x(n))_{n \in \mathbb{N}} : \sum_{i=1}^{+\infty} x(i)^2 \lt + \infty\}[/math] rather than in [math]\mathbb{R}^n[/math]. Note that all the theorems we proved in this chapter are in fact valid in an arbitrary Hilbert space [math]\mathcal{H}[/math]. We chose to work in [math]\mathbb{R}^n[/math] only for clarity of the exposition.

Let [math]\kappa \gt 1[/math]. There exists a [math]\beta[/math]-smooth and [math]\alpha[/math]-strongly convex function [math]f: \ell_2 \rightarrow \mathbb{R}[/math] with [math]\kappa = \beta / \alpha[/math] such that for any [math]t \geq 1[/math] and any black-box procedure satisfying \eqref{eq:ass1} one has

The overall argument is similar to the proof of Theorem. Let [math]A : \ell_2 \rightarrow \ell_2[/math] be the linear operator that corresponds to the infinite tridiagonal matrix with [math]2[/math] on the diagonal and [math]-1[/math] on the upper and lower diagonals. We consider now the following function:

Geometric descent

So far our results leave a gap in the case of smooth optimization: gradient descent achieves an oracle complexity of [math]O(1/\epsilon)[/math] (respectively [math]O(\kappa \log(1/\epsilon))[/math] in the strongly convex case) while we proved a lower bound of [math]\Omega(1/\sqrt{\epsilon})[/math] (respectively [math]\Omega(\sqrt{\kappa} \log(1/\epsilon))[/math]). In this section we close these gaps with the geometric descent method which was recently introduced in [12]. Historically the first method with optimal oracle complexity was proposed in [11]. This method, inspired by the conjugate gradient (see Section Conjugate gradient), assumes an oracle to compute {\em plane searches}. In [13] this assumption was relaxed to a line search oracle (the geometric descent method also requires a line search oracle). Finally in [14] an optimal method requiring only a first order oracle was introduced. The latter algorithm, called Nesterov’s accelerated gradient descent, has been the most influential optimal method for smooth optimization up to this day. We describe and analyze this method in Section Nesterov's accelerated gradient descent. As we shall see the intuition behind Nesterov's accelerated gradient descent (both for the derivation of the algorithm and its analysis) is not quite transparent, which motivates the present section as geometric descent has a simple geometric interpretation loosely inspired from the ellipsoid method (see Section The ellipsoid method). We focus here on the unconstrained optimization of a smooth and strongly convex function, and we prove that geometric descent achieves the oracle complexity of [math]O(\sqrt{\kappa} \log(1/\epsilon))[/math], thus reducing the complexity of the basic gradient descent by a factor [math]\sqrt{\kappa}[/math]. We note that this improvement is quite relevant for machine learning applications. Consider for example the logistic regression problem described in Section Some convex optimization problems in machine learning: this is a smooth and strongly convex problem, with a smoothness of order of a numerical constant, but with strong convexity equal to the regularization parameter whose inverse can be as large as the sample size. Thus in this case [math]\kappa[/math] can be of order of the sample size, and a faster rate by a factor of [math]\sqrt{\kappa}[/math] is quite significant. We also observe that this improved rate for smooth and strongly convex objectives also implies an almost optimal rate of [math]O(\log(1/\epsilon) / \sqrt{\epsilon})[/math] for the smooth case, as one can simply run geometric descent on the function [math]x \mapsto f(x) + \epsilon \|x\|^2[/math]. In Section Warm-up: a geometric alternative to gradient descent we describe the basic idea of geometric descent, and we show how to obtain effortlessly a geometric method with an oracle complexity of [math]O(\kappa \log(1/\epsilon))[/math] (i.e., similar to gradient descent). Then we explain why one should expect to be able to accelerate this method in Section Acceleration. The geometric descent method is described precisely and analyzed in Section The geometric descent method.

Warm-up: a geometric alternative to gradient descent

We start with some notation. Let [math]\mB(x,r^2) := \{y \in \R^n : \|y-x\|^2 \leq r^2 \}[/math] (note that the second argument is the radius squared), and

Rewriting the definition of strong convexity \eqref{eq:defstrongconv} as

one obtains an enclosing ball for the minimizer of [math]f[/math] with the [math]0^{th}[/math] and [math]1^{st}[/math] order information at [math]x[/math]:

Furthermore recall that by smoothness (see \eqref{eq:onestepofgd}) one has [math]f(x^+) \leq f(x) - \frac{1}{2 \beta} \|\nabla f(x)\|^2[/math] which allows to shrink the above ball by a factor of [math]1-\frac{1}{\kappa}[/math] and obtain the following:

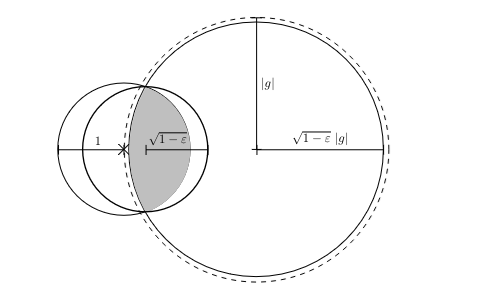

This suggests a natural strategy: assuming that one has an enclosing ball [math]A:=\mB(x,R^2)[/math] for [math]x^*[/math] (obtained from previous steps of the strategy), one can then enclose [math]x^*[/math] in a ball [math]B[/math] containing the intersection of [math]\mB(x,R^2)[/math] and the ball [math]\mB\left(x^{++}, \frac{\|\nabla f(x)\|^2}{\alpha^2} \left(1 - \frac{1}{\kappa}\right)\right)[/math] obtained by \eqref{eq:ball2}. Provided that the radius of [math]B[/math] is a fraction of the radius of [math]A[/math], one can then iterate the procedure by replacing [math]A[/math] by [math]B[/math], leading to a linear convergence rate. Evaluating the rate at which the radius shrinks is an elementary calculation: for any [math]g \in \R^n[/math], [math]\epsilon \in (0,1)[/math], there exists [math]x \in \R^n[/math] such that

Thus we see that in the strategy described above, the radius squared of the enclosing ball for [math]x^*[/math] shrinks by a factor [math]1 - \frac{1}{\kappa}[/math] at each iteration, thus matching the rate of convergence of gradient descent (see Theorem).

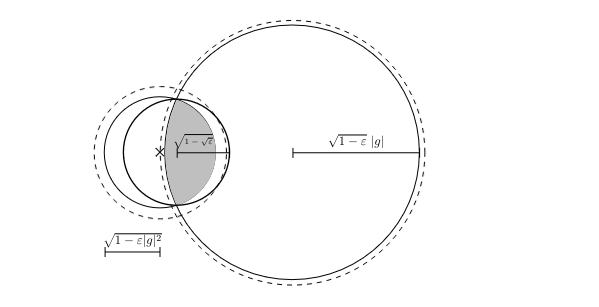

Acceleration

In the argument from the previous section we missed the following opportunity: observe that the ball [math]A=\mB(x,R^2)[/math] was obtained by intersections of previous balls of the form given by \eqref{eq:ball2}, and thus the new value [math]f(x)[/math] could be used to reduce the radius of those previous balls too (an important caveat is that the value [math]f(x)[/math] should be smaller than the values used to build those previous balls). Potentially this could show that the optimum is in fact contained in the ball [math]\mB\left(x, R^2 - \frac{1}{\kappa} \|\nabla f(x)\|^2\right)[/math]. By taking the intersection with the ball [math]\mB\left(x^{++}, \frac{\|\nabla f(x)\|^2}{\alpha^2} \left(1 - \frac{1}{\kappa}\right)\right)[/math] this would allow to obtain a new ball with radius shrunk by a factor [math]1- \frac{1}{\sqrt{\kappa}}[/math] (instead of [math]1 - \frac{1}{\kappa}[/math]): indeed for any [math]g \in \R^n[/math], [math]\epsilon \in (0,1)[/math], there exists [math]x \in \R^n[/math] such that

Thus it only remains to deal with the caveat noted above, which we do via a line search. In turns this line search might shift the new ball \eqref{eq:ball2}, and to deal with this we shall need the following strengthening of the above set inclusion (we refer to [12] for a simple proof of this result):

Let [math]a \in \R^n[/math] and [math]\epsilon \in (0,1), g \in \R_+[/math]. Assume that [math]\|a\| \geq g[/math]. Then there exists [math]c \in \R^n[/math] such that for any [math]\delta \geq 0[/math],

The geometric descent method

Let [math]x_0 \in \R^n[/math], [math]c_0 = x_0^{++}[/math], and [math]R_0^2 = \left(1 - \frac{1}{\kappa}\right)\frac{\|\nabla f(x_0)\|^2}{\alpha^2}[/math]. For any [math]t \geq 0[/math] let

and [math]c_{t+1}[/math] (respectively [math]R^2_{t+1}[/math]) be the center (respectively the squared radius) of the ball given by (the proof of) Lemma which contains

Formulas for [math]c_{t+1}[/math] and [math]R^2_{t+1}[/math] are given at the end of this section.

For any [math]t \geq 0[/math], one has [math]x^* \in \mB(c_t, R_t^2)[/math], [math]R_{t+1}^2 \leq \left(1 - \frac{1}{\sqrt{\kappa}}\right) R_t^2[/math], and thus

We will prove a stronger claim by induction that for each [math]t\geq 0[/math], one has

One can use the following formulas for [math]c_{t+1}[/math] and [math]R^2_{t+1}[/math] (they are derived from the proof of Lemma). If [math]|\nabla f(x_{t+1})|^2 / \alpha^2 \lt R_t^2 / 2[/math] then one can tate [math]c_{t+1} = x_{t+1}^{++}[/math] and [math]R_{t+1}^2 = \frac{|\nabla f(x_{t+1})|^2}{\alpha^2} \left(1 - \frac{1}{\kappa}\right)[/math]. On the other hand if [math]|\nabla f(x_{t+1})|^2 / \alpha^2 \geq R_t^2 / 2[/math] then one can tate

Nesterov's accelerated gradient descent

We describe here the original Nesterov's method which attains the optimal oracle complexity for smooth convex optimization. We give the details of the method both for the strongly convex and non-strongly convex case. We refer to [15] for a recent interpretation of the method in terms of differential equations, and to [16] for its relation to mirror descent (see Chapter).

The smooth and strongly convex case

Nesterov's accelerated gradient descent, illustrated in Figure, can be described as follows: Start at an arbitrary initial point [math]x_1 = y_1[/math] and then iterate the following equations for [math]t \geq 1[/math],

Let [math]f[/math] be [math]\alpha[/math]-strongly convex and [math]\beta[/math]-smooth, then Nesterov's accelerated gradient descent satisfies

We define [math]\alpha[/math]-strongly convex quadratic functions [math]\Phi_s, s \geq 1[/math] by induction as follows:

The smooth case

In this section we show how to adapt Nesterov’s accelerated gradient descent for the case [math]\alpha=0[/math], using a time-varying combination of the elements in the primary sequence [math](y_t)[/math]. First we define the following sequences:

(Note that [math]\gamma_t \leq 0[/math].) Now the algorithm is simply defined by the following equations, with [math]x_1 = y_1[/math] an arbitrary initial point,

Let [math]f[/math] be a convex and [math]\beta[/math]-smooth function, then Nesterov's accelerated gradient descent satisfies

Using the unconstrained version of Lemma one obtains

General references

Bubeck, Sébastien (2015). "Convex Optimization: Algorithms and Complexity". arXiv:1405.4980 [math.OC].

References

- Cite error: Invalid

<ref>tag; no text was provided for refs namedCau47 - 2.0 2.1 Cite error: Invalid

<ref>tag; no text was provided for refs namedNes04 - Cite error: Invalid

<ref>tag; no text was provided for refs namedMP89 - Cite error: Invalid

<ref>tag; no text was provided for refs namedFW56 - Cite error: Invalid

<ref>tag; no text was provided for refs namedJag13 - Cite error: Invalid

<ref>tag; no text was provided for refs namedDH78 - Cite error: Invalid

<ref>tag; no text was provided for refs namedLug10 - Cite error: Invalid

<ref>tag; no text was provided for refs namedJon92 - Cite error: Invalid

<ref>tag; no text was provided for refs namedFT07 - Cite error: Invalid

<ref>tag; no text was provided for refs namedLJSB12 - 11.0 11.1 Cite error: Invalid

<ref>tag; no text was provided for refs namedNY83 - 12.0 12.1 Cite error: Invalid

<ref>tag; no text was provided for refs namedBLS15 - Cite error: Invalid

<ref>tag; no text was provided for refs namedNem82 - Cite error: Invalid

<ref>tag; no text was provided for refs namedNes83 - Cite error: Invalid

<ref>tag; no text was provided for refs namedSBC14 - Cite error: Invalid

<ref>tag; no text was provided for refs namedAO14 - Cite error: Invalid

<ref>tag; no text was provided for refs namedBT09 - Cite error: Invalid

<ref>tag; no text was provided for refs namedTse08

Notes

- Of course the computational complexity remains at least linear in the dimension since one needs to manipulate gradients.

- In the optimization literature the term ‘`descent" is reserved for methods such that [math]f(x_{t+1}) \leq f(x_t)[/math]. In that sense the projected subgradient descent is not a descent method.

- Observe however that the quantities [math]R[/math] and [math]L[/math] may dependent on the dimension, see Chapter for more on this.

- The last step in the sequence of implications can be improved by taking [math]\delta_1[/math] into account. Indeed one can easily show with \eqref{eq:defaltsmooth} that [math]\delta_1 \leq \frac{1}{4 \omega}[/math]. This improves the rate of Theorem from [math]\frac{2 \beta \|x_1 - x^*\|^2}{t-1}[/math] to [math]\frac{2 \beta \|x_1 - x^*\|^2}{t+3}[/math].