Remaining Topics

Since this course is meant to be a thin and quick introduction to the intersection of causal inference and machine learning, it is neither intended nor desirable to cover all topics in causal inference exhaustively. In this final chapter, I discuss a few topics that I did not feel necessary to include in the main course but that could still be useful for students.

Other Techniques in Causal Inference

In practice the following observational causal inference techniques are widely used:

- Regression in Regression: Causal Inference can be Trivial

- Inverse probability weighting in Inverse Probability Weighting and Matching in Matching.

- Instrumental variables in Instrumental Variables: When Confounders were not Collected

- Difference-in-difference

- Regression discontinuity design

- Double machine learning

Difference-in-difference and regression discontinuity design are heavily used in practice, but they apply to more specialized cases, which is why this course has omitted them so far. In this section, we briefly cover these two approaches for the sake of completeness. The section then wraps up with a high-level intuition behind double machine learning, a more recently proposed and popularized technique.

Difference-in-Difference

The average treatment effect (ATE) from Causal Quantities of Interest measures the difference between the outcomes of two groups, treated and not treated; more precisely, it measures the difference between the outcome of the treated group and the expected outcome over all possible actions. One way to interpret this is to view the ATE as checking what would have happened to a treated individual had the individual not been treated, on average. First, we can compute what happens to an individual once they are treated, on average, as

where [math]y_{\mathrm{pre}}[/math] and [math]y_{\mathrm{post}}[/math] are the outcomes before and after the treatment ([math]a=1[/math]). We can similarly compute what happens to an individual who was not treated, on average, by

We now check the difference between these two quantities:

If we used a randomized controlled trial (RCT; Randomized Controlled Trials) to assign the action independently of the covariate [math]x[/math] and uniformly, the second term, that is, the difference in the pre-treatment outcome, should disappear, since the treatment had not yet been given to the treatment group. This leaves only the first term, which is precisely how we would compute the outcome from an RCT.

In an observational study, that is, in passive causal inference, we often do not have control over how the participants are split into treatment and placebo groups. This often leads to a discrepancy in the base outcome between the treated and placebo groups. In that case, the second term above does not vanish but instead works to remove this baseline effect.
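To make "the first term" and "the second term" concrete, one way to write the three quantities discussed above is the following. The symbols [math]\Delta_1[/math] and [math]\Delta_0[/math] are notation assumed here for illustration; only [math]y_{\mathrm{pre}}[/math], [math]y_{\mathrm{post}}[/math] and [math]a[/math] come from the text.

[math]\Delta_1 = \mathbb{E}[y_{\mathrm{post}} \mid a=1] - \mathbb{E}[y_{\mathrm{pre}} \mid a=1], \qquad \Delta_0 = \mathbb{E}[y_{\mathrm{post}} \mid a=0] - \mathbb{E}[y_{\mathrm{pre}} \mid a=0][/math]

[math]\Delta_1 - \Delta_0 = \left(\mathbb{E}[y_{\mathrm{post}} \mid a=1] - \mathbb{E}[y_{\mathrm{post}} \mid a=0]\right) - \left(\mathbb{E}[y_{\mathrm{pre}} \mid a=1] - \mathbb{E}[y_{\mathrm{pre}} \mid a=0]\right)[/math]

In this form, the first parenthesis compares the two groups after the treatment, and the second parenthesis compares them before the treatment. Under randomized assignment the second parenthesis is zero in expectation, while in an observational study it captures the baseline gap between the groups.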

Consider measuring the effect of a vitamin supplement on the height of school-attending girls of age 10. Let us assume that this particular vitamin supplement is provided to school children by default in the Netherlands from age 10 but is not in North Korea. We may be tempted to simply measure the average heights of school-attending girls of age 10 in these two countries and draw a conclusion about whether this supplement helps school children grow taller. This, however, would not be a reasonable way to draw the conclusion, since the average heights of girls of age 9, right before the vitamin supplement begins to be provided in the Netherlands, already differ quite significantly between the two countries (146.55cm vs. 140.58cm). We would rather look at how much taller these children grew between the ages of 9 and 10.

Because this estimator takes the difference of two differences, we call it difference-in-difference. This approach is widely used and is one of the most successful cases of passive causal inference, dating back to the 19th century[1].

In the context of what we have learned in this course, let us write a structural causal model that admits this difference-in-difference estimator:

With zero-mean and symmetric [math]\epsilon_x[/math] and [math]\epsilon_a[/math], those with positive [math]x[/math] are more likely to be assigned to [math]a=1[/math]. Due to the first term in [math]y[/math], the outcome has a constant bias [math]y_0[/math] when [math]x[/math] is positive. In other words, those who are likely to be given the treatment have [math]y_0[/math] added to the outcome regardless of the treatment ([math]a=1[/math]) itself, since [math]+y_0[/math] does not depend on [math]a[/math]. The difference-in-difference estimator removes the effect of [math]y_0[/math] when estimating [math]\alpha[/math], which is the direct causal effect of [math]a[/math] on [math]y[/math]. This tells us when the difference-in-difference estimator works and how we can extend it further. For instance, it is not necessary to assume linearity between [math]a[/math] and [math]y[/math]. I leave it to you as an exercise.
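The behaviour described above can be checked with a small simulation. The sketch below assumes one concrete model that matches the description: [math]x \leftarrow \epsilon_x[/math], [math]a \leftarrow \mathbb{1}(x + \epsilon_a \gt 0)[/math] and [math]y \leftarrow \mathbb{1}(x \gt 0) y_0 + \alpha a + \epsilon_y[/math], with Gaussian noise and a pre-treatment outcome that shares the same baseline term. The exact functional form, the variable names and the values of [math]y_0[/math] and [math]\alpha[/math] are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000
y0, alpha = 2.0, 1.0  # baseline bias and true causal effect (illustrative values)

# Assumed structural causal model (a sketch, not necessarily the exact one in the text).
x = rng.normal(size=n)                        # zero-mean, symmetric covariate
a = (x + rng.normal(size=n) > 0).astype(int)  # positive x makes a=1 more likely

baseline = y0 * (x > 0)                       # added regardless of the treatment
y_pre = baseline + rng.normal(size=n)         # outcome before the treatment
y_post = baseline + alpha * a + rng.normal(size=n)  # outcome after the treatment


def group_gap(y):
    """Difference in mean outcome between the treated and untreated groups."""
    return y[a == 1].mean() - y[a == 0].mean()


naive = group_gap(y_post)                     # ignores the baseline gap
did = group_gap(y_post) - group_gap(y_pre)    # difference of the differences

print(f"naive post-treatment gap: {naive:.3f}")
print(f"difference-in-difference: {did:.3f} (true alpha = {alpha})")
```

The naive gap mixes [math]\alpha[/math] with the baseline term, because the treated group contains more individuals with positive [math]x[/math]. Subtracting the pre-treatment gap removes that baseline contribution.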

Regression Discontinuity

Another popular technique for passive causal inference is called regression discontinuity[2] (and references therein). Regression discontinuity assumes that there exists a simple rule that determines, based on the covariate [math]x[/math], to which group, either treated or placebo, an individual is assigned. This rule can be written down as

If the [math]d[/math]-th covariate crosses the threshold [math]c_0[/math], the individual is assigned to [math]a=1[/math]. We further assume that the outcome given a particular action is a smooth function of the covariate. That is, the outcome of a particular action, [math]f(\hat{a}, x)[/math], changes smoothly, especially around the threshold [math]c_0[/math]. In other words, had it not been for the assignment rule above, the left and right limits would agree: [math]\lim_{x_d \to c_0^-} f(\hat{a}, x) = \lim_{x_d \to c_0^+} f(\hat{a}, x)[/math]. There is no discontinuity of [math]f(\hat{a}, x)[/math] at [math]x_d=c_0[/math], and we can fit a smooth predictor that extrapolates well to approximate [math]f(\hat{a}, x)[/math] (or [math]\mathbb{E}_{x_{d' \neq d}} f(\hat{a}, x_{d'}\cup x_d=c_0)[/math]).

If we assume that the threshold [math]c_0[/math] was chosen arbitrarily, that is, independently of the values of [math]x_{\neq d}[/math], it follows that the distributions over [math]x_{\neq d}[/math] before and after [math]c_0[/math] remain the same, at least locally.[Notes 1] This means that the assignment of an action [math]a[/math] and the covariates other than [math]x_d[/math] are independent locally, i.e., within [math]|x_d - c_0| \leq \epsilon[/math], where [math]\epsilon[/math] defines the radius of the local neighbourhood centered on [math]c_0[/math]. Thanks to this independence, which is the key difference between the conditional and interventional distributions, as we have seen repeatedly earlier, we can now compute the average treatment effect locally (which is why it is often called a local average treatment effect, or LATE) as

Of course, our assumption here is that we do not observe [math]x_{\neq d}[/math]. Even worse, we never observe [math]f(1,x)[/math] when [math]x_d \lt c_0[/math] and [math]f(0,x)[/math] when [math]x_d \gt c_0[/math]. Instead, we can fit a non-parametric regression model [math]\hat{f}(\hat{a}, x_d)[/math] to approximate [math]\mathbb{E}_{x_{\neq d} | x_d} f(\hat{a},x)[/math] and expect (or hope?) that it would extrapolate either before or after the threshold [math]c_0[/math]. Then, LATE becomes

thanks to the smoothness assumption of [math]f[/math]. The final line above tells us pretty plainly why this approach is called regression discontinuity design. We literally fit two regression models on the treated and placebo groups and look at their discrepancy at the decision threshold. The amount of the discrepancy implies the change in the outcome due to the change in the action, of course under the strong set of assumptions we have discussed so far.
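The "fit two regression models and look at their discrepancy at the threshold" recipe can be sketched as follows. This is an illustration under stated assumptions: the assignment rule is taken to be [math]a = \mathbb{1}(x_d \gt c_0)[/math], the data are synthetic with a known jump, and a local linear fit within a bandwidth `h` stands in for the non-parametric regression mentioned above. The outcome function, the bandwidth and all names are hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20_000
c0 = 0.0       # decision threshold on the d-th covariate
effect = 1.5   # true jump at the threshold (illustrative value)
h = 0.3        # bandwidth of the local neighbourhood (assumed, not tuned)

# Synthetic data: a smooth outcome plus a jump caused by the action.
x_d = rng.uniform(-1.0, 1.0, size=n)
a = (x_d > c0).astype(int)  # assumed assignment rule
y = np.sin(x_d) + 0.5 * x_d + effect * a + rng.normal(scale=0.3, size=n)


def fit_at_threshold(mask):
    """Fit a line on one side of the threshold and evaluate it at c0."""
    coef = np.polyfit(x_d[mask], y[mask], deg=1)
    return np.polyval(coef, c0)


near = np.abs(x_d - c0) <= h
f1_at_c0 = fit_at_threshold(near & (a == 1))  # treated side, extrapolated to c0
f0_at_c0 = fit_at_threshold(near & (a == 0))  # placebo side, extrapolated to c0

late_hat = f1_at_c0 - f0_at_c0
print(f"estimated LATE: {late_hat:.3f} (true jump = {effect})")
```

The estimate depends on the bandwidth: a wide window lets curvature of the outcome leak into the two fits, while a narrow window leaves few points and a noisy estimate.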

Double Machine Learning

Recent advances in machine learning have opened the door to training large-scale non-parametric methods on high-dimensional data. This allows us to expand some of the more conventional approaches. One such example is double machine learning[3]. We briefly describe one particular instantiation of double machine learning here. Recall the instrumental variable approach from Instrumental Variables: When Confounders were not Collected. The basic idea was to notice that the action [math]a[/math] was determined using two independent sources of information, the confounder [math]x[/math] and the external noise [math]\epsilon_a[/math]:

with [math]x \perp\!\!\!\perp \epsilon_a[/math]. We then introduced an instrument [math]z[/math] that is a subset of [math]\epsilon_a[/math], such that [math]z[/math] is predictive of [math]a[/math] but continues to be independent of [math]x[/math]. From [math]z[/math], using regression, we capture a part of the variation in [math]a[/math] that is independent of [math]x[/math], in order to sever the edge from the confounder [math]x[/math] to the action [math]a[/math]. Then, we use this instrument-predicted action [math]a'[/math] to predict the outcome [math]y[/math]. We can instead think of fitting a regression model [math]g_a[/math] from [math]x[/math] to [math]a[/math] and use the residual [math]a_{\bot} = a - g_a(x)[/math] as the component of [math]a[/math] that is independent of [math]x[/math], because the residual is not predictable from [math]x[/math]. This procedure can now be applied to the outcome, which is written down as

Because [math]x[/math] and [math]a_{\bot}[/math] are independent, we can estimate the portion of [math]y[/math] that is predictable from [math]x[/math] by building a predictor [math]g_y[/math] of [math]y[/math] given [math]x[/math]. The residual [math]y_{\bot} = y - g_y(x)[/math] is then what cannot be predicted by [math]x[/math], either directly or via [math]a[/math]. We are in fact relying on the fact that such a non-parametric predictor would capture both causal and spurious correlations indiscriminately.

[math]a_{\bot}[/math] is the part of [math]a[/math] that is independent of the confounder [math]x[/math], and [math]y_{\bot}[/math] is the part of [math]y[/math] that is independent of the confounder [math]x[/math]. The relationship between [math]a_{\bot}[/math] and [math]y_{\bot}[/math] must then be the direct causal effect of the action on the outcome. In other words, we have removed the effect of [math]x[/math] on [math]a[/math] to close the backdoor path, resulting in [math]a_{\bot}[/math]. We have removed the effect of [math]x[/math] on [math]y[/math] to reduce non-causal noise, resulting in [math]y_{\bot}[/math]. What remains is the direct effect of [math]a[/math] on the outcome [math]y[/math]. We therefore fit another regression from [math]a_{\bot}[/math] to [math]y_{\bot}[/math], in order to capture this remaining correlation, which is equivalent to the direct causal effect of [math]a[/math] on [math]y[/math].
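The two-residual procedure above can be sketched on synthetic data. Everything specific in this example is an assumption made for illustration: the action is continuous, the effect of [math]a[/math] on [math]y[/math] is linear with coefficient `alpha`, and a degree-5 polynomial fitted with `np.polyfit` stands in for the large non-parametric predictors [math]g_a[/math] and [math]g_y[/math]. The residuals are computed out of fold (two-fold cross-fitting), so that each prediction comes from a model that did not see that example.

```python
import numpy as np

rng = np.random.default_rng(0)
n, alpha = 20_000, 1.0  # alpha: true effect of a on y (illustrative value)

# Assumed data-generating process with a confounder x.
x = rng.normal(size=n)
a = np.sin(x) + rng.normal(size=n)                        # a depends on x and eps_a
y = alpha * a + 2.0 * x + np.cos(x) + rng.normal(size=n)  # y depends on a and x


def out_of_fold_residual(x, target, degree=5):
    """Residual of target after removing what a polynomial in x predicts.

    Predictions for each fold come from a model fitted on the other fold.
    """
    folds = np.arange(len(x)) % 2  # samples are i.i.d., so alternate folds
    resid = np.empty_like(target)
    for k in (0, 1):
        train, test = folds != k, folds == k
        coef = np.polyfit(x[train], target[train], degree)
        resid[test] = target[test] - np.polyval(coef, x[test])
    return resid


a_perp = out_of_fold_residual(x, a)  # a - g_a(x)
y_perp = out_of_fold_residual(x, y)  # y - g_y(x)

# Final regression from a_perp to y_perp (least squares without intercept).
theta_dml = (a_perp @ y_perp) / (a_perp @ a_perp)

# For comparison: regress y on a directly, ignoring the confounder.
theta_naive = np.polyfit(a, y, 1)[0]

print(f"naive slope: {theta_naive:.3f}")
print(f"residual-on-residual slope: {theta_dml:.3f} (true alpha = {alpha})")
```

The direct regression of [math]y[/math] on [math]a[/math] absorbs the dependence of both variables on [math]x[/math], whereas the residual-on-residual slope should land close to `alpha` in this setup.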

Behaviour Cloning from Multiple Expert Policies Requires a World Model

A Markov decision process (MDP) is often described as a tuple of the following items:

- [math]\mathcal{S}[/math]: a set of all possible states

- [math]\mathcal{A}[/math]: a set of all possible actions

- [math]\tau: \mathcal{S} \times \mathcal{A} \times \mathcal{E} \to \mathcal{S}[/math]: a transition dynamics. [math]s' = \tau(s, a, \epsilon)[/math].

- [math]\rho: \mathcal{S} \times \mathcal{A} \times \mathcal{S} \to \mathbb{R}[/math]: a reward function. [math]r = \rho(s, a, s')[/math].

The transition dynamics [math]\tau[/math] is a deterministic function but takes as input noise [math]\epsilon \in \mathcal{E}[/math], which overall makes it stochastic. We use [math]p_\tau(s' | s, a)[/math] to denote the conditional distribution over the next state given the current state and action, obtained by marginalizing out the noise [math]\epsilon[/math]. The reward function [math]\rho[/math] depends on the current state, the action taken and the next state. It is however often the case that the reward function only depends on the next (resulting) state. A major goal is then to find a policy [math]p_\pi: \mathcal{S} \times \mathcal{A} \to \mathbb{R}_{ \gt 0}[/math] that maximizes

where [math]p_0(s_0)[/math] is the distribution over the initial state and [math]\gamma \in (0, 1][/math] is a discounting factor. The discounting factor can be viewed from two angles. First, we can view it conceptually as a way to express how much we care about future rewards. With a large [math]\gamma[/math], our policy can sacrifice rewards at earlier time steps in return for higher rewards in the future. The other way to think of the discounting factor is purely computational. With [math]\gamma \lt 1[/math], we can prevent the total return [math]J(\pi)[/math] from diverging to infinity, even when the length of each episode is not bounded. As we have learned earlier when we saw the equivalence between the probabilistic graphical model and the structural causal model in Probabilistic Graphical Models--Structural Causal Models, we can guess the form of [math]\pi[/math] as a deterministic function:

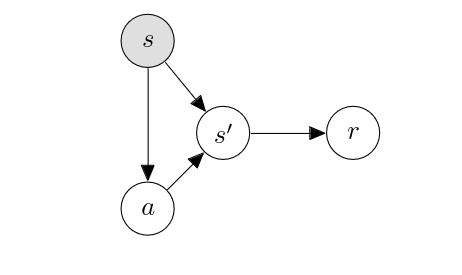
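As a rough illustration of the pieces introduced so far, the sketch below writes the policy, the transition dynamics and the reward as deterministic functions that take noise as an extra input, and estimates the discounted return by Monte Carlo rollouts. The three-state chain, the probabilities, the finite horizon and the function names `tau`, `pi`, `rho` and `rollout` are all hypothetical choices for this example.

```python
import numpy as np

N_STATES = 3  # states 0, 1, 2; actions 0 (left) and 1 (right)


def tau(s, a, eps):
    """Transition dynamics: deterministic given the noise eps in [0, 1)."""
    step = 1 if a == 1 else -1
    moved = eps < 0.8  # the intended move succeeds with probability 0.8
    return int(np.clip(s + step * moved, 0, N_STATES - 1))


def pi(s, eps):
    """Policy as a deterministic function of the state and its own noise."""
    return 1 if eps < 0.9 else 0  # mostly moves right, in every state


def rho(s_next):
    """Reward that depends only on the landing state."""
    return 1.0 if s_next == N_STATES - 1 else 0.0


def discounted_return(rewards, gamma):
    """Sum of gamma^t * r_t over one episode."""
    return sum(gamma**t * r for t, r in enumerate(rewards))


def rollout(rng, horizon=50, gamma=0.9):
    """Sample one episode starting from state 0 and return its discounted return."""
    s, rewards = 0, []
    for _ in range(horizon):
        a = pi(s, rng.random())      # a_t from s_t and policy noise
        s = tau(s, a, rng.random())  # s_{t+1} from s_t, a_t and transition noise
        rewards.append(rho(s))       # r_t from the landing state
    return discounted_return(rewards, gamma)


rng = np.random.default_rng(0)
J_hat = np.mean([rollout(rng) for _ in range(1000)])
print(f"Monte Carlo estimate of the return: {J_hat:.3f}")
```

With rewards bounded by 1 and a discounting factor of 0.9, the return of any episode is bounded by 1 / (1 - 0.9) = 10, which is the computational role of the discounting factor described above.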

Together with the transition dynamics [math]\tau[/math] and the reward function [math]\rho[/math], we notice that the Markov decision process can be thought of as defining a structural causal model for each time step [math]t[/math] as follows:

where we make a simplifying assumption that the reward only depends on the landing state. Graphically,

Behaviour cloning. With this in mind, let us consider the problem of so-called ‘behaviour cloning’. In behaviour cloning, we assume the existence of an expert policy [math]\pi^*[/math] that results in a high return [math]J(\pi^*)[/math] and that we have access to a large amount of data collected from the expert policy. This dataset consists of tuples of the current state [math]s[/math], the action taken by the expert policy [math]a[/math] and the next state [math]s'[/math]. We often do not observe the associated reward directly.

where [math]a_n \sim p_{\pi^*}(a | s_n)[/math] and [math]s'_n \sim p_{\tau}(s' | s_n, a_n)[/math]. Behaviour cloning refers to training a policy [math]\pi[/math] that imitates the expert policy [math]\pi^*[/math] using this dataset. We often train a new policy [math]\pi[/math] by maximizing

In other words, we ensure that the learned policy [math]\pi[/math] puts a high probability on the action that was taken by the expert policy [math]\pi^*[/math].
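For a small discrete problem, this maximum-likelihood view of behaviour cloning can be sketched directly. The example assumes a tabular policy with one categorical distribution per state, in which case the policy that maximizes the sum of log-probabilities of the expert's actions is given by normalized action counts. The expert table `expert` and the way states are sampled are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_states, n_actions, n = 3, 2, 50_000

# Hypothetical expert policy: expert[s, a] = p_{pi*}(a | s).
expert = np.array([[0.9, 0.1],
                   [0.5, 0.5],
                   [0.2, 0.8]])

# Dataset of (state, expert action) pairs; next states are not needed here.
s = rng.integers(0, n_states, size=n)
a = (rng.random(n) < expert[s, 1]).astype(int)

# For a tabular policy, the maximizer of sum_n log pi(a_n | s_n)
# is the table of per-state action frequencies.
counts = np.zeros((n_states, n_actions))
np.add.at(counts, (s, a), 1)
policy = counts / counts.sum(axis=1, keepdims=True)

avg_log_lik = np.log(policy[s, a]).mean()
print("learned policy:\n", policy.round(3))
print(f"average log-likelihood of expert actions: {avg_log_lik:.3f}")
```

With a neural network policy the same objective would be optimized by gradient ascent instead of counting, but the quantity being maximized is the same.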

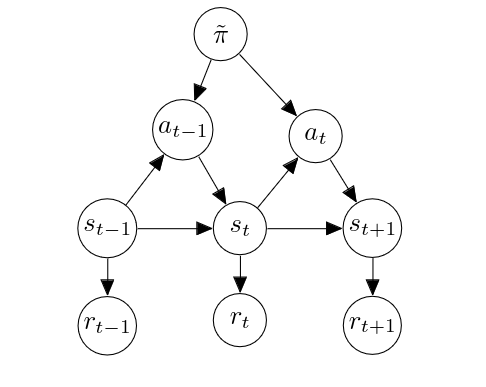

Behaviour cloning with multiple experts. Often, however, the data were collected not by a single expert policy but by a set of expert-like policies. Furthermore, we often do not know which of these expert-like policies was used to produce each tuple [math](s_n, a_n, s'_n)[/math]. This requires us to treat the policy used to collect these tuples as a random variable that we do not observe, resulting in the following graphical model:[Notes 2]

The inclusion of an unobserved [math]\tilde{\pi}[/math] makes the original behaviour cloning objective less than ideal. In the original graph, because we sampled both [math]s[/math] and [math]a[/math] without conditioning on [math]s'[/math], there was only one open path between [math]s[/math] and [math]a[/math], that is, [math]s\to a[/math]. We could thereby simply train a policy to capture the correlation between [math]s[/math] and [math]a[/math], which should capture [math]p(a | \mathrm{do}(s))[/math]. With the unobserved variable [math]\tilde{\pi}[/math], this no longer holds.

Consider [math](s_t,a_t)[/math]. There are now two open paths between these two variables. The first one is the original direct path, [math]s_t \to a_t[/math]. The second one is [math]s_t \leftarrow a_{t-1} \leftarrow \tilde{\pi} \rightarrow a_t[/math]. If we naively train a policy [math]\pi[/math] on this dataset, this policy would learn to capture the correlation between the current state and the associated action arising from both of these paths. This is not desirable, since the second path is not causal, as we discussed earlier in Confounders, Colliders and Mediators. In other words, [math]\pi(a | s)[/math] would not correspond to [math]p(a | \mathrm{do}(s))[/math].

In order to block this backdoor path, we can use the idea of inverse probability weighting (IPW; Inverse Probability Weighting). If we assume we have access to the transition model [math]\tau[/math], we can use it to sever the two direct connections into [math]s_t[/math], namely [math]s_{t-1} \to s_t[/math] and [math]a_{t-1} \to s_t[/math], by

Learned transition: a world model. Of course, we often do not have access to [math]\tau[/math] directly but must infer the transition dynamics from data. Fortunately, unlike the policy [math]s \to a[/math], the transition [math](s, a) \to s'[/math] is not confounded by [math]\tilde{\pi}[/math]. We can therefore learn an approximate transition model, which is sometimes referred to as a world model[4] (and references therein), from data. This can be done by

Deconfounded behaviour cloning. Once training is done, we can use [math]\hat{\tau}[/math] in place of the true transition dynamics [math]\tau[/math] to train a de-confounded policy by

where [math]a'_n[/math] is the next action in the dataset. That is, the dataset now consists of [math](s_n, a_n, s'_n, a'_n)[/math] rather than [math](s_n, a_n, s'_n)[/math]. This effectively makes us lose a few examples from the original dataset that correspond to the final steps of episodes, although this is a small price to pay to avoid the confounding by multiple expert policies.
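The confounding by an unobserved expert identity, and the claim that the transition is not affected by it, can both be checked numerically. The sketch below is an illustration under assumed settings: two states, two actions, two equally likely experts with very different action preferences, and two-step episodes. It does not implement the de-confounded objective itself. It only compares the naive conditional [math]p(a_t | s_t)[/math] estimated from pooled data with the interventional [math]p(a_t | \mathrm{do}(s_t))[/math], and compares a count-based world model with the true transition. The names `policies`, `T` and `z` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

# policies[z, s] = P(a = 1 | s) for expert z (hypothetical experts).
policies = np.array([[0.9, 0.9],
                     [0.1, 0.1]])
# T[s, a] = P(s' = 1 | s, a): the true transition (hypothetical values).
T = np.array([[0.1, 0.9],
              [0.2, 0.8]])

z = rng.integers(0, 2, size=n)   # unobserved expert identity, one per episode
s0 = rng.integers(0, 2, size=n)  # initial state
a0 = (rng.random(n) < policies[z, s0]).astype(int)
s1 = (rng.random(n) < T[s0, a0]).astype(int)
a1 = (rng.random(n) < policies[z, s1]).astype(int)

# Naive behaviour cloning target: P(a_1 = 1 | s_1 = 1) from pooled data.
naive = a1[s1 == 1].mean()

# Interventional target: set s_1 = 1 by hand, so the expert identity keeps
# its marginal distribution: sum_z p(z) P(a = 1 | s = 1, z) with p(z) = 1/2.
interventional = policies[:, 1].mean()

# Count-based world model, estimated from the same pooled data.
T_hat = np.array([[s1[(s0 == s) & (a0 == a)].mean() for a in (0, 1)]
                  for s in (0, 1)])

print(f"P(a=1 | s=1) from pooled data: {naive:.3f}")
print(f"P(a=1 | do(s=1)):              {interventional:.3f}")
print("largest error of the learned transition:", np.abs(T_hat - T).max().round(4))
```

Observing [math]s_1 = 1[/math] is evidence about which expert produced the episode, because the previous action of that expert influenced the state. This shifts the pooled conditional away from the interventional quantity, while the transition estimate remains accurate because the next state depends on the expert only through the observed state and action.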

Causal reinforcement learning. Deconfounded behaviour cloning is an example of how causality can help us identify a potential issue a priori and design a better learning algorithm without relying on trial and error. In the context of reinforcement learning, a sub-field of machine learning focused on learning a policy, as in behaviour cloning, this is often referred to as, and studied under the name of, causal reinforcement learning[5].

Summary

In this final chapter, I have touched upon a few topics that were left out of the main chapters, perhaps for no particularly strong reason. These topics included

- Difference-in-Difference

- Regression discontinuity

- Double machine learning

- A taste of causal reinforcement learning

There are many interesting topics that were not discussed in this lecture note, due to both the lack of time and the limits of my own knowledge and expertise. I find the following three areas to be particularly interesting and recommend that you follow up on them.

- Counterfactual analysis: Can we build an algorithm that can imagine taking an alternative action and guess the resulting outcome instead of the actual outcome?

- (Scalable) causal discovery: How can we infer useful causal relationships among many variables?

- Beyond invariance (The Principle of Invariance): Invariance is a strong assumption. Can we relax this assumption to identify a more flexible notion of causal prediction?

General references

Cho, Kyunghyun (2024). "A Brief Introduction to Causal Inference in Machine Learning". arXiv:2405.08793 [cs.LG].

Notes

- This provides a good ground for testing the validity of regression discontinuity. If the distributions of [math]x[/math] before and after [math]c_0[/math] differ significantly from each other, regression discontinuity cannot be used.

- I draw only two time steps for simplicity, without loss of generality.

References

- "On the Mode of Communication of Cholera." (1856). Edinburgh Medical Journal 1.

- "Regression discontinuity designs: A guide to practice" (2008). Journal of econometrics 142. Elsevier.

- "Effective training of a neural network character classifier for word recognition" (1996). Advances in neural information processing systems 9.

- LeCun, Yann (2022). "A path towards autonomous machine intelligence version 0.9. 2, 2022-06-27". Open Review 62.

- Elias Bareinboim (2020), ICML Tutorial on Causal Reinforcement Learning