<div class="d-none"><math> | |||

\newcommand{\NA}{{\rm NA}} | |||

\newcommand{\mat}[1]{{\bf#1}} | |||

\newcommand{\exref}[1]{\ref{##1}} | |||

\newcommand{\secstoprocess}{\all} | |||

\newcommand{\NA}{{\rm NA}} | |||

\newcommand{\mathds}{\mathbb}</math></div> | |||

In this section we consider the properties of the expected value and the variance of a continuous random variable. These quantities are defined just as for discrete random variables and share the same properties. | |||

===Expected Value=== | |||

{{defncard|label=|id=def 6.5|Let <math>X</math> be a real-valued random variable with density function <math>f(x)</math>. The ''expected value'' <math>\mu = E(X)</math> is defined by | |||

<math display="block"> | |||

\mu = E(X) = \int_{-\infty}^{+\infty} xf(x)\,dx\ , | |||

</math> | |||

provided the integral | |||

<math display="block"> | |||

\int_{-\infty}^{+\infty} |x|f(x)\,dx | |||

</math> | |||

is finite.}} | |||

The reader should compare this definition with the corresponding one for discrete | |||

random variables in [[guide:E4fd10ce73|Expected Value]]. Intuitively, we can interpret <math>E(X)</math>, as | |||

we did in the previous sections, as the value that we should expect to obtain if we | |||

perform a large number of independent experiments and average the resulting values of | |||

<math>X</math>. | |||

We can summarize the properties of <math>E(X)</math> as follows (cf. [[guide:E4fd10ce73#thm 6.1 |Theorem]]). | |||

{{proofcard|Theorem|thm_6.10|If <math>X</math> and <math>Y</math> are real-valued random variables and | |||

<math>c</math> is any constant, then | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

E(X + Y) &=& E(X) + E(Y)\ , \\ | |||

E(cX) &=& cE(X)\ . | |||

\end{eqnarray*} | |||

</math> | |||

The proof is very similar to the proof of [[guide:E4fd10ce73#thm 6.1 |Theorem]], and we omit it.|}} | |||

More generally, if <math>X_1, X_2, \dots, X_n</math> are <math>n</math> real-valued random variables, and <math>c_1, c_2, \dots, c_n</math> are <math>n</math> constants, then

<math display="block"> | |||

E(c_1X_1 + c_2X_2 +\cdots+ c_nX_n) = c_1E(X_1) + c_2E(X_2) +\cdots+ c_nE(X_n)\ . | |||

</math> | |||

<span id="exam 6.16"/> | |||

'''Example''' | |||

Let <math>X</math> be uniformly distributed on the interval <math>[0, 1]</math>. Then | |||

<math display="block"> | |||

E(X) = \int_0^1 x\,dx = 1/2\ . | |||

</math> | |||

It follows that if we choose a large number <math>N</math> of random numbers from <math>[0,1]</math> and take the average, then we can expect that this average should be close to the expected value of 1/2. | |||
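The claim about averages can be checked with a short simulation. The following is a minimal sketch, not part of the original text; the function name, the sample sizes, and the fixed seed are assumptions chosen for illustration.

```python
import random

def average_of_uniforms(n, seed=0):
    """Average of n pseudo-random numbers drawn uniformly from [0, 1)."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return sum(rng.random() for _ in range(n)) / n

# The averages should move closer to E(X) = 1/2 as n grows.
for n in (100, 10_000, 100_000):
    print(n, average_of_uniforms(n))
```

The printed averages will differ with the seed, but for large <math>N</math> they should stay close to 1/2.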

<span id="exam 6.17"/> | |||

'''Example''' | |||

Let <math>Z = (x, y)</math> denote a point chosen uniformly and randomly from the unit disk, as in the dart game in [[guide:523e6267ef#exam 2.2.2 |Example]], and let

<math>X = (x^2 + y^2)^{1/2}</math> be the distance from <math>Z</math> to the center of the disk. The | |||

density function of <math>X</math> can easily be shown to equal <math>f(x) = 2x</math>, so by the | |||

definition of expected value, | |||

<math display="block"> | |||

\begin{eqnarray*} E(X) & = & \int_0^1 x f(x)\,dx \\ & = & \int_0^1 x (2x)\,dx \\ & = | |||

& \frac 23\ . | |||

\end{eqnarray*} | |||

</math> | |||
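As a rough numerical check of the value 2/3, one can simulate the dart game. The sketch below is an illustration only: it assumes rejection sampling (draw a point in the enclosing square and keep it if it lands in the disk) as the way to pick a uniform point, and the function name and sample size are hypothetical.

```python
import math
import random

def mean_distance_from_center(n, seed=0):
    """Estimate E(X), where X is the distance from a uniform point in the unit disk to the center."""
    rng = random.Random(seed)
    total = 0.0
    accepted = 0
    while accepted < n:
        # Draw a point uniformly from the square [-1, 1] x [-1, 1] ...
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        # ... and keep it only if it falls inside the unit disk.
        if x * x + y * y <= 1.0:
            total += math.hypot(x, y)
            accepted += 1
    return total / n

print(mean_distance_from_center(100_000), 2 / 3)
```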

<span id="exam 6.18"/> | |||

'''Example''' | |||

In the example of the couple meeting at the Inn [[guide:523e6267ef#exam 2.2.7.4 |(Example)]], each person arrives at a time which is uniformly | |||

distributed between 5:00 and 6:00 PM. The random variable <math>Z</math> under consideration is the length of time the first person has to wait until the second one arrives. It was shown that | |||

<math display="block"> | |||

f_Z(z) = 2(1-z)\ , | |||

</math> | |||

for <math>0 \le z \le 1</math>. Hence, | |||

<math display="block"> | |||

\begin{eqnarray*} E(Z) & = & \int_0^1 zf_Z(z)\,dz \\ | |||

& = & \int_0^1 2z(1-z)\,dz \\ | |||

& = & \Bigl[z^2 - \frac 23 z^3\Bigr]_0^1 \\ & = & \frac 13\ .

\end{eqnarray*} | |||

</math> | |||

===Expectation of a Function of a Random Variable=== | |||

Suppose that <math>X</math> is a real-valued random variable and <math>\phi(x)</math> is a continuous | |||

function from ''' R''' to ''' R'''. The following theorem is the continuous analogue | |||

of [[guide:E4fd10ce73#thm 6.3.5 |Theorem]]. | |||

{{proofcard|Theorem|thm_6.11|If <math>X</math> is a real-valued random variable and if | |||

<math>\phi :</math> ''' R''' <math>\to\ </math> ''' R''' is a continuous real-valued function with domain | |||

<math>[a,b]</math>, then | |||

<math display="block"> | |||

E(\phi(X)) = \int_{-\infty}^{+\infty} \phi(x) f_X(x)\, dx\ , | |||

</math> | |||

provided the integral exists.|}} | |||

For a proof of this theorem, see Ross.<ref group="Notes" >S. Ross, ''A First Course in | |||

Probability,'' (New York: Macmillan, 1984), pgs. 241-245.</ref> | |||
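The theorem can be illustrated numerically by computing <math>E(\phi(X))</math> in two ways: from the integral, and by averaging <math>\phi</math> over simulated values of <math>X</math>. The sketch below is not from the source; it assumes <math>X</math> uniform on <math>[0,1]</math> and <math>\phi(x) = x^2</math> as the test case, and the helper names and the midpoint rule are choices made for the example.

```python
import random

def expectation_by_integral(phi, density, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of phi(x) * density(x) over [a, b]."""
    h = (b - a) / steps
    total = 0.0
    for i in range(steps):
        x = a + (i + 0.5) * h
        total += phi(x) * density(x)
    return total * h

def expectation_by_sampling(phi, sampler, n=200_000, seed=0):
    """Average of phi over n simulated values of X produced by sampler(rng)."""
    rng = random.Random(seed)
    return sum(phi(sampler(rng)) for _ in range(n)) / n

def phi(x):
    return x * x

# Assumed test case: X uniform on [0, 1], so the density is 1 on that interval.
by_integral = expectation_by_integral(phi, lambda x: 1.0, 0.0, 1.0)
by_sampling = expectation_by_sampling(phi, lambda rng: rng.random())
print(by_integral, by_sampling)
```

Both numbers should be close to 1/3, the value of <math>\int_0^1 x^2\,dx</math>.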

===Expectation of the Product of Two Random Variables=== | |||

In general, it is not true that <math>E(XY) = E(X)E(Y)</math>, since the integral of a product | |||

is not the product of integrals. But if <math>X</math> and <math>Y</math> are independent, then the | |||

expectations multiply. | |||

{{proofcard|Theorem|thm_6.12|Let <math>X</math> and <math>Y</math> be independent real-valued continuous | |||

random variables with finite expected values. Then we have | |||

<math display="block"> | |||

E(XY) = E(X)E(Y)\ . | |||

</math>|We will prove this only in the case that the ranges of <math>X</math> and <math>Y</math> are contained in | |||

the intervals <math>[a, b]</math> and <math>[c, d]</math>, respectively. Let the density functions of <math>X</math> and <math>Y</math> be | |||

denoted by <math>f_X(x)</math> and <math>f_Y(y)</math>, respectively. Since <math>X</math> and <math>Y</math> are independent, the joint | |||

density function of <math>X</math> and <math>Y</math> is the product of the individual density functions. Hence | |||

<math display="block"> | |||

\begin{eqnarray*} E(XY) & = & \int_a^b \int_c^d xy f_X(x) f_Y(y)\, dy\,dx \\ | |||

& = & \int_a^b x f_X(x)\, dx \int_c^d y f_Y(y)\, dy \\ | |||

& = & E(X)E(Y)\ . | |||

\end{eqnarray*} | |||

</math> | |||

The proof in the general case involves using sequences of bounded random variables that approach <math>X</math> | |||

and <math>Y</math>, and is somewhat technical, so we will omit it.}} | |||

In the same way, one can show that if <math>X_1</math>, <math>X_2</math>, \dots, <math>X_n</math> are <math>n</math> mutually | |||

independent real-valued random variables, then | |||

<math display="block"> | |||

E(X_1 X_2 \cdots X_n) = E(X_1)\,E(X_2)\,\cdots\,E(X_n)\ . | |||

</math> | |||

<span id="exam 6.19"/> | |||

'''Example''' | |||

Let <math>Z = (X, Y)</math> be a point chosen at random in the unit square. Let <math>A = X^2</math> and

<math>B = Y^2</math>. Then [[guide:E05b0a84f3#thm 4.3 |Theorem]] implies that <math>A</math> and <math>B</math> are independent. | |||

Using [[#thm 6.11 |Theorem]], the expectations of <math>A</math> and <math>B</math> are easy to calculate: | |||

<math display="block"> | |||

\begin{eqnarray*} E(A) = E(B) & = & \int_0^1 x^2\,dx \\ & = & \frac 13\ . | |||

\end{eqnarray*} | |||

</math> | |||

Using [[#thm 6.12 |Theorem]], the expectation of <math>AB</math> is just the | |||

product of <math>E(A)</math> and | |||

<math>E(B)</math>, or 1/9. The usefulness of this theorem is demonstrated by noting that it is | |||

quite a bit more difficult to calculate <math>E(AB)</math> from the definition of expectation. | |||

One finds that the density function of <math>AB</math> is | |||

<math display="block"> | |||

f_{AB}(t) = \frac {-\log(t)}{4\sqrt t}\ , | |||

</math> | |||

so | |||

<math display="block"> | |||

\begin{eqnarray*} E(AB) & = & \int_0^1 t f_{AB}(t)\,dt \\ & = & \frac 19\ . | |||

\end{eqnarray*} | |||

</math> | |||
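Both routes to <math>E(AB)</math> can be checked numerically. The sketch below is an illustration under stated assumptions: it integrates <math>t f_{AB}(t)</math> with a midpoint rule (which never evaluates the logarithm at <math>t = 0</math>) and compares the result with a direct simulation of <math>X^2 Y^2</math>. The function names, step count, and seed are hypothetical.

```python
import math
import random

def density_ab(t):
    """Density of AB = X^2 * Y^2 stated in the text, valid for 0 < t <= 1."""
    return -math.log(t) / (4.0 * math.sqrt(t))

def midpoint_integral(g, a, b, steps=200_000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

# E(AB) from the definition of expectation.
expected_ab = midpoint_integral(lambda t: t * density_ab(t), 0.0, 1.0)

# E(AB) estimated by simulating independent uniform X and Y.
rng = random.Random(0)
n = 200_000
simulated_ab = sum((rng.random() ** 2) * (rng.random() ** 2) for _ in range(n)) / n

print(expected_ab, simulated_ab, 1 / 9)
```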

<span id="exam 6.20"/> | |||

'''Example''' | |||

Again let <math>Z = (X, Y)</math> be a point chosen at random | |||

in the unit square, and let <math>W = X + Y</math>. Then <math>Y</math> and <math>W</math> are not independent, and | |||

we have | |||

<math display="block"> | |||

\begin{eqnarray*} E(Y) & = &\frac 12\ , \\ E(W) & = & 1\ , \\ E(YW) & = & E(XY + Y^2) | |||

= E(X)E(Y) + \frac 13 = \frac 7{12} | |||

\ne E(Y)E(W)\ . | |||

\end{eqnarray*} | |||

</math> | |||
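A simulation makes the failure of the product rule visible for these dependent variables. This is a minimal sketch with an assumed sample size and seed; the function name is hypothetical.

```python
import random

def estimate_moments(n=200_000, seed=0):
    """Estimate E(Y), E(W) and E(YW) for W = X + Y, with X and Y independent uniforms on [0, 1]."""
    rng = random.Random(seed)
    sum_y = sum_w = sum_yw = 0.0
    for _ in range(n):
        x = rng.random()
        y = rng.random()
        w = x + y
        sum_y += y
        sum_w += w
        sum_yw += y * w
    return sum_y / n, sum_w / n, sum_yw / n

mean_y, mean_w, mean_yw = estimate_moments()
# The first number should be near 7/12, the second near 1/2.
print(mean_yw, mean_y * mean_w)
```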

We turn now to the variance. | |||

===Variance=== | |||

{{defncard|label=|id=def 6.6|Let <math>X</math> be a real-valued random variable with | |||

density function <math>f(x)</math>. The ''variance'' <math>\sigma^2 = V(X)</math> is defined by | |||

<math display="block"> | |||

\sigma^2 = V(X) = E((X - \mu)^2)\ . | |||

</math> | |||

}} | |||

The next result follows easily from [[guide:E4fd10ce73#thm 6.3.5 |Theorem]]. There is another | |||

way to calculate the variance of a continuous random variable, which is usually | |||

slightly easier. It is given in [[#thm 6.14 |Theorem]]. | |||

{{proofcard|Theorem|thm_6.13.5|If <math>X</math> is a real-valued random variable with <math>E(X) | |||

= \mu</math>, then | |||

<math display="block"> | |||

\sigma^2 = \int_{-\infty}^\infty (x - \mu)^2 f(x)\, dx\ . | |||

</math>|}} | |||

The properties listed in the next three theorems are all proved in exactly the same way that the corresponding theorems for discrete random variables were proved in [[guide:C631488f9a|Variance of Discrete Random Variables]]. | |||

{{proofcard|Theorem|thm_6.13|If <math>X</math> is a real-valued random variable defined on | |||

<math>\Omega</math> and <math>c</math> is any constant, then (cf. [[guide:C631488f9a#thm 6.6 |Theorem]]) | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

V(cX) &=& c^2 V(X)\ , \\ | |||

V(X + c) &=& V(X)\ . | |||

\end{eqnarray*} | |||

</math>|}} | |||

{{proofcard|Theorem|thm_6.14|If <math>X</math> is a real-valued random variable with <math>E(X) = | |||

\mu</math>, then (cf. [[guide:C631488f9a#thm 6.7 |Theorem]]) | |||

<math display="block"> | |||

V(X) = E(X^2) - \mu^2\ . | |||

</math>|}} | |||

{{proofcard|Theorem|thm_6.15|If <math>X</math> and <math>Y</math> are independent real-valued random | |||

variables on <math>\Omega</math>, then (cf. [[guide:C631488f9a#thm 6.8 |Theorem]]) | |||

<math display="block"> | |||

V(X + Y) = V(X) + V(Y)\ . | |||

</math>|}} | |||

<span id="exam 6.18.5"/> | |||

'''Example''' | |||

(continuation of [[#exam 6.16 |Example]]) If <math>X</math> is uniformly distributed on <math>[0, 1]</math>, then, using [[#thm 6.14 |Theorem]], we have | |||

<math display="block"> | |||

V(X) = \int_0^1 \Bigl(x - \frac 12 \Bigr)^2\, dx = \frac 1{12}\ . | |||

</math> | |||
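The two ways of computing a variance, the integral of <math>(x - \mu)^2 f(x)</math> and the formula <math>E(X^2) - \mu^2</math>, can be compared numerically for the uniform density. The sketch below uses a midpoint rule; the helper name and step count are assumptions made for the example.

```python
def midpoint_integral(g, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

# Uniform density on [0, 1]: f(x) = 1.
mu = midpoint_integral(lambda x: x, 0.0, 1.0)

# Variance as the integral of (x - mu)^2 f(x).
var_direct = midpoint_integral(lambda x: (x - mu) ** 2, 0.0, 1.0)

# Variance as E(X^2) - mu^2.
var_shortcut = midpoint_integral(lambda x: x * x, 0.0, 1.0) - mu ** 2

print(mu, var_direct, var_shortcut, 1 / 12)
```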

<span id="exam 6.21"/> | |||

'''Example''' | |||

Let <math>X</math> be an exponentially distributed random variable with parameter <math>\lambda</math>. Then the density function of <math>X</math> is | |||

<math display="block"> | |||

f_X(x) = \lambda e^{-\lambda x}\ . | |||

</math> | |||

From the definition of expectation and integration by parts, we have | |||

<math display="block"> | |||

\begin{eqnarray*} E(X) & = & \int_0^\infty x f_X(x)\, dx \\ | |||

& = & \lambda \int_0^\infty x e^{-\lambda x}\, dx \\ | |||

& = & \biggl.-xe^{-\lambda x}\biggr|_0^\infty + \int_0^\infty e^{-\lambda x}\, | |||

dx \\ | |||

& = & 0 + \biggl.\frac {e^{-\lambda x}}{-\lambda}\biggr|_0^\infty = | |||

\frac 1\lambda\ . | |||

\end{eqnarray*} | |||

</math> | |||

Similarly, using [[#thm 6.11 |Theorem]] and [[#thm 6.14 |Theorem]], we have | |||

<math display="block"> | |||

\begin{eqnarray*} V(X) & = & \int_0^\infty x^2 f_X(x)\, dx - \frac 1{\lambda^2} \\ | |||

& = & \lambda \int_0^\infty x^2 e^{-\lambda x}\, dx - \frac 1{\lambda^2} \\ | |||

& = & \biggl.-x^2 e^{-\lambda x}\biggr|_0^\infty + 2\int_0^\infty x e^{-\lambda | |||

x}\, dx - \frac 1{\lambda^2} \\ | |||

& = & \biggl.-x^2 e^{-\lambda x}\biggr|_0^\infty - \biggl.\frac {2xe^{-\lambda | |||

x}}\lambda\biggr|_0^\infty - \biggl.\frac 2{\lambda^2} e^{-\lambda x}\biggr|_0^\infty | |||

- | |||

\frac 1{\lambda^2} = | |||

\frac 2{\lambda^2} - \frac 1{\lambda^2} = \frac 1{\lambda^2}\ . | |||

\end{eqnarray*} | |||

</math> | |||

In this case, both <math>E(X)</math> and <math>V(X)</math> are finite if <math>\lambda > 0</math>. | |||
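These two values can be compared with sample statistics. The sketch below draws exponential variates with Python's <code>random.expovariate</code>; the choice <math>\lambda = 2</math>, the sample size, and the seed are assumptions made for illustration.

```python
import random

def exponential_sample(lam, n, seed=0):
    """Draw n values from the exponential density lam * exp(-lam * x)."""
    rng = random.Random(seed)
    return [rng.expovariate(lam) for _ in range(n)]

lam = 2.0  # assumed rate for the illustration
sample = exponential_sample(lam, 200_000)

sample_mean = sum(sample) / len(sample)
sample_var = sum((x - sample_mean) ** 2 for x in sample) / len(sample)

print(sample_mean, 1 / lam)       # compare with E(X) = 1/lambda
print(sample_var, 1 / lam ** 2)   # compare with V(X) = 1/lambda^2
```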

<span id="exam 6.22"/> | |||

'''Example''' | |||

Let <math>Z</math> be a standard normal random variable with density function | |||

<math display="block"> | |||

f_Z(x) = \frac 1{\sqrt{2\pi}} e^{-x^2/2}\ . | |||

</math> | |||

Since this density function is symmetric with respect to the <math>y</math>-axis, it is easy to show that

<math display="block"> | |||

\int_{-\infty}^\infty x f_Z(x)\, dx | |||

</math> | |||

has value 0. The reader should recall, however, that the expectation is defined to be the above integral only if the integral

<math display="block"> | |||

\int_{-\infty}^\infty |x| f_Z(x)\, dx | |||

</math> | |||

is finite. This integral equals | |||

<math display="block"> | |||

2\int_0^\infty x f_Z(x)\, dx\ , | |||

</math> | |||

which one can easily show is finite. Thus, the | |||

expected value of <math>Z</math> is 0. | |||

To calculate the variance of <math>Z</math>, we begin by applying [[#thm 6.14 |Theorem]]: | |||

<math display="block"> | |||

V(Z) = \int_{-\infty}^{+\infty} x^2 f_Z(x)\, dx - \mu^2\ . | |||

</math> | |||

If we write <math>x^2</math> as | |||

<math>x\cdot x</math>, and integrate by parts, we obtain | |||

<math display="block"> | |||

\biggl.\frac 1{\sqrt{2\pi}} (-x e^{-x^2/2})\biggr|_{-\infty}^{+\infty} + \frac | |||

1{\sqrt{2\pi}} \int_{-\infty}^{+\infty} e^{-x^2/2}\, dx\ . | |||

</math> | |||

The first summand above can be shown to equal 0, since as <math>x \rightarrow \pm | |||

\infty</math>, <math>e^{-x^2/2}</math> gets small more quickly than <math>x</math> gets large. The second | |||

summand is just the standard normal density integrated over its domain, so the value | |||

of this summand is 1. Therefore, the variance of the standard normal density equals | |||

1. | |||

Now let <math>X</math> be a (not necessarily standard) normal random variable with | |||

parameters | |||

<math>\mu</math> and <math>\sigma</math>. Then the density function of <math>X</math> is | |||

<math display="block"> | |||

f_X(x) = \frac 1{\sqrt{2\pi}\sigma} e^{-(x - \mu)^2/2\sigma^2}\ . | |||

</math> | |||

We can write <math>X = \sigma Z + \mu</math>, where <math>Z</math> is a standard normal random | |||

variable. Since | |||

<math>E(Z) = 0</math> and <math>V(Z) = 1</math> by the calculation above, [[#thm 6.10 |Theorem]] and [[#thm 6.13 |Theorem]] imply that | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

E(X) &=& E(\sigma Z + \mu)\ =\ \mu\ , \\ | |||

V(X) &=& V(\sigma Z + \mu)\ =\ \sigma^2\ . | |||

\end{eqnarray*} | |||

</math> | |||

<span id="exam 6.23"/> | |||

'''Example''' | |||

Let <math>X</math> be a continuous random variable with the Cauchy density function | |||

<math display="block"> | |||

f_X(x) = \frac {a}{\pi} \frac {1}{a^2 + x^2}\ . | |||

</math> | |||

Then the expectation of <math>X</math> does not exist, because the integral | |||

<math display="block"> | |||

\frac a\pi \int_{-\infty}^{+\infty} \frac {|x|\,dx}{a^2 + x^2} | |||

</math> | |||

diverges. Thus the variance of <math>X</math> also fails to exist. Densities whose variance | |||

is not defined, like the Cauchy density, behave quite differently in a number of | |||

important respects from those whose variance is finite. We shall see one instance of | |||

this difference in [[guide:E5be6e0c81 |Continuous Random Variables]]. | |||
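The divergence can be made concrete by truncating the integral to <math>[-R, R]</math>. Integrating directly gives <math>\frac a\pi \log(1 + R^2/a^2)</math>, which grows without bound as <math>R \to \infty</math>. The sketch below compares a midpoint-rule value with this closed form; the function names, the choice <math>a = 1</math>, and the values of <math>R</math> are assumptions made for illustration.

```python
import math

def truncated_abs_moment(a, r, steps=200_000):
    """Midpoint-rule value of (a/pi) * integral of |x| / (a^2 + x^2) over [-r, r]."""
    h = r / steps
    half = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        half += x / (a * a + x * x)
    # The integrand is even, so the integral over [-r, r] is twice that over [0, r].
    return 2.0 * (a / math.pi) * half * h

def closed_form(a, r):
    """Exact value of the same truncated integral."""
    return (a / math.pi) * math.log(1.0 + (r / a) ** 2)

for r in (10.0, 100.0, 1000.0):
    print(r, truncated_abs_moment(1.0, r), closed_form(1.0, r))
```

Each tenfold increase in <math>R</math> adds roughly the same amount to the truncated integral, so the values never level off.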

===Independent Trials=== | |||

{{proofcard|Corollary|cor_6.1|If <math>X_1, X_2, \ldots, X_n</math> is an independent trials | |||

process of real-valued random variables, with <math>E(X_i) = \mu</math> and <math>V(X_i) = \sigma^2</math>, | |||

and if | |||

<math display="block"> | |||

\begin{eqnarray*} S_n & = & X_1 + X_2 +\cdots+ X_n\ , \\ A_n & = & \frac {S_n}n\ , | |||

\end{eqnarray*} | |||

</math> | |||

then | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

E(S_n) & = & n\mu\ ,\\ | |||

E(A_n) & = & \mu\ ,\\ | |||

V(S_n) & = & n\sigma^2\ ,\\ | |||

V(A_n) & = & \frac {\sigma^2} n\ . | |||

\end{eqnarray*} | |||

</math> | |||

It follows that if we set | |||

<math display="block"> | |||

S_n^* = \frac {S_n - n\mu}{\sqrt{n\sigma^2}}\ , | |||

</math> | |||

then | |||

<math display="block"> | |||

\begin{eqnarray*} | |||

E(S_n^*) & = & 0\ ,\\ | |||

V(S_n^*) & = & 1\ . | |||

\end{eqnarray*} | |||

</math> | |||

We say that <math>S_n^*</math> is a ''standardized version of'' <math>S_n</math> (see | |||

[[guide:C631488f9a#exer 6.2.13 |Exercise]] in [[guide:C631488f9a|Continuous Random Variables]]).|}} | |||
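The statements about <math>S_n^*</math> can be checked by simulation. The sketch below assumes the <math>X_i</math> are uniform on <math>[0,1]</math>, so that <math>\mu = 1/2</math> and <math>\sigma^2 = 1/12</math>; the function name, <math>n = 30</math>, the number of trials, and the seed are hypothetical choices.

```python
import math
import random

def standardized_sums(n, trials, seed=0):
    """Simulate S_n^* = (S_n - n*mu) / sqrt(n*sigma^2) for X_i uniform on [0, 1]."""
    rng = random.Random(seed)
    mu, var = 0.5, 1.0 / 12.0
    results = []
    for _ in range(trials):
        s_n = sum(rng.random() for _ in range(n))
        results.append((s_n - n * mu) / math.sqrt(n * var))
    return results

values = standardized_sums(n=30, trials=50_000)
mean_star = sum(values) / len(values)
var_star = sum((v - mean_star) ** 2 for v in values) / len(values)

print(mean_star, var_star)  # should be near 0 and 1 respectively
```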

===Queues=== | |||

<span id="exam 6.24"/> | |||

'''Example''' | |||

Let us consider again the queueing problem, that is, the problem of the customers waiting in a queue for service (see [[guide:D26a5cb8f7#exam 5.21|Example]]). We suppose again that customers join the queue in such a way that the time

between arrivals is an exponentially distributed random variable <math>X</math> with density | |||

function | |||

<math display="block"> | |||

f_X(t) = \lambda e^{-\lambda t}\ . | |||

</math> | |||

Then the expected value of the time between arrivals is simply <math>1/\lambda</math> (see | |||

[[#exam 6.21 |Example]]), as was stated in [[guide:D26a5cb8f7#exam 5.21 |Example]]. The reciprocal | |||

<math>\lambda</math> of this expected value is often referred to as the ''arrival rate.'' The | |||

''service time'' of an individual who is first in line is defined to be the amount | |||

of time that the person stays at the head of the line before leaving. We suppose that | |||

the customers are served in such a way that the service time is another exponentially | |||

distributed random variable | |||

<math>Y</math> with density function | |||

<math display="block"> | |||

f_Y(t) = \mu e^{-\mu t}\ .

</math>

Then the expected value of the service time is

<math display="block">

E(Y) = \int_0^\infty t f_Y(t)\, dt = \frac 1\mu\ .

</math> | |||

The reciprocal <math>\mu</math> of this expected value is often referred to as the ''service rate.''

We expect on grounds of our everyday experience with queues that if the service | |||

rate is greater than the arrival rate, then the average queue size will tend to | |||

stabilize, but if the service rate is less than the arrival rate, then the queue will | |||

tend to increase in length without limit (see [[guide:D26a5cb8f7#fig 5.17|Figure]]). The simulations in [[guide:D26a5cb8f7#exam 5.21 |Example]] tend to bear out our everyday experience. We can make this conclusion more precise if we introduce the ''traffic intensity'' as the product | |||

<math display="block"> | |||

\rho = ({\rm arrival\ rate})({\rm average\ service\ time}) = \frac \lambda\mu = | |||

\frac {1/\mu}{1/\lambda}\ . | |||

</math> | |||

The traffic intensity is also the ratio of the average service time to the average time between arrivals. If the traffic intensity is less than 1 the queue will | |||

perform reasonably, but if it is greater than 1 the queue will grow indefinitely large. In the critical case of <math>\rho = 1</math>, it can be shown that the queue will become large but there will always be times at which the queue is empty.<ref group="Notes" >L. Kleinrock, ''Queueing Systems,'' vol. 2 (New York: John Wiley and Sons, 1975).</ref> In the case that the traffic intensity is less than 1 we can consider the length of

the queue as a random variable <math>Z</math> whose expected value is finite, | |||

<math display="block"> | |||

E(Z) = N\ . | |||

</math> | |||

The time spent in the queue by a single customer can be considered as a random | |||

variable <math>W</math> whose expected value is finite, | |||

<math display="block"> | |||

E(W) = T\ . | |||

</math> | |||

Then we can argue that, when a customer joins the queue, he expects to find <math>N</math> | |||

people ahead of him, and when he leaves the queue, he expects to find <math>\lambda T</math> | |||

people behind him. Since, in equilibrium, these should be the same, we would expect | |||

to find that | |||

<math display="block"> | |||

N = \lambda T\ . | |||

</math> | |||

This last relationship is called ''Little's law for queues.''<ref group="Notes" >ibid., p. 17.</ref> We will not prove it here. A proof may be found in | |||

Ross.<ref group="Notes" >S. M. Ross, ''Applied Probability Models with Optimization | |||

Applications,'' (San Francisco: Holden-Day, 1970)</ref> Note that in this case we are counting the | |||

waiting time of all customers, even those that do not have to wait at all. In our simulation in [[guide:E05b0a84f3|Continuous Conditional Probability]], we did not consider these customers. | |||

If we knew the expected queue length then we could use Little's law to obtain | |||

the expected waiting time, since | |||

<math display="block"> | |||

T = \frac N\lambda\ . | |||

</math> | |||

The queue length is a random variable with a discrete distribution. We can estimate | |||

this distribution by simulation, keeping track of the queue lengths at the times at | |||

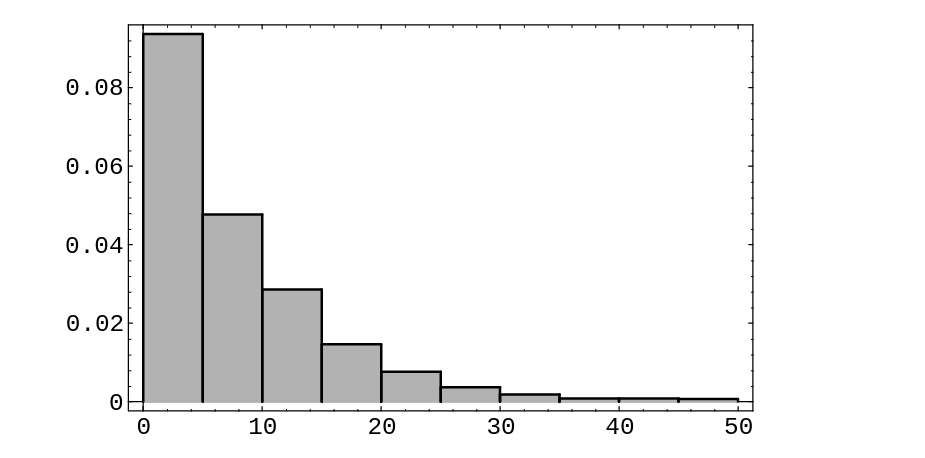

which a customer arrives. We show the result of this simulation (using the program '''Queue''') in [[#fig 6.5|Figure]]. | |||

<div id="fig 6.5" class="d-flex justify-content-center"> | |||

[[File:guide_e6d15_PSfig6-5.png | 400px | thumb | Distribution of queue lengths. ]] | |||

</div> | |||

We note that the distribution appears to be a geometric distribution. In the study | |||

of queueing theory it is shown that the distribution for the queue length in equilibrium | |||

is indeed a geometric distribution with | |||

<math display="block"> | |||

s_j = (1 - \rho) \rho^j \qquad {\rm for}\ j = 0, 1, 2, \dots\ , | |||

</math> | |||

if <math>\rho < 1</math>. | |||

The expected value of a random variable with this distribution is | |||

<math display="block"> | |||

N = \frac \rho{(1 - \rho)} | |||

</math> | |||

(see [[guide:E4fd10ce73#exam 6.8 |Example]]). Thus by Little's result the expected waiting time is | |||

<math display="block"> | |||

T = \frac \rho{\lambda(1 - \rho)} = \frac 1{\mu - \lambda}\ , | |||

</math> | |||

where <math>\mu</math> is the service rate, <math>\lambda</math> the arrival rate, and <math>\rho</math> the | |||

traffic intensity. | |||

In our simulation, the arrival rate is 1 and the service rate is 1.1. Thus, the | |||

traffic intensity is <math>1/1.1 = 10/11</math>, the expected queue size is | |||

<math display="block"> | |||

\frac {10/11}{(1 - 10/11)} = 10\ , | |||

</math> | |||

and the expected waiting time is | |||

<math display="block"> | |||

\frac 1{1.1 - 1} = 10\ . | |||

</math> | |||

In our simulation the average queue size was 8.19 and the average waiting time | |||

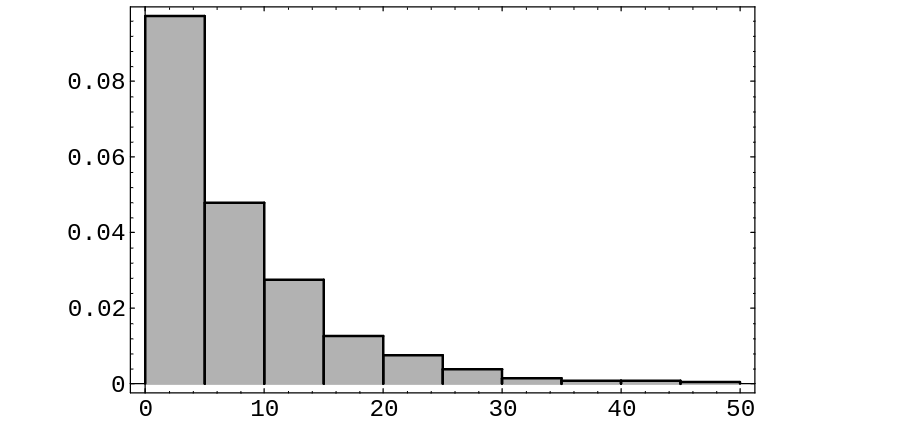

was 7.37. In [[#PSfig6-5-5|Figure]], we show the histogram for the waiting times.

This histogram suggests that the density for the waiting times is exponential with | |||

parameter | |||

<math>\mu - \lambda</math>, and this is the case. | |||

<div id="PSfig6-5-5" class="d-flex justify-content-center"> | |||

[[File:guide_e6d15_PSfig6-5-5.png | 400px | thumb | Distribution of queue waiting times. ]] | |||

</div> | |||
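A simulation along these lines can be sketched in a few lines of Python. This is not the program '''Queue''' referred to above, only an illustration under stated assumptions: a single server, first-come first-served order, the customer being served counted as part of the queue, and time measured from arrival to departure. These conventions are chosen so that the results can be compared with <math>N = \rho/(1-\rho)</math> and <math>T = 1/(\mu - \lambda)</math>. The function name, the number of customers, and the seed are hypothetical.

```python
import random
from collections import deque

def simulate_queue(lam, mu, customers, seed=0):
    """Single-server queue with exponential interarrival (rate lam) and service (rate mu) times.

    Returns (average number of customers found by an arrival, average time from arrival to departure).
    """
    rng = random.Random(seed)
    clock = 0.0            # arrival time of the current customer
    last_departure = 0.0   # departure time of the previous customer
    present = deque()      # departure times of customers still in the system
    total_found = 0
    total_time = 0.0
    for _ in range(customers):
        clock += rng.expovariate(lam)
        # Drop customers who left before this arrival.
        while present and present[0] <= clock:
            present.popleft()
        total_found += len(present)
        # Service starts when the server is free and the customer has arrived.
        start = max(clock, last_departure)
        last_departure = start + rng.expovariate(mu)
        present.append(last_departure)
        total_time += last_departure - clock
    return total_found / customers, total_time / customers

# Rates used in the text: arrival rate 1, service rate 1.1 (theory gives N = 10, T = 10).
avg_found, avg_time = simulate_queue(1.0, 1.1, 200_000)
print(avg_found, avg_time)
```

With traffic intensity this close to 1 the estimates fluctuate considerably from run to run, which is consistent with the simulated averages reported above falling somewhat below 10.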

==General references== | |||

{{cite web |url=https://math.dartmouth.edu/~prob/prob/prob.pdf |title=Grinstead and Snell’s Introduction to Probability |last=Doyle |first=Peter G.|date=2006 |access-date=June 6, 2024}} | |||

==Notes== | |||

{{Reflist|group=Notes}} | |||

Latest revision as of 02:32, 11 June 2024

In this section we consider the properties of the expected value and the variance of a continuous random variable. These quantities are defined just as for discrete random variables and share the same properties.

Expected Value

Let [math]X[/math] be a real-valued random variable with density function [math]f(x)[/math]. The expected value [math]\mu = E(X)[/math] is defined by

The reader should compare this definition with the corresponding one for discrete random variables in Expected Value. Intuitively, we can interpret [math]E(X)[/math], as we did in the previous sections, as the value that we should expect to obtain if we perform a large number of independent experiments and average the resulting values of [math]X[/math].

We can summarize the properties of [math]E(X)[/math] as follows (cf. Theorem).

If [math]X[/math] and [math]Y[/math] are real-valued random variables and [math]c[/math] is any constant, then

The proof is very similar to the proof of Theorem, and we omit it.

More generally, if [math]X_1[/math], [math]X_2[/math], \dots, [math]X_n[/math] are [math]n[/math] real-valued random

variables, and

[math]c_1[/math], [math]c_2[/math], \dots, [math]c_n[/math] are [math]n[/math] constants, then

Example Let [math]X[/math] be uniformly distributed on the interval [math][0, 1][/math]. Then

It follows that if we choose a large number [math]N[/math] of random numbers from [math][0,1][/math] and take the average, then we can expect that this average should be close to the expected value of 1/2.

Example Let [math]Z = (x, y)[/math] denote a point chosen uniformly and randomly from the unit disk, as in the dart game in randomly from the unit disk, as in the dart game in Example and let [math]X = (x^2 + y^2)^{1/2}[/math] be the distance from [math]Z[/math] to the center of the disk. The density function of [math]X[/math] can easily be shown to equal [math]f(x) = 2x[/math], so by the definition of expected value,

Example In the example of the couple meeting at the Inn (Example), each person arrives at a time which is uniformly distributed between 5:00 and 6:00 PM. The random variable [math]Z[/math] under consideration is the length of time the first person has to wait until the second one arrives. It was shown that

for [math]0 \le z \le 1[/math]. Hence,

Expectation of a Function of a Random Variable

Suppose that [math]X[/math] is a real-valued random variable and [math]\phi(x)[/math] is a continuous function from R to R. The following theorem is the continuous analogue of Theorem.

If [math]X[/math] is a real-valued random variable and if [math]\phi :[/math] R [math]\to\ [/math] R is a continuous real-valued function with domain [math][a,b][/math], then

For a proof of this theorem, see Ross.[Notes 1]

Expectation of the Product of Two Random Variables

In general, it is not true that [math]E(XY) = E(X)E(Y)[/math], since the integral of a product is not the product of integrals. But if [math]X[/math] and [math]Y[/math] are independent, then the expectations multiply.

Let [math]X[/math] and [math]Y[/math] be independent real-valued continuous random variables with finite expected values. Then we have

We will prove this only in the case that the ranges of [math]X[/math] and [math]Y[/math] are contained in the intervals [math][a, b][/math] and [math][c, d][/math], respectively. Let the density functions of [math]X[/math] and [math]Y[/math] be denoted by [math]f_X(x)[/math] and [math]f_Y(y)[/math], respectively. Since [math]X[/math] and [math]Y[/math] are independent, the joint density function of [math]X[/math] and [math]Y[/math] is the product of the individual density functions. Hence

The proof in the general case involves using sequences of bounded random variables that approach [math]X[/math]

and [math]Y[/math], and is somewhat technical, so we will omit it.

In the same way, one can show that if [math]X_1[/math], [math]X_2[/math], \dots, [math]X_n[/math] are [math]n[/math] mutually independent real-valued random variables, then

Example unit square. Let [math]A = X^2[/math] and [math]B = Y^2[/math]. Then Theorem implies that [math]A[/math] and [math]B[/math] are independent. Using Theorem, the expectations of [math]A[/math] and [math]B[/math] are easy to calculate:

Using Theorem, the expectation of [math]AB[/math] is just the product of [math]E(A)[/math] and [math]E(B)[/math], or 1/9. The usefulness of this theorem is demonstrated by noting that it is quite a bit more difficult to calculate [math]E(AB)[/math] from the definition of expectation. One finds that the density function of [math]AB[/math] is

so

Example Again let [math]Z = (X, Y)[/math] be a point chosen at random in the unit square, and let [math]W = X + Y[/math]. Then [math]Y[/math] and [math]W[/math] are not independent, and we have

We turn now to the variance.

Variance

Let [math]X[/math] be a real-valued random variable with density function [math]f(x)[/math]. The variance [math]\sigma^2 = V(X)[/math] is defined by

The next result follows easily from Theorem. There is another way to calculate the variance of a continuous random variable, which is usually slightly easier. It is given in Theorem.

If [math]X[/math] is a real-valued random variable with [math]E(X) = \mu[/math], then

The properties listed in the next three theorems are all proved in exactly the same way that the corresponding theorems for discrete random variables were proved in Variance of Discrete Random Variables.

If [math]X[/math] is a real-valued random variable defined on [math]\Omega[/math] and [math]c[/math] is any constant, then (cf. Theorem)

If [math]X[/math] is a real-valued random variable with [math]E(X) = \mu[/math], then (cf. Theorem)

If [math]X[/math] and [math]Y[/math] are independent real-valued random variables on [math]\Omega[/math], then (cf. Theorem)

Example (continuation of Example) If [math]X[/math] is uniformly distributed on [math][0, 1][/math], then, using Theorem, we have

Example Let [math]X[/math] be an exponentially distributed random variable with parameter [math]\lambda[/math]. Then the density function of [math]X[/math] is

From the definition of expectation and integration by parts, we have

Similarly, using Theorem and Theorem, we have

In this case, both [math]E(X)[/math] and [math]V(X)[/math] are finite if [math]\lambda \gt 0[/math].

Example Let [math]Z[/math] be a standard normal random variable with density function

Since this density function is symmetric with respect to the [math]y[/math]-axis, then it is easy to show that

has value 0. The reader should recall however, that the expectation is defined to be the above integral only if the integral

is finite. This integral equals

which one can easily show is finite. Thus, the expected value of [math]Z[/math] is 0.

To calculate the variance of [math]Z[/math], we begin by applying Theorem:

If we write [math]x^2[/math] as [math]x\cdot x[/math], and integrate by parts, we obtain

The first summand above can be shown to equal 0, since as [math]x \rightarrow \pm \infty[/math], [math]e^{-x^2/2}[/math] gets small more quickly than [math]x[/math] gets large. The second summand is just the standard normal density integrated over its domain, so the value of this summand is 1. Therefore, the variance of the standard normal density equals 1.

Now let [math]X[/math] be a (not necessarily standard) normal random variable with

parameters

[math]\mu[/math] and [math]\sigma[/math]. Then the density function of [math]X[/math] is

We can write [math]X = \sigma Z + \mu[/math], where [math]Z[/math] is a standard normal random variable. Since [math]E(Z) = 0[/math] and [math]V(Z) = 1[/math] by the calculation above, Theorem and Theorem imply that

Example Let [math]X[/math] be a continuous random variable with the Cauchy density function

Then the expectation of [math]X[/math] does not exist, because the integral

diverges. Thus the variance of [math]X[/math] also fails to exist. Densities whose variance is not defined, like the Cauchy density, behave quite differently in a number of important respects from those whose variance is finite. We shall see one instance of this difference in Continuous Random Variables.

Independent Trials

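If [math]X_1, X_2, \ldots, X_n[/math] is an independent trials process of real-valued random variables, with [math]E(X_i) = \mu[/math] and [math]V(X_i) = \sigma^2[/math], and if

[math]S_n = X_1 + X_2 + \cdots + X_n\ , \qquad A_n = \frac{S_n}{n}\ ,[/math]

then

[math]E(S_n) = n\mu\ , \qquad E(A_n) = \mu\ , \qquad V(S_n) = n\sigma^2\ , \qquad V(A_n) = \frac{\sigma^2}{n}\ .[/math]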
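For an independent trials process, the average [math]A_n = (X_1 + \cdots + X_n)/n[/math] has expected value [math]\mu[/math] and variance [math]\sigma^2/n[/math]. The following sketch illustrates this by simulation, under the assumption (made only for this example) that the [math]X_i[/math] are exponential with parameter [math]\lambda = 2[/math], so that [math]\mu = 1/\lambda[/math] and [math]\sigma^2 = 1/\lambda^2[/math]. The function name, seed, and sample sizes are arbitrary choices.

```python
import random

def simulate_averages(n=25, lam=2.0, reps=20_000, seed=0):
    """Draw `reps` independent copies of A_n = (X_1 + ... + X_n) / n, with the
    X_i exponential with parameter lam, and return the sample mean and the
    sample variance of those copies."""
    rng = random.Random(seed)
    averages = [
        sum(rng.expovariate(lam) for _ in range(n)) / n for _ in range(reps)
    ]
    mean_A = sum(averages) / reps
    var_A = sum((a - mean_A) ** 2 for a in averages) / reps
    return mean_A, var_A

n, lam = 25, 2.0
mean_A, var_A = simulate_averages(n, lam)
mu, sigma2 = 1.0 / lam, 1.0 / lam ** 2

print("estimated E(A_n):", mean_A, " theory:", mu)
print("estimated V(A_n):", var_A, " theory:", sigma2 / n)
```

The estimates are random, so they only approximate the theoretical values; increasing `reps` tightens the agreement.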

Queues

Example Let us consider again the queueing problem, that is, the problem of the customers waiting in a queue for service (see Example). We suppose again that customers join the queue in such a way that the time between arrivals is an exponentially distributed random variable [math]X[/math] with density function

[math]f_X(t) = \lambda e^{-\lambda t}\ .[/math]

Then the expected value of the time between arrivals is simply [math]1/\lambda[/math] (see Example), as was stated in Example. The reciprocal [math]\lambda[/math] of this expected value is often referred to as the arrival rate. The service time of an individual who is first in line is defined to be the amount of time that the person stays at the head of the line before leaving. We suppose that the customers are served in such a way that the service time is another exponentially distributed random variable [math]Y[/math] with density function

[math]f_Y(t) = \mu e^{-\mu t}\ .[/math]

Then the expected value of the service time is

[math]E(Y) = \int_0^{\infty} t f_Y(t)\,dt = \frac{1}{\mu}\ .[/math]

The reciprocal [math]\mu[/math] of this expected value is often referred to as the service rate.

We expect on grounds of our everyday experience with queues that if the service rate is greater than the arrival rate, then the average queue size will tend to stabilize, but if the service rate is less than the arrival rate, then the queue will tend to increase in length without limit (see Figure). The simulations in Example tend to bear out our everyday experience. We can make this conclusion more precise if we introduce the traffic intensity as the product

[math]\rho = (\mbox{arrival rate})(\mbox{average service time}) = \lambda \cdot \frac{1}{\mu} = \frac{\lambda}{\mu}\ .[/math]

The traffic intensity is also the ratio of the average service time to the average time between arrivals. If the traffic intensity is less than 1 the queue will perform reasonably, but if it is greater than 1 the queue will grow indefinitely large. In the critical case of [math]\rho = 1[/math], it can be shown that the queue will become large but there will always be times at which the queue is empty.[Notes 2] In the case that the traffic intensity is less than 1 we can consider the length of the queue as a random variable [math]Z[/math] whose expected value is finite,

[math]E(Z) = N\ .[/math]

The time spent in the queue by a single customer can be considered as a random variable [math]W[/math] whose expected value is finite,

[math]E(W) = T\ .[/math]

Then we can argue that, when a customer joins the queue, he expects to find [math]N[/math] people ahead of him, and when he leaves the queue, he expects to find [math]\lambda T[/math] people behind him. Since, in equilibrium, these should be the same, we would expect to find that

[math]N = \lambda T\ .[/math]

This last relationship is called Little's law for queues.[Notes 3] We will not prove it here. A proof may be found in Ross.[Notes 4] Note that in this case we are counting the waiting time of all customers, even those that do not have to wait at all. In our simulation in Continuous Conditional Probability, we did not consider these customers.

If we knew the expected queue length then we could use Little's law to obtain the expected waiting time, since

[math]T = \frac{N}{\lambda}\ .[/math]

The queue length is a random variable with a discrete distribution. We can estimate this distribution by simulation, keeping track of the queue lengths at the times at which a customer arrives. We show the result of this simulation (using the program Queue) in Figure.

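We note that the distribution appears to be a geometric distribution. In the study of queueing theory it is shown that the distribution for the queue length in equilibrium is indeed a geometric distribution with

[math]s_j = P(Z = j) = (1 - \rho)\rho^j \qquad \mbox{for}\ j = 0, 1, 2, \ldots\ ,[/math]

if [math]\rho \lt 1[/math]. The expected value of a random variable with this distribution is

[math]N = \frac{\rho}{1 - \rho}[/math]

(see Example). Thus by Little's result the expected waiting time is

[math]T = \frac{N}{\lambda} = \frac{\rho}{\lambda(1 - \rho)} = \frac{1}{\mu - \lambda}\ ,[/math]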

where [math]\mu[/math] is the service rate, [math]\lambda[/math] the arrival rate, and [math]\rho[/math] the traffic intensity.

In our simulation, the arrival rate is 1 and the service rate is 1.1. Thus, the traffic intensity is [math]1/1.1 = 10/11[/math], the expected queue size is

[math]\frac{10/11}{1 - 10/11} = 10\ ,[/math]

and the expected waiting time is

[math]\frac{1}{1.1 - 1} = 10\ .[/math]

In our simulation the average queue size was 8.19 and the average waiting time was 7.37. In Figure, we show the histogram for the waiting times. This histogram suggests that the density for the waiting times is exponential with parameter [math]\mu - \lambda[/math], and this is the case.
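The program Queue is not reproduced here. As a rough stand-in, the following sketch simulates a single-server, first-come-first-served queue with exponential interarrival and service times and compares the averages with the equilibrium values [math]\rho/(1-\rho)[/math] and [math]1/(\mu - \lambda)[/math]. It is an illustration under stated assumptions, not the original program: the function names, the seed, and the number of customers are arbitrary, the queue length is recorded at arrival times and includes the customer being served, and the time recorded for each customer runs from arrival to departure.

```python
import random
from collections import deque

def theory(lam, mu):
    """Equilibrium expected queue length N and expected time in queue T."""
    rho = lam / mu
    return rho / (1.0 - rho), 1.0 / (mu - lam)

def simulate_queue(lam=1.0, mu=1.1, customers=300_000, seed=1):
    """Return (average queue length seen by arriving customers,
    average time from arrival to departure, observed arrival rate)."""
    rng = random.Random(seed)
    in_system = deque()      # departure times of customers still present
    t = 0.0                  # current arrival time
    last_departure = 0.0
    total_seen = 0
    total_time = 0.0
    for _ in range(customers):
        t += rng.expovariate(lam)                 # next arrival
        while in_system and in_system[0] <= t:    # drop those already gone
            in_system.popleft()
        total_seen += len(in_system)              # people ahead on arrival
        start = max(t, last_departure)            # service begins when free
        last_departure = start + rng.expovariate(mu)
        in_system.append(last_departure)
        total_time += last_departure - t
    return total_seen / customers, total_time / customers, customers / t

N, T = theory(1.0, 1.1)
avg_n, avg_t, rate = simulate_queue()
print("theory:     N =", N, " T =", T)
print("simulation: N ~", avg_n, " T ~", avg_t)
print("Little's law check: lambda * T ~", rate * avg_t)
```

Because the traffic intensity is close to 1, the averages converge slowly, and individual runs can differ noticeably from the equilibrium values. The relation [math]N = \lambda T[/math] tends to hold much more closely within a single run, since both sides are computed from the same sample path.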

General references

Doyle, Peter G. (2006). "Grinstead and Snell's Introduction to Probability" (PDF). Retrieved June 6, 2024.